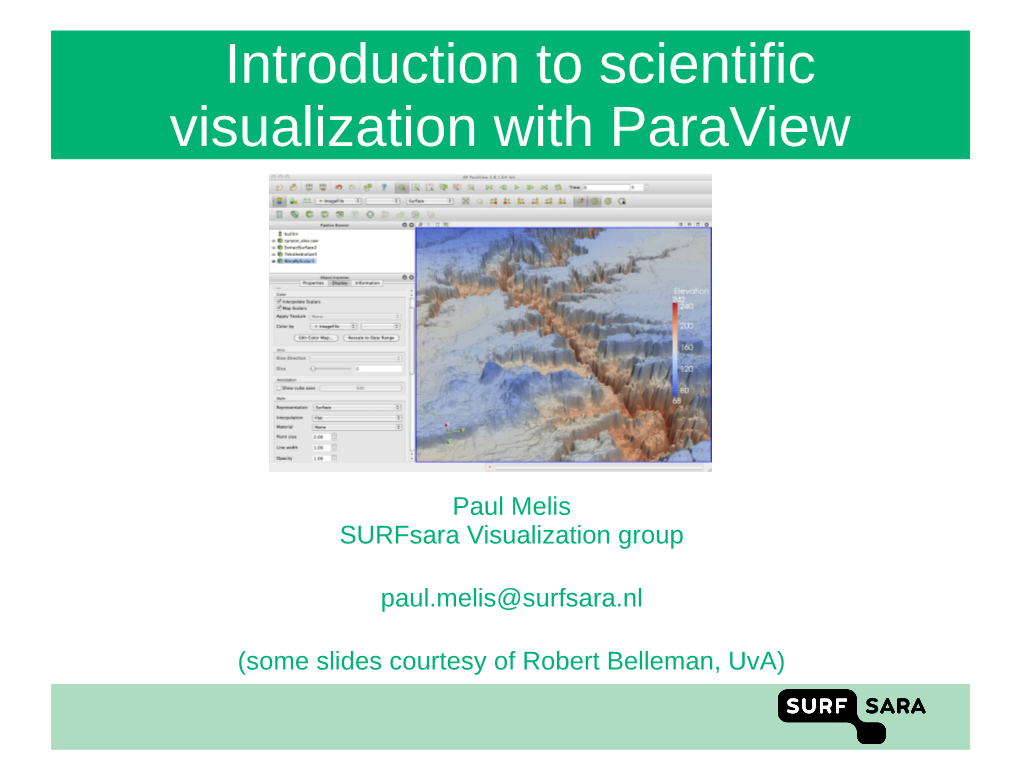

Introduction to Scientific Visualization with ParaView

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

Bio 219 Biomedical Imaging and Scientific Visualization

Bio 219 Biomedical Imaging and Scientific Visualization Ch. Zollikofer M. Ponce de León Organization • OLAT: – course scripts (pdf) • website: www.aim.uzh.ch/morpho/wiki/Teaching/SciVis – course scripts (pdf); passwd: scivisdocs – link collection (tutorials, applets, software/data downloads, ...) • book (background information): Zollikofer & Ponce de León, Virtual Reconstruction. A Primer in Computer-assisted Paleontology and Biomedicine (NY: Wiley, 2005) CHF 55 • final exam – Monday, 26. May 2014, 1015-1100 BioMedImg & SciVis • at the intersection between – theory/practice of image data acquisition – computer graphics – medical diagnostics – computer-assisted paleoanthropology Grotte Chauvet, France Biomedical Imaging • acquisition • processing • analysis • visualization ... of biomedical data Scientific Visualization visual... • representation (cf. data presentation) • exploration • analysis ...of scientific data aims of this course • provide theoretical (and practical) foundations of – image data acquisition, storage, retrieval – image data processing and analysis – image data visualization/rendering • establish links between – real-life vision and computer vision – computer science and biomedical sciences – theory and practice of handling biomedical data contents • real-life vision • computers and data representation • 2D image data acquisition • 3D image data acquisition • biomedical image processing in 2D and 3D • biomedical image data visualization and interaction biomedical data types of data data flow humans and computers facts and data • facts exist by definition (±independent of the observer): – females and males – humans and Neanderthals – dogs and wolves • data are generated through observation: – number of living human species: 1 – proportion of females to males at birth: 49/51 – nr. of wolves per square km biomedical data: general • physical/physiological data about the human body: – density – temperature – pressure – mass – chemical composition biomedical data: space and time • spatial – 1D – 2D – 3D • temporal • spatiotemporal (4D) .. -

arXiv:1911.09220v2 [cs.MS] 13 Jul 2020

MFEM: A MODULAR FINITE ELEMENT METHODS LIBRARY ROBERT ANDERSON, JULIAN ANDREJ, ANDREW BARKER, JAMIE BRAMWELL, JEAN-SYLVAIN CAMIER, JAKUB CERVENY, VESELIN DOBREV, YOHANN DUDOUIT, AARON FISHER, TZANIO KOLEV, WILL PAZNER, MARK STOWELL, VLADIMIR TOMOV Lawrence Livermore National Laboratory, Livermore, USA IDO AKKERMAN Delft University of Technology, Netherlands JOHANN DAHM IBM Research – Almaden, Almaden, USA DAVID MEDINA Occalytics, LLC, Houston, USA STEFANO ZAMPINI King Abdullah University of Science and Technology, Thuwal, Saudi Arabia Abstract. MFEM is an open-source, lightweight, flexible and scalable C++ library for modular finite element methods that features arbitrary high-order finite element meshes and spaces, support for a wide variety of discretization approaches and emphasis on usability, portability, and high-performance computing efficiency. MFEM's goal is to provide application scientists with access to cutting-edge algorithms for high-order finite element meshing, discretizations and linear solvers, while enabling researchers to quickly and easily develop and test new algorithms in very general, fully unstructured, high-order, parallel and GPU-accelerated settings. In this paper we describe the underlying algorithms and finite element abstractions provided by MFEM, discuss the software implementation, and illustrate various applications of the library. 1. Introduction The Finite Element Method (FEM) is a powerful discretization technique that uses general unstructured grids to approximate the solutions of many partial differential equations (PDEs). It has been exhaustively studied, both theoretically and in practice, in the past several decades [1, 2, 3, 4, 5, 6, 7, 8]. 
MFEM is an open-source, lightweight, modular and scalable software library for finite elements, featuring arbitrary high-order finite element meshes and spaces, support for a wide variety of discretization approaches and emphasis on usability, portability, and high-performance computing (HPC) efficiency [9]. -

Scientific Visualization

GeoVisualization “Geovisualization integrates approaches from visualization in scientific computing (ViSC), cartography, image analysis, information systems (GISystems) to provide theory, methods, and tools for visual exploration, analysis, synthesis, and presentation of geospatial data” -- International cartographic association commission (2001) Point/line/surface, 3D, spatial-temporal 1 Point: gas stations on Google map Location information only. 2 Point: geo-temporal data 3 Point: vectors 4 Point: bricks & colors 5 Line: Google map 6 Line: Facebook (dense edges) 7 Line: bundling technique Population migration: Airline routes: 8 Region: contours (boundaries only) 9 Region: color (filled regions) 10 Health Data (Disease Distributions) 11 Cartogram: scale and deform regions to reflect the size of the attributes 12 Multi-relationship: line/bubble set Multiple relationships Avoid re-layout 13 3D Map (2.5 Dimension) 14 3D Map: issues Realism vs. Abstraction Distraction Occlusion Applications: – Travel guide – City planning/simulation 15 3D Map: occlusion & landmark 16 Spatial-temporal data Time stamps/labels on 2D map Space-time cube Color curves Attribute changes 17 Time-trajectory (Tornado path) 18 Space-Time Cube 19 Trajectory Wall (C. Tominsk, et al) 20 Color Curves Using curves in color space to represent time. 21 Using Color Curves to Draw Taxis Trajectories on 2D Maps 22 Attribute changes Robbery geo-temporal data 23 Attribute changes (Health Data) 24 Interactive Techniques in InfoVis Select: mark something as interesting -

Course Title: Principles of Scientific Visualization Course Number: 9400110 Course Credit: 1 Course Description: This Course

Course Title: Principles of Scientific Visualization Course Number: 9400110 Course Credit: 1 Course Description: This course provides students with instruction in the evolution and underlying principles of scientific visualization, including two-dimensional representation of scientific and other forms of data. Included in the content is the use of color and other graphical elements such as vector and bitmap images in different presentation techniques. Students will also learn about the use of charts and graphs in representing data and the software tools used to produce them. The ultimate output of this course is a design portfolio created by the student from a scenario. The portfolio should include a narrative description of the scenario, the approach to data collection, resulting charts and graphs, and an interpretation of each chart/graph. Research references should be cited appropriately. Consideration should be given to having students produce the portfolio using presentation software. CTE Standards and Benchmarks NGSSS-Sci 28.0 Describe scientific & technical visualization. – The student will be able to: 28.01 Define scientific and technical visualization and provide examples of each. 28.02 Explain the importance of scientific visualization and its applicability to various industries. 28.03 Provide examples of 2-D and 3D rendered visualizations. 29.0 Describe the historical significance of scientific & technical visualization. – The student will be able to: 29.01 Describe the evolution of drawings from cave through perspective drawings to photography, television, and the Internet. 29.02 Define and describe the elements contained on various types of maps (e.g., road, topographic, aeronautical, weather, concept, and gene). SC.912.L.14.4; SC.912.E.5.8; 30.0 Describe the technological advancements of scientific & technical visualization. -

Elmer Finite Element Software for Multiphysical Problems

Elmer finite element software for multiphysical problems Peter Råback ElmerTeam CSC – IT Center for Science 10/04/2014 PRACE Spring School, 15-17 April, 2014 Elmer track in PRACE spring school • Wed 16th, 10.45-12.15=1.5 h Introduction to Elmer finite element software • Wed 16th, 13.30-15.00=1.5 h Hands-on session using ElmerGUI • Wed 16th, 15.15-16.45=1.5 h OpenLab • Thu 17th, 10.45-12.15=1.5 h Advanced use of Elmer • Thu 17th, 13.30-14.30=1 h Hands-on session on advanced features and parallel computation • Thu 17th, 14.45-15.45=1 h Hands-on & improvised Elmer finite element software for multiphysical problems Figures by Esko Järvinen, Mikko Lyly, Peter Råback, Timo Veijola (TKK) & Thomas Zwinger Short history of Elmer 1995 Elmer development was started as part of a national CFD program – Collaboration of CSC, TKK, VTT, JyU, and Okmetic Ltd. 2000 After the initial phase the development was driven by a number of application projects – MEMS, Microfluidics, Acoustics, Crystal Growth, Hemodynamics, Glaciology, … 2005 Elmer published under GPL-license 2007 Elmer version control put under sourceforge.net – Resulted in a rapid increase in the number of users 2010 Elmer became one of the central codes in PRACE project 2012 ElmerSolver library published under LGPL – More freedom for serious developers Elmer in numbers ~350,000 lines of code (~2/3 in Fortran, 1/3 in C/C++) ~500 code commits yearly ~280 consistency tests in 3/2014 ~730 pages of documentation in LaTeX ~60 people participated in Elmer courses in 2012 9 Elmer related visits -

Overview of Elmer

Overview of Elmer Peter Råback and Mika Malinen CSC – IT Center for Science October 19, 2012 Copyright This document is licensed under the Creative Commons Attribution-No Derivative Works 3.0 License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nd/3.0/. 1 Introduction This exposition gives an overview of the Elmer software package. General information on the capabilities of the software, its usage, and how the material of the package is organized is presented. More detailed information is given in the other Elmer manuals, the scopes of which are described in this document. What is Elmer Elmer is a finite element software package for the solution of partial differential equations. Elmer can deal with a great number of different equations, which may be coupled in a generic manner, making Elmer a versatile tool for multiphysical simulations. As open source software, Elmer also gives the user the means to modify the existing solution procedures and to develop new solvers for equations of interest to the user. History of Elmer The development of Elmer was started in 1995 as part of a national CFD technology program funded by the Finnish funding agency for technology and innovation, Tekes. The original development consortium included partners from CSC – IT Center for Science (formerly known as CSC – Scientific Computing), Helsinki University of Technology TKK, VTT Technical Research Centre of Finland, University of Jyväskylä, and Okmetic Ltd. CSC is a governmental non-profit company fully owned by the Ministry of Education. After the five-year initial project ended, the development has been continued by CSC in different application fields. -

Scientific Visualization for Secondary and Post–Secondary Schools Aaron C

Scientific Visualization for Secondary and Post–Secondary Schools Aaron C. Clark & Eric N. Wiebe North Carolina State University’s Department of Math, Science, and Technology Education along with Wake Technical Community College’s Engineering Technology Division and North Carolina’s Department of Public Instruction (Vocational Education Division), sought ways in which to build a strong secondary program in scientific and technical visualization, focusing on the use of sophisticated graphics tools to study mathematics and the sciences. Momentum for this high school-level scientific visualization curriculum developed out of a revision of the complete high school technical graphics curriculum used throughout the state (North Carolina Public Schools, 1997). It became clear that a scientific visualization track could both broaden the scope of the current technical graphics curriculum and attract a new group of students to technical graphics. [...]marily brought about by advances in technology have created new opportunities to use similar tools to promote and enhance the study of the physical, biological, and mathematical sciences. These new courses are designed to articulate into scientific visualization and technical graphics curricula at both two-year and four-year colleges and universities through the Tech-Prep initiative. Articulation between schools allows for a broader range of students to have a visual science course count for admission into a college or university. The courses have the potential to replace the fundamental drafting course required for most degrees in engineering and technology. The proposed student populations taking the scientific visualization courses are traditional vocational track students and pre-college students who plan on -

NPR in Scientific Visualization

NPR Techniques for Scientific Visualization Victoria Interrante University of Minnesota 1 Introduction Visualization research is concerned with the design, implementation and evaluation of novel methods for effectively communicating information through images. For example: How do we determine how to portray a complicated set of data so that its essential information can be easily and accurately perceived and understood? How can we measure the success of our efforts? Where should we look for insight into the science behind the art of effective visual representation? For many years, photorealism was the gold standard, and the goal in visualization was to achieve renderings of scientific data that were as nearly indistinguishable as possible from a photograph. However, in recent years, there has been an upsurge of interest in using non-photorealistic rendering (NPR) techniques in scientific visualization. What does NPR offer to visualization applications? What are some of the important research issues in developing NPR techniques for data visualization? What are the recent advances in this area? My presentation in this half-day course will attempt to survey these topics, beginning with a discussion of the motivation for using NPR in scientific visualization and continuing through an overview of recent research in the development and assessment of NPR techniques for visualization applications. 2 Motivation and Background Historical examples of the use of ‘non-photorealistic rendering’ techniques in scientific visualization can be found in the hand-drawn illustrations from scores of textbooks across multiple fields in the sciences [Loe64][Law71][Zwe61][Rid38]. The universal goal in such illustrations was to clarify the subject by emphasizing its most important or significant features, while de-emphasizing extraneous details [Hod89]. -

Scientific Visualization

0 Introduction CEG790 Introduction to Scientific Visualization Department of Computer Science and Engineering Outline • Introduction • The visualization pipeline • Basic data representations • Fundamental algorithms • Advanced computer graphics • Advanced data representations • Advanced algorithms Literature • Will Schroeder, Ken Martin, Bill Lorensen, The Visualization Toolkit - An Object-Oriented Approach To 3D Graphics, Kitware, 2004 • Charles D. Hansen, Chris Johnson, Visualization Handbook, First Edition, Academic Press, 2004 • Scientific visualization: overviews, methodologies, and techniques, Gregory M. Nielson, Hans Hagen, Heinrich Müller, Los Alamitos, Calif., IEEE Computer Society Press, 1997 • Woo, Neider, Davis, Shreiner, OpenGL Programming Guide, Addison Wesley, 2000, http://www.opengl.org/documentation/red_book_1.0 • http://www-static.cc.gatech.edu/scivis/tutorial/linked/classification.html What is Scientific Visualization? Scientific visualization, sometimes referred to in shorthand as SciVis, is the representation of data graphically as a means of gaining understanding and insight into the data. It is sometimes referred to as visual data analysis. This allows the researcher to gain insight into the system that is studied in ways previously impossible. What it is not It is important to differentiate between scientific visualization and presentation graphics. Presentation graphics is primarily concerned with the communication of information and results in ways that are easily understood. In scientific visualization, we seek to understand the data. However, often the two methods are intertwined. What is Scientific Visualization? From a computing perspective, SciVis is part of a greater field called visualization. -

Customizing ParaView

Customizing ParaView Utkarsh A. Ayachit∗ David E. DeMarle† Kitware Inc. ABSTRACT ParaView is an Open Source Visualization Application that scales, via data parallel processing, to massive problem sizes. The nature of Open Source software means that ParaView has always been well suited to customization. However having access to the source code does not imply that it is trivial to extend or reuse the application. An ongoing goal of the ParaView project is to make the code simple to extend, customize, and change arbitrarily. In this paper we present the ways by which the application can be modified to date. We then describe in more detail the latest efforts to simplify the task of reusing the most visible part of ParaView, the client GUI application. These efforts include a new CMake configuration macro that makes it simple to assemble the major functional [...] VTK developers must overcome in order to extend ParaView. Fortunately the ServerManager includes both Module [2] and server side Plugin [3] facilities. The two facilities differ in that Modules are statically linked into ParaView at compile time, whereas Plugins are dynamically linked into ParaView at run time. Either facility standardizes, through CMake [6] configuration macros, the process by which new types of VTK objects can be added to ParaView. Plugin and Module macros turn the build environment definition into a simple procedure call. A standard list of arguments is populated with information such as the names of the C++ files for the new routines, and the macro creates the correct platform independent build environment to make a library that is compatible with the application. -

Usr/Bin/Cmake # You Can Edit This File to Change Values Found and Used by Cmake

# This is the CMakeCache file. # For build in directory: /home/build2 # It was generated by CMake: /usr/bin/cmake # You can edit this file to change values found and used by cmake. # If you do not want to change any of the values, simply exit the editor. # If you do want to change a value, simply edit, save, and exit the editor. # The syntax for the file is as follows: # KEY:TYPE=VALUE # KEY is the name of a variable in the cache. # TYPE is a hint to GUIs for the type of VALUE, DO NOT EDIT TYPE!. # VALUE is the current value for the KEY. ######################## # EXTERNAL cache entries ######################## //Build shared libraries (so/dylib/dll). BUILD_SHARED_LIBS:BOOL=ON //Build testing BUILD_TESTING:BOOL=OFF //Path to a program. BZRCOMMAND:FILEPATH=BZRCOMMAND-NOTFOUND //Path to a program. CMAKE_AR:FILEPATH=/usr/bin/ar //The build mode CMAKE_BUILD_TYPE:STRING=Release //The build type for the paraview project. CMAKE_BUILD_TYPE_paraview:STRING=Release //Enable/Disable color output during build. CMAKE_COLOR_MAKEFILE:BOOL=ON //CXX compiler CMAKE_CXX_COMPILER:FILEPATH=/usr/bin/c++ //A wrapper around 'ar' adding the appropriate '--plugin' option // for the GCC compiler CMAKE_CXX_COMPILER_AR:FILEPATH=/usr/bin/gcc-ar //A wrapper around 'ranlib' adding the appropriate '--plugin' option // for the GCC compiler CMAKE_CXX_COMPILER_RANLIB:FILEPATH=/usr/bin/gcc-ranlib //Flags used by the CXX compiler during all build types. CMAKE_CXX_FLAGS:STRING= //Flags used by the CXX compiler during DEBUG builds. CMAKE_CXX_FLAGS_DEBUG:STRING=-g //Flags used by the CXX compiler during MINSIZEREL builds. CMAKE_CXX_FLAGS_MINSIZEREL:STRING=-Os -DNDEBUG //Flags used by the CXX compiler during RELEASE builds. CMAKE_CXX_FLAGS_RELEASE:STRING=-O2 -DNDEBUG //Flags used by the CXX compiler during RELWITHDEBINFO builds. -
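The `KEY:TYPE=VALUE` syntax described in the excerpt above can be read with a few lines of Python. This is only a minimal sketch under stated assumptions: the function name `parse_cmake_cache`, the regular expression, and the `SAMPLE` text (a few entries copied from the excerpt) are illustrative and not part of CMake itself, and the sketch does not handle quoted keys or other special cases of real cache files.

```python
import re

# One cache entry per line: KEY:TYPE=VALUE.
# Lines starting with '#' or '//' are comments and are skipped.
# (Hypothetical helper for illustration; not a CMake API.)
ENTRY_RE = re.compile(r"^(?P<key>[^:=#/][^:=]*):(?P<type>[A-Z]+)=(?P<value>.*)$")


def parse_cmake_cache(text):
    """Return a dict mapping KEY -> (TYPE, VALUE) for each cache entry."""
    entries = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or line.startswith("//"):
            continue
        match = ENTRY_RE.match(line)
        if match:
            entries[match.group("key")] = (match.group("type"), match.group("value"))
    return entries


# A few entries taken from the excerpt, restored to one entry per line.
SAMPLE = """\
# This is the CMakeCache file.
//Build shared libraries (so/dylib/dll).
BUILD_SHARED_LIBS:BOOL=ON
//The build mode
CMAKE_BUILD_TYPE:STRING=Release
//Flags used by the CXX compiler during all build types.
CMAKE_CXX_FLAGS:STRING=
//Flags used by the CXX compiler during MINSIZEREL builds.
CMAKE_CXX_FLAGS_MINSIZEREL:STRING=-Os -DNDEBUG
"""

cache = parse_cmake_cache(SAMPLE)
for key, (entry_type, value) in cache.items():
    print(f"{key} [{entry_type}] = {value!r}")
```

Because the value is taken as everything after the first `=` that follows the type, empty values and values that themselves contain `=` are preserved unchanged.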

PyVista: Managing & Visualizing Geospatial Data Using an Open

PYVISTA: MANAGING & VISUALIZING GEOSPATIAL DATA USING AN OPEN-SOURCE FRAMEWORK by C. Bane Sullivan © Copyright by C. Bane Sullivan, 2020 All Rights Reserved A thesis submitted to the Faculty and the Board of Trustees of the Colorado School of Mines in partial fulfillment of the requirements for the degree of Master of Science (Hydrology). Golden, Colorado Date Signed: C. Bane Sullivan Signed: Dr. Whitney J. Trainor-Guitton Thesis Advisor Golden, Colorado Date Signed: Dr. Josh Sharp Professor and Director Hydrologic Science & Engineering Program ABSTRACT There is a wide range of data types present in typical hydrogeophysical studies; being able to gather all data types for a given project into a single framework is challenging and often unachievable at an affordable cost for hydrological researchers. Steep licensing fees for commercial software and complex user interfaces in existing open software have limited the accessibility of tools to build, integrate, and make decisions with diverse types of 3D geospatial data and models. In earth science research, particularly in hydrology, restricted budgets exacerbate these limitations, creating barriers for using software to manage, visualize, and exchange 3D data in reproducible workflows. In response to these challenges, I have created the PyVista software as an open-source framework for 3D geospatial data management, fusion, and visualization. The PyVista Python package provides an accessible and intuitive interface back to a robust and established visualization library, the Visualization Toolkit (VTK), to facilitate rapid analysis and visual integration of spatially referenced datasets. This interface implements spatial data structures encompassing a majority of subsurface applications to make creating, managing, and analyzing spatial data more streamlined for domain scientists.