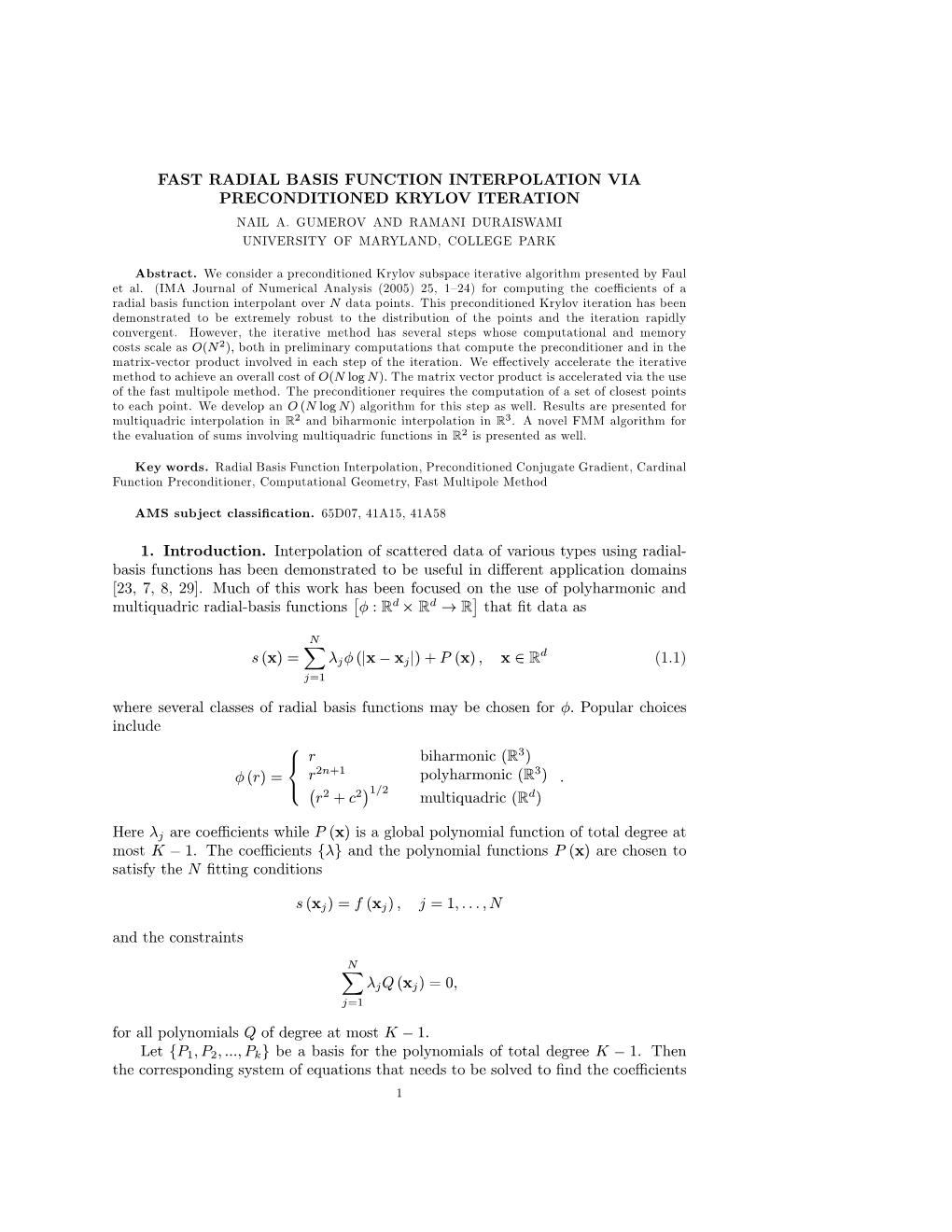

Fast Radial Basis Function Interpolation Via Preconditioned Krylov Iteration Nail A

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Implementation of Bourbaki's Elements of Mathematics in Coq

Implementation of Bourbaki’s Elements of Mathematics in Coq: Part Two, From Natural Numbers to Real Numbers JOSÉ GRIMM Inria Sophia-Antipolis, Marelle Team Email: [email protected] This paper describes a formalization of the first book of the series Elements of Mathematics by Nicolas Bourbaki, using the Coq proof assistant. In a first paper published in this journal, we presented the axioms and basic constructions (corresponding to a part of the first two chapters of book I, Theory of sets). We discuss here the set of integers (third chapter of book I, Theory of sets), the sets Z and Q (first chapter of book II, Algebra) and the set of real numbers (Chapter 4 of book III, General topology). We start with a comparison of the Bourbaki approach, the Coq standard library, and the Ssreflect library, then present our implementation. 1. INTRODUCTION 1.1 The Number Sets This paper is the second in a series that explains our work on a project (named Gaia), whose aim is to formalize, on a computer, the fundamental notions of mathematics. We have chosen the “Elements of Mathematics” of Bourbaki as our guideline, the Coq proof assistant as tool, and ssreflect as proof language. As explained in the first paper [Gri10], our implementation relies on the theory of sets, rather than a theory of types. In this paper we focus on the construction of some sets of numbers. Starting with the set of integers N (which is an additive monoid), one can define Z as its group of differences (this is a ring), then Q as its field of fractions (this is naturally a topological space), then R, the topological completion (that happens to be an Archimedean field); next comes the algebraic closure C of R.

Set-Theoretic and Algebraic Properties of Certain Families of Real Functions

Set-theoretic and Algebraic Properties of Certain Families of Real Functions Krzysztof Płotka Dissertation submitted to the Eberly College of Arts and Sciences at West Virginia University in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Mathematics Krzysztof Chris Ciesielski, Ph.D., Chair Dening Li, Ph.D. Magdalena Niewiadomska-Bugaj, Ph.D. Jerzy Wojciechowski, Ph.D. Cun-Quan Zhang, Ph.D. Department of Mathematics Morgantown, West Virginia 2001 Keywords: Darboux-like, Hamel, Sierpiński-Zygmund functions; Martin’s Axiom Copyright © 2001 Krzysztof Płotka ABSTRACT Set-theoretic and Algebraic Properties of Certain Families of Real Functions Krzysztof Płotka Given two families of real functions F1 and F2 we consider the following question: can every real function f be represented as f = f1 + f2, where f1 and f2 belong to F1 and F2, respectively? This question leads to the definition of the cardinal function Add: Add(F1, F2) is the smallest cardinality of a family F of functions for which there is no function g in F1 such that g + F is contained in F2. This work is devoted entirely to the study of the function Add for different pairs of families of real functions. We focus on the classes that are related to the additive properties and generalized continuity. Chapter 2 deals with the classes related to the generalized continuity. In particular, we show that Martin’s Axiom (MA) implies Add(D,SZ) is infinite and Add(SZ,D) equals the cardinality of the set of all real numbers. SZ and D denote the families of Sierpiński-Zygmund and Darboux functions, respectively.

The Non-Hausdorff Number of a Topological Space

THE NON-HAUSDORFF NUMBER OF A TOPOLOGICAL SPACE IVAN S. GOTCHEV Abstract. We call a non-empty subset $A$ of a topological space $X$ finitely non-Hausdorff if for every non-empty finite subset $F$ of $A$ and every family $\{U_x : x \in F\}$ of open neighborhoods $U_x$ of $x \in F$, $\bigcap\{U_x : x \in F\} \neq \emptyset$, and we define the non-Hausdorff number $nh(X)$ of $X$ as follows: $nh(X) := 1 + \sup\{|A| : A \text{ is a finitely non-Hausdorff subset of } X\}$. Using this new cardinal function we show that the following three inequalities are true for every topological space $X$: (i) $|X| \le 2^{2^{d(X)}} \cdot nh(X)$; (ii) $w(X) \le 2^{(2^{d(X)} \cdot nh(X))}$; (iii) $|X| \le d(X)^{\chi(X)} \cdot nh(X)$; and (iv) $|X| \le nh(X)^{\chi(X)L(X)}$ is true for every $T_1$-topological space $X$, where $d(X)$ is the density, $w(X)$ is the weight, $\chi(X)$ is the character and $L(X)$ is the Lindelöf degree of the space $X$. The first three inequalities extend to the class of all topological spaces Pospíšil's inequalities that for every Hausdorff space $X$, $|X| \le 2^{2^{d(X)}}$, $w(X) \le 2^{2^{d(X)}}$ and $|X| \le d(X)^{\chi(X)}$. The fourth inequality generalizes to the class of all $T_1$-spaces Arhangel'skiĭ's inequality that for every Hausdorff space $X$, $|X| \le 2^{\chi(X)L(X)}$. It is still an open question if Arhangel'skiĭ's inequality is true for all $T_1$-spaces. It follows from (iv) that the answer to this question is in the affirmative for all $T_1$-spaces with $nh(X)$ not greater than the cardinality of the continuum.

Six Strategies for Defeating the Runge Phenomenon in Gaussian Radial Basis Functions on a Finite Interval

Computers and Mathematics with Applications 60 (2010) 3108–3122. Journal homepage: www.elsevier.com/locate/camwa Six strategies for defeating the Runge Phenomenon in Gaussian radial basis functions on a finite interval John P. Boyd Department of Atmospheric, Oceanic and Space Science, University of Michigan, 2455 Hayward Avenue, Ann Arbor MI 48109, United States Article history: Received 19 August 2010. Accepted 5 October 2010. Keywords: Radial basis function; Runge Phenomenon. Abstract: Radial basis function (RBF) interpolation is a "meshless" strategy with great promise for adaptive approximation. Because it is meshless, there is no canonical grid to act as a starting point for function-adaptive grid modification. Uniform grids are therefore common with RBFs. Like polynomial interpolation, which is the limit of RBF interpolation when the RBF functions are very wide (the "flat limit"), RBF interpolants are vulnerable to the Runge Phenomenon, whether the grid is uniform or irregular: the $N$-point interpolant may diverge as $N \to \infty$ on the interval spanned by the interpolation points even for a function $f(x)$ that is analytic everywhere on and near this interval. In this work, we discuss six strategies for defeating the Runge Phenomenon, specializing to a uniform grid on a finite interval and one particular species of RBFs in one space dimension: $\phi(x) = \exp(-[\alpha^2/h^2]x^2)$ where $h$ is the grid spacing.
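The setting described in this abstract (Gaussian RBFs $\phi(x) = \exp(-[\alpha^2/h^2]x^2)$ on a uniform grid with spacing $h$) can be sketched in a few lines. The following is only an illustrative sketch, not the paper's code: the function names, the small dense solver, the choice $\alpha = 1$, the 21-point grid on $[-1, 1]$, and the classic Runge test function $1/(1 + 25x^2)$ are all assumptions made for the example.

```python
import math


def gaussian_rbf(x, alpha, h):
    """Gaussian RBF phi(x) = exp(-(alpha^2 / h^2) x^2), h = grid spacing."""
    return math.exp(-((alpha / h) ** 2) * x * x)


def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting.

    A plain dense solver, adequate for the small illustrative system below.
    """
    n = len(b)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x


def rbf_interpolant(nodes, values, alpha):
    """Return s(x) = sum_j w_j phi(x - x_j) with s(x_i) = values[i].

    Assumes `nodes` is a uniform grid with at least two points.
    """
    h = nodes[1] - nodes[0]
    matrix = [[gaussian_rbf(xi - xj, alpha, h) for xj in nodes] for xi in nodes]
    weights = solve_linear(matrix, values)

    def s(x):
        return sum(w * gaussian_rbf(x - xj, alpha, h)
                   for w, xj in zip(weights, nodes))

    return s


def runge(x):
    """Classic Runge test function (chosen here for illustration)."""
    return 1.0 / (1.0 + 25.0 * x * x)


n = 21
nodes = [-1.0 + 2.0 * i / (n - 1) for i in range(n)]
interp = rbf_interpolant(nodes, [runge(x) for x in nodes], alpha=1.0)

# The interpolation conditions hold at the nodes up to rounding error.
max_node_error = max(abs(interp(x) - runge(x)) for x in nodes)
```

Evaluating `interp` between the nodes, and repeating with smaller `alpha` (wider basis functions), is one way to explore the behaviour the abstract describes; smaller `alpha` also makes the interpolation matrix more ill-conditioned, so a plain dense solve becomes less reliable there.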

Cardinal Functions for k-Spaces

PROCEEDINGS OF THE AMERICAN MATHEMATICAL SOCIETY Volume 68, Number 3, March 1978 CARDINAL FUNCTIONS FOR k-SPACES JAMES R. BOONE, SHELDON W. DAVIS AND GARY GRUENHAGE Abstract. In this paper, four cardinal functions are defined on the class of k-spaces. Some of the relationships between these cardinal functions are studied. Characterizations of various k-spaces are presented in terms of the existence of these cardinal functions. A bound for the ordinal invariant k of Arhangelskii and Franklin is established in terms of the tightness of the space. Examples are presented which exhibit the interaction between these cardinal invariants and the ordinal invariants of Arhangelskii and Franklin. 1. Introduction. The class of k-spaces, introduced by Arens [1], has become a widely used class of topological spaces, both because of their intrinsic interest and because they provide a natural category of spaces in which to study a variety of other topological problems. The k-spaces are precisely those spaces which are the quotient spaces of locally compact spaces. In particular, $X$ is a k-space provided: $H$ is closed in $X$ if and only if $H \cap K$ is closed in $K$, for each compact set $K$. That is, $X$ has the weak topology induced by the collection of compact subspaces. Franklin [5] initiated the study of a restricted class of k-spaces, called sequential spaces. This is the class of k-spaces in which the weak topology is induced by the collection of convergent sequences. If $A \subset X$, let $k\,\mathrm{cl}(A)$ be the set of all points $p$ such that $p \in \mathrm{cl}_K(A \cap K)$ for some compact set $K$, and let $s\,\mathrm{cl}(A)$ be the set of all points $p$ such that there exists a sequence in $A$ that converges to $p$.

A Cardinal Function on the Category of Metric Spaces

International Journal of Contemporary Mathematical Sciences Vol. 9, 2014, no. 15, 703 - 713 HIKARI Ltd, www.m-hikari.com http://dx.doi.org/10.12988/ijcms.2014.4442 A Cardinal Function on the Category of Metric Spaces Fatemah Ayatollah Zadeh Shirazi Faculty of Mathematics, Statistics and Computer Science, College of Science University of Tehran, Enghelab Ave., Tehran, Iran Zakieh Farabi Khanghahi Department of Mathematics, Faculty of Mathematical Sciences University of Mazandaran, Babolsar, Iran Copyright © 2014 Fatemah Ayatollah Zadeh Shirazi and Zakieh Farabi Khanghahi. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. Abstract In the following text, in the category of metric spaces, we introduce a topologically invariant cardinal function, Θ (clearly Θ is a tool to classify metric spaces). For this aim, in a metric space X we consider cone metrics (X, H, P, ρ) such that H is a Hilbert space, the image of ρ has nonempty interior, and this cone metric induces the original metric topology on X. We prove that for all sufficiently large cardinal numbers α, there exists a metric space (X, d) with Θ(X, d) = α. Mathematics Subject Classification: 54E35, 46B99 Keywords: cone, cone metric order, Fp-cone metric order, Hilbert space, Hilbert-cone metric order, metric space, solid cone 1 Introduction As has been mentioned in several texts, cone metric spaces in the following form were introduced for the first time in [9] as a generalization of metric

Power Homogeneous Compacta and the Order Theory of Local Bases

POWER HOMOGENEOUS COMPACTA AND THE ORDER THEORY OF LOCAL BASES DAVID MILOVICH AND G. J. RIDDERBOS Abstract. We show that if a power homogeneous compactum $X$ has character $\kappa^+$ and density at most $\kappa$, then there is a nonempty open $U \subseteq X$ such that every $p$ in $U$ is flat, “flat” meaning that $p$ has a family $\mathcal{F}$ of $\chi(p, X)$-many neighborhoods such that $p$ is not in the interior of the intersection of any infinite subfamily of $\mathcal{F}$. The binary notion of a point being flat or not flat is refined by a cardinal function, the local Noetherian type, which is in turn refined by the $\kappa$-wide splitting numbers, a new family of cardinal functions we introduce. We show that the flatness of $p$ and the $\kappa$-wide splitting numbers of $p$ are invariant with respect to passing from $p$ in $X$ to $\langle p \rangle_{\alpha<\lambda}$ in $X^\lambda$, provided that $\lambda < \chi(p, X)$, or, respectively, that $\lambda < \operatorname{cf} \kappa$. The above $<\chi(p, X)$-power-invariance is not generally true for the local Noetherian type of $p$, as shown by a counterexample where $\chi(p, X)$ is singular. 1. Introduction Definition 1.1. A space $X$ is homogeneous if for any $p, q \in X$ there is a homeomorphism $h\colon X \to X$ such that $h(p) = q$. There are several known restrictions on the cardinalities of homogeneous compacta. First we mention a classical result, and then we very briefly survey some more recent progress. Theorem 1.2. • Arhangel'skiĭ's Theorem: if $X$ is compact, then $|X| \le 2^{\chi(X)}$.

On Approximate Cardinal Preconditioning Methods for Solving PDEs with Radial Basis Functions

On Approximate Cardinal Preconditioning Methods for Solving PDEs with Radial Basis Functions Damian Brown Department of Mathematics, University of Leicester, University Road, Leicester, LE1 7RH UK. Leevan Ling Department of Mathematics, City University of Hong Kong, 83, Tat Chee Avenue, Kowloon, Hong Kong. Edward Kansa Department of Mechanical and Aeronautical Engineering, One Shields Ave., University of California, Davis, Davis, CA 95616 USA. Jeremy Levesley Department of Mathematics, University of Leicester, University Road, Leicester, LE1 7RH UK. Abstract The approximate cardinal basis function (ACBF) preconditioning technique has been used to solve partial differential equations (PDEs) with radial basis functions (RBFs). In [31], a preconditioning scheme that is based upon constructing the least-squares approximate cardinal basis function from linear combinations of the RBF-PDE matrix elements has shown very attractive numerical results. This preconditioning technique is sufficiently general that it can be easily applied to many differential operators. In this paper, we review the ACBF preconditioning techniques previously used for interpolation problems and investigate a class of preconditioners based on the one proposed in [31] when a cardinality condition is enforced on different subsets. We numerically compare the ACBF preconditioners on several numerical examples of Poisson's, modified Helmholtz and Helmholtz equations, as well as a diffusion equation and discuss their performance. Key words: radial basis function, partial differential equation, preconditioner, cardinal basis function Preprint submitted to Engng. Anal. Bound. Elem. 17 November 2004 Table 1. Examples of infinitely smooth RBFs: Hardy's multiquadric, $\phi(r) = \sqrt{r^2 + c^2}$; inverse multiquadric, $\phi(r) = 1/\sqrt{r^2 + c^2}$; Gaussian spline, $\phi(r) = e^{-cr^2}$.
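The three infinitely smooth RBFs listed in Table 1 of this snippet are simple enough to write out directly. The sketch below is illustrative only: the function names and the dictionary `SMOOTH_RBFS` are hypothetical, and `c` is assumed to be the positive shape parameter appearing in the table.

```python
import math


def multiquadric(r, c):
    """Hardy's multiquadric: phi(r) = sqrt(r^2 + c^2)."""
    return math.sqrt(r * r + c * c)


def inverse_multiquadric(r, c):
    """Inverse multiquadric: phi(r) = 1 / sqrt(r^2 + c^2)."""
    return 1.0 / math.sqrt(r * r + c * c)


def gaussian(r, c):
    """Gaussian: phi(r) = exp(-c r^2)."""
    return math.exp(-c * r * r)


# Hypothetical lookup table, convenient when comparing kernels.
SMOOTH_RBFS = {
    "multiquadric": multiquadric,
    "inverse_multiquadric": inverse_multiquadric,
    "gaussian": gaussian,
}

# Evaluate each kernel at radius r = 3 with shape parameter c = 4.
samples = {name: phi(3.0, 4.0) for name, phi in SMOOTH_RBFS.items()}
```

Each function takes the radial distance `r` rather than a point, so the same definitions apply in any space dimension once `r` is computed as a Euclidean norm.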

On the Cardinality of a Topological Space

PROCEEDINGS OF THE AMERICAN MATHEMATICAL SOCIETY Volume 66, Number 1, September 1977 ON THE CARDINALITY OF A TOPOLOGICAL SPACE ARTHUR CHARLESWORTH Abstract. In recent papers, B. Šapirovskiĭ, R. Pol, and R. E. Hodel have used a transfinite construction technique of Šapirovskiĭ to provide a unified treatment of fundamental inequalities in the theory of cardinal functions. Šapirovskiĭ's technique is used in this paper to establish an inequality whose countable version states that the continuum is an upper bound for the cardinality of any Lindelöf space having countable pseudocharacter and a point-continuum separating open cover. In addition, the unified treatment is extended to include a recent theorem of Šapirovskiĭ concerning the cardinality of $T_3$ spaces. 1. Introduction. Throughout this paper $\alpha$ and $\beta$ denote ordinals, $m$ and $n$ denote infinite cardinals, and $|A|$ denotes the cardinality of a set $A$. We let $d$, $c$, $L$, $w$, $\pi$, and $\psi$ denote the density, cellularity, Lindelöf degree, weight, $\pi$-weight, and pseudocharacter. (For definitions see Juhász [5].) We also let $nw$, $\pi\chi$, and $psw$ denote the net weight, $\pi$-character, and point separating weight. A collection $\mathcal{N}$ of subsets of a topological space $X$ is a network for $X$ if whenever $p \in V$, with $V$ open in $X$, we have $p \in N \subset V$ for some $N$ in $\mathcal{N}$. A collection $\mathcal{U}$ of nonempty open sets is a $\pi$-local base at $p$ if whenever $p \in V$, with $V$ open in $X$, we have $U \subset V$ for some $U$ in $\mathcal{U}$. A separating open cover $\mathcal{S}$ for $X$ is an open cover for $X$ having the property that for each distinct $x$ and $y$ in $X$ there is an $S$ in $\mathcal{S}$ such that $x \in S$ and $y \notin S$.

The Coherence of Semifilters: a Survey

The Coherence of Semifilters: a Survey Taras Banakh and Lubomyr Zdomskyy Contents 1. Some history and motivation. 2. Introducing semifilters. 3. The lattice SF of semifilters. 4. The limit operator on SF. 5. Algebraic operations on the lattice SF. 6. (Sub)coherence relation. 7. Near coherence of semifilters. 8. A characterization of the semifilters coherent to Fr or Fr⊥. 9. The strict subcoherence and regularity of semifilters. 10. The coherence lattice [SF]. 11. Topologizing the coherence lattice [SF]. 12. Algebraic operations on [SF]. 13. Cardinal characteristics of semifilters: general theory. 14. Cardinal characteristics of semifilters: the four levels of complexity. 15. Cardinal characteristics of the first complexity level. 16. The minimization πχ[−] of the π-character. 17. The function representation%F of a semifilter. 18. The nonification πχc[−] of πχ[−]. 19. The “Ideal” cardinal characteristics of semifilters. 20. The relation ≤F and its cardinal characteristics. 21. Constructing non-coherent semifilters. 22. Total coherence. 23. The supremization of the linkedness number πp(−). 24. Some cardinal characteristics of rapid semifilters. 25. Inequalities between some critical values. 26. The consistent structures of the coherence lattice [SF]. 27. Some applications. 28. Some open problems. Appendix. 1. Some history and motivation The aim of this survey is to present the principal ideas and results of the theory of semifilters from the book “Coherence of Semifilters” [6], which will be our basic reference.

The Measure Ring for a Cube of Arbitrary Dimension

Pacific Journal of Mathematics THE MEASURE RING FOR A CUBE OF ARBITRARY DIMENSION JAMES MICHAEL GARDNER FELL Vol. 5, No. 4 December 1955 1. Introduction. From Maharam's theorem [2] on the structure of measure algebras it is very easy to obtain a unique characterization, in terms of cardinal numbers, of an arbitrary measure ring. An example of a measure ring is the ring of Hellinger types of (finite) measures on an additive class of sets. In this note, the cardinal numbers are computed which characterize the ring of Hellinger types of measures on the Baire subsets of a cube of arbitrary dimension. 2. Definitions. A lattice $R$ is a Boolean σ-ring if (a) the family $R_x$ of all subelements of any given element $x$ forms a Boolean algebra, and (b) any countable family of elements has a least upper bound. If, in addition, for each $x$ in $R$ there exists some countably additive finite-valued real function on $R_x$ which is 0 only for the zero-element of $R$, then $R$ is a measure ring. A Boolean σ-ring with a largest element is a Boolean σ-algebra. A measure ring with a largest element is a measure algebra. The measure algebra of a finite measure μ is the Boolean σ-algebra of μ-measurable sets modulo μ-null sets. A subset $S$ of a Boolean σ-algebra $R$ is a σ-basis if the smallest σ-subalgebra of $R$ containing $S$ is $R$ itself.

Spline Techniques for Magnetic Fields

PFC/RR-83-27 DOE/ET-51013-98 UC-20 Spline Techniques for Magnetic Fields John G. Aspinall MIT Plasma Fusion Center, Cambridge Ma. USA June 8, 1984 Spline techniques are becoming an increasingly popular method to approximate magnetic fields for computational studies in controlled fusion. This report provides an introduction to the theory and practice of B-spline techniques for the computational physicist. Contents: 0. Forward. 1. What is a Spline? 2. B-splines in One Dimension - on an Infinite Set of Knots. 3. B-splines in One Dimension - Boundary Conditions. 4. Other Methods and Bases for Interpolation. 5. B-splines in Multiple Dimensions. 6. Approximating a Magnetic Field with B-splines. 7. Algorithms and Data Structure. 8. Error and Sensitivity Analysis. 0. Forward This report is an overview of B-spline techniques, oriented towards magnetic field computation. These techniques form a powerful mathematical approximating method for many physics and engineering calculations. Sections 1, 2, and 3 form the core of the report. In section 1, the concept of a polynomial spline is introduced. Section 2 shows how a particular spline with well chosen properties, the B-spline, can be used to build any spline. In section 3, the description of how to solve a simple spline approximation problem is completed, and some practical examples of using splines are shown. All these sections deal exclusively in scalar functions of one variable for simplicity. Section 4 is partly digression. Techniques that are not B-spline techniques, but are closely related, are covered.