Author.. Signature Redacted I Department of Brain and Cognitive Science August 12, 2016 Signature Redacted Certified By

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

View Annual Report

TO OUR SHAREHOLDERS, CUSTOMERS, PARTNERS AND EMPLOYEES: It is a humbling experience to write this letter to you as only the third CEO in Microsoft’s history. As I said when I took this role, I originally joined Microsoft to have an opportunity to change the world through technology and empower people to do amazing things. Many companies aspire to change the world, but very few have the talent, resources and perseverance of Microsoft. I believe this is a landmark moment for the company and for our industry as a whole. Cloud and mobile technologies are redefining how people work and play. Three billion people will soon be connected to Internet-enabled devices; 212 billion sensors will come online in a few short years; trillions will be spent in consumer and business technologies. But it’s not about technology for technology’s sake! It’s our mission to enable the use of technology to realize the true potential of people, teams and organizations. As I shared in my email to employees in July, we will be the productivity and platform company for this mobile-first and cloud-first world. We will empower every person and every organization on the planet to do more and achieve more. And we will accomplish this by building incredible Digital Work and Life Experiences, supported by our Cloud Operating System, the Device Operating System and Hardware platforms. In the same way that we aspired to and achieved our original vision of a PC on every desk and in every home, we will reinvent productivity. This clarity of purpose and boldness of our aspiration inspires me and all of us at Microsoft. -

Hybrid Cloud Infrastructure-As-A-Service (Iaas) Antonius Susanto | Partner Business & Development Lead | Microsoft

Hybrid Cloud Infrastructure-as-a-Service (IaaS) Antonius Susanto | Partner Business & Development Lead | Microsoft Cloud Channel Summit 2015 | @rhipecloud #RCCS15 75% 49% ~49%75%10K40%new allowgrowth of federal work personal in & 62%93%80%only can’t ofof 24% employeesemployees use info requiresmobileindustryglobal data devices networkregulations for foradmitare effectivehave ineffective to a violating BYOD decision at - 10K 40% contributionbusinesscreatedgenerated in use last/ year 5 years making!compliancecollaboration!policy in place policies ¥ Computing Technology Industry Association's (CompTIA) 2nd annual Trends in Enterprise Mobility study from February 2013. *The Future of Corporate IT 2013-2017 ** CEB Survey of 165,000 employees †2012 Survey on Self-Service BI and Analytics, Unisphere Research 2017 $107Bil 67% 84% Spending on public IT cloud services $107 59%84%67% oflookwant customers toan their established cloud expect provider relationshipto for is expected to be more than planning,withpurchase a vendor aintegration wide to varietytrust and them of ongoing cloud as a Cloud Billion 59% management$107BillionServiceservices Provider from ain single 2017 vendor Datacenters are being transformed 71% 70% 45% of companies see of CIOs will embrace of total IT services will rising demand for IT a “cloud first" strategy be spent on cloud projects in 2013 in 2016 services by 2020 -InformationWeek -IDC -Forrester Sources: “Outlook 2013,” InformationWeek Report, 12/06/2012; “Worldwide CIO Agenda 2013 Top 10 Predictions,” IDC, doc #238464, -

Setting up Rep Pair

InMage Scout Standard Release Notes Version – 8.0.1 GA Table: Document History Document Document Remarks Version Date 1.0 March 1, 2015 Standard version 1.1 April 7, 2015 Minor update 1.2 Nov 20 ,2015 Updated CX and vContinuum MT known issues and limitations sections. 2 Contents 1 Disclaimer of Warranty .................................................................................................................................... 4 2 About this document ........................................................................................................................................ 4 3 Overview ............................................................................................................................................................. 5 3.1 Scout ........................................................................................................................................................ 5 3.2 ScoutCloud RX ....................................................................................................................................... 5 4 What’s new in this release? ............................................................................................................................. 5 5 Upgrade Path ...................................................................................................................................................... 5 5.1 Agents .................................................................................................................................................... -

Microsoft Azure

Yesterday Datacenter, hardware, individual servers, and VMs Slow, expensive innovation Siloed infrastructure and operations Development constraints Tactical and reactive thinking Today and tomorrow People, cloud, apps, service-focused Standardized, automated processes and configurations Industry-standard, low-cost hardware Fast, cost-effective innovation, developer empowerment Holistic, strategic thinking Bringing the cloud to you What if you could have the control of the datacenter and the power of the cloud? Reduced developer chaos and shadow IT Simplified, abstracted experience Added consistency to modern development One portal, one user experience Write once, deploy anywhere Microsoft Azure Microsoft Private Cloud Microsoft Azure (on premises | hosted) Microsoft Private Cloud Microsoft Azure (on premises | hosted) MicrosoftMicrosoft Private Azure StackCloud Microsoft Azure (on(on(on- -premisespremisespremises/hosted) || hosted)hosted) App innovation MicrosoftMicrosoft Private Azure StackCloud Microsoft Azure (on(on -premisespremises/hosted) | hosted) Cloud-optimized application platform Cloud-consistent service delivery Cloud-inspired hybrid infrastructure MicrosoftMicrosoft Private Azure StackCloud (on(on -premisespremises/hosted) | hosted) . Server Roles/Features GUI Shell Windows/ Minimal Server Full Server WindowsNT Interface Server Core Server Core Windows NT to Windows Server 2008 Windows Server 2012 Windows Server and and 2003 Windows Server 2008 R2 Windows Server 2012 R2 . Basic Client . Experience . Server with Local Admin -

REO BCA Administration Guide

Overland ® Storage REO Business Continuity Appliance Administration Guide REO BCA Administration Guide ©2007-2009 Overland Storage, Inc. All rights reserved. Overland®, Overland Data®, Overland Storage®, ARCvault®, LibraryPro®, LoaderXpress®, Multi-SitePAC®, NEO Series®, PowerLoader®, Protection OS®, REO®, REO 4000®, REO Series®, Snap Care®, Snap Server®, StorAssure®, ULTAMUS®, VR2®, WebTLC®, and XchangeNOW® are registered trademarks of Overland Storage, Inc. GuardianOS™, NEO™, REO Compass™, SnapWrite™, Snap Enterprise Data Replicator™, and Snap Server Manager™ are trademarks of Overland Storage, Inc. All other brand names or trademarks are the property of their respective owners. The names of companies and individuals used in examples are fictitious and intended to illustrate the use of the software. Any resemblance to actual companies or individuals, whether past or present, is coincidental. PROPRIETARY NOTICE All information contained in or disclosed by this document is considered proprietary by Overland Storage. By accepting this material the recipient agrees that this material and the information contained therein are held in confidence and in trust and will not be used, reproduced in whole or in part, nor its contents revealed to others, except to meet the purpose for which it was delivered. It is understood that no right is conveyed to reproduce or have reproduced any item herein disclosed without express permission from Overland Storage. Overland Storage provides this manual as is, without warranty of any kind, either expressed or implied, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. Overland Storage may make improvements or changes in the products or programs described in this manual at any time. -

1. Azure Site Recovery: 1 VM Promo

Building disaster-recovery solution using Azure Site Recovery (ASR) for VMware & Physical Servers (Part 2) KR Kandavel Technical Solutions Professional Asia Azure Site Recovery Specialist Team Microsoft Corporation . Azure Site Recovery use case scenarios for DR (Part 1) . 1. On-premise to On-premise DR (for Hyper-V and Virtual Machine Manager) . 2. On-premise to On-premise DR (for Hyper-V, Virtual Machine Manager and SAN) . 3. On-premise to Azure DR (for Hyper-V, and Virtual Machine Manager) . 4. On-premise to Azure DR (for Hyper-V) . Azure Site Recovery use case scenarios for DR (Part 2) . 5. On-premise to On-premise DR (for VMware and Physical Servers) . 6. On-premise to Service Provider DR (for VMware and Physical Servers) . 7. On-premise to Azure DR (for VMware and Physical Servers) . 8. On-premise to Azure Migration (for VMware, Hyper-V and Physical Servers) . 9. Azure Site Recovery - FAQ, Pricing & Licensing . Wrap up Replication Replication Replication SAN SAN Microsoft Hyper-V Hyper-V Hyper-V Hyper-V Hyper-V Azure Hyper-V to Hyper-V Hyper-V to Hyper-V Hyper-V to Microsoft Azure (on-premises) (on-premises) Replication Replication Microsoft VMware or physical VMware VMware or physical Azure VMware or physical to VMware or physical to VMware (on-premises) Microsoft Azure DR Scenario 5: On-premise to On-premise DR for VMware and Physical Servers Microsoft Azure Site Recovery Download InMage Scout InMage InMage Scout Scout Replication and orchestration channel: InMage Replication VMware/ Primary Recovery Physical site site VMware Download -

Azure Site Recovery: Automated VM Provisioning and Failover

White Paper Azure Site Recovery: Automated VM Provisioning and Failover Sponsored by: Microsoft Peter Rutten Al Gillen March 2015 EXECUTIVE SUMMARY Data is the currency of modern business, and vigorously protecting this data is imperative, regardless of industry or company size — from small and medium-sized business (SMB) to enterprise to hosted service provider (HSP). The penalty for prolonged downtime or data loss can be severe. In the 3rd Platform era, in which mobile, big data, cloud, and social networking are transforming IT, data of systems of record is being joined by a tsunami of data from systems of engagement, and together they form the basis for data of insight from which competitive advantage is obtained. In this evolving environment, data protection means ensuring that the infrastructure — whether onsite or in the cloud — that captures and processes data cannot go down (high availability, or HA); ensuring that if there is an infrastructure problem, no data is lost (disaster recovery, or DR); and guaranteeing that if data is lost, the most recent copies possible of that data exist somewhere, can be retrieved, and can be brought online instantly to maintain uninterrupted business continuity (backup and recovery). The majority of mission-critical workloads are running on x86 systems today, and classic availability software that has been used in the past primarily on RISC-based Unix servers can be costly to acquire and complex to implement on x86 servers. Further, implementation of classic HA software often required built-in application awareness. These complexities caused enough financial and resource burden that medium-sized firms, and even some branch offices or remote sites of larger organizations, elected to avoid the expense and live dangerously — without an availability solution. -

Meet UCSC's Ninth Chancellor: Denice D. Denton

UCUC SANTASANTA CRUZCRUZ REVIEW Spring 2005 Meet UCSC’s Ninth Chancellor: Denice D. Denton Celebrating 40 years of alumni achievement Providing financial support for students UC SANTA CRUZ REVIEW UC Santa Cruz Q&A: Chancellor Review 8 Denice D. Denton Chancellor New chancellor Denice Denton Denice D. Denton describes the UCSC qualities Vice Chancellor, University Relations Ronald P. Suduiko that attracted her to the post— and that make her optimistic Associate Vice Chancellor Communications about the campus’s future. Elizabeth Irwin Editor schraub paul Jim Burns Art Director 40 Years... Jim MacKenzie 10 and Counting Associate Editors Julie Packard, executive Mary Ann Dewey Jeanne Lance director of the Monterey Bay Aquarium, is one of many Writers Louise Gilmore Donahue alumni we celebrate to mark Jennifer McNulty the campus’s 40th year. Scott Rappaport Jennifer Dunn, student Doreen Schack Telephone Outreach Program Tim Stephens r. r. jones r. r. Cover Photography Cornerstone Paul Schraub (B.A. Politics ’75, Stevenson) 22 Offi ce of University Relations Campaign Update Carriage House Raising money for scholarships When a student calls, say ‘YES.’ University of California 1156 High Street and fellowships, which support Santa Cruz, CA 95064-1077 students like Charles Tolliver, is a Voice: 831.459.2501 priority of UCSC’s fi rst campus- Fax: 831.459.5795 wide fundraising campaign. tudents are making an all-out effort this year to raise funds for E-mail: [email protected] scholarships and fellowships at UC Santa Cruz. They are asking Web: review.ucsc.edu S Produced by UC Santa Cruz Public Affairs jim mackenzie you to help by making a generous pledge 3/05(05-045/89.3M) Departments to the $50 million Cornerstone Campaign. -

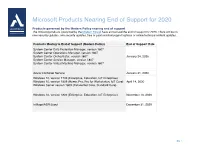

Microsoft Products Nearing End of Support for 2020

Microsoft Products Nearing End of Support for 2020 Products governed by the Modern Policy nearing end of support The following products (governed by the Modern Policy) have announced the end of support for 2020. There will be no new security updates, non-security updates, free or paid assisted support options or online technical content updates. Products Moving to End of Support (Modern Policy) End of Support Date System Center Data Protection Manager, version 1807 System Center Operations Manager, version 1807 System Center Orchestrator, version 1807 January 24, 2020 System Center Service Manager, version 1807 System Center Virtual Machine Manager, version 1807 Azure Container Service January 31, 2020 Windows 10, version 1709 (Enterprise, Education, IoT Enterprise) Windows 10, version 1809 (Home, Pro, Pro for Workstation, IoT Core) April 14, 2020 Windows Server version 1809 (Datacenter Core, Standard Core) Windows 10, version 1803 (Enterprise, Education, IoT Enterprise) November 10, 2020 InMage/ASR Scout December 31, 2020 pg. 1 Microsoft Products Nearing End of Support for 2020 Products governed by the Fixed Policy nearing end of support The following products (governed by the Fixed Policy) have announced the end of support in 2020. There will be no new security updates, non-security updates, free or paid assisted support options or online technical content updates. End of Support Products Moving to End of Support (Fixed Policy) Date Exchange Server 2010 (all editions) Microsoft Dynamics NAV 2009 R2 Microsoft Expression Blend 3 Microsoft Expression Design 3 Microsoft Expression Encoder 3 Microsoft Expression Studio 3 Microsoft Expression Web 3 January 14, 2020 Microsoft Hyper-V Server 2008 Microsoft Hyper-V Server 2008 R2 Windows 7 Windows Embedded Handheld 6.5 Windows Server 2008 R2 Windows Server 2008 Windows Server Update Services 3.0 April 14, 2020 Forefront Unified Access Gateway 2010 Microsoft Forefront Threat Management Gateway 2010 Enterprise Microsoft HPC Pack 2008 R2 pg. -

Disaster Recovery AS a SERVICE Microsoft Has Made a Big Bet This Year That Draas Will Be a Killer Application for Its Cloud OS and Azure Service

Foley: 5 Microsoft Changes That Mattered Most December 2014 VOL.20 NO.12 REDMONDMAG.COM Disaster Recovery AS A SERVICE Microsoft has made a big bet this year that DRaaS will be a killer application for its Cloud OS and Azure service. Numerous players are making similar gambits. Also: INSIDE HYPER-V REPLICA & AZURE SITE RECOVERY › What’s Missing in the System Center Technical Preview › Why You Must Adapt to the Subscription Services Shift Untitled-3 1 11/14/14 1:37 PM PREPARE. MAXIMIZE RESOURCES. Maintain business productivity. Reduce complexity. Millions of mailboxes migrated to Exchange. DELIVER DETAILED PRE-MIGRATION ASSESSMENTS. MIGRATE. ZeroIMPACT MANAGE. Hundreds of terabytes migrated Reduce to SharePoint. dataloss. Millions of users Work with migrated to legacy Active Directory. systems. COEXIST. CUT COSTS. MAXIMIZE VALUE. No disruptions. 24/7 support. Zero. Discover Dell’s ZeroIMPACT migration solutions. Migrating from Windows Server 2003? You’ll need a solid migration plan. When support for Windows Server 2003 ends, discover zero downtime, zero data loss and zero budget hassles. It’s time for a ZeroIMPACT migration. Learn more at software.dell.com/Win2013Migration Untitled-4 1 10/31/14 3:14 PM Redmond The Independent Voice of the Microsoft IT CommunityContentsDECEMBER 2014 COVER STORY REDMOND REPORT 4 Microsoft Azure Disaster Marketplace Is Now Open Recovery Approximately 1,000 applications and tools are available on the new one-stop as a Microsoft store to provide Service Azure virtual images. TECH & TOOLS WATCH Microsoft has made a big bet this year that disaster 6 BlueStripe Embeds recovery as a service will be App Monitor into a killer application for its System Center, Cloud OS and Azure Windows Azure Pack service. -

Ray Bernard [email protected]

Ray Bernard [email protected] PROFESSIONAL EXPERIENCE SuprFanz.com, Toronto, Canada- Founder (2016-Present) SuprFanz is a cloud-based marketing company which helps companies discover and market directly to their community of “SuprFanz” using data science over social media like Facebook, LinkedIn, Twitter, Meetup.com, StackOverflow and Github. We used Data Science to understand our community and reach out targets at scale in a personalized way. As a founder, I developed, designed and programmed our cloud solution on AWS, Google cloud and Digital Ocean using Python 3.x. Our solution follows a microarchitecture approach built on Docker containers. Also, I have designed all of our solutions job task functions using Celery/ RabbitMQ. We have also gained expertise in programming API interfaces in Python, Javascript, and Neo4j. Our Marketing platform helps accelerate the growth of small to medium size business. TicketMaster, -- Toronto, Canada (2018-Present) We are developing cloud-based ticket recommendation system in Python using Facebook messenger API and Neo4j to recommend artists similar sounding artists who are playing near you. The system uses AI and NLP to discover meaning from Facebook messenger. It is scheduled to be released in April 2019. Maxta Inc, NY, NY - Pre Sales Engineer (2014-2016) Full contributing Sr. Systems Engineer responsible for helping create the pre-sales strategy and drive the pre-sales effort in the field in the North East. Tradeshow support, delivering the technical presentation and helping drive successful POCs. EMC | Isilon systems, Hopkinton, MA - Global Presales Engineer (2011-2013) ● Won large financial Global Accounts for EMC|Isilon at ADP, Thomson Reuters, Bank of America, Citigroup, Barclays CAP. -

A Preliminary Analysis of Mergers and Acquisitions by Microsoft from 1992 to 2016: a Resource and Competence Perspective

A Service of Leibniz-Informationszentrum econstor Wirtschaft Leibniz Information Centre Make Your Publications Visible. zbw for Economics Lopez Giron, Ali Jose; Vialle, Pierre Conference Paper A preliminary analysis of mergers and acquisitions by Microsoft from 1992 to 2016: A resource and competence perspective 28th European Regional Conference of the International Telecommunications Society (ITS): "Competition and Regulation in the Information Age", Passau, Germany, 30th July - 2nd August, 2017 Provided in Cooperation with: International Telecommunications Society (ITS) Suggested Citation: Lopez Giron, Ali Jose; Vialle, Pierre (2017) : A preliminary analysis of mergers and acquisitions by Microsoft from 1992 to 2016: A resource and competence perspective, 28th European Regional Conference of the International Telecommunications Society (ITS): "Competition and Regulation in the Information Age", Passau, Germany, 30th July - 2nd August, 2017, International Telecommunications Society (ITS), Calgary This Version is available at: http://hdl.handle.net/10419/169462 Standard-Nutzungsbedingungen: Terms of use: Die Dokumente auf EconStor dürfen zu eigenen wissenschaftlichen Documents in EconStor may be saved and copied for your Zwecken und zum Privatgebrauch gespeichert und kopiert werden. personal and scholarly purposes. Sie dürfen die Dokumente nicht für öffentliche oder kommerzielle You are not to copy documents for public or commercial Zwecke vervielfältigen, öffentlich ausstellen, öffentlich zugänglich purposes, to exhibit the documents publicly, to make them machen, vertreiben oder anderweitig nutzen. publicly available on the internet, or to distribute or otherwise use the documents in public. Sofern die Verfasser die Dokumente unter Open-Content-Lizenzen (insbesondere CC-Lizenzen) zur Verfügung gestellt haben sollten, If the documents have been made available under an Open gelten abweichend von diesen Nutzungsbedingungen die in der dort Content Licence (especially Creative Commons Licences), you genannten Lizenz gewährten Nutzungsrechte.