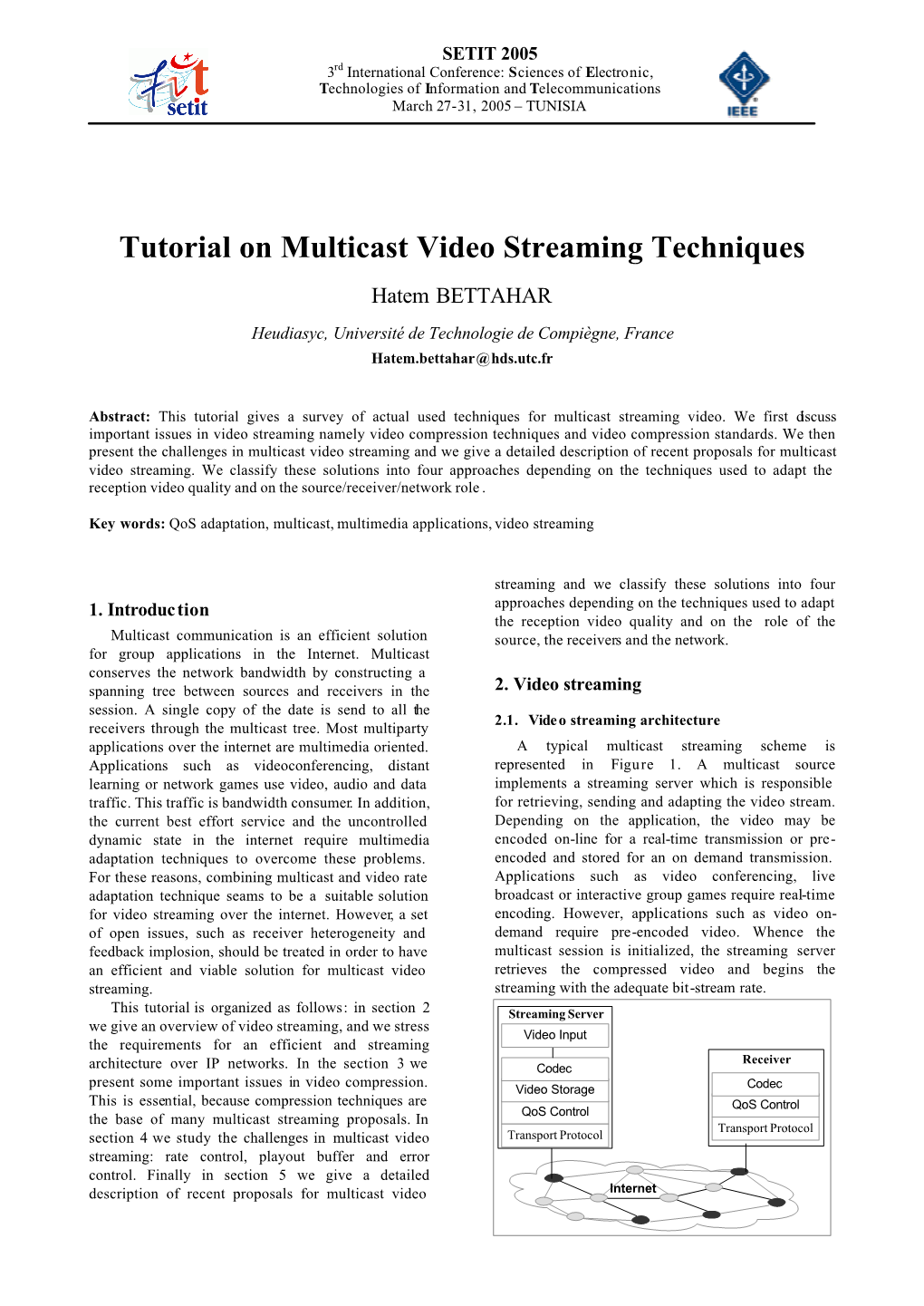

Tutorial on Multicast Video Streaming Techniques

Hatem BETTAHAR

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

IP Multicast Routing Technology Overview

Contents: Information About IP Multicast Technology; Additional References for IP Multicast.

Information About IP Multicast Technology: This section provides information about IP multicast technology.

About IP Multicast: Controlling the transmission rate to a multicast group is not supported.

At one end of the IP communication spectrum is IP unicast, where a source IP host sends packets to a specific destination IP host. In IP unicast, the destination address in the IP packet is the address of a single, unique host in the IP network. These IP packets are forwarded across the network from the source to the destination host by devices. At each point on the path between source and destination, a device uses a unicast routing table to make unicast forwarding decisions, based on the IP destination address in the packet.

At the other end of the IP communication spectrum is an IP broadcast, where a source host sends packets to all hosts on a network segment. The destination address of an IP broadcast packet has the host portion of the destination IP address set to all ones and the network portion set to the address of the subnet. IP hosts, including devices, understand that packets, which contain an IP broadcast address as the destination address, are addressed to all IP hosts on the subnet. Unless specifically configured otherwise, devices do not forward IP broadcast packets, so IP broadcast communication is normally limited to a local subnet.

IP multicasting falls between IP unicast and IP broadcast communication. IP multicast communication enables a host to send IP packets to a group of hosts anywhere within the IP network.
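The broadcast addressing rule in this excerpt (network portion kept, host portion set to all ones) can be illustrated with a minimal sketch. It uses Python's standard `ipaddress` module; the helper name `directed_broadcast` and the example subnet 192.0.2.0/24 are assumptions made for illustration and do not come from the excerpted guide.

```python
import ipaddress


def directed_broadcast(subnet: str) -> str:
    """Return the broadcast address of an IPv4 subnet.

    The network bits are kept and every host bit is set to one.
    `directed_broadcast` is a hypothetical helper name.
    """
    net = ipaddress.ip_network(subnet)
    # hostmask has ones exactly in the host portion of the address.
    all_ones_host = int(net.network_address) | int(net.hostmask)
    return str(ipaddress.IPv4Address(all_ones_host))


if __name__ == "__main__":
    # Example subnet chosen for illustration only.
    print(directed_broadcast("192.0.2.0/24"))
```

The standard library exposes the same value as `ip_network(...).broadcast_address`; the bitwise form is written out here only to make the "host portion all ones" rule visible.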

WAN-LAN PIM Multicast Routing and LAN IGMP FEATURE OVERVIEW and CONFIGURATION GUIDE

Technical Guide

Introduction: This guide describes WAN-LAN PIM Multicast Routing and IGMP on the LAN and how to configure WAN-LAN PIM multicast routing and LAN IGMP snooping. The Allied Telesis Next Generation Firewalls (NGFWs) can perform routing of IPv4 and IPv6 multicast, using PIM-SM and PIM-DM. Also, switching interfaces of the NGFWs are IGMP aware, and will only forward multicast streams to those switch ports that have received reports. IGMP snooping allows a device to only forward multicast streams to the links on which they have been requested.

PIM Sparse mode requires specific designated routers to receive notification of all streams destined to specific ranges of multicast addresses. When a router needs to get hold of a given group, it sends a request to the designated Rendezvous Point for that group. If there is a source in the network that is transmitting a stream to this group, then the Rendezvous Point will be receiving it, and will forward it to the requesting router.

C613-22042-00 REV A, alliedtelesis.com

Contents:
- Introduction
- Products and software version that apply to this guide
- Configuring WAN-LAN PIM Multicast Routing and LAN IGMP Snooping
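The snooping behaviour described in this excerpt (forward a stream only to ports that have reported interest in it) can be sketched as a toy model. This is not the Allied Telesis implementation: the class `SnoopingSwitch`, its method names, and the port numbers are hypothetical, and details that real switches handle (router ports, query timers, unknown-group flooding policy) are deliberately left out.

```python
from collections import defaultdict


class SnoopingSwitch:
    """Toy model of an IGMP-snooping membership table (illustrative only)."""

    def __init__(self) -> None:
        # group address -> set of ports that sent a membership report
        self._members = defaultdict(set)

    def report(self, port: int, group: str) -> None:
        """Record that a host behind `port` asked for `group`."""
        self._members[group].add(port)

    def leave(self, port: int, group: str) -> None:
        """Record that `port` no longer wants `group`."""
        self._members[group].discard(port)

    def egress_ports(self, group: str, ingress_port: int) -> list:
        """Ports a stream for `group` is forwarded to, excluding its source port."""
        return sorted(self._members.get(group, set()) - {ingress_port})


if __name__ == "__main__":
    sw = SnoopingSwitch()
    sw.report(2, "239.1.1.1")
    sw.report(5, "239.1.1.1")
    print(sw.egress_ports("239.1.1.1", ingress_port=1))
```

In this simplified model a group with no reports is forwarded nowhere; actual devices may behave differently for unregistered groups, so treat that choice as an assumption of the sketch.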

Seamless Handover for Unidirectional Broadcast Access Networks in Mobile IPv6

Journal of Communications, Vol. 2, No. 6, November 2007, p. 46

Seamless Handover For Unidirectional Broadcast Access Networks In Mobile IPv6

Ilka Miloucheva, Jens Mödeker, Karl Jonas. Fraunhofer Institute, Schloss Birlinghoven, Sankt Augustin, Germany. Email: {ilka.miloucheva, jens.moedeker, karl.jonas}@fokus.fraunhofer.de

Dirk Hetzer. T-Systems, Goslarer Ufer, Berlin, Germany.

Abstract: Mechanisms and protocol interactions for seamless handover of mobile multicast/broadcast services using unidirectional access networks in heterogeneous Mobile IP infrastructures are discussed and proposed. QoS based applications, such as content delivery, mobile TV, carousel and reliable downloads, requiring interaction channel, are considered, as well as recent standardization efforts for converged broadcast and mobile IP infrastructures. The proposed mechanisms are aimed to support handover of interactive mobile services using unidirectional broadcast media (DVB-H) combined with bidirectional mobile access technologies (UMTS, WLAN,

- Intelligent access network selection for mobile nodes in converged heterogeneous infrastructures.

Recent standardization efforts focused on multimedia services and applications, such as IPDatacast [2] and DIMS [3], are aimed to support convergence of unidirectional broadcast and Mobile IP services. Different architectures have been proposed for cost efficient support of content delivery, mobile TV and other interactive mobile multicast applications using broadcast media. Examples are hybrid broadcast

Introduction to IP Multicast Routing

by Chuck Semeria and Tom Maufer

Abstract

The first part of this paper describes the benefits of multicasting, the Multicast Backbone (MBONE), Class D addressing, and the operation of the Internet Group Management Protocol (IGMP). The second section explores a number of different algorithms that may potentially be employed by multicast routing protocols:
- Flooding
- Spanning Trees
- Reverse Path Broadcasting (RPB)
- Truncated Reverse Path Broadcasting (TRPB)
- Reverse Path Multicasting (RPM)
- Core-Based Trees

The third part contains the main body of the paper. It describes how the previous algorithms are implemented in multicast routing protocols available today.
- Distance Vector Multicast Routing Protocol (DVMRP)
- Multicast OSPF (MOSPF)
- Protocol-Independent Multicast (PIM)

Introduction

There are three fundamental types of IPv4 addresses: unicast, broadcast, and multicast. A unicast address is designed to transmit a packet to a single destination. A broadcast address is used to send a datagram to an entire subnetwork. A multicast address is designed to enable the delivery of datagrams to a set of hosts that have been configured as members of a multicast group in various scattered subnetworks.

Multicasting is not connection oriented. A multicast datagram is delivered to destination group members with the same “best-effort” reliability as a standard unicast IP datagram. This means that a multicast datagram is not guaranteed to reach all members of the group, or arrive in the same order relative to the transmission of other packets. The only difference between a multicast IP packet and a unicast IP packet is the presence of a “group address” in the Destination Address field of the IP header.

IP Multicast

Data Communication & Networks, G22.2262-001, Session 10 - Main Theme: IP Multicast. Dr. Jean-Claude Franchitti, New York University, Computer Science Department, Courant Institute of Mathematical Sciences

Agenda
- Introduction to Multicast
- Multicast Addresses
- IP Multicast
- Reliable Multicast
- Pragmatic General Multicast (PGM)
- Reliable Multicast Protocol (RMP)
- Conclusion

Part I: Introduction to Multicast

Cast Definitions
- Unicast - send to one destination (198.122.15.20)
- General Broadcast - send to EVERY local node (255.255.255.255)
- Directed Broadcast - send to subset of nodes on LAN (198.122.15.255)
- Multicast - send to every member of a Group of “interested” nodes (Class D address)
- RFC 1112 (an easy read!)

Why Multicast, Why Not Unicast?
- Unicast: Many applications require same message sent to many nodes (10, 100, 1000, n)
- Same message transits network n times
- n messages requires n*(CPU time) as 1 message
- Need to deliver “timely” information
- Message arrives at node n >> node 1

Why Multicast, Why Not Broadcast?
- Broadcast: Send a copy to every machine on the net
- Simple, but inefficient
- All nodes “must” process the packet even if they don’t care
- Wastes more CPU cycles of slower machines (“broadcast radiation”)
- General broadcast cannot be routed
- Directed broadcast is limited in scope (to machines on same sub-net or same domain)

Multicast Applications
- News/sports/stock/weather updates
- Software distribution
- Video-conferencing, shared whiteboards
- Distributed interactive gaming or simulations
- Email distribution lists
- Database replication

IP Multicast - Concepts
- Message sent to multicast “group” of receivers
- Senders need not be group members
- Each group has a “group address”
- Groups can have any size
- End-stations (receivers) can join/leave at will
- Data Packets are UDP (uh oh!)

IP Multicast Benefits
- Distribution tree for delivery/distribution of packets (i.e., scope extends beyond LAN)
- Tree is built by multicast routing protocols.
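The cast definitions on these slides can be checked against their own example addresses with a short sketch. It relies on Python's standard `ipaddress` module; the function name `classify_destination` is hypothetical, and the /24 prefix for the 198.122.15.0 network is an assumption, since the slides do not state a mask.

```python
import ipaddress


def classify_destination(addr: str, local_subnet: str) -> str:
    """Classify an IPv4 destination as seen from a host on `local_subnet`.

    Hypothetical helper; category names follow the slide wording.
    """
    ip = ipaddress.ip_address(addr)
    net = ipaddress.ip_network(local_subnet)
    if ip.is_multicast:  # Class D range, 224.0.0.0/4
        return "multicast"
    if ip == ipaddress.ip_address("255.255.255.255"):
        return "general broadcast"
    if ip == net.broadcast_address:  # host bits all ones on the local subnet
        return "directed broadcast"
    return "unicast"


if __name__ == "__main__":
    subnet = "198.122.15.0/24"  # assumed prefix length
    for dest in ("198.122.15.20", "255.255.255.255", "198.122.15.255", "224.0.0.1"):
        print(dest, classify_destination(dest, subnet))
```

The address 224.0.0.1 is used only as a well-known Class D example (the all-hosts group); it does not appear on the slides.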

Digital Video Broadcasting (DVB); Generic Stream Encapsulation (GSE); Part 2: Logical Link Control (LLC)

DVB Document A116-2, June 2016

Contents
- Intellectual Property Rights
- Foreword
- Introduction
- 1 Scope
- 2 References
- 2.1 Normative references
- 2.2 Informative references
- 3 Symbols and abbreviations

Junos Multicast Routing (JMR)

COURSE LEVEL: Junos Multicast Routing (JMR) is an advanced-level course.

COURSE OVERVIEW: This two-day course is designed to provide students with detailed coverage of multicast protocols including Internet Group Management Protocol (IGMP), Protocol Independent Multicast-Dense Mode (PIM-DM), Protocol Independent Multicast-Sparse Mode (PIM-SM), Bidirectional PIM, and Multicast Source Discovery Protocol (MSDP). Through demonstrations and hands-on labs, students will gain experience in configuring and monitoring the Junos OS and monitoring device and protocol operations. This course utilizes Juniper Networks vMX Series devices for the hands-on component, but the lab environment does not preclude the course from being applicable to other Juniper hardware platforms running the Junos OS. The Juniper Networks vMX Series devices run Junos OS Release 16.2R1.6.

AUDIENCE: This course benefits individuals responsible for implementing, monitoring, and troubleshooting multicast components in a service provider’s network.

PREREQUISITES: Students should have basic networking knowledge and an understanding of the Open Systems Interconnection (OSI) model and the TCP/IP protocol suite. Students should also have working knowledge of security policies. Students should also attend the Introduction to the Junos Operating System (IJOS) and Junos Intermediate Routing (JIR) courses prior to attending this class.

OBJECTIVES
- Describe IP multicast traffic flow.
- Identify the components of IP multicast.
- Explain how IP multicast addressing works.
- Describe the need for reverse path forwarding (RPF) in multicast.
- Explain the role of IGMP and describe the available IGMP versions.
- Configure and monitor IGMP.
- Identify common multicast routing protocols.
- Explain the differences between dense-mode and sparse-mode protocols.

IPv6 Segment Routing for Multicast @ Comcast

John Jason Brzozowski, IETF 96 LISP WG

Why?
- Because of the topology and relative volume of traffic compared to unicast; there is no benefit to running IP Multicast in the Backbone/Regional area networks
  - RAN is a hub and spoke architecture: multicast traffic needs to be on all links to the hubs receiving it
  - BB is a sparse topology with multiple Tb/s links
- Multicast is a significant burden on
  - vendor silicon/code/testing
  - Comcast multi-vendor interoperability testing and Operations (estimates of 20% of silicon for bus/fabric chips)
- Multicast is an obvious, very beneficial choice to move out of the Underlay Network and into x86, Software and Application control

Simplicity
- The IPv6 header has the capability of adding Option Headers for specific functions
  - The Segment Routing Header (SRH) is one; it is only processed if the Router is the destination of the packet being processed
  - The function of the header is very similar to the Loose Source Route (LSR) function in IPv4; the intermediate IPV6 addresses are SID’s
  - There was an original Option Header defined in IPv6 for this function that was deprecated; SR brings back the function with a new Option Header definition.
- IPv6 SR SUPPORT IS NOT REQUIRED BY ANY ROUTER/SWITCH/DEVICE not identified as a SID!!!

[Diagram: IPv6 SR Solution, Source and CMTS support SR. Labels: x86 Source X::1; Packet: Source X::1, Dest Y::1, SRH SID1 FF:/8; DC/BB/CRAN Network Provides Unicast Path Protection; CMTS: Y::1; Packet on CMTS recv: Recognize last SID, Move SID to Dest, Bit set to strip SRH; CMTS recognizes IPV6 multicast dest and forwards as a normal multicast originated locally; CMTS process MLD Joins as normal; Clients send MLD Joins as normal; Packet: Source X::1, Dest FF:/8; Multicast Address: FF:/8]

[Diagram: IPv6 SR Solution, One Source, Multiple CMTS support. Labels: x86 Source X::1; DC/BB/CRAN Network Provides Unicast Path Protection; CMTS Y::1, CMTS Y::2, CMTS Y::3, CMTS Y::4]

Design Guide For: Streaming

Crestron Electronics, Inc. Streaming Design Guide

Crestron product development software is licensed to Crestron dealers and Crestron Service Providers (CSPs) under a limited non-exclusive, non transferable Software Development Tools License Agreement. Crestron product operating system software is licensed to Crestron dealers, CSPs, and end-users under a separate End-User License Agreement. Both of these Agreements can be found on the Crestron website at www.crestron.com/legal/software_license_agreement. The product warranty can be found at www.crestron.com/warranty. The specific patents that cover Crestron products are listed at patents.crestron.com.

Crestron, the Crestron logo, Capture HD, Crestron Studio, DM, and DigitalMedia are trademarks or registered trademarks of Crestron Electronics, Inc. in the United States and/or other countries. HDMI and the HDMI logo are either trademarks or registered trademarks of HDMI Licensing LLC in the United States and/or other countries. Hulu is either a trademark or registered trademark of Hulu, LLC in the United States and/or other countries. Netflix is either a trademark or registered trademark of Netflix, Inc. in the United States and/or other countries. Wi-Fi is either a trademark or registered trademark of Wi-Fi Alliance in the United States and/or other countries. Other trademarks, registered trademarks, and trade names may be used in this document to refer to either the entities claiming the marks and names or their products. Crestron disclaims any proprietary interest in the marks and names of others. Crestron is not responsible for errors in typography or photography.

This document was written by the Technical Publications department at Crestron.

Streaming Multicast Video over Software-Defined Networks

Kyoomars Alizadeh Noghani, M. Oğuz Sunay. Özyeğin University, Department of Computer Science and Electrical Engineering, 34794 Istanbul, Turkey

Abstract: Many of the video streaming applications in today's Internet involve the distribution of content from a CDN source to a large population of interested clients. However, widespread support of IP multicast is unavailable due to technical and economical reasons, leaving the floor to application layer multicast which introduces excessive delays for the clients and increased traffic load for the network. This paper is concerned with the introduction of an SDN-based framework that allows the network controller to not only deploy IP multicast between a source and subscribers, but also control, via a simple northbound interface, the distributed set of sources where multiple-description coded (MDC) video content is available. We observe that for medium to heavy network loads, relative to the state-of-the-art, the SDN-based streaming multicast video framework increases the PSNR of the received video significantly, from a level that is practically unwatchable to one that has good quality.

... the multicast tree between a source and all its subscribers using a control application running on the logically centralized SDN-controller that has a global network view. The programmable nature of SDN allows for immediate deployability, scalability, adaptability, and updatability - traits all previously associated with ALM and not IP multicast. In this paper, we present an IP multicast application running on the SDN controller that also keeps track of the subscription activities via a simple northbound interface and illustrate its performance advantage.

IP Multicast is an ideal approach to mitigate the traffic load generated by streaming video services. A more efficient delivery of the video packets reduces the congestion probability in the network, which in turn improves the

Multicast for Enterprise Video Streaming

WHITE PAPER: MULTICAST FOR ENTERPRISE VIDEO STREAMING. Protocols and Design Guide

This document provides a network equipment neutral, technical overview of multicast protocols and a discussion of techniques and best practices for implementing multicast video on an Enterprise Network.

© 2015 HARMAN, v.01.2015

CONTENTS

VIDEO STREAMING ON IP NETWORKS
- Multicast on Enterprise Networks
- Multicast Addressing
- Multicast on Layer 2
- IGMP
- IGMP Snooping
- Example
- Multicast on Layer 3
- PIM
- PIM Sparse Mode (PIM-SM)
- Multicast Session Example
- Other Relevant Network Configurations
- Spanning Tree
- Storm Control

IMPLEMENTING MULTICAST VIDEO STREAMING
- Network Preparation
- Best Practices
- Scenario 1 Enterprise IPTV
- Best Practices
- Scenario 2 Room Overflow
- Best Practices
- Scenario 3 Non-Routed Multicast

Digital Video Broadcasting (DVB); Generic Stream Encapsulation (GSE); Part 1: Protocol

ETSI TS 102 606-1 V1.2.1 (2014-07), TECHNICAL SPECIFICATION

Digital Video Broadcasting (DVB); Generic Stream Encapsulation (GSE); Part 1: Protocol

Reference: RTS/JTC-DVB-338-1

Keywords: broadcasting, DVB

ETSI, 650 Route des Lucioles, F-06921 Sophia Antipolis Cedex - FRANCE. Tel.: +33 4 92 94 42 00, Fax: +33 4 93 65 47 16. Siret N° 348 623 562 00017 - NAF 742 C. Association à but non lucratif enregistrée à la Sous-Préfecture de Grasse (06) N° 7803/88

Important notice

The present document can be downloaded from: http://www.etsi.org

The present document may be made available in electronic versions and/or in print. The content of any electronic and/or print versions of the present document shall not be modified without the prior written authorization of ETSI. In case of any existing or perceived difference in contents between such versions and/or in print, the only prevailing document is the print of the Portable Document Format (PDF) version kept on a specific network drive within ETSI Secretariat.

Users of the present document should be aware that the document may be subject to revision or change of status. Information on the current status of this and other ETSI documents is available at http://portal.etsi.org/tb/status/status.asp

If you find errors in the present document, please send your comment to one of the following services: http://portal.etsi.org/chaircor/ETSI_support.asp

Copyright Notification

No part may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying and microfilm except as authorized by written permission of ETSI.