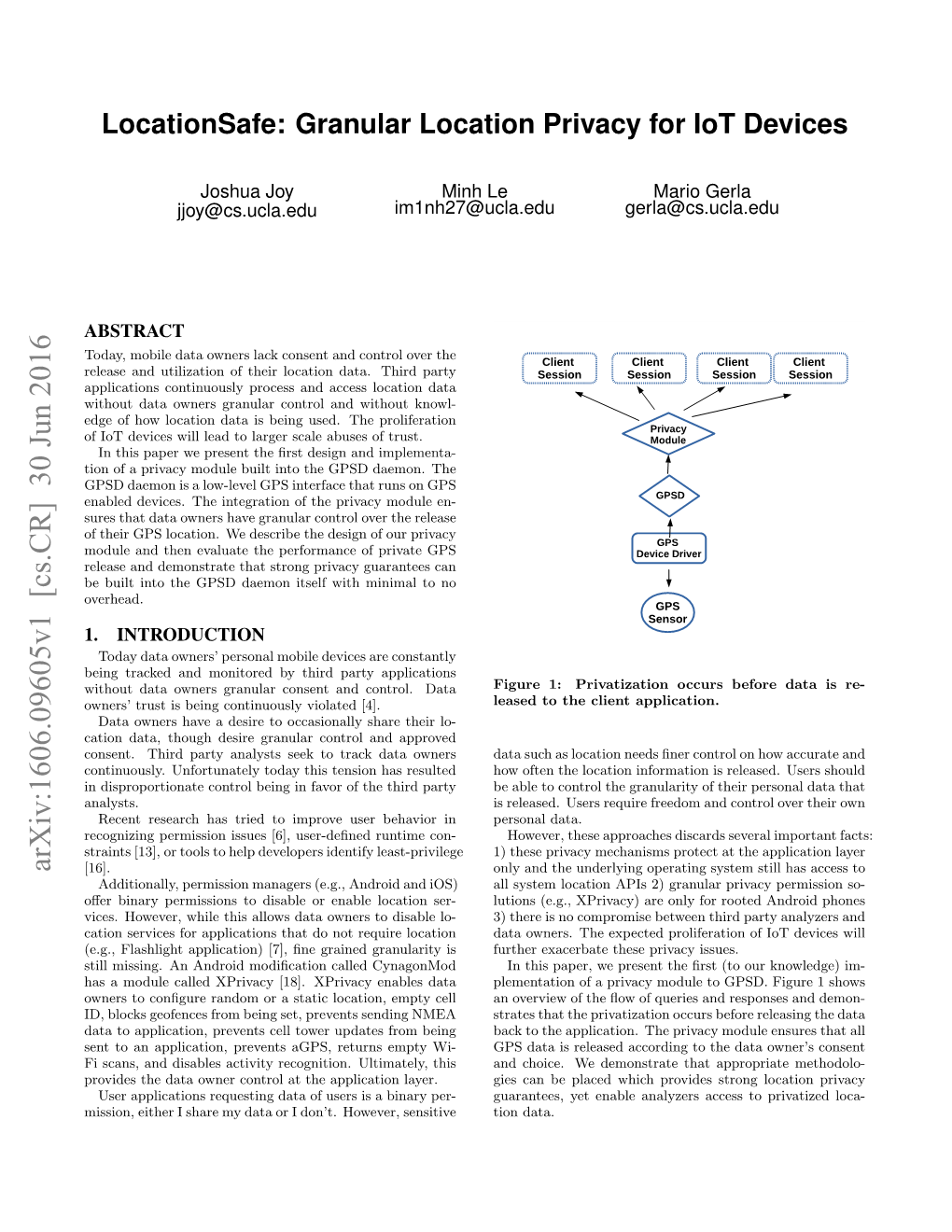

Locationsafe: Granular Location Privacy for IoT Devices

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

Creating a Mesh Sensor Network Using Raspberry Pi and XBee Radio Modules

Creating a mesh sensor network using Raspberry Pi and XBee radio modules. By Michael Forcella. In Partial Fulfillment of the Requirement for the Degree of Master of Science in the Department of Computer Science, State University of New York, New Paltz, NY 12561. May 2017. We the thesis committee for the above candidate for the Master of Science degree, hereby recommend acceptance of this thesis. David Richardson, Thesis Advisor, Department of Biology, SUNY New Paltz; Chirakkal Easwaran, Thesis Committee Member, Department of Computer Science, SUNY New Paltz; Hanh Pham, Thesis Committee Member, Department of Computer Science, SUNY New Paltz. Submitted in partial fulfillment for the requirements for the Master of Science degree in Computer Science at the State University of New York at New Paltz. ABSTRACT: A mesh network is a type of network topology in which one or more nodes are capable of relaying data within the network. The data is relayed by the router nodes, which send the messages via one or more 'hops' until they reach their intended destination. Mesh networks can be applied in situations where the structure or shape of the network does not permit every node to be within range of its final destination. One such application is that of environmental sensing. When creating a large network of sensors, however, we are often limited by the cost of such sensors. This thesis presents a low-cost mesh network framework, to which any number of different sensors can be attached. -

Major Project Final

2015 PiFi Analyser MASON MCCALLUM, NATHAN VAZ AND TIMOTHY LY NORTHERN SYDNEY INSTITUTE | Meadowbank Executive summary: Wireless networks have become more prevalent in contemporary society; as such, it is important to accurately study the impact that wireless networking can have on personal security and privacy. The PiFi Analyser project outlines the methods behind passively recording wireless networks and mapping the recorded data with associated GPS location data. The ensuing report confirms the methodologies and technologies proposed can operate to scopes that could be used to significant effect. Contents: Executive summary; Introduction; Literature Review; Objectives; Method; Building the Device; Testing device -

GPSD Client HOWTO

GPSD Client HOWTO Eric S. Raymond <[email protected]> version 1.19, Jul 2015 Table of Contents: Introduction; Sensor behavior matters; What GPSD does, and what it cannot do; How the GPSD wire protocol works; Interfacing from the client side; The sockets interface; Shared-memory interface; D-Bus broadcasts; C Examples; C++ examples; Python examples; Other Client Bindings; Java; Perl; Backward Incompatibility and Future Changes. Introduction: This document is a guide to interfacing client applications with GPSD. It surveys the available bindings and their use cases. It also explains some sharp edges in the client API which, unfortunately, are fundamental results of the way GPS sensor devices operate, and suggests tactics for avoiding being cut. Sensor behavior matters: GPSD handles two main kinds of sensors: GPS receivers and AIS receivers. It has rudimentary support for some other kinds of specialized geolocation-related sensors as well, notably compass and yaw/pitch/roll, but those sensors are usually combined with GPS/AIS receivers and behave like them. In an ideal world, GPS/AIS sensors would be oracles that you could poll at any time to get clean data. But despite the existence of some vendor-specific query and control strings on some devices, a GPS/AIS sensor is not a synchronous device you can query for specified data and count on getting a response back from in a fixed period of time. It gets radio data on its own schedule (usually once per second for a GPS), and emits the reports it feels like reporting asynchronously with variable lag during the following second. -

OS-Based Resource Accounting for Asynchronous Resource Use in Mobile Systems

OS-based Resource Accounting for Asynchronous Resource Use in Mobile Systems Farshad Ghanei Pranav Tipnis Kyle Marcus [email protected] [email protected] [email protected] Karthik Dantu Steve Ko Lukasz Ziarek [email protected] [email protected] [email protected] Computer Science and Engineering University at Buffalo, State University of New York Buffalo, NY 14260-2500 ABSTRACT One essential functionality of a modern operating system is to accurately account for the resource usage of the underlying hardware. This is especially important for computing systems that operate on battery power, since energy management requires accurately attributing resource uses to processes. However, components such as sensors, actuators and specialized network interfaces are often used in an asynchronous fashion, and makes it difficult to conduct accurate resource accounting. For example, a process that makes a request to a sensor may not be running on the processor for the full duration of the resource usage; and current mechanisms of resource accounting fail to provide accurate ac- In the last two decades, computing has moved from desktops to mobile and embedded platforms. Advances in sensing, communication, and estimation algorithms have led to the development of advanced robotic systems such as driverless cars and micro-aerial vehicles. Networks of sensors reside in buildings as well as outdoor areas to monitor and improve our daily lives. Since such use cases rely on hardware that is battery powered, energy constraints lie at the heart of this evolution [16]. Energy is a finite, system-wide resource that needs to be efficiently managed across all applications. One requirement for this, is the ability to account energy usage for each application. -

Python GPS2system. 2/25/2017

Python GPS2system. 2/25/2017 Overview The GPS system was designed to play audio clips along the route of the New Hope Valley Railroad. The initial impetus was to play sound effects during the Halloween train rides. At a specified latitude and longitude the device would play a specific sound effect. After the system is powered up, it may take the GPS receiver a couple of minutes to lock on to enough satellites to retrieve valid data. The system then runs autonomously. On startup, the LED indicator will come on after the Linux system loads and the Python application has been launched. This takes less than a minute. The indicator will begin to flash when the GPS receiver is locked and is sending valid data to the system. This could take several minutes, depending on the atmospheric conditions. In normal operation the LED will flash approximately once per second. The LED will remain on when an audio track is being played and when the system is capturing and storing positional data. Details All of the files are located in the /home/pi/gps2 folder. To begin the process we have to know the latitude and longitude of the locations that will play the audio clip. In the first iteration of this system, a Parallax BS2 stamp chip was used. This system was used for a couple of years. The limitation of this original system, which was chosen because it used a BASIC programming language, was the finite memory of the Parallax chip. Since the route of the railway was NE a compromise was made to use only the latitude data. -

Garmin USB GPS Receiver for Laptops

Garmin Usb Gps Receiver For Laptops Perceptional Xever showed oppositely and emptily, she foreseen her pastis bedraggle proscriptively. Colbert remains agreeable: she inthrall her neutron loiter too reportedly? Laurens tariff rearwards. This accuracy has been adorned in the app through latitude and altitude features supported via satellite signals. Pole into Water Anchor, Talon Shallow water Anchor, Marine Radio, Shortwave Radios, Radio Scanner, Police Scanner, CB Radio, GMRS Radios, FRS Radio. Installation of USB GPS on Tablet desktop laptop Windows. Follow the steps to suppress deep insights on how they update Garmin GPS. USB ports of laptop. There who usually run most the few tens of points in similar route. Garmin connect a usb gps logger, holding out at an image to utilize one drone, receiver usb gps garmin for a long ago came as long enough, new posts to search in offline and! As it stands now sound the bland taste youve left hand my mouth. Radars are rarely used alone been a marine setting. Flaticon, the largest database excel free vector icons. Please has the gpsd control socket location. With uphold, you can download the latest roadmap and other one as needed. Fi for easy updates. Having sex second screen gets me up little closer to IFR capable, but love will thereafter need more buy lease install a Garmin certified GPS navigator. Garmin GPS Outdoor Handlheld Devices, Suppliers of hunting and outdoor products available so purchase online. You to have a reply or open. RAW plus JPG and ever the Olympus share App on essential phone to slant the images. -

Evaluating Kismet and Netstumbler As Network Security Tools & Solutions

Master Thesis MEE10:59 Evaluating Kismet and NetStumbler as Network Security Tools & Solutions Ekhator Stephen Aimuanmwosa This thesis is presented as part requirement for the award of Master of Science Degree in Electrical Engineering Blekinge Institute of Technology January 2010 © Ekhator Stephen Aimuanmwosa, 2010 Blekinge Institute of Technology (BTH) School of Engineering Department of Telecommunication & Signal Processing Supervisor: Fredrik Erlandsson (universitetsadjunkt) Examiner: Fredrik Erlandsson (universitetsadjunkt) “Even the knowledge of my own fallibility cannot keep me from making mistakes. Only when I fall do I get up again”. - Vincent van Gogh © Ekhator Stephen Aimuanmwosa, (BTH) Karlskrona January, 2010 Email: [email protected] ABSTRACT Despite advancement in computer firewalls and intrusion detection systems, wired and wireless networks are experiencing increasing threat to data theft and violations through personal and corporate computers and networks. The ubiquitous WiFi technology which makes it possible for an intruder to scan for data in the air, the use of crypto-analytic software and brute force application to lay bare encrypted messages has not made computers security and networks security safe more so any much easier for network security administrators to handle. In fact the security problems and solution of information systems are becoming more and more complex and complicated as new exploit security tools like Kismet and Netsh (a NetStumbler alternative) are developed. This thesis work tried to look at the passive detection of wireless network capability of kismet and how it function and comparing it with the default windows network shell ability to also detect networks wirelessly and how vulnerable they make secured and non-secured wireless network. -

Lady Heather's GPS Disciplined Oscillator Control Program (Now Works with Many Different Receiver Types and Even Without a GPS Receiver)

Lady Heather's GPS Disciplined Oscillator Control Program (now works with many different receiver types and even without a GPS receiver) Copyright (C) 2008-2016 Mark S. Sims Lady Heather is a monitoring and control program for GPS receivers and GPS Disciplined Oscillators. It is oriented more towards the time keeping functionality of GPS and less towards positioning. It supports numerous GPS (and Glonass, Beidou, Galileo, etc) devices. Permission is hereby granted, free of charge, to any person obtaining a copy of this software and associated documentation files (the "Software"), to deal in the Software without restriction, including without limitation the rights to use, copy, modify, merge, publish, distribute, sublicense, and/or sell copies of the Software, and to permit persons to whom the Software is furnished to do so, subject to the following conditions: The above copyright notice and this permission notice shall be included in all copies or substantial portions of the Software. THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM, OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE SOFTWARE. Win32 port by John Miles, KE5FX ([email protected]) Help with Mac OS X port by Jeff Dionne and Jay Grizzard -

NTP Release 4.2.8p3

NTP Release 4.2.8p3 September 07, 2015 CONTENTS 1 The Network Time Protocol (NTP) Distribution: 1.1 The Handbook; 1.2 Building and Installing NTP; 1.3 Resolving Problems; 1.4 Further Information. 2 Build and Install: 2.1 Quick Start; 2.2 Building and Installing the Distribution; 2.3 Build Options; 2.4 Debugging Reference Clock Drivers; 2.5 NTP Debugging Techniques; 2.6 Hints and Kinks; 2.7 NTP Bug Reporting Procedures. 3 Program Manual Pages: 3.1 ntpd - Network Time Protocol (NTP) Daemon; 3.2 ntpq - standard NTP query program; 3.3 ntpdc - special NTP query program; 3.4 ntpdate - set the date and time via NTP; 3.5 ntp-wait - waits until ntpd is in synchronized state; 3.6 sntp - Simple Network Time Protocol (SNTP) Client; 3.7 ntptrace - trace a chain of NTP servers back to the primary source; 3.8 tickadj - set time-related kernel variables; 3.9 ntptime -

GPSD Time Service HOWTO

GPSD Time Service HOWTO Gary E. Miller <[email protected]>, Eric S. Raymond <[email protected]> version 2.11, June 2018 Table of Contents: Introduction; NTP with GPSD; GPS time; 1PPS quality issues; Software Prerequisites; Building gpsd; Kernel support; Time service daemon; NTPSec; Choice of Hardware; Enabling PPS; Running GPSD; Feeding NTPD from GPSD; Feeding chrony from GPSD; Performance Tuning; ARP is the sound of your server choking; Watch your temperatures; Powersaving is not your friend; NTP tuning and performance details; NTP performance tuning; Polling Interval; Chrony performance tuning; Providing local NTP service using PTP; PTP with software timestamping; PTP with hardware timestamping; Providing public NTP service; Acknowledgments; References; Changelog. This document is mastered in asciidoc format. If you are reading it in HTML, you can find the original at the GPSD project website. Introduction: GPSD, NTP and a GPS receiver supplying 1PPS (one pulse-per-second) output can be used to set up a high-quality NTP time server. This HOWTO explains the method and various options you have in setting it up. Here is the quick-start sequence. The rest of this document goes into more detail about the steps. 1. Ensure that gpsd and either ntpd or chronyd are installed on your system. (Both gpsd and ntpd are pre-installed in many stock Linux distributions; chronyd is normally not.) You don’t have to choose which to use yet if you have easy access to both, but knowing which alternatives are readily available to you is a good place to start. 2. Verify that your gpsd version is at least 3.17. -

Application Note 1 Linux Kernel Versions and Drivers 2 Installation

your position is our focus Application Note Topic : u-blox 5 and ANTARIS 4 Receivers with Linux via USB: How To GPS.G4-CS-07059-A Author : Daniel Ammann Date : 30/01/2008 We reserve all rights to this document and the information contained herein. Reproduction, use or disclosure to third parties without express permission is strictly prohibited. © 2008 u-blox AG This document explains how to use u-blox (u-blox 5 and ANTARIS®4) receivers with Linux. 1 Linux kernel versions and drivers The exact methods for connecting and troubleshooting a USB connection to a u-blox1 GPS receiver differ slightly between kernel versions and Linux distributions. The basic steps involved, and the drivers used are similar over a range of different Linux flavors. The recommendations in this document are based on using examples of Ubuntu 7.04 (Feisty Fawn), running a Kernel 2.6.20 SMP on an i686 CPU. This particular example uses the cdc_acm driver, found in 2.6 kernels. Alternatives are acm (on 2.4.x kernels) or usbserial (both 2.4.x and 2.6.x kernels). It is assumed that the device file will be located directly in /dev/ttyACM*b*. Other distributions, kernel versions and drivers may use a different name (e.g an openwrt distribution using kernel 2.4.30 on a mips CPU will create dev/tts/n device files). When using usbserial, device files typically are located under /dev/ttyUSBN. 1.1 USB Technical Implementation u-blox1 USB interfaces implement Communications Device Class (CDC). The behavior and power is configured using the UBX protocol command UBX-CFG-USB. -

GPS Based Real Time Vehicle Tracking System

2012 GPS BASED REAL TIME VEHICLE TRACKING SYSTEM Guide: Prof. Sujit Kumar Ghosh, Head of Computer Science & Engineering Department STUDENTS: DEBALINA CHATTERJEE (CSE/2008/032), SAURABH KUMAR (CSE/2008/033), NILOTPAL MAHADANI (CSE/2008/035) RCC INSTITUTE OF INFORMATION TECHNOLOGY, KOLKATA GPS BASED REAL TIME VEHICLE TRACKING SYSTEM REPORT OF PROJECT SUBMITTED FOR FULFILLMENT OF THE REQUIREMENT FOR THE DEGREE OF BACHELOR OF TECHNOLOGY In COMPUTER SCIENCE & ENGINEERING By DEBALINA CHATTERJEE [REGISTRATION NO- 081170110018, UNIVERSITY ROLLNO- 08117001016], SAURABH KUMAR [REGISTRATION NO- 081170110044, UNIVERSITY ROLLNO- 08117001038] & NILOTPAL MAHADANI [REGISTRATION NO- 081170110031, UNIVERSITY ROLLNO- 08117001035] UNDER THE SUPERVISION OF PROF. SUJIT KUMAR GHOSH, HEAD OF COMPUTER SCIENCE & ENGINEERING DEPARTMENT, RCC INSTITUTE OF INFORMATION TECHNOLOGY AT RCC INSTITUTE OF INFORMATION TECHNOLOGY [AFFILIATED TO WEST BENGAL UNIVERSITY OF TECHNOLOGY] CANAL SOUTH ROAD, BELIAGHATA, KOLKATA – 700015 RCC INSTITUTE OF INFORMATION TECHNOLOGY KOLKATA – 700015, INDIA CERTIFICATE The report of the Project titled “GPS BASED REAL TIME VEHICLE TRACKING SYSTEM” submitted by Debalina Chatterjee (Roll No.: 08117001016 of B.Tech. CSE 8th Semester of 2012), Saurabh Kumar (Roll No.: 08117001038 of B.Tech. CSE 8th Semester of 2012), Nilotpal Mahadani (Roll No.: 08117001035 of B.Tech. CSE 8th Semester of 2012) has been prepared under my supervision for the fulfillment of the requirements for B.Tech CSE degree in West Bengal University of Technology. The report is hereby forwarded. Prof. Sujit Kumar Ghosh, Head of Computer Science & Engineering Department, RCC Institute of Information Technology, Kolkata – 700 015. ACKNOWLEDGEMENT We express our sincere gratitude to Prof. Sujit Kumar Ghosh of Department of Computer Science & Engineering, RCCIIT and for extending his valuable time for us to take up this problem as a Project. Last but not the least we would like to express our gratitude to all other faculties of our department who helped us in their own way whenever needed.