Fifth Generation

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

The Use of Social Media in Risk and Crisis Communication”

OECD Working Papers on Public Governance No. 24 The Use of Social Media Cécile Wendling, in Risk and Crisis Jack Radisch, Communication Stephane Jacobzone https://dx.doi.org/10.1787/5k3v01fskp9s-en WORKING PAPER “THE USE OF SOCIAL MEDIA IN RISK AND CRISIS COMMUNICATION” CÉCILE WENDLING, JACK RADISCH, STEPHANE JACOBZONE ABSTRACT This report highlights the changing landscape of risk and crisis communications and in particular how social media can be a beneficial tool, but also create challenges for crisis managers. It explores different practices of risk and crisis communications experts related to the use of social media and proposes a framework for monitoring the development of practices among countries in the use of social media for risk and crisis communications. The three step process spans passive to dynamic use of social media, and provides governments a self-assessment tool to monitor and track progress in the uptake of effective use of social media by emergency services or crisis managers. 2 NOTE BY THE SECRETARIAT This report addresses risk and crisis communications, a core policy area for the High Level Risk Forum. It draws on the outcome of the discussions held at a workshop organized jointly by the OECD and the International Risk Governance Council (IRGC), in Geneva (Switzerland), on 29 June 2012 on the theme of ''Risk and crisis communication: the challenges of social media''. This workshop gathered participants from 12 OECD countries, think tanks, academia, the private sector and international organizations to discuss the challenges that emergency services and public relations managers confront in relation to the emergence of social media. -

Effective Communication”

Team – 184 San Academy, Pallikaranai PROBLEM CHOSEN “EFFECTIVE COMMUNICATION” STUDENTS NAMES Rakshan K.K Sam Rozario D Sachin U.S Saifudeen S SAN ACADEMY, PALLIKARANAI TEAM - 184 PREPARING PPT As a presenting tool our students used PPT to show the evolution of communication from cave age to modern age .Here are some glimpse of the PPT slide. THE SMOKE SIGNAL It is one of the oldest forms of long-distance communication It is a form of visual communications used over long distances In Ancient China, soldiers stationed along the Great Wall would alert each other of impending enemy attack by signaling from tower to tower In this way they were able to transmit a message as far away as 750 Km( 470 mi ) in just a few hours ELECTRICAL TELEGRAPH It is a telegraph that uses electrical signals, usually conveyed via telecommunications lines or radio. The electromagnetic telegraph is a device for human to human transmission of coded text messages It is the first form of electrical telecommunication Later this networks permitted people and commerce to almost instantly transmit messages across both continents and oceans PIGEON POST It is the use of homing pigeons to carry messages Pigeons were effective as messengers due to their natural homing abilities The pigeons were transported to a destination in cages, where they would be attached with messages , then naturally the pigeon would fly back to its home where the owner reads the mail Pigeons have been used to great effect in military situations SALE AND MARKETING SAN ACADEMY, PALLIKARANAI TEAM - 184 To develop a clarity and focus on what need to be done, students made a plan to promote some textbook. -

Battle Management Language: History, Employment and NATO Technical Activities

Battle Management Language: History, Employment and NATO Technical Activities Mr. Kevin Galvin Quintec Mountbatten House, Basing View, Basingstoke Hampshire, RG21 4HJ UNITED KINGDOM [email protected] ABSTRACT This paper is one of a coordinated set prepared for a NATO Modelling and Simulation Group Lecture Series in Command and Control – Simulation Interoperability (C2SIM). This paper provides an introduction to the concept and historical use and employment of Battle Management Language as they have developed, and the technical activities that were started to achieve interoperability between digitised command and control and simulation systems. 1.0 INTRODUCTION This paper provides a background to the historical employment and implementation of Battle Management Languages (BML) and the challenges that face the military forces today as they deploy digitised C2 systems and have increasingly used simulation tools to both stimulate the training of commanders and their staffs at all echelons of command. The specific areas covered within this section include the following: • The current problem space. • Historical background to the development and employment of Battle Management Languages (BML) as technology evolved to communicate within military organisations. • The challenges that NATO and nations face in C2SIM interoperation. • Strategy and Policy Statements on interoperability between C2 and simulation systems. • NATO technical activities that have been instigated to examine C2Sim interoperation. 2.0 CURRENT PROBLEM SPACE “Linking sensors, decision makers and weapon systems so that information can be translated into synchronised and overwhelming military effect at optimum tempo” (Lt Gen Sir Robert Fulton, Deputy Chief of Defence Staff, 29th May 2002) Although General Fulton made that statement in 2002 at a time when the concept of network enabled operations was being formulated by the UK and within other nations, the requirement remains extant. -

Statement of Terms and Conditions

APPENDIX PRICING – AM-MI PAGE 1 OF 7 AM-MI/SMOKE SIGNAL COMMUNICATIONS 060502 APPENDIX-PRICING APPENDIX PRICING – AM-MI PAGE 2 OF 7 AM-MI/SMOKE SIGNAL COMMUNICATIONS 060502 TABLE OF CONTENTS 1. INTRODUCTION............................................................................................................... 3 2. RECURRING CHARGES ................................................................................................. 4 3. NON-RECURRING CHARGES ....................................................................................... 5 4. UNBUNDLED LOCAL SWITCHING (ULS) .................................................................. 5 5. BILLING ............................................................................................................................. 6 6. APPLICABILITY OF OTHER RATES, TERMS AND CONDITIONS....................... 6 APPENDIX PRICING – AM-MI PAGE 3 OF 7 AM-MI/SMOKE SIGNAL COMMUNICATIONS 060502 APPENDIX PRICING 1. INTRODUCTION 1.1 This Appendix sets forth the pricing terms and conditions for the applicable SBC Communications Inc. (SBC) owned Incumbent Local Exchange Carrier (ILEC) identified in 1.2 below. The rate table included in this Appendix is divided into the following five categories: Unbundled Network Elements (UNEs), Resale, Other (Resale), Other and Reciprocal Compensation. These categories are for convenience only and shall not be construed to define or limit any of the terms herein or affect the meaning or interpretation of this Agreement. 1.2 SBC Communications Inc. (SBC) means -

A Framework for Evaluating Telemedicine-Based Healthcare Inequality Reduction in Ethiopia: a Grounded Theory Approach

A Framework for Evaluating Telemedicine-Based Healthcare Inequality Reduction in Ethiopia: A Grounded Theory Approach by MEKONNEN WAGAW TEMESGEN submitted in accordance with the requirements for the degree of DOCTOR OF PHILOSOPHY in the subject INFORMATION SYSTEMS at the UNIVERSITY OF SOUTH AFRICA Supervisor: Prof HH Lotriet Mentor: Prof Isabella Venter OCTOBER, 2019 ii Declaration Name: Mekonnen Wagaw Temesgen Student number: 4724-575-1 Degree: Doctor of Philosophy in Information Systems Thesis title: A Framework for Evaluating Telemedicine-Based Healthcare Inequality Reduction in Ethiopia: A Grounded Theory Approach I declare that the above thesis is my own work and that all the sources that I have used or quoted have been indicated and acknowledged by means of complete references. I further declare that I submitted the thesis to originality checking software and that it falls within the accepted requirements for originality. I further declare that I have not previously submitted this work, or part of it, for examination at Unisa for another qualification or at any other higher education institution. __________________________ _____October 25, 2019______ Signature Date iii Acknowledgement The author would like thank the following people and institutes: First and foremost, I would like to thank the God almighty for making my dream come true. My first gratitude goes to my supervisor, Prof HH Lotriet, for his guidance and support throughout the study. Thank you very much for your prompt constructive comments and feedbacks. I wish to express my appreciation to my mentor, Prof Isabella Venter, for her support and comments. Thank you very much for supporting me and shaping the thesis. -

~//" ACCEPTED for PROCESSING - 2019 December 2 1:00 PM - SCPSC - 1999-315-C - Page 2 of 44 I: E COMMISSION 1999 IV 4 E SHMCE JUL'l1 PUSUC EC ACCEPTED C

ACCEPTED S. C. PtIUC SEttVCE COMMISSION GTE Telephone Operations FOR Nations Bank Tower 1301 Gervais Street Suite 625 PROCESSING Columbia, South Carolina 29201 ssttytt B03 254-5736 C. Pl!Sl tC ccqincp rnvtr ~c nu 1999 July I, - 2019 Mr. Gary E. Walsh December Executive Director L ~ The Public Service Commission P. O. Drawer 11649 29211 Columbia, SC 2 1:00 RE; Resale Agreement between GTE South Incorporated and Choctaw Communications, Inc. d/b/a Smoke Signal Communications (" Smoke Signal") PM - Dear Mr. Walsh: SCPSC Enclosed you will find three copies of the above referenced agreement which is being filed with your office for consideration and approval. I am also forwarding a copy of - 1999-315-C same as an electronic file. Should you have any questions concerning this matter please do not hesitate to contact my office. Very truly yours, - Page 1 of STAN J. BUGNER 44 State Director, Government Affairs c: Ms. Maria J. HHanle, Smoke Signal ) ~//" f0* A part of GTE Corporation ACCEPTED ACCEPTED FOR LpECE1VEC. PUSUC SHMCE COMMISSION PROCESSING JUL'L1 4 1999 I: RESALE AGREEMENT EC E IV E - BETWEEN 2019 December GTE SOUTH INCORPORATED 2 AND 1:00 PM - CHOCKTAW COMNIUNICATIONS, INC. SCPSC d/b/a SMOKE SIGNAL COMMUNICATIONS - 1999-315-C - Page 2 of 44 SMOKESIG SC42SWS.DOC -289 ACCEPTED TABLE CONTENTS OF FOR ARTICLE I: SCOPE AND INTENT OF AGREEMENT. PROCESSING ARTICLE II: DEFINITIONS .. General Definitions ..... 11-1 1.1 Act .....11-1 1.2 Applicabfik Law. ..... II- 1 1.3 Asks Transfer (AIT) . .... II- 1 1.4 Basic Local Exchange Service. -

Case 20-32299-KLP Doc 208 Filed 06/01/20 Entered 06/01/20 16

Case 20-32299-KLP Doc 208 Filed 06/01/20 Entered 06/01/20 16:57:32 Desc Main Document Page 1 of 137 Case 20-32299-KLP Doc 208 Filed 06/01/20 Entered 06/01/20 16:57:32 Desc Main Document Page 2 of 137 Exhibit A Case 20-32299-KLP Doc 208 Filed 06/01/20 Entered 06/01/20 16:57:32 Desc Main Document Page 3 of 137 Exhibit A1 Served via Overnight Mail Name Attention Address 1 Address 2 City State Zip Country Aastha Broadcasting Network Limited Attn: Legal Unit213 MezzanineFl Morya LandMark1 Off Link Road, Andheri (West) Mumbai 400053 IN Abs Global LTD Attn: Legal O'Hara House 3 Bermudiana Road Hamilton HM08 BM Abs-Cbn Global Limited Attn: Legal Mother Ignacia Quezon City Manila PH Aditya Jain S/O Sudhir Kumar Jain Attn: Legal 12, Printing Press Area behind Punjab Kesari Wazirpur Delhi 110035 IN AdminNacinl TelecomunicacionUruguay Complejo Torre De Telecomuniciones Guatemala 1075. Nivel 22 HojaDeEntrada 1000007292 5000009660 Montevideo CP 11800 UY Advert Bereau Company Limited Attn: Legal East Legon Ars Obojo Road Asafoatse Accra GH Africa Digital Network Limited c/o Nation Media Group Nation Centre 7th Floor Kimathi St PO Box 28753-00100 Nairobi KE Africa Media Group Limited Attn: Legal Jamhuri/Zaramo Streets Dar Es Salaam TZ Africa Mobile Network Communication Attn: Legal 2 Jide Close, Idimu Council Alimosho Lagos NG Africa Mobile Networks Cameroon Attn: Legal 131Rue1221 Entree Des Hydrocarbures Derriere Star Land Hotel Bonapriso-Douala Douala CM Africa Mobile Networks Cameroon Attn: Legal BP12153 Bonapriso Douala CM Africa Mobile Networks Gb, -

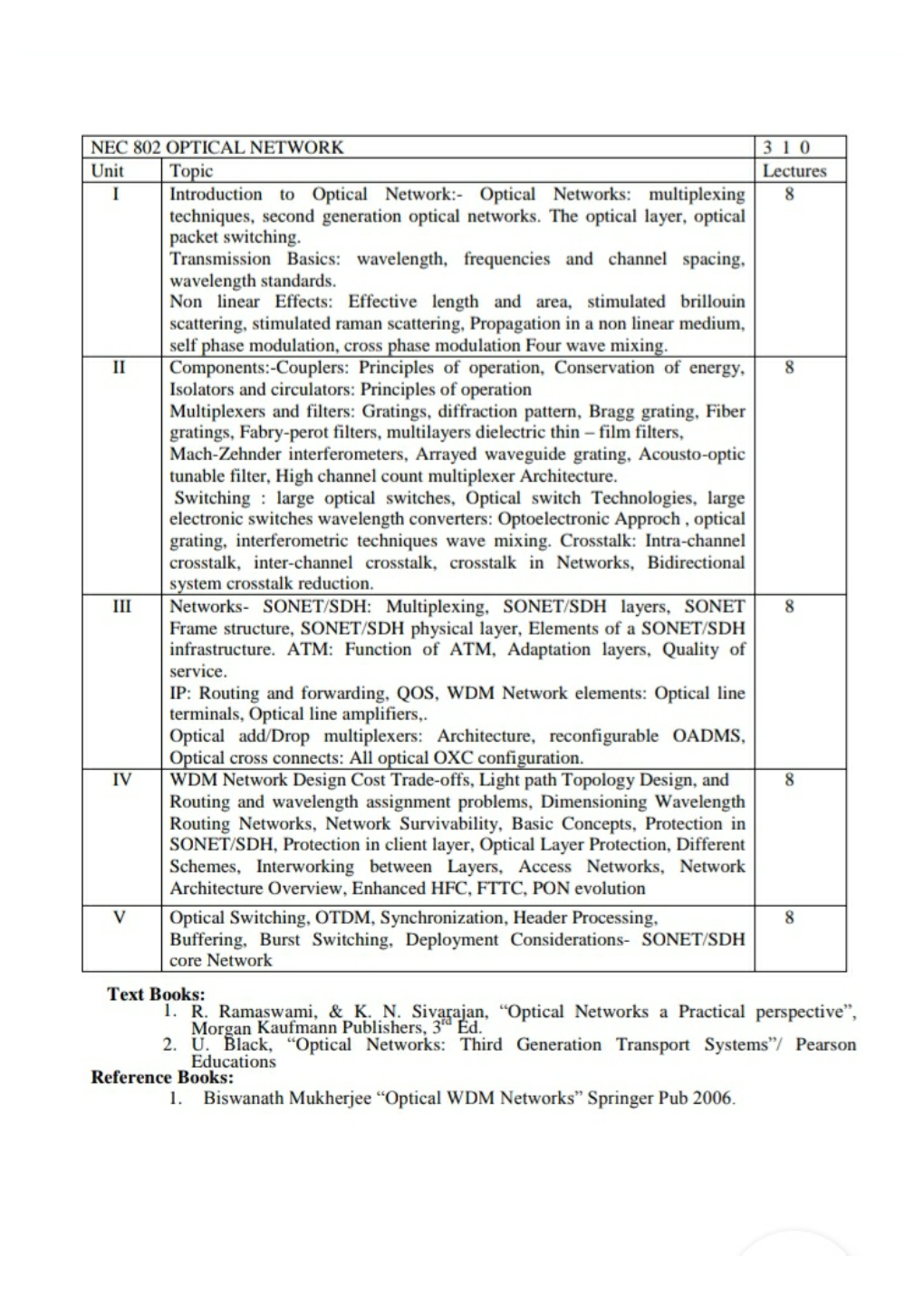

Optical Communication: Its History and Recent Progress Govind P

177 8 Optical Communication: Its History and Recent Progress Govind P. Agrawal 8.1 Historical Perspective – 178 8.2 Basic Concepts Behind Optical Communication – 181 8.2.1 Optical Transmitters and Receivers – 181 8.2.2 Optical Fibers and Cables – 182 8.2.3 Modulations Formats – 184 8.2.4 Channel Multiplexing – 185 8.3 Evolution of Optical Communication from 1975 to 2000 – 187 8.3.1 The First Three Generations – 187 8.3.2 The Fourth Generation – 188 8.3.3 Bursting of the Telecom Bubble in 2000 – 190 8.4 The Fifth Generation – 191 8.5 The Sixth Generation – 192 8.5.1 Capacity Limit of Single-Mode Fibers – 193 8.5.2 Space-Division Multiplexing – 194 8.6 Worldwide Fiber-Optic Communication Network – 195 8.7 Conclusions – 197 References – 198 G.P. Agrawal (*) The Institute of Optics, University of Rochester, Rochester NY 14627, USA e-mail: [email protected] © The Author(s) 2016 M.D. Al-Amri et al. (eds.), Optics in Our Time, DOI 10.1007/978-3-319-31903-2_8 178 G.P. Agrawal 8.1 Historical Perspective The use of light for communication purposes dates back to antiquity if we interpret optical communication in a broad sense, implying any communication scheme that makes use of light. Most civilizations have used mirrors, fire beacons, or smoke signals to convey a single piece of information (such as victory in a war). For example, it is claimed that the Greeks constructed in 1084 B.C. a 500-km-long line of fire beacons to convey the news of the fall of Troy [1]. -

International Code of Signals

PUB. 102 INTERNATIONAL CODE OF SIGNALS FOR VISUAL, SOUND, AND RADIO COMMUNICATIONS UNITED STATES EDITION 1969 Edition (Revised 2003) NATIONAL IMAGERY AND MAPPING AGENCY PUB. 102 International Code of Signals As adopted by the Fourth Assembly of the Inter-Governmental Maritime Consultative Organization in 1965 For Visual, Sound, and Radio Communications United States Edition, 1969 (Revised 2003) Prepared and published by the NATIONAL IMAGERY AND MAPPING AGENCY Bethesda, Maryland © COPYRIGHT 2003 BY THE UNITED STATES GOVERNMENT NO COPYRIGHT CLAIMED UNDER TITLE 17 U.S.C. For sale by the Superintendant of Documents, U.S. Government Printing Office Internet: bookstore.gpo.gov Phone: toll free (866) 512-1800; DC area (202) 512-1800 Fax: (202) 512-2250 Mail Stop: SSOP, Washington, DC 20402-0001 PREFACE Pub 102, the 1969 edition of the International Code of Signals, became effective on 1 April 1969, and at that time superseded H.O. Pubs. 103 and 104, International Code of Signals, Volumes I and II. All signals are contained in a single volume suitable for all methods of communication. The First International Code was drafted in 1855 by a Committee set up by the British Board of Trade. It contained 70,000 signals using eighteen flags and was published by the British Board of Trade in 1857 in two parts; the first containing universal and international signals and the second British signals only. The book was adopted by most seafaring nations. This early edition was revised by a Committee set up in 1887 by the British Board of Trade. The Committee’s proposals were discussed by the principal maritime powers and at the International Conference in Washington in 1889. -

WST-600 AUDIO DETECTOR Installation Manual & Users Guide SPECIFICATIONS

TM WST-600 AUDIO DETECTOR US Patent # 9,087,447 Installation Manual & Users Guide SPECIFICATIONS Frequency: 433.92MHz Operating Temperature: 32°-120°F (0°-49°C) Battery: One 3Vdc lithium CR123A (1550 mAh) Operating Humidity: 5-95% RH non condensing Battery life: 4 years Compatible with 433MHz DSC Security Systems Detection distance: 6 in max Supervisory signal interval: 64 min(approx.) OPERATION The FireFighter™ sensor is designed to listen to any smoke, carbon or combo detector. Once confirmed as an alarm, it will transmit a signal to the alarm control panel which if connected to a central monitoring station, will dispatch the fire department. ENROLLING Follow the instructions provided by your control panel, or wireless receiver manual. The 6 digit serial number is printed on back of each device. To monitor smoke alarms, when prompted by the panel, enter the 6 digit serial number as printed on the device. Use either zone type 88 ( Standard 24 hour fire) or type 87 (Delayed 24 hour fire). Remember to set the wireless bit (bit 8) for that zone attribute. To monitor a CO detector, take the printed serial number and replace the first digit with an 8. For example if the number printed is 436A48, you would use 836A48 as the serial number for the CO portion. Use zone type 81 (24 hour Carbon Monoxide). MOUNTING (see IMAGE: 2) Included with this device is a mounting bracket, hardware and double sided tape. To ensure proper operation ensure the side of the device with the small holes is directly facing the sounder holes on the smoke detector. -

Radio Science Bulletin Staff

INTERNATIONAL UNION UNION OF RADIO-SCIENTIFIQUE RADIO SCIENCE INTERNATIONALE ISSN 1024-4530 Bulletin No 360 March 2017 Radio Science URSI, c/o Ghent University (INTEC) St.-Pietersnieuwstraat 41, B-9000 Gent (Belgium) Contents Radio Science Bulletin Staff ....................................................................................... 4 URSI Offi cers and Secretariat.................................................................................... 7 Editor’s Comments ..................................................................................................... 9 Special Section: The Best Papers from the EMTS 2016 Young Scientist Awards 11 Sub-Voxel Refi nement Method for Tissue Boundary Conductivities in Volume Conductor Models ................................................................................... 13 How Kerr Nonlinearity Infl uences Polarized Electromagnetic Wave Propagation ................................................................................................. 19 Sum-Frequency and Second-Harmonic Generation from Plasmonic Nonlinear Nanoantennas ...................................................................................... 43 Hornet Biological Radar for Detection, Tracking, Direction Finding, and Long Distance Communication: Is This Possible? ............................................. 50 Foreword to Radio Science for Humanity: URSI-France 2017 Workshop ......... 60 URSI France 2017 Workshop on Radio Science for Humanity ............................ 62 Journées scientifi ques URSI-France 2017 -

The Denver Catholic Register World Awaits the White Smoke

THE DENVER CATHOLIC REGISTER. Wed.. Auauel 3.T ISTB ---- i e The Denver Catholic Register WEDNESDAY, AUGUST23, 1978 VOL. LIV NO. 1 Colorado's Largest Weekly 20 PAGES 25 CENTS PER COPY a ! i \ v^. THE HOLY SPIRIT MOVES IN OQ THE CONCLAVE O y-h World Awaits the White Smoke By John Muthig where their peers stand on key issues and what they Papal election rules say that cells must be chosen VATICAN CITY (NC) — As hundreds of the have been up to in their own regions. by lot. curious stream past Pope Paul’s simple tomb below St. Peter's, the College of Cardinals has already U.S. Cardinals Absolute Secrecy unofficially begun electing his successor. All eight U.S. cardinals who will enter the All cardinals are sworn to absolute secrecy, not The commandant of the Swiss Guards and a small conclave —• U.S. Cardinal John Wright, prefect of the only about what goes on in the conclave but also about group of Vatican officials will not seal the oak Vatican Congregation for the Clergy, officially the general congregations. conclave doors officially until 5 p.m. Aug. 25, but the informed fellow cardinals by telegram Aug. 14 that he Each had to take the following oath in the presence cardinals during their daily meetings in baroque, cannot attend for health reasons — are participating of his fellow cardinals: frescoed halls near the basilica have already begun the daily in the meetings (called general congregations) of “We cardinals of the Holy Roman Church ... key process of getting to know one another and sizing the college.