Bayesian Methods and Applications Using Winbugs

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Captain Cool: the MS Dhoni Story

Captain Cool The MS Dhoni Story GULU Ezekiel is one of India’s best known sports writers and authors with nearly forty years of experience in print, TV, radio and internet. He has previously been Sports Editor at Asian Age, NDTV and indya.com and is the author of over a dozen sports books on cricket, the Olympics and table tennis. Gulu has also contributed extensively to sports books published from India, England and Australia and has written for over a hundred publications worldwide since his first article was published in 1980. Based in New Delhi from 1991, in August 2001 Gulu launched GE Features, a features and syndication service which has syndicated columns by Sir Richard Hadlee and Jacques Kallis (cricket) Mahesh Bhupathi (tennis) and Ajit Pal Singh (hockey) among others. He is also a familiar face on TV where he is a guest expert on numerous Indian news channels as well as on foreign channels and radio stations. This is his first book for Westland Limited and is the fourth revised and updated edition of the book first published in September 2008 and follows the third edition released in September 2013. Website: www.guluzekiel.com Twitter: @gulu1959 First Published by Westland Publications Private Limited in 2008 61, 2nd Floor, Silverline Building, Alapakkam Main Road, Maduravoyal, Chennai 600095 Westland and the Westland logo are the trademarks of Westland Publications Private Limited, or its affiliates. Text Copyright © Gulu Ezekiel, 2008 ISBN: 9788193655641 The views and opinions expressed in this work are the author’s own and the facts are as reported by him, and the publisher is in no way liable for the same. -

December 2019 and March 2020 Graduation Program

GRADUATION PROGRAM DECEMBER 2019 AND MARCH 2020: CONFERRING OF DEGREES AND GRANTING OF DIPLOMAS AND CERTIFICATES. December 2019: Flemington Racecourse, Grandstand, Epsom Road, Flemington VIC. March 2020: Victoria University, Footscray Park. #vualumni #vicunigrads vu.edu.au

TABLE OF CONTENTS: Our Value Proposition to our Students and the Community; A Message from the Chancellor; A Message from the Vice-Chancellor and President; 100 years of opportunity and success; At VU, family is everything; University Senior Executives; Acknowledgement of Country; The University Mace – An Established Tradition; Academic Dress; Welcome to the Alumni Community; Social Media; Graduates; College of Arts and Education; Victoria University Business School; College of Engineering and Science; College of Health and Biomedicine; College of Law and Justice; College of Sport and Exercise Science; VU College; VU Research; University Medals for Academic Excellence; University Medals for Academic Excellence in Research Training; Companion of the University; Honorary Graduates of the University 1987–2019.

OUR VALUE PROPOSITION TO OUR STUDENTS AND THE COMMUNITY. Victoria University (VU) aims to be a great university of the 21st century by being inclusive rather than exclusive. We will provide exceptional value to our diverse community of students by guiding them to achieve their career aspirations through personalised, flexible, well-supported and industry relevant learning opportunities. Achievement will be demonstrated by our students’ and graduates’ employability and entrepreneurship. The applied and translational research conducted by our staff and students will enhance social and economic outcomes in our heartland communities of the West of Melbourne and beyond.

The Virtual Wisdener

No 29, January 26 2021. The Virtual Wisdener: The Newsletter of the Wisden Collectors’ Club.

A big apology for the delay in getting this edition out, my plan was to send it in the first week in January but things have been a smidgen hectic. As you can see on the right hand side there is an updated Wisdenworld website going live tomorrow. I have also decided that the ongoing frustrations with the Wisden Collectors’ Club website have become a real nuisance so a new site will be up and coming, hopefully by early March. But there are far more interesting things going on. The Australia v India series. India after being skittled out for 36 in the first Test bounce back to win the series, what a recovery and especially without Kohli. Sadly the match reports in the newspapers of the last few days of the final Test have highlighted once again that Australian cricketers still have certain things that need addressing, like a lack of manners, a disregard for fairness and a bully-boy attitude

Wisdenworld Website (www.wisdenworld.co.uk): Back in 2019 I decided to have a new Wisdenworld website built - well after 15 months, lots of delays and a design company that want to discuss everything from what brand of tea to have during our zoom meeting to what size font I want on my name…at last it is almost ready to go live. It is fundamentally the same, but just a little brighter and, hopefully, easier to use, along with some added features. In fact, it should be up and running by tomorrow - Wednesday, the 27th - the existing site is very much still active and working fine and it will do so until then.

Manpower Pvt Ltd 114320544 G Enterprise Rentals Pvt Ltd

TIN Name 114440175 AMIRO INVEST: SECUR: & MANPOWER PVT LTD 114320544 G ENTERPRISE RENTALS PVT LTD 422251464 GUNAWARDHANA W K J 693630126 INDUKA K B A 722623517 JANATH RAVINDRA W P M 673371825 NUGEGODA M B 291591310 RATHNAYAKE W N 537192461 SITHY PATHUMMA M H MRS 409248160 STAR ENTERPRISES 568003977 WEERASINGHE L D A 134009080 1 2 4 DESIGNS LTD 114287954 21ST CENTURY INTERIORS PVT LTD 409327150 3 C HOLDINGS 174814414 3 DIAMOND HOLDINGS PVT LTD 114689491 3 FA MANAGEMENT SERVICES PVT LTD 114458643 3 MIX PVT LTD 114234281 3 S CONCEPT PVT LTD 409084141 3 S ENTERPRISE 114689092 3 S PANORAMA HOLDINGS PVT LTD 409243622 3 S PRINT SOLUTION 114488151 3 WAY FREIGHT INTERNATIONAL PVT LTD 114707570 3 WHEEL LANKA AUTO TECH PVT LTD 409086896 3D COMPUTING TECHNOLOGIES 409248764 3D PACKAGING SERVICE 409088198 3S INTERNATIONAL MARKETING PVT CO 114251461 3W INNOVATIONS PVT LTD 672581214 4 X 4 MOTORS 114372706 4M PRODUCTS & SERVICES PVT LTD 409206760 4U OFFSET PRINTERS 114102890 505 APPAREL'S PVT LTD 114072079 505 MOTORS PVT LTD 409150578 555 EGODAGE ENVIR;FRENDLY MANU;& EXPORTS 114265780 609 PACKAGING PVT LTD 114333646 609 POLYMER EXPORTS PVT LTD 409115292 6-7BHATHIYAGAMA GRAMASANWARDENA SAMITIYA 114337200 7TH GEAR PVT LTD 114205052 9.4.MOTORS PVT LTD 409274935 A & A ADVERTISING 409096590 A & A CONSTRUCTION 409018165 A & A ENTERPRISES 114456560 A & A ENTERPRISES FIRE PROTECTION PVT LT 409208711 A & A GRAPHICS 114211524 A & A HOLDINGS PVT LTD 114610569 A & A TECHNOLOGY PVT LTD 114480860 A & A TELECOMMUNICATION ENG;SER;PVT LTD 409118887 A & B ENTERPRISES 114268410 -

Kuwaiti Gitmo Detainee Odah Freed, Sent Home

THURSDAY, NOVEMBER 6, 2014 / MUHARRAM 13, 1436 AH. www.kuwaittimes.net. NO: 16334, 40 PAGES, 150 FILS. Min 13º, Max 24º. High Tide 11:44 & 23:14. Low Tide 05:23 & 17:33.

Inside: First Assembly session marred by sectarian, graft charges (page 2); Debacle for Obama as Republicans capture Senate (page 7); Iran general said to mastermind Iraq ground war (page 13); Beach Games to go global, says Asian Olympic chief (page 18).

Kuwaiti Gitmo detainee Odah freed, sent home. Fawzi spent 13 years in detention without charge. GUANTANAMO BAY NAVAL BASE, Cuba: One of two remaining Kuwaitis held in the US military prison at Guantanamo Bay was sent home yesterday after nearly 13 years in detention without charge, a Pentagon spokesman told AFP. Fawzi Al-Odah, 37, boarded a Kuwaiti government plane at 5:30 am (1030 GMT), said Lieutenant Colonel Myles Caggins. He is the first inmate freed since late May, bringing the total number of detainees at the prison on a US naval base in Cuba to 148. “In accordance with statutory requirements, the secretary of defense informed Congress of the United

Corruption over camping spots at Municipality. By Nawara Fattahova. KUWAIT: A Municipality employee involved in the allotment of desert spots for camping is selling them for thousands of dinars, according to several Kuwaitis who had to pay the civil servant in order to be granted those campsites. The muftah (the key person) allegedly reserved several spots for himself, his family, friends and even his domestic servants when the camping season opened. He then began to sell these reservations to citizens looking to set up their camps in prime locations.

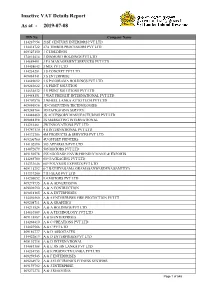

Inactive VAT Details Report As at - 2019-07-08

Inactive VAT Details Report As at - 2019-07-08 TIN No Company Name 114287954 21ST CENTURY INTERIORS PVT LTD 114418722 27A TIMBER PROCESSORS PVT LTD 409327150 3 C HOLDINGS 174814414 3 DIAMOND HOLDINGS PVT LTD 114689491 3 FA MANAGEMENT SERVICES PVT LTD 114458643 3 MIX PVT LTD 114234281 3 S CONCEPT PVT LTD 409084141 3 S ENTERPRISE 114689092 3 S PANORAMA HOLDINGS PVT LTD 409243622 3 S PRINT SOLUTION 114634832 3 S PRINT SOLUTIONS PVT LTD 114488151 3 WAY FREIGHT INTERNATIONAL PVT LTD 114707570 3 WHEEL LANKA AUTO TECH PVT LTD 409086896 3D COMPUTING TECHNOLOGIES 409248764 3D PACKAGING SERVICE 114448460 3S ACCESSORY MANUFACTURING PVT LTD 409088198 3S MARKETING INTERNATIONAL 114251461 3W INNOVATIONS PVT LTD 114747130 4 S INTERNATIONAL PVT LTD 114372706 4M PRODUCTS & SERVICES PVT LTD 409206760 4U OFFSET PRINTERS 114102890 505 APPAREL'S PVT LTD 114072079 505 MOTORS PVT LTD 409150578 555 EGODAGE ENVIR;FRENDLY MANU;& EXPORTS 114265780 609 PACKAGING PVT LTD 114333646 609 POLYMER EXPORTS PVT LTD 409115292 6-7 BATHIYAGAMA GRAMASANWARDENA SAMITIYA 114337200 7TH GEAR PVT LTD 114205052 9.4.MOTORS PVT LTD 409274935 A & A ADVERTISING 409096590 A & A CONTRUCTION 409018165 A & A ENTERPRISES 114456560 A & A ENTERPRISES FIRE PROTECTION PVT LT 409208711 A & A GRAPHICS 114211524 A & A HOLDINGS PVT LTD 114610569 A & A TECHNOLOGY PVT LTD 409118887 A & B ENTERPRISES 114268410 A & C CREATIONS PVT LTD 114023566 A & C PVT LTD 409186777 A & D ASSOCIATES 114422819 A & D ENTERPRISES PVT LTD 409192718 A & D INTERNATIONAL 114081388 A & E JIN JIN LANKA PVT LTD 114234753 A & -

Modelling and Simulation for One-Day Cricket

The Canadian Journal of Statistics / La revue canadienne de statistique, Vol. 37, No. 2, 2009, Pages 143–160. Modelling and simulation for one-day cricket. Tim B. SWARTZ (1)*, Paramjit S. GILL (2) and Saman MUTHUKUMARANA (1). (1) Department of Statistics and Actuarial Science, Simon Fraser University, Burnaby, British Columbia, Canada V5A 1S6. (2) Mathematics, Statistics and Physics Unit, University of British Columbia Okanagan, Kelowna, British Columbia, Canada V1V 1V7. Key words and phrases: Bayesian latent variable model; cricket; Markov chain methods; Monte Carlo simulation; sports statistics; WinBUGS. MSC 2000: Primary 62P99; secondary 62F15.

Abstract: This article is concerned with the simulation of one-day cricket matches. Given that only a finite number of outcomes can occur on each ball that is bowled, a discrete generator on a finite set is developed where the outcome probabilities are estimated from historical data involving one-day international cricket matches. The probabilities depend on the batsman, the bowler, the number of wickets lost, the number of balls bowled and the innings. The proposed simulator appears to do a reasonable job at producing realistic results. The simulator allows investigators to address complex questions involving one-day cricket matches. The Canadian Journal of Statistics 37: 143–160; 2009 © 2009 Statistical Society of Canada

Résumé : Cet article porte sur la simulation de matchs de cricket d’une seule journée. Étant donné qu’il y a un nombre fini d’événements possibles à chaque lancer de balle, un générateur discret sur un ensemble fini est développé où les probabilités de chacun des événements sont estimées à partir de données historiques provenant de matchs de cricket international d’une seule journée. Les probabilités dépendent du batteur, du lanceur, du nombre de guichets perdus, du nombre de balles lancées et des manches.

Bruce Yardley Recalls the Great Discovery…

Thursday 26th October, 2006. Favourites in disaster zone!

...ner in which Mahela Jayawardene’s side won those matches made many predict that they’ll be hard to beat. But the group games, especially against South Africa was a disaster with the seam bowlers providing the killer punch and knocking the Sri Lankans out of the Champions Trophy. Well, almost. No prizes for guessing the cause for defeat of course. It was certainly one of the worst batting displays by Sri Lanka’s top order in recent times. Sri Lanka’s batting line-up is the most experienced in the world with the combined count of the top six totalling 1200 ODIs.

Well, we wouldn’t need all that calculation if either New Zealand or South Africa come up with a win and against the inconsistent Pakistanis, that’s quite possible.

Poor selections. While a lot has been said and written about selections during the tournament, something that has been hard to comprehend is why Sri Lanka have opted to play four seam bowlers in Indian conditions. It’s certainly a bizarre selection when you consider that someone like Malinga

...months has been the reason for the intensity levels not being so high. Sri Lanka’s ODIs in recent times have been against teams that are ranked below them and all of a sudden when they play stronger opponents like Pakistan and South Africa, they have been found wanting. Tom Moody is a fine assessor of situations and his comments will be interesting to read. Moody and captain Jayawardene, however, would do well to seek the advice of senior players in the side such as Jayasuriya, Muralitharan, Atapattu, Sangakkara and Chaminda Vaas when

Pakistan Lifts Maiden Asia Cup by Vijay Lokapally

CRICKET / ATAPATTU'S CENTURY IN VAIN. Pakistan lifts maiden Asia Cup. By Vijay Lokapally.

DHAKA, JUNE 7. Pakistan peaked precisely when it needed to and Sri Lanka slumped when it should not have as a packed audience at the Bangabandhu National Stadium was treated to some vintage cricket in the final of the Asia Cup here. The vintage part of the contest was crafted by Moin Khan in a thrilling innings as the match brought the tournament to an exciting climax, thanks to the spirited show by the Sri Lankan middle order. The century by Marvan Atapattu gave the match a competitive flavour and the spirit of the game was exemplified by Kaushalya Weeraratne, who could not bat due to injury, walking out just to congratulate the opposition which won

...pass. Facing a daunting target, Sri Lanka made some desperate experiments at the top. Not many matches are won through such measures and soon it was clear that Pakistan was on way to a comfortable victory. The swift Romesh Kaluwitharana was run out without facing a ball, ironically by a limping Saeed Anwar. Vaas tried a fatal cross-batted shot and the match was sealed by Pakistan when Sanath Jayasuriya top-edged the ball back to the bowler. For Sri Lanka to win from that point would have been no less than a miracle, for Pakistan was doing nothing wrong this day. On the other hand, Sri Lanka was a shadow of the spotless fielding side it is known as. Sri Lanka grassed seven catches but none as lethal as the return chance from Moin to Vaas.

Coincidences Galore As Amanthi Shatters 30-Year-Old Record

Tuesday 14th June, 2011.

Junior National Athletics Championship: Coincidences galore as Amanthi shatters 30-year-old record. BY REEMUS FERNANDO. Coincidences were galore when Amanthi Silva cleared a distance of 37.12 metres in the Under-18 Girls’ Discus Throw to shatter a record which stood unchanged for 30 years at Junior National Athletics Championships.

...has a born talent to do well in this discipline,” said veteran coach Rodrigo who has been the coach of many national throwers including Olympian Nadeeka Lakmali. “She did this without much training as she is concentrating more on her studies these days,” said Rodrigo about the

...that the athlete had to receive her medal and certificate for this event from her coach. The Sri Lanka Athletics Association had picked Rodrigo, a former national champion to present the award. The two throwing records established by Deepika Rodrigo in 1979 and 1980 were

India can dominate world cricket for years, says Fletcher. When Duncan Fletcher stepped in to take over as India coach from Gary Kirsten, he said it was “promising young talent” which had lured him to Indian cricket. Now, having seen some stellar displays of character as a second-string, virtual India A squad romped to an ODI series win against the West Indies - Suresh Raina’s men lead 3-0 in the five-match series - Fletcher believes the world champions have sufficient bench-strength to dominate world cricket for the next five to 10 years. “It is due to the amount of talent India have,” Fletcher explained. “Indian cricket is in a very, very healthy state presently. I know five years in international cricket is a long time but unless some international teams suddenly come

Two Months for Human

www.themorning.lk. Late City. VOL 01 | NO 23 | Rs. 30.00. WEDNESDAY, FEBRUARY 24, 2021.

Inside: THE STORY OF SOMETHING DIFFERENT; ‘MOST COUNTRIES FAILED AFTER GOING TO IMF’; VEHICLE IMPORTERS SAY SURVIVAL UNTIL END-2021 UNIMAGINABLE; BRATHWAITE TO LEAD WINDIES IN TESTS?

EASTER SUNDAY ATTACKS PCOI REPORT: Action mooted against politicos, officials, cops. BY THE MORNING NEWS DESK. The Presidential Commission of Inquiry (PCoI) on the Easter Sunday attack has recommended that legal action be taken against a topmost politician, senior police officers, and senior public officials, over the failure to prevent the Easter Sunday attacks and properly perform their duties. Headline points: Fmr Prez Sirisena, fmr Defence Secy Hemasiri, fmr IGP Pujith, fmr Nat. Intel Chief Sisira Mendis, and fmr SIS Chief Nilantha Jayawardena named; Failure to prevent attacks, dereliction of duty/negligence, etc.; Ranil’s lax attitude to Islamist extremism contributed; Confirms Zahran the mastermind, inspired by Bangladeshi attack; Charges against Rishad for calls to Army Commander; AG should file criminal charges against all; Urges ban of Wahhabism, IS, BBS, SLJI, SLJISM/SLJSM, Thowheed groups.

Major vaccine inflow this week: 500,000 Covishield doses tomorrow. BY HIRANYADA DEWASIRI AND MIHI PERERA. The Cabinet of Ministers has approved a proposal by Acting Minister of Health Prof. Channa Jayasumana to purchase 10 million Oxford-AstraZeneca Covishield

...of Health is expecting a batch of 264,000 doses of the Oxford-AstraZeneca Covishield vaccine this week. Speaking to The Morning yesterday (23), Ministry of Health Epidemiology Unit Consultant Epidemiologist Dr.

21 September 1994

1.50 (GST Inc.). Wednesday September 21 1994.

Massive boost for city centre: Sanlam to invest millions. GWEN LISTER. BARRING unforeseen last-minute hitches yesterday, Sanlam will be the new owners today of an estimated 3 590 square metre prime business site adjacent to the Sanlam Headquarters in Independence Avenue. The insurance giant is planning a new prestigious office complex and shopping mall is planned. While the precise purchase price is not known, it is believed Sanlam will invest N$65 million in the development which includes both property and buildings. The good news is that Sanlam will preserve the historical facades of the Gathemann building and 'Kroonprinz' as it is known, and incorporate them into the new complex. It was believed that yesterday was D-day for Sanlam's option to finalised and no last-

CHANGING SKYLINE ... What is known as the 'Gathemann building' and adjacent business sites are soon to be developed by Sanlam into a prestigious office block and shopping mall with undercover parking. The estimated cost of the project, including purchase of property and buildings as well as development, is about N$65 million. Sanlam also plans to retain the historical facades of the old buildings and incorporate them into the new complex. Photo: Chris Ndivanga

N$23m for Govt workers. STAFF REPORTER. PRIME Minister Hage Geingob took the unusual step of addressing the nation in a TV broadcast

...and recommend new conditions of service. The commission will be told to present its findings for formulation in the 1995/96 budget.

THE POWERS of Unam Vice-Chancel-