Breaking Through Data-Architecture Gridlock to Scale AI: Large-Scale Data Modernization and Rapidly Evolving Data Technologies Can Tie Up AI Transformations

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


Data Model Standards and Guidelines, Registration Policies and Procedures

Data Model Standards and Guidelines, Registration Policies and Procedures, Version 3.2, 6/02/2017

Document Version Control

| Version | Date | Author | Description |
| --- | --- | --- | --- |
| DRAFT | 03/28/07 | Venkatesh Kadadasu | Baseline Draft Document |
| 0.1 | 05/04/2007 | Venkatesh Kadadasu | Sections 1.1, 1.2, 1.3, 1.4 revised |
| 0.2 | 05/07/2007 | Venkatesh Kadadasu | Sections 1.4, 2.0, 2.2, 2.2.1, 3.1, 3.2, 3.2.1, 3.2.2 revised |
| 0.3 | 05/24/07 | Venkatesh Kadadasu | Incorporated feedback from Uli |
| 0.4 | 5/31/2007 | Venkatesh Kadadasu | Incorporated Steve’s feedback: Section 1.5 Issues - Change Decide to Decision. Section 2.2.5 Coordinate with Kumar and Lisa to determine the class words used by XML community, and identify them in the document. (This was discussed previously.) Data Standardization - We have discussed on several occasions the cross-walk table between tabular naming standards and XML. When did it get dropped? Section 2.3.2 Conceptual data model level of detail: changed "(S) No foreign key attributes may be entered in the conceptual data model." to "(S) No attributes may be entered in the conceptual data model." |
| 0.5 | 6/4/2007 | Steve Horn | Move last paragraph of Section 2.0 to section 2.1.4 Data Standardization. Added definitions of key terms. |
| 0.6 | 6/5/2007 | Ulrike Nasshan | Section 2.2.5 Coordinate with Kumar and Lisa to determine the class words used by XML community, and identify them in the document. |

Data Analytics EMEA Insurance Data Analytics Study Contents

A little less conversation, a lot more action – tactics to get satisfaction from data analytics. EMEA Insurance data analytics study. Contents: Foreword; Introduction from the authors; Vision and strategy (A disconnect between analytics and business strategies; Articulating a clear business case can be tricky; Tactical projects are trumping long haul strategic wins; The ever evolving world of the CDO); Assets and capability (Purple People are hard to find; Data is not always accessible or trustworthy; Agility and traditional insurance are not natural bedfellows); Operationalisation and change management (Operating models have no clear winner; The message is not always loud and clear; Hearts and minds do not change overnight); Are you ready to become an IDO?; Appendix A – The survey; Appendix B – Links to publications; Appendix C – Key contacts. Foreword: The world is experiencing the fastest pace of data expansion and technological change in history. Our work with the World Economic Forum in 2015 identified that, within financial services, insurance is the industry which is most ripe for disruption from innovation owing to the significant pressure across the value chain. To build on this work, our report ‘Turbulence ahead – The future of general insurance’, set out various innovations transforming the industry and subsequent scenarios for the future. It identified that innovation within the insurance industry is no longer led by insurers themselves.

Constructing a Meta Data Architecture

0-471-35523-2.int.07 6/16/00 12:29 AM Page 181

CHAPTER 7: Constructing a Meta Data Architecture

This chapter describes the key elements of a meta data repository architecture and explains how to tie data warehouse architecture into the architecture of the meta data repository. After reviewing these essential elements, I examine the three basic architectural approaches for building a meta data repository and discuss the advantages and disadvantages of each. Last, I discuss advanced meta data architecture techniques such as closed-loop and bidirectional meta data, which are gaining popularity as our industry evolves.

What Makes a Good Architecture

A sound meta data architecture incorporates five general characteristics: integrated, scalable, robust, customizable, and open. It is important to understand that if a company purchases meta data access and/or integration tools, those tools define a significant portion of the meta data architecture. Companies should, therefore, consider these essential characteristics when evaluating tools and their implementation of the technology.

Integrated

Anyone who has worked on a decision support project understands that the biggest challenge in building a data warehouse is integrating all of the disparate sources of data and transforming the data into meaningful information. The same is true for a meta data repository. A meta data repository typically needs to be able to integrate a variety of types and sources of meta data and turn the resulting stew into meaningful, accessible business and technical meta data.

An Enterprise Information System Data Architecture Guide

An Enterprise Information System Data Architecture Guide. Grace Alexandra Lewis, Santiago Comella-Dorda, Pat Place, Daniel Plakosh, Robert C. Seacord. October 2001. TECHNICAL REPORT CMU/SEI-2001-TR-018, ESC-TR-2001-018. Pittsburgh, PA 15213-3890. COTS-Based Systems. Unlimited distribution subject to the copyright. This report was prepared for the SEI Joint Program Office, HQ ESC/DIB, 5 Eglin Street, Hanscom AFB, MA 01731-2116. The ideas and findings in this report should not be construed as an official DoD position. It is published in the interest of scientific and technical information exchange. FOR THE COMMANDER: Norton L. Compton, Lt Col, USAF, SEI Joint Program Office. This work is sponsored by the U.S. Department of Defense. The Software Engineering Institute is a federally funded research and development center sponsored by the U.S. Department of Defense. Copyright 2001 by Carnegie Mellon University. Requests for permission to reproduce this document or to prepare derivative works of this document should be addressed to the SEI Licensing Agent. NO WARRANTY. THIS CARNEGIE MELLON UNIVERSITY AND SOFTWARE ENGINEERING INSTITUTE MATERIAL IS FURNISHED ON AN "AS-IS" BASIS. CARNEGIE MELLON UNIVERSITY MAKES NO WARRANTIES OF ANY KIND, EITHER EXPRESSED OR IMPLIED, AS TO ANY MATTER INCLUDING, BUT NOT LIMITED TO, WARRANTY OF FITNESS FOR PURPOSE OR MERCHANTABILITY, EXCLUSIVITY, OR RESULTS OBTAINED FROM USE OF THE MATERIAL. CARNEGIE MELLON UNIVERSITY DOES NOT MAKE ANY WARRANTY OF ANY KIND WITH RESPECT TO FREEDOM FROM PATENT, TRADEMARK, OR COPYRIGHT INFRINGEMENT.

ER Studio Data Architect Quick Start Guide

Product Documentation. ER Studio Data Architect Quick Start Guide, Version 11.0. © 2015 Embarcadero Technologies, Inc. Embarcadero, the Embarcadero Technologies logos, and all other Embarcadero Technologies product or service names are trademarks or registered trademarks of Embarcadero Technologies, Inc. All other trademarks are property of their respective owners. Embarcadero Technologies, Inc. is a leading provider of award-winning tools for application developers and database professionals so they can design systems right, build them faster and run them better, regardless of their platform or programming language. Ninety of the Fortune 100 and an active community of more than three million users worldwide rely on Embarcadero products to increase productivity, reduce costs, simplify change management and compliance and accelerate innovation. The company's flagship tools include: Embarcadero® Change Manager™, CodeGear™ RAD Studio, DBArtisan®, Delphi®, ER/Studio®, JBuilder® and Rapid SQL®. Founded in 1993, Embarcadero is headquartered in San Francisco, with offices located around the world. Embarcadero is online at www.embarcadero.com. March, 2015. CONTENTS: Introducing ER/Studio Data Architect; Notice for Developer Edition Users; Product Benefits by Audience; What's

ER/Studio Enterprise Team Edition

ER/Studio Enterprise Team Edition: THE ULTIMATE COLLABORATIVE ENTERPRISE DATA ARCHITECTURE AND MODELING SOLUTION. With many organizations seeing a significant increase in data, along with more emphasis on compliance to industry and government regulations, it’s clear that having a solid strategy for data management is extremely important. ER/Studio Enterprise Team edition provides a comprehensive solution that empowers users to easily define models and metadata, establish a foundation for data governance initiatives, and define an enterprise architecture to effectively manage data across the whole organization.

THE CHALLENGE OF MANAGING AND UTILIZING ENTERPRISE DATA

Physically capturing and properly integrating data is challenging, especially for unstructured content. Incorporating the new data, interpreting it correctly, and making it available to decision makers in a timely manner poses three distinct challenges for data management professionals:

• Leveraging enterprise data as an organizational asset
• Improving and managing data quality
• Clearly and effectively communicating data throughout an organization

ER/Studio gives data management professionals the metadata foundation to quickly respond to business process demands, reduce the risk of noncompliance, and deliver more actionable insight. Many organizations must deal with both relational and NoSQL data, as well as a broad landscape of platforms. ER/Studio Enterprise Team edition continues to build on its support of strategic enterprise systems including Teradata, Netezza, and Azure, as well as Big Data platform support for Hadoop Hive and MongoDB, giving organizations an interpretive and collaborative enterprise advantage for leveraging their data residing in diverse locations, from data centers to mobile platforms.

To address these issues, ER/Studio Enterprise Team edition gives data modelers and data architects the capabilities needed to analyze, document, and share

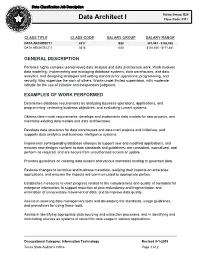

Data Architect I, Class Code: 0317

State Classification Job Description. Data Architect I, Class Code: 0317, Salary Group: B28

| Class Title | Class Code | Salary Group | Salary Range |
| --- | --- | --- | --- |
| Data Architect I | 0317 | B28 | $83,991 - $142,052 |
| Data Architect II | 0318 | B30 | $101,630 - $171,881 |

GENERAL DESCRIPTION: Performs highly complex (senior-level) data analysis and data architecture work. Work involves data modeling; implementing and managing database systems, data warehouses, and data analytics; and designing strategies and setting standards for operations, programming, and security. May supervise the work of others. Works under limited supervision, with moderate latitude for the use of initiative and independent judgment.

EXAMPLES OF WORK PERFORMED: Determines database requirements by analyzing business operations, applications, and programming; reviewing business objectives; and evaluating current systems. Obtains data model requirements, develops and implements data models for new projects, and maintains existing data models and data architectures. Develops data structures for data warehouses and data mart projects and initiatives; and supports data analytics and business intelligence systems. Implements corresponding database changes to support new and modified applications, and ensures new designs conform to data standards and guidelines; are consistent, normalized, and perform as required; and are secure from unauthorized access or update. Provides guidelines on creating data models and various standards relating to governed data. Reviews changes to technical and business metadata, realizing their impacts on enterprise applications, and ensures the impacts are communicated to appropriate parties. Establishes measures to chart progress related to the completeness and quality of metadata for enterprise information; to support reduction of data redundancy and fragmentation and elimination of unnecessary movement of data; and to improve data quality.

ERMrest: An Entity-Relationship Data Storage Service for Web-Based, Data-Oriented Collaboration

ERMrest: an entity-relationship data storage service for web-based, data-oriented collaboration. Karl Czajkowski, Carl Kesselman, Robert Schuler, Hongsuda Tangmunarunkit. Information Sciences Institute, Viterbi School of Engineering, University of Southern California, Marina del Rey, CA 90292. Email: fkarlcz,carl,schuler,[email protected] Abstract: Scientific discovery is increasingly dependent on a scientist’s ability to acquire, curate, integrate, analyze, and share large and diverse collections of data. While the details vary from domain to domain, these data often consist of diverse digital assets (e.g. image files, sequence data, or simulation outputs) that are organized with complex relationships and context which may evolve over the course of an investigation. In addition, discovery is often collaborative, such that sharing of the data and its organizational context is highly desirable. Common systems for managing file or asset metadata hide their inherent relational structures, while traditional relational database systems do not extend to the distributed collaborative environment often seen in scientific investigations. To address these issues, we introduce ERMrest, a collaborative data management service which allows general entity-relationship modeling of metadata manipulated by RESTful access methods.

… are hard to evolve over time, and make it difficult to search for specific data values. In previous work, we have argued for an alternative approach based on scientific asset management [5]. We separate the “science data” (e.g. microscope images, sequence data, flow cytometry data) from the “metadata” (e.g. references, provenance, properties, and contextual relationships). We have also defined a data-oriented architecture which expresses collaboration as the manipulation of shared data resources housed in complementary object (asset) and relational (metadata) stores [6]. The metadata encode not only properties and references of individual assets, but relationships among assets and other domain-specific elements such as experiments, protocol

1. Enterprise Data Planning

1. Enterprise Data Planning. Introduction: Enterprise data planning is a strategy for CMS business-focused data standardization. Its objective is to strengthen the agency’s ability to manage and share data and information. NOTE: There are references within this section that refer the reader to the Operating Procedures and Guidelines section. Please download the Operating Procedures and Guidelines section to view these references. The major Enterprise Data Planning products are: Enterprise data objects in the form of Subject Areas and Enterprise Data Entities (Supertypes); Enterprise Attributes (Data Elements); and Information Security Category settings that establish the controls for appropriate use of CMS data resources. The Enterprise Data Planning process diagram depicts the milestones, control points, and deliverables as they occur during the following steps: Initiate Enterprise Data Planning; Define Enterprise Subject Areas; Model Enterprise Data; Assign Information Security Categories; Create the EDM Metadata Repository; Publish the Enterprise Data Model. Activities in this process are directed by the CMS Enterprise Data Architecture Approach. Key Deliverables: The Enterprise Data Planning process creates the following deliverables: Business Process Model, Enterprise Subject Area Definitions, Subject Area Create Read Update Delete Archive (CRUDA) Matrix, Enterprise Data Model, Enterprise Metadata Repository, Business Terms, Enterprise Data Architecture for Repository update. Exhibit 1. Enterprise Data Planning process

The Layman's Guide to Reading Data Models

Data Architecture & Engineering Services. The Layman’s Guide to Reading Data Models. Last Updated: 03/30/2021, OIT|EADG|DEA|DAES.

A data model shows a data asset’s structure, including the relationships and constraints that determine how data will be stored and accessed.

1. Common Types of Data Models

Conceptual Data Model: A conceptual data model defines high-level relationships between real-world entities in a particular domain. Entities are typically depicted in boxes, while lines or arrows map the relationships between entities (as shown in Figure 1). Figure 1: Conceptual Data Model.

Logical Data Model: A logical data model defines how a data model should be implemented, with as much detail as possible, without regard for its physical implementation in a database. Within a logical data model, an entity’s box contains a list of the entity’s attributes. One or more attributes are designated as a primary key, whose value uniquely specifies an instance of that entity. A primary key may be referred to in another entity as a foreign key. In the Figure 2 example, each Employee works for only one Employer. Each Employer may have zero or more Employees. This is indicated via the model’s line notation (refer to the Describing Relationships section). Figure 2: Logical Data Model.

Physical Data Model: A physical data model describes the implementation of a data model in a database (as shown in Figure 3). Entities are described as tables, attributes are translated to table columns, and each column’s data type is specified.
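The Employee/Employer relationship described in this excerpt can be sketched as a small physical model. The following is a minimal illustration only, assuming an in-memory SQLite database; the table names, column names, and sample rows are hypothetical and do not come from the guide. It shows a primary key on each table, a foreign key from employee to employer, and a NOT NULL constraint so that each employee works for exactly one employer while an employer may have zero or more employees.

```python
import sqlite3


def build_schema(conn):
    """Create hypothetical employer/employee tables (names are assumptions)."""
    # SQLite only enforces foreign keys when this pragma is switched on.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute(
        """
        CREATE TABLE employer (
            employer_id INTEGER PRIMARY KEY,   -- primary key
            name        TEXT NOT NULL
        )
        """
    )
    conn.execute(
        """
        CREATE TABLE employee (
            employee_id INTEGER PRIMARY KEY,   -- primary key
            name        TEXT NOT NULL,
            -- foreign key: NOT NULL means exactly one employer per employee
            employer_id INTEGER NOT NULL REFERENCES employer(employer_id)
        )
        """
    )


def employee_count(conn, employer_id):
    """Return how many employees reference the given employer."""
    row = conn.execute(
        "SELECT COUNT(*) FROM employee WHERE employer_id = ?", (employer_id,)
    ).fetchone()
    return row[0]


def try_insert_orphan(conn):
    """Try to add an employee whose employer does not exist."""
    try:
        conn.execute(
            "INSERT INTO employee (employee_id, name, employer_id) "
            "VALUES (11, 'Bob', 99)"
        )
        return True
    except sqlite3.IntegrityError:
        # The foreign key constraint rejects the row.
        return False


conn = sqlite3.connect(":memory:")
build_schema(conn)
conn.execute("INSERT INTO employer (employer_id, name) VALUES (1, 'Acme')")
conn.execute("INSERT INTO employer (employer_id, name) VALUES (2, 'Globex')")
conn.execute(
    "INSERT INTO employee (employee_id, name, employer_id) VALUES (10, 'Ada', 1)"
)
```

Here the employer with id 2 has no employees, which the model allows, whereas an employee row pointing at a nonexistent employer is rejected. Other databases would express the same constraints with their own data types and syntax.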

Data Architecture 101 for Your Business

Data Architecture 101 for Your Business. Bence Faludi - [email protected]. Setting up your entire data architecture should be a straightforward task. … how to collect our frontend data? ... … which engine should we use? ... … or just pick a visualisation tool ... Let’s just be realistic and bullshit-free! Big Data Mess: 1. Too many products are available. Most of them claim they solve all the data problems your company encounters, and deliver immediate insights and business value. But NONE of this is true. 2. Organisational data is mostly unstructured and not clean. It is not ready for consumption. 3. Companies use various data sources in parallel but rarely invest in centralisation, and want this behaviour from 3rd party tools. 4. It is easy to get stuck with a bad, inefficient and costly architecture. It’s hard, slow and expensive to get rid of it afterwards and clean up the hacks. Bence Faludi, my background: independent contractor for various companies ([email protected]); Data Engineer at Facebook; Data Scientist at Microsoft; Data Engineer at Wunderlist; etc. Worked with data sizes from a few thousand to billions of active users. Contributor to the night-shift and metl open source ETLs. Many tools aim to merge these layers and make it elective. Questions to always ask: 1. Do you handle unclean data? 2. How quick will all those transformations and queries be? 3. Where is the cache stored? 4. Does it affect the performance of our database by running parallel, or inefficient queries? 5. What do we need to prepare to make the product effective? 6. How big exactly is the data loss of the tracker? 7.

The Role of Data Architecture As a Part of Enterprise Architecture∗

THE ROLE OF DATA ARCHITECTURE AS A PART OF ENTERPRISE ARCHITECTURE∗ Cristian Etegan, Ph. D. Student, University of Craiova, Faculty of Economics and Business Administration, Craiova, Romania; Assoc. Prof. Anca Mehedintu, Ph. D, University of Craiova, Faculty of Economics and Business Administration, Craiova, Romania; Prof. Cerasela Pirvu, Ph. D, University of Craiova, Faculty of Economics and Business Administration, Craiova, Romania. Abstract: In the early days of computing, technology simply automated manual processes with greater efficiency. The new organizational context provides input into the data architecture and is the primary tool for the management and sharing of enterprise data. It enables architects, data modelers, and stakeholders to identify, classify, and analyze information requirements across the enterprise, allowing the right priorities for data sharing initiatives. Data architecture states how data are persisted, managed, and utilized within an organization. Data architecture is made up of the structure of all corporate data and its relationships to itself and external systems. In far too many situations, the business community has to enlist the assistance of IT to retrieve information due to the community's inconsistency, lack of intuitiveness, or other factors. The goal of any architecture should illustrate how the components of the architecture will fit together and how the system will adapt and evolve over time. JEL classification: M15, M10. Key words: data architecture, enterprise architecture, business process planning, databases, business objects. 1. INTRODUCTION: In the early days of computing, technology simply automated manual processes with greater efficiency.