Virtual Memory

Recommended publications

Virtual Memory

Chapter 4 Virtual Memory

Linux processes execute in a virtual environment that makes it appear as if each process had the entire address space of the CPU available to itself. This virtual address space extends from address 0 all the way to the maximum address. On a 32-bit platform, such as IA-32, the maximum address is 2^32 − 1 or 0xffffffff. On a 64-bit platform, such as IA-64, this is 2^64 − 1 or 0xffffffffffffffff. While it is obviously convenient for a process to be able to access such a huge address space, there are really three distinct, but equally important, reasons for using virtual memory.

1. Resource virtualization. On a system with virtual memory, a process does not have to concern itself with the details of how much physical memory is available or which physical memory locations are already in use by some other process. In other words, virtual memory takes a limited physical resource (physical memory) and turns it into an infinite, or at least an abundant, resource (virtual memory).

2. Information isolation. Because each process runs in its own address space, it is not possible for one process to read data that belongs to another process. This improves security because it reduces the risk of one process being able to spy on another process and, e.g., steal a password.

3. Fault isolation. Processes with their own virtual address spaces cannot overwrite each other's memory. This greatly reduces the risk of a failure in one process triggering a failure in another process. That is, when a process crashes, the problem is generally limited to that process alone and does not cause the entire machine to go down.
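
The address-space limits quoted in this excerpt follow directly from the address width. A minimal sketch in Python that checks the arithmetic; the helper name `max_address` is hypothetical and not taken from the chapter:

```python
def max_address(bits: int) -> int:
    """Highest address in a flat address space that is `bits` wide."""
    return (1 << bits) - 1


if __name__ == "__main__":
    # Print the limits for the two widths mentioned in the text.
    for bits in (32, 64):
        print(f"{bits}-bit: {max_address(bits):#x}")
```

The same formula gives the number of addressable bytes as `max_address(bits) + 1`.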

The Case for Compressed Caching in Virtual Memory Systems

THE ADVANCED COMPUTING SYSTEMS ASSOCIATION

The following paper was originally published in the Proceedings of the USENIX Annual Technical Conference, Monterey, California, USA, June 6-11, 1999.

The Case for Compressed Caching in Virtual Memory Systems
Paul R. Wilson, Scott F. Kaplan, and Yannis Smaragdakis
Dept. of Computer Sciences, University of Texas at Austin, Austin, Texas 78751-1182
{wilson|sfkaplan|smaragd}@cs.utexas.edu
http://www.cs.utexas.edu/users/oops/

© 1999 by The USENIX Association. All Rights Reserved. Rights to individual papers remain with the author or the author's employer. Permission is granted for noncommercial reproduction of the work for educational or research purposes. This copyright notice must be included in the reproduced paper. USENIX acknowledges all trademarks herein.

For more information about the USENIX Association: Phone: 1 510 528 8649 FAX: 1 510 548 5738 Email: [email protected] WWW: http://www.usenix.org

Abstract

Compressed caching uses part of the available RAM to hold pages in compressed form, effectively adding a new level to the virtual memory hierarchy. This level attempts to bridge the huge performance gap between normal (uncompressed) RAM and disk.

In [Wil90, Wil91b] we proposed compressed caching for virtual memory: storing pages in compressed form in a main memory compression cache to reduce disk paging. Appel also promoted this idea [AL91], and it was evaluated empirically by Douglis [Dou93] and by Russinovich and Cogswell [RC96]. Unfortunately Douglis's
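
The idea in the abstract, an extra compressed level between uncompressed RAM and disk, can be illustrated with a toy model. This is a sketch under stated assumptions, not the paper's design: it uses `zlib` as a stand-in compressor, plain LRU ordering at both levels, and a 4 KiB page size; the class `CompressedCache` and all of its fields are hypothetical names.

```python
import zlib
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed page size in bytes


class CompressedCache:
    """Toy three-level store: raw pages -> compressed pages in RAM -> "disk"."""

    def __init__(self, uncompressed_slots: int, compressed_bytes: int):
        self.hot = OrderedDict()    # page number -> raw bytes (LRU order)
        self.cold = OrderedDict()   # page number -> zlib-compressed bytes
        self.disk = {}              # page number -> raw bytes (simulated disk)
        self.uncompressed_slots = uncompressed_slots
        self.compressed_bytes = compressed_bytes
        self.disk_reads = 0         # counts the slow path

    def _cold_size(self) -> int:
        return sum(len(blob) for blob in self.cold.values())

    def _evict_hot(self) -> None:
        # Least recently used raw page moves to the compressed level.
        page, data = self.hot.popitem(last=False)
        self.cold[page] = zlib.compress(data)
        # If the compressed level is over budget, spill its oldest pages to disk.
        while self._cold_size() > self.compressed_bytes:
            old_page, blob = self.cold.popitem(last=False)
            self.disk[old_page] = zlib.decompress(blob)

    def write(self, page: int, data: bytes) -> None:
        assert len(data) == PAGE_SIZE
        self.cold.pop(page, None)   # drop stale copies at lower levels
        self.disk.pop(page, None)
        self.hot[page] = data
        self.hot.move_to_end(page)
        while len(self.hot) > self.uncompressed_slots:
            self._evict_hot()

    def read(self, page: int) -> bytes:
        if page in self.hot:
            self.hot.move_to_end(page)
            return self.hot[page]
        if page in self.cold:
            data = zlib.decompress(self.cold.pop(page))  # cheap miss
        else:
            data = self.disk.pop(page)                   # expensive miss
            self.disk_reads += 1
        self.write(page, data)
        return data
```

With a generous compressed budget, pages evicted from the uncompressed level are recovered by decompression alone and `disk_reads` stays at zero; shrinking the budget pushes them to the simulated disk instead.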

Memory Protection at Option

Memory Protection at Option: Application-Tailored Memory Safety in Safety-Critical Embedded Systems (German title: Speicherschutz nach Wahl: Auf die Anwendung zugeschnittene Speichersicherheit in sicherheitskritischen eingebetteten Systemen)

Submitted to the Faculty of Engineering (Technische Fakultät) of the Universität Erlangen-Nürnberg for the degree of Doktor-Ingenieur by Michael Stilkerich. Erlangen, 2012.

Approved as a dissertation by the Faculty of Engineering, Universität Erlangen-Nürnberg.
Date of submission: 09.07.2012
Date of doctoral examination: 30.11.2012
Dean: Prof. Dr.-Ing. Marion Merklein
Reviewers: Prof. Dr.-Ing. Wolfgang Schröder-Preikschat, Prof. Dr. Michael Philippsen

Abstract

With the increasing capabilities and resources available on microcontrollers, there is a trend in the embedded industry to integrate multiple software functions on a single system to save cost, size, weight, and power. The integration raises new requirements, among them the need for spatial isolation, which is commonly established by using a memory protection unit (MPU) that can constrain access to the physical address space to a fixed set of address regions. MPU-based protection is limited in terms of available hardware, flexibility, granularity and ease of use. Software-based memory protection can provide an alternative to or complement MPU-based protection, but has received little attention in the embedded domain. In this thesis, I evaluate qualitative and quantitative advantages and limitations of MPU-based memory protection and software-based protection based on a multi-JVM. I developed a framework composed of the AUTOSAR OS-like operating system CiAO and KESO, a Java implementation for deeply embedded systems. The framework allows choosing from no memory protection, MPU-based protection, software-based protection, and a combination of the two.

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

Requirements on Security Management for Adaptive Platform AUTOSAR AP R20-11

Document Title: Requirements on Security Management for Adaptive Platform
Document Owner: AUTOSAR
Document Responsibility: AUTOSAR
Document Identification No: 881
Document Status: published
Part of AUTOSAR Standard: Adaptive Platform
Part of Standard Release: R20-11

Document Change History

| Date | Release | Changed by | Description |
|---|---|---|---|
| 2020-11-30 | R20-11 | AUTOSAR Release Management | Reworded PEE requirement [RS_SEC_05019]; added chapter 'Conventions' and 'Acronyms'; minor editorial changes to comply with AUTOSAR RS template |
| 2019-11-28 | R19-11 | AUTOSAR Release Management | Fix spelling in [RS_SEC_05012]; reworded secure channel requirements [RS_SEC_04001], [RS_SEC_04003] and [RS_SEC_04003]; changed Document Status from Final to published |
| 2019-03-29 | 19-03 | AUTOSAR Release Management | Unnecessary requirement [RS_SEC_05006] removed |
| 2018-10-31 | 18-10 | AUTOSAR Release Management | Chapter 2.3 'Protected Runtime Environment' revised |
| 2018-03-29 | 18-03 | AUTOSAR Release Management | Moved the Identity and Access chapter into RS Identity and Access Management (899) |
| 2017-10-27 | 17-10 | AUTOSAR Release Management | Initial Release |

Document ID 881: AUTOSAR_RS_SecurityManagement

Disclaimer

This work (specification and/or software implementation) and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the work. The material contained in this work is protected by copyright and other types of intellectual property rights. The commercial exploitation of the material contained in this work requires a license to such intellectual property rights.

What Is an Operating System III 2.1 Components II An Operating System

What is an Operating System III
2.1 Components II

An operating system (OS) is software that manages computer hardware and software resources and provides common services for computer programs. The operating system is an essential component of the system software in a computer system. Application programs usually require an operating system to function.

Memory management

Among other things, a multiprogramming operating system kernel must be responsible for managing all system memory which is currently in use by programs. This ensures that a program does not interfere with memory already in use by another program. Since programs time share, each program must have independent access to memory.

Cooperative memory management, used by many early operating systems, assumes that all programs make voluntary use of the kernel's memory manager, and do not exceed their allocated memory. This system of memory management is almost never seen any more, since programs often contain bugs which can cause them to exceed their allocated memory. If a program fails, it may cause memory used by one or more other programs to be affected or overwritten. Malicious programs or viruses may purposefully alter another program's memory, or may affect the operation of the operating system itself. With cooperative memory management, it takes only one misbehaved program to crash the system.

Memory protection enables the kernel to limit a process's access to the computer's memory. Various methods of memory protection exist, including memory segmentation and paging. All methods require some level of hardware support (such as the 80286 MMU), which doesn't exist in all computers.

A Minimal PowerPC™ Boot Sequence for Executing Compiled C Programs

Order Number: AN1809/D Rev. 0, 3/2000
Semiconductor Products Sector Application Note

A Minimal PowerPC™ Boot Sequence for Executing Compiled C Programs
PowerPC Systems Architecture & Performance [email protected]

This document describes the procedures necessary to successfully initialize a PowerPC processor and begin executing programs compiled using the PowerPC embedded application interface (EABI). The items discussed in this document have been tested for MPC603e™, MPC750, and MPC7400 microprocessors. The methods and source code presented in this document may work unmodified on similar PowerPC platforms as well.

This document contains the following topics:
• Part I, "Overview," provides an overview of the conditions and exceptions for the procedures described in this document.
• Part II, "PowerPC Processor Initialization," provides information on the general setup of the processor registers, caches, and MMU.
• Part III, "PowerPC EABI Compliance," discusses aspects of the EABI that apply directly to preparing to jump into a compiled C program.
• Part IV, "Sample Boot Sequence," describes the basic operation of the boot sequence and the many options of configuration, explains in detail a sample configurable boot and how the code may be modified for use in different environments, and discusses the compilation procedure using the supporting GNU build environment.
• Part V, "Source Files," contains the complete source code for the files ppcinit.S, ppcinit.h, reg_defs.h, ld.script, and Makefile.

This document contains information on a new product under development by Motorola. Motorola reserves the right to change or discontinue this product without notice. © Motorola, Inc., 2000. All rights reserved.

Part I Overview

The procedures discussed in this document perform only the minimum amount of work necessary to execute a user program.

HALO: Post-Link Heap-Layout Optimisation

HALO: Post-Link Heap-Layout Optimisation

Joe Savage, University of Cambridge, UK, [email protected]
Timothy M. Jones, University of Cambridge, UK, [email protected]

Abstract

Today, general-purpose memory allocators dominate the landscape of dynamic memory management. While these solutions can provide reasonably good behaviour across a wide range of workloads, it is an unfortunate reality that their behaviour for any particular workload can be highly suboptimal. By catering primarily to average and worst-case usage patterns, these allocators deny programs the advantages of domain-specific optimisations, and thus may inadvertently place data in a manner that hinders performance, generating unnecessary cache misses and load stalls. To help alleviate these issues, we propose HALO: a post-link profile-guided optimisation tool that can improve the

1 Introduction

As the gap between memory and processor speeds continues to widen, efficient cache utilisation is more important than ever. While compilers have long employed techniques like basic-block reordering, loop fission and tiling, and intelligent register allocation to improve the cache behaviour of programs, the layout of dynamically allocated memory remains largely beyond the reach of static tools.

Today, when a C++ program calls new, or a C program malloc, its request is satisfied by a general-purpose allocator with no intimate knowledge of what the program does or how its data objects are used. Allocations are made through fixed, lifeless interfaces, and fulfilled by

Common Object File Format (COFF)

Application Report SPRAAO8–April 2009

Common Object File Format

ABSTRACT

The assembler and link step create object files in common object file format (COFF). COFF is an implementation of an object file format of the same name that was developed by AT&T for use on UNIX-based systems. This format encourages modular programming and provides powerful and flexible methods for managing code segments and target system memory. This appendix contains technical details about the Texas Instruments COFF object file structure. Much of this information pertains to the symbolic debugging information that is produced by the C compiler. The purpose of this application note is to provide supplementary information on the internal format of COFF object files.

Topics
1 COFF File Structure
2 File Header Structure
3 Optional File Header Format
4 Section Header Structure
5 Structuring Relocation Information
6 Symbol Table Structure and Content

iRMX® 286/iRMX® II Troubleshooting Guide

iRMX® 286/iRMX® II Troubleshooting Guide
Q1/1990
Order Number: 273472-001

Intel Corporation, 2402 W. Beardsley Road, Phoenix, Arizona, Mailstop DV2-42
Intel Corporation (UK) Ltd., Pipers Way, Swindon, Wiltshire SN3 1RJ, United Kingdom
Intel Japan KK, 5-6 Tokodai, Toyosato-machi, Tsukuba-gun, Ibaragi-Pref. 300-26

An Intel Technical Report from Technical Support Operations

Copyright ©1990, Intel Corporation

The information in this document is subject to change without notice. Intel Corporation makes no warranty of any kind with regard to this material, including, but not limited to, the implied warranties of merchantability and fitness for a particular purpose. Intel Corporation assumes no responsibility for any errors that may appear in this document. Intel Corporation makes no commitment to update nor to keep current the information contained in this document. Intel Corporation assumes no responsibility for the use of any circuitry other than circuitry embodied in an Intel product. No other circuit patent licenses are implied. Intel software products are copyrighted by and shall remain the property of Intel Corporation. Use, duplication or disclosure is subject to restrictions stated in Intel's software license, or as defined in FAR 52.227-7013. No part of this document may be copied or reproduced in any form or by any means without the prior written consent of Intel Corporation. The following are trademarks of Intel Corporation

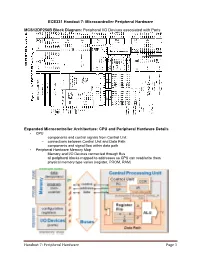

Memory Map: 68HC12 CPU and MC9S12DP256B Evaluation Board

ECE331 Handout 7: Microcontroller Peripheral Hardware

MCS12DP256B Block Diagram: Peripheral I/O Devices associated with Ports

Expanded Microcontroller Architecture: CPU and Peripheral Hardware Details
• CPU
– components and control signals from Control Unit
– connections between Control Unit and Data Path
– components and signal flow within data path
• Peripheral Hardware Memory Map
– Memory and I/O Devices connected through Bus
– all peripheral blocks mapped to addresses so CPU can read/write them
– physical memory type varies (register, PROM, RAM)

Memory Map: 68HCS12 CPU and MC9S12DP256B Evaluation Board
[Figure: memory map showing Configuration Register Set and Physical Memory]

HCS12 Modes of Operation

| MODC | MODB | MODA | Mode | Port A | Port B |
|---|---|---|---|---|---|
| 0 | 0 | 0 | special single chip | G.P. I/O | G.P. I/O |
| 0 | 0 | 1 | special expanded narrow | Addr/Data | Addr |
| 0 | 1 | 0 | special peripheral | Addr/Data | Addr/Data |
| 0 | 1 | 1 | special expanded wide | Addr/Data | Addr/Data |
| 1 | 0 | 0 | normal single chip | G.P. I/O | G.P. I/O |
| 1 | 0 | 1 | normal expanded narrow | Addr/Data | Addr |
| 1 | 1 | 0 | reserved | -- | -- |
| 1 | 1 | 1 | normal expanded wide | Addr/Data | Addr/Data |

G.P. = general purpose

HCS12 Ports for Expanded Modes

Memory Basics
• RAM: Random Access Memory
– historically defined as memory array with individual bit access
– refers to memory with both Read and Write capabilities
• ROM: Read Only Memory
– no capabilities for "online" memory Write operations
– Write typically requires high voltages or erasing by UV light
• Volatility of Memory
– volatile memory loses data over time or when power is removed
  • RAM is volatile
– non-volatile memory stores data even when power is removed
  • ROM is non-volatile
• Static vs.
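
The HCS12 modes-of-operation table in this handout is effectively a three-bit lookup keyed on MODC, MODB and MODA. A minimal sketch in Python that encodes the table rows as given; the names `HCS12_MODES` and `decode_mode` are hypothetical, and the code models only the table, not the hardware:

```python
# (MODC, MODB, MODA) -> (mode, Port A function, Port B function),
# transcribed from the handout's table. "G.P. I/O" = general-purpose I/O.
HCS12_MODES = {
    (0, 0, 0): ("special single chip", "G.P. I/O", "G.P. I/O"),
    (0, 0, 1): ("special expanded narrow", "Addr/Data", "Addr"),
    (0, 1, 0): ("special peripheral", "Addr/Data", "Addr/Data"),
    (0, 1, 1): ("special expanded wide", "Addr/Data", "Addr/Data"),
    (1, 0, 0): ("normal single chip", "G.P. I/O", "G.P. I/O"),
    (1, 0, 1): ("normal expanded narrow", "Addr/Data", "Addr"),
    (1, 1, 0): ("reserved", "--", "--"),
    (1, 1, 1): ("normal expanded wide", "Addr/Data", "Addr/Data"),
}


def decode_mode(modc: int, modb: int, moda: int) -> tuple:
    """Return (mode name, Port A function, Port B function) for the pin levels."""
    return HCS12_MODES[(modc, modb, moda)]


if __name__ == "__main__":
    for bits in sorted(HCS12_MODES):
        print(bits, *decode_mode(*bits), sep=" | ")
```

In this table MODC separates the special modes (0) from the normal modes (1), apart from the reserved 1 1 0 combination.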

Virtual Memory - Paging

Virtual memory - Paging
Johan Montelius, KTH, 2020

The process
[Figure: memory layout for a 32-bit Linux process, showing code (.text), data, heap, stack and kernel, with addresses 0x00000000, 0xC0000000 and 0xffffffff marked]

Segments - a possible solution
[Figure: processes in virtual space; address translation by MMU (base and bounds); physical memory]

One problem
External fragmentation: free areas in physical memory that are hard to utilize. Solution: allocate larger segments ... which leads to internal fragmentation.

Another problem
We're reserving physical memory that is not used.
[Figure: virtual space with used regions (code) mapped onto physical memory, part of which is not used]

Let's try again
It's easier to handle fixed size memory blocks. Can we map a process virtual space to a set of equal size blocks? An address is interpreted as a virtual page number (VPN) and an offset.

Remember the segmented MMU
[Figure: segmented MMU. The virtual address is split into a segment index and an offset; the offset is checked against the bounds from the segment table (exception if not within bounds) and added to the base to form the physical address]

The paging MMU
[Figure: paging MMU. The virtual address is split into a VPN and an offset; the page table is checked for whether the page is available (exception if not) and the offset is added to form the physical address]

The MMU
[Figure: virtual address → Segmentation (within bounds, else exception) → linear address → Paging (page available, else exception) → physical address]

A note on the x86 architecture
The x86-32 architecture supports both segmentation and paging. A virtual address is translated to a linear address using a segmentation table. The linear address is then translated to a physical address by paging. Linux and Windows do not use segmentation to separate code, data or stack. The x86-64 (the 64-bit version of the x86 architecture) has dropped many features for segmentation.
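
The VPN/offset split and the page-table lookup described in these slides can be sketched in a few lines. This is a minimal illustration in Python, assuming 4 KiB pages (12 offset bits) and a single-level page table modeled as a dict from VPN to physical frame number; `PAGE_BITS`, `PageFault`, `split` and `translate` are hypothetical names, not taken from the slides:

```python
PAGE_BITS = 12                 # assumed: 4 KiB pages
PAGE_SIZE = 1 << PAGE_BITS


class PageFault(Exception):
    """Raised when the page table has no mapping for the VPN."""


def split(vaddr: int) -> tuple:
    """Interpret a virtual address as (virtual page number, offset)."""
    return vaddr >> PAGE_BITS, vaddr & (PAGE_SIZE - 1)


def translate(vaddr: int, page_table: dict) -> int:
    """Translate a virtual address using a VPN -> frame number mapping."""
    vpn, offset = split(vaddr)
    frame = page_table.get(vpn)
    if frame is None:
        # Corresponds to the "exception" arrow in the paging MMU diagram.
        raise PageFault(f"no mapping for VPN {vpn:#x}")
    # The offset is carried over unchanged into the physical address.
    return (frame << PAGE_BITS) | offset


if __name__ == "__main__":
    table = {0x08048: 0x00123}
    print(hex(translate(0x08048ABC, table)))
```

Because every page has the same size, any free frame can back any virtual page, which is what removes the external fragmentation seen with variable-size segments.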

CS 151: Introduction to Computers

Information Technology: Introduction to Computers
Handout One

Computer Hardware

1. Components
a. System board, Main board, Motherboard
b. Central Processing Unit (CPU)
c. RAM (Random Access Memory): SDRAM, DDR-RAM, RAMBUS
d. Expansion cards
   i. ISA - Industry Standard Architecture
   ii. PCI - Peripheral Component Interconnect
   iii. PCMCIA - Personal Computer Memory Card International Association
   iv. AGP - Accelerated Graphics Port
e. Sound
f. Network Interface Card (NIC)
g. Modem
h. Graphics Card (AGP - Accelerated Graphics Port)
i. Disk drives (A:\ floppy diskette; B:\ obsolete 5.25" floppy diskette; C:\ internal hard disk; D:\ CD-ROM (Compact Disk-Read Only Memory), CD-R/RW, DVD-ROM/R/RW)

2. Peripherals
a. Monitor
b. Printer
c. Keyboard
d. Mouse
e. Joystick
f. Scanner
g. Web cam

Operating system: a collection of files and small programs that enables input and output. The operating system transforms the computer into a productive entity for human use.

BIOS (Basic Input Output System): date, time, language
DOS: Disk Operating System
Windows (dual, parallel development for home and industry):

| Home | Industry |
|---|---|
| Windows 3.1 | Windows 3.11 (Windows for Workgroups) |
| Windows 95 | Windows NT (New Technology) |
| Windows 98 | Windows NT 4.0 |
| Windows Me | Windows 2000 |
| Windows XP Home | Windows XP Professional |

The Evolution of Windows

In the early 1980s, IBM introduced the Personal Computer (PC) using the Intel 8088 processor and Microsoft's Disk Operating System (DOS). This was a scaled-down computer aimed at business which allowed a single user to execute a single program. Many changes have been introduced over the last 20 years to bring us to where we are now.