An Empirical Study of User Interfaces for Immersive Virtual Environments

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Computer Essentials – Session 1 – Step-By-Step Guide

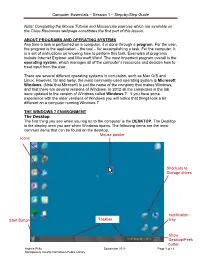

Computer Essentials – Session 1 – Step-by-Step Guide Note: Completing the Mouse Tutorial and Mousercise exercise, which are available on the Class Resources webpage, constitutes the first part of this lesson. ABOUT PROGRAMS AND OPERATING SYSTEMS Any time a task is performed on a computer, it is done through a program. For the user, the program is the application – the tool – for accomplishing a task. For the computer, it is a set of instructions on how to perform this task. Examples of programs include Internet Explorer and Microsoft Word. The most important program overall is the operating system, which manages all of the computer’s resources and decides how to treat input from the user. There are several different operating systems in circulation, such as Mac O/S and Linux. However, far and away, the most commonly used operating system is Microsoft Windows. (Note that Microsoft is just the name of the company that makes Windows, and that there are several versions of Windows.) In 2012 all the computers in the lab were updated to the version of Windows called Windows 7. If you have some experience with the older versions of Windows you will notice that things look a bit different on a computer running Windows 7. THE WINDOWS 7 ENVIRONMENT The Desktop The first thing you see when you log on to the computer is the DESKTOP. The Desktop is the display area you see when Windows opens. The following items are the most common items that can be found on the desktop: Mouse pointer; Icons; Shortcuts to Storage drives; Notification tray; Start Button; Taskbar; Show Desktop/Peek button. Andrea Philo September 2012 Page 1 of 13 Montgomery County-Norristown Public Library Parts of the Windows 7 Desktop Icon: A picture representing a program or file or places to store files.

User Interface Design Issues in HCI

IJCSNS International Journal of Computer Science and Network Security, VOL.18 No.8, August 2018 153 User Interface Design Issues In HCI Waleed Iftikhar, Muhammad Sheraz Arshad Malik, Shanza Tariq, Maha Anwar, Jawad Ahmad, M. Saad sultan Department of Computing, Riphah International University, Faisalabad, Pakistan Summary This paper presents an important analysis on a literature review which has the findings in design issues from the year 1999 to 2018. This study basically discusses about all the issues related to design and user interface, and also gives the solutions to make the designs or user interface more attractive and understandable. This study is the guideline to solve the main issues of user interface. There is important to secure the system for modern applications. The use of internet is quickly growing from years. Because of this fast travelling lifestyle, where they lets the user to attach with systems from everywhere. When user is ignoring the functionalities in the system then the system is not secure but, in

Command line is the interface that allows the user to interact with the computer by directly using the commands. But there is an issue that the commands cannot be changed, they are fixed and computer only understands the exact commands. Graphical user interface is the interface that allows the user to interact with the system, because this is user friendly and easy to use. This includes the graphics, pictures and also attractive for all type of users. The command line is black and white interface. This interface is also known as WIMPS because it uses windows, icons, menus, pointers.

Designing for Increased Autonomy & Human Control

IFIP Workshop on Intelligent Vehicle Dependability and Security (IVDS) January 29, 2021 Designing for Increased Autonomy & Human Control Ben Shneiderman @benbendc Founding Director (1983-2000), Human-Computer Interaction Lab Professor, Department of Computer Science Member, National Academy of Engineering Photo: BK Adams IFIP Workshop on Intelligent Vehicle Dependability and Security (IVDS) January 29, 2021 Designing for Increased Automation & Human Control Ben Shneiderman @benbendc Founding Director (1983-2000), Human-Computer Interaction Lab Professor, Department of Computer Science Member, National Academy of Engineering Photo: BK Adams What is Human-Centered AI? Human-Centered AI Amplify, Augment, Enhance & Empower People Human Responsibility Supertools and Active Appliances Visual Interfaces to Prevent/Reduce Explanations Audit Trails to Analyze Failures & Near Misses Independent Oversight → Reliable, Safe & Trustworthy Supertools Digital Camera Controls Navigation Choices Texting Autocompletion Spelling correction Active Appliances Coffee maker, Rice cooker, Blender Dishwasher, Clothes Washer/Dryer Implanted Cardiac Pacemakers NASA Mars Rovers are Tele-Operated DaVinci Tele-Operated Surgery “Robots don’t perform surgery. Your surgeon performs surgery with da Vinci by using instruments that he or she guides via a console.” https://www.davincisurgery.com/ Bloomberg Terminal A 2-D HCAI Framework Designing the User Interface Balancing automation & human control First Edition: 1986 Designing the User Interface Balancing automation &

Design and Evaluation of a Perceptually Adaptive Rendering System for Immersive Virtual Reality Environments Kimberly Ann Weaver Iowa State University

Iowa State University Capstones, Theses and Retrospective Theses and Dissertations Dissertations 2007 Design and evaluation of a perceptually adaptive rendering system for immersive virtual reality environments Kimberly Ann Weaver Iowa State University Follow this and additional works at: https://lib.dr.iastate.edu/rtd Part of the Cognitive Psychology Commons, and the Computer Sciences Commons Recommended Citation Weaver, Kimberly Ann, "Design and evaluation of a perceptually adaptive rendering system for immersive virtual reality environments" (2007). Retrospective Theses and Dissertations. 14895. https://lib.dr.iastate.edu/rtd/14895 This Thesis is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Retrospective Theses and Dissertations by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. Design and evaluation of a perceptually adaptive rendering system for immersive virtual reality environments by Kimberly Ann Weaver A thesis submitted to the graduate faculty in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE Major: Human Computer Interaction Program of Study Committee: Derrick Parkhurst (Major Professor) Chris Harding Shana Smith Iowa State University Ames, Iowa 2007 Copyright © Kimberly Ann Weaver, 2007. All rights reserved. UMI Number: 1449653 Copyright 2007 by Weaver, Kimberly Ann All rights reserved. UMI Microform 1449653 Copyright 2008 by ProQuest Information and Learning Company. All rights reserved. This microform edition is protected against unauthorized copying under Title 17, United States Code. ProQuest Information and Learning Company 300 North Zeeb Road P.O. -

Exploring the User Interface Affordances of File Sharing

CHI 2006 Proceedings • Activity: Design Implications April 22-27, 2006 • Montréal, Québec, Canada Share and Share Alike: Exploring the User Interface Affordances of File Sharing Stephen Voida1, W. Keith Edwards1, Mark W. Newman2, Rebecca E. Grinter1, Nicolas Ducheneaut2 1GVU Center, College of Computing, Georgia Institute of Technology, 85 5th Street NW, Atlanta, GA 30332–0760, USA {svoida, keith, beki}@cc.gatech.edu 2Palo Alto Research Center, 3333 Coyote Hill Road, Palo Alto, CA 94304, USA {mnewman, nicolas}@parc.com ABSTRACT With the rapid growth of personal computer networks and the Internet, sharing files has become a central activity in computer use. The ways in which users control the what, how, and with whom of sharing are dictated by the tools they use for sharing; there are a wide range of sharing practices, and hence a wide range of tools to support these practices. In practice, users’ requirements for certain sharing features may dictate their choice of tool, even though the other affordances available through that tool may not be an ideal match to the desired manner of sharing.

and folders shared with other users on a single computer, often as a default system behavior; files shared with other computers over an intranet or home network; and files shared with other users around the world on web sites and FTP servers. Users also commonly exchange copies of documents as email attachments, transfer files during instant messaging sessions, post digital photos to online photo album services, and swap music files using peer–to–peer file sharing applications. Despite these numerous venues for and implementations of

Adding the Maine Prescription Monitoring System As a Favorite

Adding the Maine Prescription Monitoring System as a Favorite Beginning 1/1/17 all newly prescribed narcotic medications ordered when discharging a patient from the Emergency Department or an Inpatient department must be recorded in the Maine prescription monitoring system (Maine PMP). Follow these steps to access the Maine PMP site from the SeHR/Epic system and create shortcuts to the activity to simplify access. Access the Maine PMP site from hyperspace 1. Follow this path for the initial launch of the Maine PMP site: Epic>Help>Maine PMP Program Please note: the Maine PMP database is managed and maintained by the State of Maine and does NOT support single sign-on 2. Click Login to enter your Maine PMP user name and password a. If you know your user name and password, click Login b. To retrieve your user name click Retrieve User Name c. To retrieve your password click Retrieve Password Save the Maine PMP site as a favorite under the Epic button After accessing the site from the Epic menu path you can make the link a favorite under the Epic menu. 1. Click the Epic button; the Maine PMP program hyperlink will appear under your Recent activities. Click the Star icon to save it as a favorite. After starring the activity it becomes a favorite stored under the Epic button Save the Maine PMP site as a Quick Button on your Epic Toolbar After accessing the site from the Epic menu path you may choose to make the Maine PMP hyperlink a quick button on your main Epic toolbar.

The Application of Virtual Reality in Engineering Education

applied sciences Review The Application of Virtual Reality in Engineering Education Maged Soliman 1 , Apostolos Pesyridis 2,3, Damon Dalaymani-Zad 1,*, Mohammed Gronfula 2 and Miltiadis Kourmpetis 2 1 College of Engineering, Design and Physical Sciences, Brunel University London, London UB3 3PH, UK; [email protected] 2 College of Engineering, Alasala University, King Fahad Bin Abdulaziz Rd., Dammam 31483, Saudi Arabia; [email protected] (A.P.); [email protected] (M.G.); [email protected] (M.K.) 3 Metapower Limited, Northwood, London HA6 2NP, UK * Correspondence: [email protected] Abstract: The advancement of VR technology through the increase in its processing power and decrease in its cost and form factor induced the research and market interest away from the gaming industry and towards education and training. In this paper, we argue and present evidence from vast research that VR is an excellent tool in engineering education. Through our review, we deduced that VR has positive cognitive and pedagogical benefits in engineering education, which ultimately improves the students’ understanding of the subjects, performance and grades, and education experience. In addition, the benefits extend to the university/institution in terms of reduced liability, infrastructure, and cost through the use of VR as a replacement to physical laboratories. There are added benefits of equal educational experience for the students with special needs as well as distance learning students who have no access to physical labs. Furthermore, recent reviews identified that VR applications for education currently lack learning theories and objectives integration in their design. -

User Interface for Volume Rendering in Virtual Reality Environments

User Interface for Volume Rendering in Virtual Reality Environments Jonathan Klein∗ Dennis Reuling† Jan Grimm‡ Andreas Pfau§ Damien Lefloch¶ Martin Lambers‖ Andreas Kolb∗∗ Computer Graphics Group University of Siegen ABSTRACT Volume Rendering applications require sophisticated user interaction for the definition and refinement of transfer functions. Traditional 2D desktop user interface elements have been developed to solve this task, but such concepts do not map well to the interaction devices available in Virtual Reality environments. In this paper, we propose an intuitive user interface for Volume Rendering specifically designed for Virtual Reality environments. The proposed interface allows transfer function design and refinement based on intuitive two-handed operation of Wand-like controllers. Additional interaction modes such as navigation and clip plane manipulation are supported as well. The system is implemented using the Sony PlayStation Move

the gradient or the curvature at the voxel location into account and require even more complex user interfaces. Since Virtual Environments are especially well suited to explore spatial properties of complex 3D data, bringing Volume Rendering applications into such environments is a natural step. However, defining new user interfaces suitable both for the Virtual Environment and for the Volume Rendering application is difficult. Previous approaches mainly focused on porting traditional 2D point-and-click concepts to the Virtual Environment [8, 5, 9]. This tends to be unintuitive, to complicate the interaction, and to make only limited use of available interaction devices. In this paper, we propose an intuitive 3D user interface for Volume Rendering based on interaction devices that are suitable for Virtual Reality environments.

Effective User Interface Design for Consumer Trust Two Case Studies

2005:097 SHU MASTER'S THESIS Effective User Interface Design for Consumer Trust Two Case Studies XILING ZHOU XIANGCHUN LIU Luleå University of Technology MSc Programme in Electronic Commerce Department of Business Administration and Social Sciences Division of Industrial marketing and e-commerce 2005:097 SHU - ISSN: 1404-5508 - ISRN: LTU-SHU-EX--05/097--SE Luleå University of Technology E-Commerce ACKNOWLEDGEMENT This thesis is the result of half a year of work whereby we have been accompanied and supported by many people. It is a pleasant aspect that we could have this opportunity to express our gratitude to all of them. First, we are deeply indebted to our supervisor Prof. Lennart Persson who is from Division of Industrial Marketing at LTU. He helped us with stimulating suggestions and encouragement in all the time of research and writing of this thesis. Without his never-ending support during this process, we could not have done this thesis. Especially, we would like to express our gratitude to all of participants, who have spent their valuable time to response the interview questions and discuss with us. Finally, we would like to thank our family and friends. I, Zhou Xiling am very grateful for everyone who gave me support and encouragement during this process. Especially I felt a deep sense of gratitude to my father and mother who formed part of my vision and taught me the good things that really matter in the life. I also want to thank my friend Tang Yu for his never-ending support and good advices. I, Liu XiangChun am very grateful for my parents, for their endless love and support. -

Lightdb: a DBMS for Virtual Reality Video

LightDB: A DBMS for Virtual Reality Video Brandon Haynes, Amrita Mazumdar, Armin Alaghi, Magdalena Balazinska, Luis Ceze, Alvin Cheung Paul G. Allen School of Computer Science & Engineering University of Washington, Seattle, Washington, USA {bhaynes, amrita, armin, magda, luisceze, akcheung}@cs.washington.edu http://lightdb.uwdb.io ABSTRACT We present the data model, architecture, and evaluation of LightDB, a database management system designed to efficiently manage virtual, augmented, and mixed reality (VAMR) video content. VAMR video differs from its two-dimensional counterpart in that it is spherical with periodic angular dimensions, is nonuniformly and continuously sampled, and applications that consume such videos often have demanding latency and throughput requirements. To address these challenges, LightDB treats VAMR video data as a logically-continuous six-dimensional light field. Furthermore, LightDB supports a rich set of operations over light fields, and automatically transforms declarative queries into executable physical plans.

spherical panoramic VR videos (a.k.a. 360° videos), encoding one stereoscopic frame of video can involve processing up to 18× more bytes than an ordinary 2D video [30]. AR and MR video applications, on the other hand, often mix smaller amounts of synthetic video with the world around a user. Similar to VR, however, these applications have extremely demanding latency and throughput requirements since they must react to the real world in real time. To address these challenges, various specialized VAMR systems have been introduced for preparing and serving VAMR video data (e.g., VRView [71], Facebook Surround 360 [20], YouTube VR [75], Google Poly [25], Lytro VR [41], Magic Leap Cre-

A High Level User Interface for Topology Controlled Volume Rendering

Topological Galleries: A High Level User Interface for Topology Controlled Volume Rendering Brian MacCarthy, Hamish Carr and Gunther H. Weber Abstract Existing topological interfaces to volume rendering are limited by their reliance on sophisticated knowledge of topology by the user. We extend previous work by describing topological galleries, an interface for novice users that is based on the design galleries approach. We report three contributions: an interface based on hierarchical thumbnail galleries to display the containment relationships between topologically identifiable features, the use of the pruning hierarchy instead of branch decomposition for contour tree simplification, and drag-and-drop transfer function assignment for individual components. Initial results suggest that this approach suffers from limitations due to rapid drop-off of feature size in the pruning hierarchy. We explore these limitations by providing statistics of feature size as function of depth in the pruning hierarchy of the contour tree. 1 Introduction The overall goal of scientific visualisation is to provide useful insight into existing data. As visualisation techniques have become more complex, so have the interfaces for controlling them. In cases where the interface has been simplified, it has often been at the cost of the functionality of the program. Thus, while expert users are capable of using state of the art technology, novice users who would otherwise have uses for this technology are restricted by unintuitive interfaces. Topology-based volume rendering, while powerful, is difficult to apply successfully. This difficulty is due to the fact that the interface used to design transfer func-

Brian MacCarthy University College Dublin, Belfield, Dublin 4, Ireland, e-mail: [email protected] Hamish Carr University of Leeds, Woodhouse Lane, Leeds LS2 9JT, England e-mail: [email protected] Gunther H.

Xfel Database User Interface P.D

THPB039 Proceedings of SRF2015, Whistler, BC, Canada XFEL DATABASE USER INTERFACE P.D. Gall, V. Gubarev, D. Reschke, A. Sulimov, J.H. Thie, S. Yasar DESY, Notkestrasse 85, 22607 Hamburg, Germany Abstract The XFEL database plays an important role for an effective part of the quality control system for the whole cavity production and preparation process for the European XFEL on a very detailed level. Database has the Graphical User Interface based on the web-technologies, and it can be accessed via low level Oracle SQL. INTRODUCTION Beginning from TTF a relational database for cavities was developed at DESY using the ORACLE Relational Database Management System (RDBMS) [1]. The database is dynamically accessible from everywhere via a graphical WEB interface based on ORACLE. At the moment we use the version Oracle Developer 10g Forms and Reports. The graphical tools are developed in Java. The database is created to store data for more than 840 cavities coming from the serial production and about 100 modules.

Using this protection system we have divided all customers into different groups: ● RI group has permission to view production results from RI only and open information ● ZANON group has permission to view production results from ZANON only and open information ● DESY group has permission to view all results from all companies ● Not authorised people have access to the open information only To get authorised access to the database one have to contact the responsible persons listed in the XFEL database GUI pages. GRAPHICAL USER INTERFACE The XFEL database GUI was developed to meet the requirements of experts involved. According to the people needs the GUI applications can logically be divided into