A Close Look at the Transient Sky in a Neighbouring Galaxy

Recommended publications

Unique Sky Survey Brings New Objects Into Focus 15 June 2009

An innovative sky survey has begun returning images that will be used to detect unprecedented numbers of powerful cosmic explosions, called supernovae, in distant galaxies, as well as stars of variable brightness in our own Milky Way.

This is the Andromeda galaxy, as seen with the new PTF camera on the Samuel Oschin Telescope at Palomar Observatory. This image covers 3 square degrees of sky, more than 15 times the size of the full moon. Credit: Nugent & Poznanski (LBNL), PTF collaboration

The Palomar Transient Factory is a collaboration of scientists and engineers from institutions around the world, including the California Institute of Technology (Caltech); the University of California, Berkeley, and the Lawrence Berkeley National Laboratory (LBNL); Columbia University; Las Cumbres Observatory; the Weizmann Institute of Science in Israel; and Oxford University.

During the PTF process, the automated wide-angle 48-inch Samuel Oschin Telescope at Caltech's Palomar Observatory scans the skies using a 100-megapixel camera. The flood of images, more than 100 gigabytes every night, is then beamed off the mountain via the High Performance Wireless Research and Education Network (a high-speed microwave data connection to the Internet) and then to LBNL's National Energy Research Scientific Computing Center. There, computers analyze the data and compare it to images previously obtained at Palomar. More computers, using a type of artificial-intelligence software, sift through the results to identify the most interesting "transient" sources: those that vary in brightness or position.

Within minutes of a candidate transient's discovery, … human intervention.
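The comparison step described above checks new images against earlier images of the same field. This is commonly done by image differencing. Below is a minimal sketch under several assumptions. The two images are taken to be already aligned and flux-matched, and a single noise level `sigma` is assumed to apply to every pixel. The function names and the 5-sigma threshold are illustrative choices, not PTF's actual software. The artificial-intelligence stage the release mentions is not modelled.

```python
def difference_image(new, reference):
    """Pixel-by-pixel difference new - reference (images as lists of rows)."""
    return [[n - r for n, r in zip(row_new, row_ref)]
            for row_new, row_ref in zip(new, reference)]


def find_transients(new, reference, sigma, threshold=5.0):
    """Return (row, col, delta) for pixels whose change exceeds threshold * sigma.

    A positive delta means the pixel brightened (e.g. a new source appeared);
    a negative delta means it faded.
    """
    diff = difference_image(new, reference)
    return [(i, j, delta)
            for i, row in enumerate(diff)
            for j, delta in enumerate(row)
            if abs(delta) > threshold * sigma]


# Toy 3x3 images (hypothetical values), assumed aligned and flux-matched.
reference = [[10.0, 10.0, 10.0],
             [10.0, 50.0, 10.0],
             [10.0, 10.0, 10.0]]
new = [[10.5, 9.8, 10.0],
       [10.0, 50.2, 10.0],
       [10.0, 10.1, 80.0]]  # a new source has appeared at row 2, column 2

print(find_transients(new, reference, sigma=1.0))
```

With these toy arrays the call prints `[(2, 2, 70.0)]`. Only the pixel where a new source appeared clears the threshold, and the small fluctuations elsewhere are ignored. A source that has moved would show up as a pair: a positive residual at its new position and a negative one at its old position.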

The Restless Universe: How the Periodic Table Got Built Up

This symposium will feature an Academy Lecture by Shrinivas Kulkarni, professor of Astronomy, California Institute of Technology, Pasadena, United States.

Date: Friday 24 May 2019, 7.00 p.m. – 8.30 p.m.
Venue: Public Library Amsterdam, OBA Oosterdok, Theaterzaal OBA, Oosterdokskade 143, 1011 DL Amsterdam

Abstract
The Universe began with only hydrogen and helium. It is cosmic explosions that build up the periodic table! Astronomers have now identified several classes of cosmic explosions, of which supernovae constitute the largest group. The Palomar Transient Factory was an innovative two-telescope experiment. Its successor, the Zwicky Transient Facility (ZTF), is a high-tech project with gigantic CCD cameras and a sophisticated software system, squarely aimed at systematically finding "blips and booms in the middle of the night". Shrinivas Kulkarni will talk about the great returns and surprises from this project: super-luminous supernovae, new classes of transients, new light on the progenitors of supernovae, detection of gamma-ray bursts by purely optical techniques, and troves of pulsating stars and binary stars. ZTF is poised to become the stepping stone for the Large Synoptic Survey Telescope.

Short biography
S. R. Kulkarni is the George Ellery Hale Professor of Astronomy at the California Institute of Technology. He served as Executive Officer for Astronomy (1997–2000) and as Director of Caltech Optical Observatories from 2006 to 2018. He was recognized by Cornell University with an A. D. White Professor-at-Large appointment. Kulkarni received an honorary doctorate from Radboud University Nijmegen, The Netherlands.

Vocabulary of Definitions: P

Library Service
Historical Catalogue of the Library of the Observatoire de Nice
Source: Monographie de l'Observatoire de Nice / Charles Garnier, 1892. Marc Heller © Observatoire de la Côte d'Azur, February 2012

Presentation
"One finds… to the west… the library, with its six thousand two hundred volumes and its thirty journals or periodical collections…." (Main façade of the Library; photograph attributed to A. Michaud, 188?. Marc Heller © Observatoire de la Côte d'Azur)

It was in these terms that Henri Joseph Anastase Perrotin described the library of the Observatoire de Nice in 1899, in the introduction to volume 1 of the Annales de l'Observatoire de Nice (note 1). A catalogue of the journals and works (note 2), arranged alphabetically by author and place, described the library's historical collection. The present document is a revised, completed and enriched version of that catalogue. (The library, interior view by the photographer Jean Giletta, 191?. Marc Heller © Observatoire de la Côte d'Azur)

Each reference is reproduced exactly as it appeared. It is supplemented with a bibliographic record and, where available, an electronic link to the digitized version. Titles and documents not yet identified are shown in italics. An index of authors and journal titles concludes the document.

Notes
1. Introduction, Annales de l'Observatoire de Nice publiés sous les auspices du Bureau des longitudes par M. Perrotin. Paris, Gauthier-Villars, 1899, vol. 1, p. XIV.
2. Catalogue de la bibliothèque, Annales de l'Observatoire de Nice publiés sous les auspices du Bureau des longitudes par M. Perrotin. Paris, Gauthier-Villars, 1899, vol. 1, p. 1.

2015 Scialog-TDA Conference Booklet

Time Domain Astrophysics: Stars and Explosions
The First Annual Scialog Conference, October 22–25, 2015, at Biosphere 2

Conference Objectives
• Engage in dialog with the goal of accelerating high-risk/high-reward research.
• Identify and analyze bottlenecks in advancing time domain astrophysics and develop approaches for breakthroughs.
• Build a creative, better-networked community that is more likely to produce breakthroughs.
• Form teams to write proposals to seed novel projects based on highly innovative ideas that emerge at the conference.

Conference Process
• Brainstorming is welcome; don't be afraid to say what comes to mind.
• Consider the possibility of unorthodox or unusual ideas without immediately dismissing them.
• Discuss, build upon and even constructively criticize each other's ideas, in a spirit of cooperative give and take.
• Make comments concise to avoid monopolizing the dialog.

Contents: From the President; From the Program Director; Agenda; Keynote Speakers; Proposal Guidelines; Discussion Facilitators; Scialog Fellows; RCSA Board Members & Scientific Staff

From the President
Welcome to the first Scialog Conference on Time Domain Astrophysics. As befits a topic dealing with change and variability, we ask you to shift your thinking somewhat for the next few days and accept the unique approach to scientific dialog that is a Scialog hallmark. It requires that you actively participate in the discussions by fearlessly brainstorming and saying what comes to mind, even if your thoughts on the topic under discussion may be considered unorthodox or highly speculative. The Scialog methodology also requires that you consider the novel ideas of others without summarily rejecting them, although constructive criticism is always appropriate.

Messier Objects

From the Stocker Astroscience Center at Florida International University, Miami, Florida

The Messier Project
Main contributors:
• Daniel Puentes
• Steven Revesz
• Bobby Martinez
• Gabriel Salazar
• Riya Gandhi
• Dr. James Webb, Director, Stocker Astroscience Center
All images were reduced and combined using the MIRA image processing software (Mirametrics).

What are Messier Objects?
Messier objects are a list of astronomical sources compiled by Charles Messier, an 18th- and early-19th-century astronomer. He created the list to catalogue distracting objects to avoid while hunting for comets. The list now contains 110 objects, many of which are among the most famous astronomical bodies known. It includes planetary nebulae, star clusters and other galaxies. (Bobby Martinez)

The Telescope
The telescope used to take these images is an Astronomical Consultants and Equipment (ACE) 24-inch (0.61-meter) Ritchey-Chrétien reflecting telescope. It has a focal ratio of f/6.2 and is supported on a structure independent of the building that houses it. At the Cassegrain focus it carries a Finger Lakes 1k×1k CCD camera cooled to −30 °C. It also has dual filter wheels: the first contains UBVRI scientific filters and the second RGBL color filters.

Messier 1
Found 6,500 light-years away in the constellation of Taurus, the Crab Nebula (known as M1) is a supernova remnant. The supernova that formed it was observed by Chinese, Japanese and Arab astronomers in 1054 AD as an incredibly bright "guest star" that remained visible for over twenty-two months. The star that exploded is thought to have been an evolved star roughly ten times more massive than the Sun.
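As a worked example of what the quoted optics imply, the focal length is the aperture times the focal ratio. The plate scale then follows from the small-angle relation: arcseconds per radian (about 206,265) divided by the focal length. The sketch below assumes the nominal 24-inch figure is the full clear aperture. The CCD pixel size is not given in the text, so the 24 µm value passed to the hypothetical helper `pixel_scale_arcsec` is only a placeholder.

```python
import math

# Arcseconds in one radian (about 206,265).
ARCSEC_PER_RADIAN = math.degrees(1.0) * 3600.0


def focal_length_m(aperture_m, focal_ratio):
    """Effective focal length: aperture diameter multiplied by the focal ratio."""
    return aperture_m * focal_ratio


def plate_scale_arcsec_per_mm(focal_m):
    """Sky angle (arcsec) that maps onto 1 mm of the focal plane."""
    return ARCSEC_PER_RADIAN / (focal_m * 1000.0)


def pixel_scale_arcsec(plate_scale, pixel_size_um):
    """Sky angle (arcsec) covered by one detector pixel of the given size."""
    return plate_scale * pixel_size_um / 1000.0


aperture = 24 * 0.0254              # 24 inches expressed in metres (0.6096 m)
f = focal_length_m(aperture, 6.2)   # f/6.2 as quoted in the text
scale = plate_scale_arcsec_per_mm(f)
print(f"focal length ~ {f:.2f} m, plate scale ~ {scale:.1f} arcsec/mm")

# Hypothetical 24-micrometre pixels, NOT taken from the camera's specification.
print(f"pixel scale ~ {pixel_scale_arcsec(scale, 24.0):.2f} arcsec/pixel")
```

The first line printed is `focal length ~ 3.78 m, plate scale ~ 54.6 arcsec/mm`. The pixel scale would need to be recomputed with the camera's real pixel size.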

Active Galactic Nuclei and Their Neighbours

CCD Photometric Observations of Active Galactic Nuclei and their Neighbours
by Traianou Efthalia
A dissertation submitted in partial fulfillment of the requirements for the degree of Ptychion (Physics) at Aristotle University of Thessaloniki, September 2016
Supervisor: Manolis Plionis, Professor

To my loved ones

Many thanks to Manolis Plionis for agreeing to be my thesis adviser.

TABLE OF CONTENTS
Dedication
List of Figures
List of Tables
List of Appendices
Abstract
I. Introduction
II. Active Galactic Nuclei
  2.1 Early History of AGNs
  2.2 AGN Phenomenology
    2.2.1 Seyfert Galaxies
    2.2.2 Low-Ionization Nuclear Emission-Line Regions (LINERs)
    2.2.3 ULIRGs
    2.2.4 Radio Galaxies
    2.2.5 Quasars or QSOs
    2.2.6 Blazars
  2.3 The Unification Paradigm
  2.4 Beyond the Unified Model
III. Research Goal and Methodology
  3.1 Torus
  3.2 Hα Balmer Line
  3.3 Galaxy-Galaxy Interactions
  3.4 Our Aim
IV. Observations
  4.1 The Telescope

Spatial Distribution of Galactic Globular Clusters: Distance Uncertainties and Dynamical Effects

Juliana Crestani Ribeiro de Souza
Spatial Distribution of Galactic Globular Clusters: Distance Uncertainties and Dynamical Effects
Porto Alegre, 2017

Dissertation prepared under the supervision of Prof. Dr. Eduardo Luis Damiani Bica, with co-supervision by Prof. Dr. Charles José Bonato. It was submitted to the Instituto de Física of the Universidade Federal do Rio Grande do Sul in partial fulfillment of the requirements for the degree of Master of Physics (Mestre em Física).

Acknowledgements
To my parents, who supported me and made this possible, in a time and place where being at a university was just a distant dream. To my dearest friends Elisabeth, Robert, Augusto, and Natália, who so many times helped me go from "I give up" to "I'll try once more". To my cats Kira, Fen, and Demi, who lazily join me in bed at the end of the day and make everything worthwhile.

"But, first of all, it will be necessary to explain what is our idea of a cluster of stars, and by what means we have obtained it. For an instance, I shall take the phenomenon which presents itself in many clusters: It is that of a number of lucid spots, of equal lustre, scattered over a circular space, in such a manner as to appear gradually more compressed towards the middle; and which compression, in the clusters to which I allude, is generally carried so far, as, by imperceptible degrees, to end in a luminous center, of a resolvable blaze of light." William Herschel, 1789

Abstract
We provide a sample of 170 Galactic Globular Clusters (GCs) and analyse its spatial distribution properties.

And Ecclesiastical Cosmology

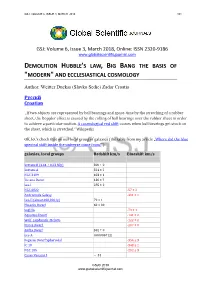

GSJ: Volume 6, Issue 3, March 2018, Online: ISSN 2320-9186, www.globalscientificjournal.com

DEMOLITION HUBBLE'S LAW, BIG BANG THE BASIS OF "MODERN" AND ECCLESIASTICAL COSMOLOGY
Author: Weitter Duckss (Slavko Sedic), Zadar, Croatia

"If two objects are represented by ball bearings and space-time by the stretching of a rubber sheet, the Doppler effect is caused by the rolling of ball bearings over the rubber sheet in order to achieve a particular motion. A cosmological red shift occurs when ball bearings get stuck on the sheet, which is stretched." (Wikipedia)

Let us check that against our Local Group of galaxies. The table below is from my article "Where did the blue spectral shift inside the universe come from?". Values are in km/s, with positive values for redshifts and negative values for blueshifts. Two entries are given as a redshift z instead.

Galaxy: radial velocity (km/s)
Sextans B (4.44 ± 0.23 Mly): 300 ± 0
Sextans A: 324 ± 2
NGC 3109: 403 ± 1
Tucana Dwarf: 130 ± ?
Leo I: 285 ± 2
NGC 6822: -57 ± 2
Andromeda Galaxy: -301 ± 1
Leo II (about 690,000 ly): 79 ± 1
Phoenix Dwarf: 60 ± 30
SagDIG: -79 ± 1
Aquarius Dwarf: -141 ± 2
Wolf–Lundmark–Melotte: -122 ± 2
Pisces Dwarf: -287 ± 0
Antlia Dwarf: 362 ± 0
Leo A: z = 0.000067
Pegasus Dwarf Spheroidal: -354 ± 3
IC 10: -348 ± 1
NGC 185: -202 ± 3
Canes Venatici I: ~31
Andromeda III: -351 ± 9
Andromeda II: -188 ± 3
Triangulum Galaxy: -179 ± 3
Messier 110: -241 ± 3
NGC 147 (2.53 ± 0.11 Mly): -193 ± 3
Small Magellanic Cloud: z = 0.000527
Large Magellanic Cloud: (no value given)
M32: -200 ± 6
NGC 205: -241 ± 3
IC 1613: -234 ± 1
Carina Dwarf: 230 ± 60
Sextans Dwarf: 224 ± 2
Ursa Minor Dwarf (200 ± 30 kly): -247 ± 1
Draco Dwarf: -292 ± 21
Cassiopeia Dwarf: -307 ± 2
Ursa Major II Dwarf: -116
Leo IV: 130
Leo V (585 kly): 173
Leo T: -60
Bootes II: -120
Pegasus Dwarf: -183 ± 0
Sculptor Dwarf: 110 ± 1
Etc.
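The table mixes units. Most entries are radial velocities in km/s, while Leo A and the Small Magellanic Cloud are listed as dimensionless redshifts z. For velocities this small compared with the speed of light, the non-relativistic approximation v ≈ cz is adequate, so both kinds of entry can be put on one scale. Below is a minimal sketch; the helper names are illustrative.

```python
C_KM_S = 299_792.458  # speed of light in km/s


def velocity_from_z(z):
    """Radial velocity in km/s from redshift z, using v ~ c*z (valid for |z| << 1).

    Positive = receding (redshift), negative = approaching (blueshift).
    """
    return C_KM_S * z


def z_from_velocity(v_km_s):
    """Inverse of velocity_from_z under the same small-velocity approximation."""
    return v_km_s / C_KM_S


for name, z in [("Leo A", 0.000067), ("Small Magellanic Cloud", 0.000527)]:
    print(f"{name}: z = {z:.6f} -> v ~ {velocity_from_z(z):.0f} km/s")

print(f"Andromeda Galaxy: v = -301 km/s -> z ~ {z_from_velocity(-301):.6f}")
```

On this scale, Leo A's entry corresponds to roughly +20 km/s and the Small Magellanic Cloud's to roughly +158 km/s. Both are redshifts under the table's sign convention.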

Physics Today

A New Class of Pulsars
Donald C. Backer and Shrinivas R. Kulkarni
Citation: Physics Today 43(3), 26 (1990); doi: 10.1063/1.881227
View online: http://dx.doi.org/10.1063/1.881227
View Table of Contents: http://scitation.aip.org/content/aip/magazine/physicstoday/43/3?ver=pdfcov
Published by AIP Publishing

A NEW CLASS OF PULSARS
Binary pulsars, pulsars with millisecond periods and pulsars in globular clusters are distinguished by their evolutionary histories, and are providing tools for fundamental tests of physics.

In 1939, seven years after the discovery of the neutron, nuclear physicists constructed the first models of a "neutron star." Stable results were found with masses comparable to the Sun's and radii of about 10 km. For the next three decades, neutron stars remained purely theoretical entities. Then in 1967, radio astronomers at the University of Cambridge observed a radio signal pulsing every 1.337 seconds coming from a single point in the sky: a pulsar (reference 1). Its source was almost certainly a rapidly rotating, highly magnetized neutron star. The pulsars discovered since then number about 500. Their fundamental interest to astronomers and cosmologists has more than justified the excitement sparked by their initial discovery. Increasingly sensitive systematic surveys for new pulsars continue at radio observatories around the world.
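The paragraph above gives two numbers: a mass comparable to the Sun's in a radius of about 10 km, and a spin period of 1.337 s. Together they support a quick back-of-envelope check that is not in the article itself. The sketch below computes two quantities:

- the mean density of such a star;
- the minimum mean density a self-gravitating sphere needs for gravity at its equator to exceed the centripetal acceleration at that period, which works out to ρ > 3π/(GP²).

Treating the star as a uniform sphere is a simplifying assumption.

```python
import math

G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
M_SUN = 1.989e30   # solar mass, kg


def mean_density(mass_kg, radius_m):
    """Mean density (kg/m^3) of a uniform sphere."""
    return mass_kg / (4.0 / 3.0 * math.pi * radius_m ** 3)


def min_density_for_rotation(period_s):
    """Lowest mean density (kg/m^3) at which a uniform self-gravitating sphere
    spinning with this period holds together at its equator.

    Requiring G*M/R^2 > (2*pi/P)^2 * R with M = (4/3)*pi*R^3*rho gives
    rho > 3*pi / (G * P^2).
    """
    return 3.0 * math.pi / (G * period_s ** 2)


rho_ns = mean_density(M_SUN, 10e3)          # about one solar mass within 10 km
rho_min = min_density_for_rotation(1.337)   # period of the first pulsar
print(f"neutron-star mean density ~ {rho_ns:.1e} kg/m^3")
print(f"minimum density to spin at 1.337 s ~ {rho_min:.1e} kg/m^3")
```

It prints a mean density of about 4.7e+17 kg/m^3 and a minimum density of about 7.9e+10 kg/m^3. A neutron star therefore clears the rotational limit by many orders of magnitude. Ordinary stars (the Sun's mean density is about 1.4e3 kg/m^3) and typical white dwarfs (of order 1e9 kg/m^3) fall well short of it.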

How Supernovae Became the Basis of Observational Cosmology

Journal of Astronomical History and Heritage, 19(2), 203–215 (2016).

HOW SUPERNOVAE BECAME THE BASIS OF OBSERVATIONAL COSMOLOGY

Maria Victorovna Pruzhinskaya
Laboratoire de Physique Corpusculaire, Université Clermont Auvergne, Université Blaise Pascal, CNRS/IN2P3, Clermont-Ferrand, France; and Sternberg Astronomical Institute of Lomonosov Moscow State University, 119991, Moscow, Universitetsky prospect 13, Russia.

and

Sergey Mikhailovich Lisakov
Laboratoire Lagrange, UMR7293, Université Nice Sophia-Antipolis, Observatoire de la Côte d'Azur, Boulevard de l'Observatoire, CS 34229, Nice, France.

Abstract: This paper is dedicated to the discovery of one of the most important relationships in supernova cosmology: the relation between the peak luminosity of Type Ia supernovae and the rate at which their luminosity declines after maximum light. The history of this relationship is long and interesting. It was discovered independently in the 1970s by the American statistician and astronomer Bert Woodard Rust and the Soviet astronomer Yury Pavlovich Pskovskii. Using a limited sample of Type I supernovae, they were able to show that the brighter the supernova, the more slowly its luminosity declines after maximum. Only with the appearance of CCD cameras could Mark Phillips re-examine this relationship at a new level of accuracy, using a better sample of supernovae. His investigations confirmed the idea proposed earlier by Rust and Pskovskii.

Keywords: supernovae, Pskovskii, Rust

1 INTRODUCTION
In 1998–1999 astronomers discovered the accelerating expansion of the Universe through observations of very distant standard candles (for a review see Lipunov and Chernin, 2012). However, from the moment that Albert Einstein (1879–1955; Whittaker, 1955) introduced a cosmological constant into the equations of the General Theory of Relativity until the discovery of the accelerating expansion of the Universe, nearly …
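In practice, the relation described in the abstract is a linear fit of peak absolute magnitude against a decline-rate parameter. The fit can then be used to standardize each supernova. Below is a minimal sketch under stated assumptions. The decline rate is measured as Δm15, the drop in magnitude during the 15 days after maximum, which is the parameter Phillips used. The fit is an ordinary least-squares line. The four data points and the reference value `dm15_ref = 1.1` are invented toy numbers chosen to lie exactly on a line, not measurements.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = a + b*x; returns (a, b)."""
    n = len(x)
    if n < 2 or n != len(y):
        raise ValueError("need at least two paired (x, y) points")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b = sxy / sxx
    return mean_y - b * mean_x, b


def standardize(m_peak, dm15, slope, dm15_ref=1.1):
    """Shift a peak magnitude to the value expected at the reference decline rate."""
    return m_peak - slope * (dm15 - dm15_ref)


# Toy values only (invented for illustration, not observed supernovae).
dm15 = [0.9, 1.1, 1.3, 1.5]            # decline rate: magnitudes faded in 15 days
m_peak = [-19.6, -19.4, -19.2, -19.0]  # peak absolute magnitude

a, b = fit_line(dm15, m_peak)
print(f"intercept = {a:.2f}, slope = {b:.2f}")
print([round(standardize(m, d, b), 2) for m, d in zip(m_peak, dm15)])
```

A positive slope means that supernovae with smaller Δm15 (slower decline) have more negative, i.e. brighter, peak magnitudes. That is the sense of the relation found by Rust, Pskovskii and Phillips. After the correction, all four toy points come out at the same standardized magnitude, -19.4.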

PoS(Westerbork)006, Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (CC BY-NC-ND 4.0)

Exploring the time-varying Universe
PoS(Westerbork)006

Richard Strom, ASTRON, Oude Hoogeveensedijk 4, 7991 PD Dwingeloo, The Netherlands
Lodie Voûte, ASTRON; Anton Pannekoek Institute for Astronomy, University of Amsterdam, Postbus 94249, 1090 GE Amsterdam, The Netherlands
Benjamin Stappers, School of Physics and Astronomy, Alan Turing Building, University of Manchester, Oxford Road, Manchester M13 9PL, UK
Gemma Janssen, ASTRON, Oude Hoogeveensedijk 4, 7991 PD Dwingeloo, The Netherlands
Jason Hessels, ASTRON, Oude Hoogeveensedijk 4, 7991 PD Dwingeloo, The Netherlands

50 Years Westerbork Radio Observatory, A Continuing Journey to Discoveries and Innovations
Richard Strom, Arnold van Ardenne, Steve Torchinsky (eds)
Published with permission of the Netherlands Institute for Radio Astronomy (ASTRON) under the terms of the Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License (CC BY-NC-ND 4.0).

Exploring the time-varying Universe
Chapter 5.1 The earliest start
Richard Strom

Introduction
The WSRT interferometrically measures Fourier components of the sky brightness distribution from a region set by the primary beam of the telescope elements, at a radio frequency determined by the receiver. This information is used to construct a two-dimensional image of radio emission from the piece of sky observed. Because the WSRT is an east-west interferometer array, the information obtained at any instant can only be used to construct a one-dimensional map. The telescope so synthesized has the response of a fan beam: narrow in one direction, but very elongated in the orthogonal direction.
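The fan-beam remark can be illustrated with the projection-slice theorem. Fourier components sampled along a single straight line through the origin of the (u, v) plane determine only the projection of the sky brightness onto that line's direction, that is, the brightness summed along the perpendicular direction. The sketch below takes the sampled line to be v = 0 on a small pixel grid, standing in for an east-west array at one instant. The toy skies, the integer frequencies and the function names are illustrative assumptions, not the WSRT's actual geometry.

```python
import cmath


def dft2_at(image, u, v):
    """2-D discrete Fourier component of image[y][x] at integer frequencies (u, v)."""
    n_y, n_x = len(image), len(image[0])
    total = 0j
    for y in range(n_y):
        for x in range(n_x):
            total += image[y][x] * cmath.exp(-2j * cmath.pi * (u * x / n_x + v * y / n_y))
    return total


def dft1_at(signal, u):
    """1-D discrete Fourier component of a sequence at integer frequency u."""
    n = len(signal)
    return sum(s * cmath.exp(-2j * cmath.pi * u * k / n) for k, s in enumerate(signal))


def projection_onto_x(image):
    """Sum brightness along y for each x: the profile a fan beam elongated in y records."""
    return [sum(row[x] for row in image) for x in range(len(image[0]))]


# Two hypothetical toy skies: same sources, shifted only in y.
sky_a = [[0, 0, 0, 0],
         [0, 5, 0, 0],
         [0, 0, 0, 2],
         [0, 0, 0, 0]]
sky_b = [[0, 5, 0, 0],
         [0, 0, 0, 0],
         [0, 0, 0, 0],
         [0, 0, 0, 2]]

for u in range(4):
    # On the v = 0 line the 2-D components equal the 1-D transform of the projection...
    assert abs(dft2_at(sky_a, u, 0) - dft1_at(projection_onto_x(sky_a), u)) < 1e-9
    # ...so the two skies are indistinguishable there.
    assert abs(dft2_at(sky_a, u, 0) - dft2_at(sky_b, u, 0)) < 1e-9

# Off the line (v = 1) the two skies differ.
print(abs(dft2_at(sky_a, 0, 1) - dft2_at(sky_b, 0, 1)) > 1e-9)
```

The two toy skies differ only in where their sources sit along y. They therefore have identical projections and identical v = 0 components, so a single instant cannot tell them apart: this is the one-dimensional, fan-beam behaviour described above. Components off that line (here v = 1) do differ, which is why further (u, v) coverage is needed before a two-dimensional image can be made.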

Astrophysics in 2006

ASTROPHYSICS IN 2006
Virginia Trimble¹, Markus J. Aschwanden², and Carl J. Hansen³
¹ Department of Physics and Astronomy, University of California, Irvine, CA 92697-4575; Las Cumbres Observatory, Santa Barbara, CA
² Lockheed Martin Advanced Technology Center, Solar and Astrophysics Laboratory, Organization ADBS, Building 252, 3251 Hanover Street, Palo Alto, CA 94304
³ JILA, Department of Astrophysical and Planetary Sciences, University of Colorado, Boulder, CO 80309
Received ...; accepted ...
arXiv:0705.1730v1 [astro-ph] 11 May 2007

Abstract. The fastest pulsar and the slowest nova; the oldest galaxies and the youngest stars; the weirdest life forms and the commonest dwarfs; the highest energy particles and the lowest energy photons. These were some of the extremes of Astrophysics 2006. We also attempt to bring you updates on things of which there is currently only one (habitable planets, the Sun, and the universe) and others of which there are always many, like meteors and molecules, black holes and binaries.

Keywords: cosmology: general, galaxies: general, ISM: general, stars: general, Sun: general, planets and satellites: general, astrobiology

CONTENTS
1. Introduction
  1.1 Up
  1.2 Down
  1.3 Around
2. Solar Physics
  2.1 The solar interior
    2.1.1 From neutrinos to neutralinos
    2.1.2 Global helioseismology
    2.1.3 Local helioseismology
    2.1.4 Tachocline structure
    2.1.5 Dynamo models
  2.2 Photosphere
    2.2.1 Solar radius and rotation
    2.2.2 Distribution of magnetic fields
    2.2.3 Magnetic flux emergence rate
    2.2.4 Photospheric motion of magnetic fields
    2.2.5 Faculae production
    2.2.6 The photospheric boundary of magnetic fields
    2.2.7 Flare prediction from photospheric fields

© 2008 Springer Science + Business Media.