Notes from Math 5210 Introduction to Representation Theory and Lie Algebras

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Lie Algebras Associated with Generalized Cartan Matrices

Lie Algebras Associated with Generalized Cartan Matrices, by R. V. Moody. Communicated by Charles W. Curtis, October 3, 1966.

1. Introduction. If $\mathfrak{L}$ is a finite-dimensional split semisimple Lie algebra of rank $n$ over a field $\Phi$ of characteristic zero, then there is associated with $\mathfrak{L}$ a unique $n \times n$ integral matrix $(A_{ij})$, its Cartan matrix, which has the properties

M1. $A_{ii} = 2$, $i = 1, \dots, n$,
M2. $A_{ij} \le 0$ if $i \ne j$,
M3. $A_{ij} = 0$ implies $A_{ji} = 0$.

These properties do not, however, characterize Cartan matrices. If $(A_{ij})$ is a Cartan matrix, it is known (see, for example, [4, pp. VI-19-26]) that the corresponding Lie algebra $\mathfrak{L}$ may be reconstructed as follows. Let $e_i, f_i, h_i$, $i = 1, \dots, n$, be any $3n$ symbols. Then $\mathfrak{L}$ is isomorphic to the Lie algebra $\mathfrak{L}((A_{ij}))$ over $\Phi$ defined by the relations

$$[h_i h_j] = 0, \qquad [e_i f_j] = \delta_{ij} h_i \quad \text{for all } i \text{ and } j,$$
$$[e_i h_j] = A_{ji} e_i, \qquad [f_i h_j] = -A_{ji} f_i,$$
$$e_i (\operatorname{ad} e_j)^{-A_{ji}+1} = 0, \qquad f_i (\operatorname{ad} f_j)^{-A_{ji}+1} = 0, \qquad i \ne j.$$

In this note, we describe some results about the Lie algebras $\mathfrak{L}((A_{ij}))$ when $(A_{ij})$ is an integral square matrix satisfying M1, M2, and M3 but is not necessarily a Cartan matrix. In particular, when the further condition of §3 is imposed on the matrix, we obtain a reasonable (but by no means complete) structure theory for $\mathfrak{L}((A_{ij}))$.

2. Preliminaries. In this note, $\Phi$ will always denote a field of characteristic zero. An integral square matrix satisfying M1, M2, and M3 will be called a generalized Cartan matrix, or g.c.m.
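The three defining conditions of a generalized Cartan matrix are easy to test mechanically. The sketch below is only an illustration, not code from Moody's paper: the function name `is_generalized_cartan_matrix` and the three sample matrices are assumptions chosen for the example, and M2 is taken in its standard form $A_{ij} \le 0$ for $i \ne j$.

```python
def is_generalized_cartan_matrix(A):
    """Return True if the square integer matrix A satisfies M1, M2 and M3.

    M1: A[i][i] == 2 for every i
    M2: A[i][j] <= 0 whenever i != j   (standard form of the condition)
    M3: A[i][j] == 0 implies A[j][i] == 0
    """
    n = len(A)
    if any(len(row) != n for row in A):
        return False                       # not square
    if any(not isinstance(x, int) for row in A for x in row):
        return False                       # not integral
    for i in range(n):
        if A[i][i] != 2:                   # M1
            return False
        for j in range(n):
            if i == j:
                continue
            if A[i][j] > 0:                # M2
                return False
            if A[i][j] == 0 and A[j][i] != 0:   # M3
                return False
    return True


# Hypothetical sample inputs, chosen only to exercise the three conditions.
CARTAN_A2 = [[2, -1], [-1, 2]]                         # a genuine Cartan matrix
RANK3_GCM = [[2, -1, 0], [-1, 2, -2], [0, -2, 2]]      # a g.c.m. that is not of finite type
FAILS_M3 = [[2, 0], [-1, 2]]                           # A[0][1] == 0 but A[1][0] != 0

print(is_generalized_cartan_matrix(CARTAN_A2))   # True
print(is_generalized_cartan_matrix(RANK3_GCM))   # True
print(is_generalized_cartan_matrix(FAILS_M3))    # False
```

The check deliberately says nothing about whether the matrix is of finite type; every Cartan matrix passes it, but so do many matrices that are not Cartan matrices, which is the point of the definition.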

Matroid Automorphisms of the F4 Root System

Matroid automorphisms of the $F_4$ root system. Stephanie Fried (Dept. of Mathematics, Grinnell College, Grinnell, IA 50112), Aydin Gerek (Dept. of Mathematics, Lehigh University, Bethlehem, PA 18015), Gary Gordon (Dept. of Mathematics, Lafayette College, Easton, PA 18042), Andrija Peruničić (Dept. of Mathematics, Bard College, Annandale-on-Hudson, NY 12504). Research supported by NSF grant DMS-055282.

The Electronic Journal of Combinatorics 14 (2007), #R78. Submitted: Feb 11, 2007; Accepted: Oct 20, 2007; Published: Nov 12, 2007. Mathematics Subject Classification: 05B35. Dedicated to Thomas Brylawski.

Abstract. Let $F_4$ be the root system associated with the 24-cell, and let $M(F_4)$ be the simple linear dependence matroid corresponding to this root system. We determine the automorphism group of this matroid and compare it to the Coxeter group $W$ for the root system. We find non-geometric automorphisms that preserve the matroid but not the root system.

1 Introduction. Given a finite set of vectors in Euclidean space, we consider the linear dependence matroid of the set, where dependences are taken over the reals. When the original set of vectors has 'nice' symmetry, it makes sense to compare the geometric symmetry (the Coxeter or Weyl group) with the group that preserves sets of linearly independent vectors. This latter group is precisely the automorphism group of the linear matroid determined by the given vectors. For the root systems $A_n$ and $B_n$, matroid automorphisms do not give any additional symmetry [4]. One can interpret these results in the following way: combinatorial symmetry (preserving dependence) and geometric symmetry (via isometries of the ambient Euclidean space) are essentially the same.

Platonic Solids Generate Their Four-Dimensional Analogues

Platonic solids generate their four-dimensional analogues. Pierre-Philippe Dechant (a, b, c). (a) Institute for Particle Physics Phenomenology, Ogden Centre for Fundamental Physics, Department of Physics, University of Durham, South Road, Durham, DH1 3LE, United Kingdom; (b) Physics Department, Arizona State University, Tempe, AZ 85287-1604, United States; (c) Mathematics Department, University of York, Heslington, York, YO10 5GG, United Kingdom. Preprint: Acta Crystallographica Section A, a journal of the International Union of Crystallography. arXiv:1307.6768v1 [math-ph], 25 Jul 2013.

Keywords: Polytopes; Platonic Solids; 4-dimensional geometry; Clifford algebras; Spinors; Coxeter groups; Root systems; Quaternions; Representations; Symmetries; Trinities; McKay correspondence.

Abstract. In this paper, we show how regular convex 4-polytopes, the analogues of the Platonic solids in four dimensions, can be constructed from three-dimensional considerations concerning the Platonic solids alone. Via the Cartan-Dieudonné theorem, the reflective symmetries of the Platonic solids generate rotations. In a Clifford algebra framework, the space of spinors generating such three-dimensional rotations has a natural four-dimensional Euclidean structure. The spinors arising from the Platonic solids can thus in turn be interpreted as vertices in four-dimensional space, giving a simple construction of the 4D polytopes 16-cell, 24-cell, the $F_4$ root system and the 600-cell. In particular, these polytopes have 'mysterious' symmetries that are almost trivial when seen from the three-dimensional spinorial point of view. In fact, all these induced polytopes are also known to be root systems and thus generate rank-4 Coxeter groups, which can be shown to be a general property of the spinor construction.

Hyperbolic Weyl Groups and the Four Normed Division Algebras

Hyperbolic Weyl Groups and the Four Normed Division Algebras. Alex Feingold (1), Axel Kleinschmidt (2), Hermann Nicolai (3). (1) Department of Mathematical Sciences, State University of New York, Binghamton, New York 13902-6000, U.S.A. (2) Physique Théorique et Mathématique, Université Libre de Bruxelles & International Solvay Institutes, Boulevard du Triomphe, ULB - CP 231, B-1050 Bruxelles, Belgium. (3) Max-Planck-Institut für Gravitationsphysik, Albert-Einstein-Institut, Am Mühlenberg 1, D-14476 Potsdam, Germany.

Presented at Illinois State University, July 7-11, 2008: Vertex Operator Algebras and Related Areas, Conference in Honor of Geoffrey Mason's 60th Birthday.

"The mathematical universe is inhabited not only by important species but also by interesting individuals" (C. L. Siegel).

Introduction (I). In 1983 Feingold and Frenkel studied the rank 3 hyperbolic Kac-Moody algebra $\mathcal{F} = A_1^{++}$, with the following data.

Dynkin diagram: three nodes labeled $-1$, $0$, $1$ (diagram not recoverable from the extracted text).

Weyl group:
$$W(\mathcal{F}) = \langle w_1, w_2, w_3 \mid |w_I| = 2,\ |w_I w_J| = m_{IJ} \rangle$$

Cartan matrix:
$$[A_{IJ}] = \begin{pmatrix} 2 & -1 & 0 \\ -1 & 2 & -2 \\ 0 & -2 & 2 \end{pmatrix} = \frac{2(\alpha_I, \alpha_J)}{(\alpha_J, \alpha_J)}$$

Coxeter exponents:
$$[m_{IJ}] = \begin{pmatrix} 2 & 3 & 2 \\ 3 & 2 & \infty \\ 2 & \infty & 2 \end{pmatrix}$$

Introduction (II). The simple roots $\alpha_{-1}, \alpha_0, \alpha_1$ span the root lattice
$$\Lambda(\mathcal{F}) = \Big\{ \alpha = \sum_{I=-1}^{1} n_I \alpha_I \ \Big|\ n_{-1}, n_0, n_1 \in \mathbb{Z} \Big\}.$$
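The off-diagonal Coxeter exponents can be recovered from the Cartan matrix by letting the simple reflections act on the root lattice and computing the orders of their products. The following is a rough sketch under stated assumptions, not material from the slides: it uses 0-based indices in place of the slide's labels, takes the reflection to be $w_I(\alpha_J) = \alpha_J - A_{JI}\,\alpha_I$ (which matches the convention $A_{IJ} = 2(\alpha_I,\alpha_J)/(\alpha_J,\alpha_J)$), and treats "no finite order found up to a search bound" as a stand-in for $\infty$. The helper names `reflection`, `matmul` and `order` are hypothetical.

```python
# Cartan matrix of the rank 3 hyperbolic algebra, rows/columns indexed 0, 1, 2.
A = [[2, -1, 0],
     [-1, 2, -2],
     [0, -2, 2]]
N = len(A)
IDENTITY = [[1 if r == c else 0 for c in range(N)] for r in range(N)]


def reflection(i):
    """Matrix of the simple reflection w_i in the basis of simple roots.

    Column c holds the coordinates of w_i(alpha_c) = alpha_c - A[c][i] * alpha_i.
    """
    w = [row[:] for row in IDENTITY]
    for c in range(N):
        w[i][c] -= A[c][i]
    return w


def matmul(a, b):
    """Product of two N x N integer matrices."""
    return [[sum(a[r][k] * b[k][c] for k in range(N)) for c in range(N)]
            for r in range(N)]


def order(m, bound=50):
    """Smallest k <= bound with m**k == identity, or None if there is none."""
    power = m
    for k in range(1, bound + 1):
        if power == IDENTITY:
            return k
        power = matmul(power, m)
    return None


reflections = [reflection(i) for i in range(N)]

for i in range(N):
    print(f"|w_{i}| =", order(reflections[i]))
for i in range(N):
    for j in range(i + 1, N):
        print(f"|w_{i} w_{j}| =", order(matmul(reflections[i], reflections[j])))
```

A bounded search cannot prove that an order is infinite. Here the product of the last two reflections restricts to a non-identity matrix with trace 2 and determinant 1 on the span of the last two simple roots, so it is unipotent and genuinely has infinite order.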

Semisimple Q-Algebras in Algebraic Combinatorics

Semisimple $\mathbb{Q}$-algebras in algebraic combinatorics. Allen Herman (Regina). Group Ring Day, ICTS Bangalore, October 21, 2019.

I.1 Coherent Algebras and Association Schemes

Definition 1 (D. Higman 1987). A coherent algebra (CA) is a subalgebra $\mathbb{C}B$ of $M_n(\mathbb{C})$ defined by a special basis $B$, which is a collection of non-overlapping 01-matrices that
(1) is a closed set under the transpose,
(2) sums to $J$, the $n \times n$ all 1's matrix, and
(3) contains a subset $\Delta$ of diagonal matrices summing to $I$, the identity matrix.

The standard basis $B$ of a CA in $M_n(\mathbb{C})$ is precisely the set of adjacency matrices of a coherent configuration (CC) of order $n$ and rank $r = |B|$. $B^\top = B \implies \mathbb{C}B$ is semisimple. When $\Delta = \{I\}$, $B$ is the set of adjacency matrices of an association scheme (AS).

I.2 Example: Basic matrix of an AS

An AS or CC can be illustrated by its basic matrix. Here we give the basic matrix of an AS with standard basis $B = \{b_0, b_1, \dots, b_7\}$:

$$\sum_{i=0}^{d} i\, b_i = \left(\begin{array}{cccccccccccc}
0 & 1 & 2 & 3 & 4 & 4 & 5 & 5 & 6 & 6 & 7 & 7 \\
1 & 0 & 3 & 2 & 4 & 4 & 5 & 5 & 6 & 6 & 7 & 7 \\
2 & 3 & 0 & 1 & 6 & 6 & 7 & 7 & 4 & 4 & 5 & 5 \\
3 & 2 & 1 & 0 & 6 & 6 & 7 & 7 & 4 & 4 & 5 & 5 \\
4 & 4 & 7 & 7 & 0 & 1 & 6 & 6 & 5 & 5 & 2 & 3 \\
4 & 4 & 7 & 7 & 1 & 0 & 6 & 6 & 5 & 5 & 3 & 2 \\
5 & 5 & 6 & 6 & 7 & 7 & 0 & 1 & 2 & 3 & 4 & 4 \\
5 & 5 & 6 & 6 & 7 & 7 & 1 & 0 & 3 & 2 & 4 & 4 \\
7 & 7 & 4 & 4 & 5 & 5 & 2 & 3 & 0 & 1 & 6 & 6 \\
7 & 7 & 4 & 4 & 5 & 5 & 3 & 2 & 1 & 0 & 6 & 6 \\
6 & 6 & 5 & 5 & 2 & 3 & 4 & 4 & 7 & 7 & 0 & 1 \\
6 & 6 & 5 & 5 & 3 & 2 & 4 & 4 & 7 & 7 & 1 & 0
\end{array}\right)$$

I.3 Example: Basic matrix of a CC of order 10 and $|\Delta| = 2$

$$\sum_{i=0}^{d} i\, b_i = \left(\begin{array}{cccccccccc}
0 & 1 & 2 & 3 & 2 & 3 & 2 & 3 & 2 & 3 \\
1 & 0 & 3 & 2 & 3 & 2 & 3 & 2 & 3 & 2 \\
4 & 5 & 6 & 7 & 8 & 7 & 8 & 7 & 8 & 7 \\
5 & 4 & 7 & 6 & 7 & 8 & 7 & 8 & 7 & 8 \\
4 & 5 & 8 & 7 & 6 & 7 & 8 & 7 & 8 & 7 \\
5 & 4 & 7 & 8 & 7 & 6 & 7 & 8 & 7 & 8 \\
4 & 5 & 8 & 7 & 8 & 7 & 6 & 7 & 8 & 7 \\
5 & 4 & 7 & 8 & 7 & 8 & 7 & 6 & 7 & 8 \\
4 & 5 & 8 & 7 & 8 & 7 & 8 & 7 & 6 & 7 \\
5 & 4 & 7 & 8 & 7 & 8 & 7 & 8 & 7 & 6
\end{array}\right)$$

$\Delta = \{b_0, b_6\}$, $\delta(b_0) = \delta(b_6) = 1$.
Diagonal block valencies: $\delta(b_1) = 1$, $\delta(b_7) = 4$, $\delta(b_8) = 3$.
Off-diagonal block valencies: row valencies $\delta_r(b_2) = \delta_r(b_3) = 4$, $\delta_r(b_4) = \delta_r(b_5) = 1$; column valencies $\delta_c(b_2) = \delta_c(b_3) = 1$, $\delta_c(b_4) = \delta_c(b_5) = 4$.
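The axioms in Definition 1 can be checked directly on the order-10 example. The sketch below is an illustration rather than anything from the slides: it reads the basic matrix as ten digit strings, builds each $b_i$ as the 01-matrix marking where the label $i$ occurs, and then tests transpose closure, the sum to $J$, the diagonal subset $\Delta$, and closure under multiplication (a product lies in the span of $B$ exactly when it is constant on the support of every $b_k$). All helper names are hypothetical.

```python
# Basic matrix of the order-10 coherent configuration, one string per row.
ROWS = [
    "0123232323",
    "1032323232",
    "4567878787",
    "5476787878",
    "4587678787",
    "5478767878",
    "4587876787",
    "5478787678",
    "4587878767",
    "5478787876",
]
M = [[int(ch) for ch in row] for row in ROWS]
N = len(M)
LABELS = sorted({x for row in M for x in row})

# b_i is the 01-matrix with a 1 exactly where the basic matrix has entry i.
B = {i: [[1 if M[r][c] == i else 0 for c in range(N)] for r in range(N)]
     for i in LABELS}


def transpose(a):
    return [list(col) for col in zip(*a)]


def matmul(a, b):
    return [[sum(a[r][k] * b[k][c] for k in range(N)) for c in range(N)]
            for r in range(N)]


def closed_under_transpose():
    return all(transpose(b) in B.values() for b in B.values())


def sums_to_all_ones():
    return all(sum(B[i][r][c] for i in LABELS) == 1
               for r in range(N) for c in range(N))


def diagonal_subset():
    """Labels whose matrix is diagonal; together they should sum to I."""
    return [i for i in LABELS
            if all(B[i][r][c] == 0 for r in range(N) for c in range(N) if r != c)]


def closed_under_products():
    for a in B.values():
        for b in B.values():
            p = matmul(a, b)
            for c in B.values():
                values = {p[r][s] for r in range(N) for s in range(N) if c[r][s]}
                if len(values) > 1:      # not constant on the support of c
                    return False
    return True


delta = diagonal_subset()
row_valency = {i: max(sum(row) for row in B[i]) for i in LABELS}

print("rank:", len(B))
print("closed under transpose:", closed_under_transpose())
print("sums to J:", sums_to_all_ones())
print("Delta:", delta)
print("closed under products:", closed_under_products())
print("row valencies:", row_valency)
```

Taking the maximum row sum as the row valency is a shortcut that works here because each $b_i$ has constant row sum on the rows where it is nonzero; for arbitrary input that would need to be verified separately.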

Classifying the Representation Type of Infinitesimal Blocks of Category

Classifying the Representation Type of Infinitesimal Blocks of Category $\mathcal{O}_S$, by Kenyon J. Platt (Under the Direction of Brian D. Boe).

Abstract. Let $\mathfrak{g}$ be a simple Lie algebra over the field $\mathbb{C}$ of complex numbers, with root system $\Phi$ relative to a fixed maximal toral subalgebra $\mathfrak{h}$. Let $S$ be a subset of the simple roots $\Delta$ of $\Phi$, which determines a standard parabolic subalgebra of $\mathfrak{g}$. Fix an integral weight $\mu \in \mathfrak{h}^*$, with singular set $J \subseteq \Delta$. We determine when an infinitesimal block $\mathcal{O}(\mathfrak{g}, S, J) := \mathcal{O}_S^{\mu}$ of parabolic category $\mathcal{O}_S$ is nonzero using the theory of nilpotent orbits. We extend work of Futorny-Nakano-Pollack, Brüstle-König-Mazorchuk, and Boe-Nakano toward classifying the representation type of the nonzero infinitesimal blocks of category $\mathcal{O}_S$ by considering arbitrary sets $S$ and $J$, and observe a strong connection between the theory of nilpotent orbits and the representation type of the infinitesimal blocks. We classify certain infinitesimal blocks of category $\mathcal{O}_S$, including all the semisimple infinitesimal blocks in type $A_n$, and all of the infinitesimal blocks for $F_4$ and $G_2$.

Index words: Category $\mathcal{O}$; Representation type; Verma modules.

Kenyon J. Platt: B.A., Utah State University, 1999; M.S., Utah State University, 2001; M.A., The University of Georgia, 2006. A Thesis Submitted to the Graduate Faculty of The University of Georgia in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy. Athens, Georgia, 2008. © 2008 Kenyon J. Platt. All Rights Reserved.

Completely Integrable Gradient Flows

Commun. Math. Phys. 147, 57-74 (1992). Communications in Mathematical Physics, Springer-Verlag 1992.

Completely Integrable Gradient Flows. Anthony M. Bloch (1), Roger W. Brockett (2) and Tudor S. Ratiu (3). (1) Department of Mathematics, The Ohio State University, Columbus, OH 43210, USA. (2) Division of Applied Sciences, Harvard University, Cambridge, MA 02138, USA. (3) Department of Mathematics, University of California, Santa Cruz, CA 95064, USA. Received December 6, 1990; in revised form July 11, 1991.

Abstract. In this paper we exhibit the Toda lattice equations in a double bracket form which shows they are gradient flow equations (on their isospectral set) on an adjoint orbit of a compact Lie group. Representations for the flows are given and a convexity result associated with a momentum map is proved. Some general properties of the double bracket equations are demonstrated, including a discussion of their invariant subspaces, and their function as a Lie algebraic sorter.

0. Introduction. In this paper we present details of the proofs and applications of the results on the generalized Toda lattice equations announced in [7]. One of our key results is exhibiting the Toda equations in a double bracket form which shows directly that they are gradient flow equations (on their isospectral set) on an adjoint orbit of a compact Lie group. This system, which is gradient with respect to the normal metric on the orbit, is quite different from the representation of the Toda flow as a gradient system on $\mathbb{R}^n$ in Moser's fundamental paper [27]. In our representation the same set of equations is thus Hamiltonian and a gradient flow on the isospectral set.

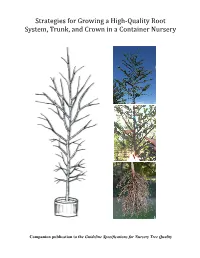

Strategies for Growing a High‐Quality Root System, Trunk, and Crown in a Container Nursery

Strategies for Growing a High‐Quality Root System, Trunk, and Crown in a Container Nursery. Companion publication to the Guideline Specifications for Nursery Tree Quality.

Table of Contents. Introduction. Section 1: Roots (Defects; Liner Development; Root Ball Management in Larger Containers; Root Distribution within Root Ball; Depth of Root Collar). Section 2: Trunk (Temporary Branches; Straight Trunk). Section 3: Crown (Central Leader; Heading and Re‐training).

Lie Groups and Algebras Notes

Lie Groups and Algebras Notes. Stanislav Atanasov. Date: October, 2018. These notes compile results from multiple sources, mostly [1,2]. All mistakes are mine.

Contents
1. Definitions: 1.1. Root systems, Weyl groups and Weyl chambers; 1.2. Cartan matrices and Dynkin diagrams; 1.3. Weights; 1.4. Lie group and Lie algebra correspondence
2. Basic results about Lie algebras: 2.1. General; 2.2. Root system; 2.3. Classification of semisimple Lie algebras
3. Highest weight modules: 3.1. Universal enveloping algebra; 3.2. Weights and maximal vectors
4. Compact Lie groups: 4.1. Peter-Weyl theorem; 4.2. Maximal tori; 4.3. Symmetric spaces; 4.4. Compact Lie algebras; 4.5. Weyl's theorem
5. Semisimple Lie groups: 5.1. Semisimple Lie algebras; 5.2. Parabolic subalgebras; 5.3. Semisimple Lie groups
6. Reductive Lie groups: 6.1. Reductive Lie algebras; 6.2. Definition of reductive Lie group; 6.3. Decompositions; 6.4. The structure of $M = Z_K(\mathfrak{a}_0)$; 6.5. Parabolic subgroups
7. Functional analysis on Lie groups: 7.1. Decomposition of the Haar measure; 7.2. Reductive groups and parabolic subgroups; 7.3. Weyl integration formula
8. Linear algebraic groups and their representation theory: 8.1. Linear algebraic groups; 8.2. Reductive and semisimple groups; 8.3. Parabolic and Borel subgroups; 8.4. Decompositions

1. Definitions. Let $\mathfrak{g}$ be a Lie algebra over an algebraically closed field $F$ of characteristic 0.

Introduction to Representation Theory of Lie Algebras

Introduction to Representation Theory of Lie Algebras. Tom Gannon. May 2020.

These are the notes for the summer 2020 mini course on the representation theory of Lie algebras. We'll first define Lie groups, and then discuss why the study of representations of simply connected Lie groups reduces to studying representations of their Lie algebras (obtained as the tangent spaces of the groups at the identity). We'll then discuss a very important class of Lie algebras, called semisimple Lie algebras, and we'll examine the representation theory of two of the most basic Lie algebras: $\mathfrak{sl}_2$ and $\mathfrak{sl}_3$. Using these examples, we will develop the vocabulary needed to classify representations of all semisimple Lie algebras! Please email me any typos you find in these notes! Thanks to Arun Debray, Joakim Færgeman, and Aaron Mazel-Gee for doing this. Also, thanks to Saad Slaoui and Max Riestenberg for agreeing to be teaching assistants for this course and for many, many helpful edits.

Contents
1 From Lie Groups to Lie Algebras: 1.1 Lie Groups and Their Representations; 1.2 Exercises; 1.3 Bonus Exercises
2 Examples and Semisimple Lie Algebras: 2.1 The Bracket Structure on Lie Algebras; 2.2 Ideals and Simplicity of Lie Algebras; 2.3 Exercises; 2.4 Bonus Exercises
3 Representation Theory of $\mathfrak{sl}_2$: 3.1 Diagonalizability; 3.2 Classification of the Irreducible Representations of $\mathfrak{sl}_2$; 3.3 Bonus Exercises
4 Representation Theory of $\mathfrak{sl}_3$: 4.1 The Generalization of Eigenvalues

Symmetries, Fields and Particles. Examples 1

Michaelmas Term 2016, Mathematical Tripos Part III. Prof. N. Dorey. Symmetries, Fields and Particles. Examples 1.

1. $O(n)$ consists of $n \times n$ real matrices $M$ satisfying $M^T M = I$. Check that $O(n)$ is a group. $U(n)$ consists of $n \times n$ complex matrices $U$ satisfying $U^\dagger U = I$. Check similarly that $U(n)$ is a group. Verify that $O(n)$ and $SO(n)$ are the subgroups of real matrices in, respectively, $U(n)$ and $SU(n)$. By considering how $U(n)$ matrices act on vectors in $\mathbb{C}^n$, and identifying $\mathbb{C}^n$ with $\mathbb{R}^{2n}$, show that $U(n)$ is a subgroup of $SO(2n)$.

2. Show that for matrices $M \in O(n)$, the first column of $M$ is an arbitrary unit vector, the second is a unit vector orthogonal to the first, ..., the $k$th column is a unit vector orthogonal to the span of the previous ones, etc. Deduce the dimension of $O(n)$. By similar reasoning, determine the dimension of $U(n)$. Show that any column of a unitary matrix $U$ is not in the (complex) linear span of the remaining columns.

3. Consider the real $3 \times 3$ matrix
$$R(\mathbf{n},\theta)_{ij} = \cos\theta\,\delta_{ij} + (1 - \cos\theta)\,n_i n_j - \sin\theta\,\epsilon_{ijk} n_k,$$
where $\mathbf{n} = (n_1, n_2, n_3)$ is a unit vector in $\mathbb{R}^3$. Verify that $\mathbf{n}$ is an eigenvector of $R(\mathbf{n},\theta)$ with eigenvalue one. Now choose an orthonormal basis for $\mathbb{R}^3$ with basis vectors $\{\mathbf{n}, \mathbf{m}, \tilde{\mathbf{m}}\}$ satisfying $\mathbf{m} \cdot \mathbf{m} = 1$, $\mathbf{m} \cdot \mathbf{n} = 0$, $\tilde{\mathbf{m}} = \mathbf{n} \times \mathbf{m}$. By considering the action of $R(\mathbf{n},\theta)$ on these basis vectors, show that this matrix corresponds to a rotation through an angle $\theta$ about an axis parallel to $\mathbf{n}$ and check that it is an element of $SO(3)$.
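A numerical sanity check of the claims in exercise 3 can be useful before attempting the algebra. The sketch below is not a solution to the exercise and not part of the example sheet: it builds $R(\mathbf{n},\theta)_{ij} = \cos\theta\,\delta_{ij} + (1-\cos\theta)\,n_i n_j - \sin\theta\,\epsilon_{ijk} n_k$ for one arbitrarily chosen unit vector and angle (both assumptions), then tests $R\mathbf{n} = \mathbf{n}$, $R^T R = I$, $\det R = 1$ and $\operatorname{tr} R = 1 + 2\cos\theta$ to floating-point tolerance. The helper names are hypothetical.

```python
import math


def levi_civita(i, j, k):
    """Totally antisymmetric symbol for indices in {0, 1, 2}."""
    return (i - j) * (j - k) * (k - i) // 2


def rotation(n, theta):
    """R(n, theta)_ij = cos(t) d_ij + (1 - cos(t)) n_i n_j - sin(t) eps_ijk n_k."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c * (i == j)
             + (1 - c) * n[i] * n[j]
             - s * sum(levi_civita(i, j, k) * n[k] for k in range(3))
             for j in range(3)]
            for i in range(3)]


def apply(m, v):
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def gram(m):
    """Entries of m^T m, which should be the identity for an orthogonal matrix."""
    return [[sum(m[k][i] * m[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


# Arbitrary test data: n = (1, 2, 2)/3 has unit length, theta is in radians.
n = [1 / 3, 2 / 3, 2 / 3]
theta = 0.7
R = rotation(n, theta)

fixes_axis = all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(apply(R, n), n))
orthogonal = all(math.isclose(gram(R)[i][j], float(i == j), abs_tol=1e-12)
                 for i in range(3) for j in range(3))
trace = sum(R[i][i] for i in range(3))

print("R n == n:", fixes_axis)
print("R^T R == I:", orthogonal)
print("det R:", det3(R))
print("trace - (1 + 2 cos theta):", trace - (1 + 2 * math.cos(theta)))
```

A check at a single pair $(\mathbf{n}, \theta)$ proves nothing in general, but it catches sign errors in the $\epsilon_{ijk}$ term quickly.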

Platonic Solids Generate Their Four-Dimensional Analogues

This is a repository copy of Platonic solids generate their four-dimensional analogues. White Rose Research Online URL for this paper: https://eprints.whiterose.ac.uk/85590/ Version: Accepted Version.

Article: Dechant, Pierre-Philippe, orcid.org/0000-0002-4694-4010 (2013). Platonic solids generate their four-dimensional analogues. Acta Crystallographica Section A: Foundations of Crystallography, pp. 592-602. ISSN 1600-5724. https://doi.org/10.1107/S0108767313021442

Reuse: Items deposited in White Rose Research Online are protected by copyright, with all rights reserved unless indicated otherwise. They may be downloaded and/or printed for private study, or other acts as permitted by national copyright laws. The publisher or other rights holders may allow further reproduction and re-use of the full text version. This is indicated by the licence information on the White Rose Research Online record for the item.

Platonic solids generate their four-dimensional analogues. Pierre-Philippe Dechant (a, b, c). (a) Institute for Particle Physics Phenomenology, Ogden Centre for Fundamental Physics, Department of Physics, University of Durham, South Road, Durham, DH1 3LE, United Kingdom; (b) Physics Department, Arizona State University, Tempe, AZ 85287-1604, United States; (c) Mathematics Department, University of York, Heslington, York, YO10 5GG, United Kingdom.

Keywords: Polytopes; Platonic Solids; 4-dimensional geometry; Clifford algebras; Spinors; Coxeter groups; Root systems; Quaternions; Representations; Symmetries; Trinities; McKay correspondence.

Abstract. In this paper, we show how regular convex 4-polytopes, the analogues of the Platonic solids in four dimensions, can be constructed from three-dimensional considerations concerning the Platonic solids alone.