Interview with Stefan Savage: On the Spam Payment Trail

Rik Farrow

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Shorten Device Boot Time for Automotive IVI and Navigation Systems

Shorten Device Boot Time for Automotive IVI and Navigation Systems Jim Huang ( 黃敬群 ) <[email protected]> Dr. Shi-wu Lo <[email protected]> May 28, 2013 / Automotive Linux Summit (Spring) Rights to copy © Copyright 2013 0xlab http://0xlab.org/ [email protected] Attribution – ShareAlike 3.0 Corrections, suggestions, contributions and translations are welcome! Latest update: May 28, 2013 You are free to copy, distribute, display, and perform the work; to make derivative works; to make commercial use of the work. Under the following conditions: Attribution. You must give the original author credit. Share Alike. If you alter, transform, or build upon this work, you may distribute the resulting work only under a license identical to this one. For any reuse or distribution, you must make clear to others the license terms of this work. Any of these conditions can be waived if you get permission from the copyright holder. Your fair use and other rights are in no way affected by the above. License text: http://creativecommons.org/licenses/by-sa/3.0/legalcode Goal of This Presentation • Propose a practical approach of the mixture of ARM hibernation (suspend to disk) and Linux user-space checkpointing – to shorten device boot time • An intrusive technique for Android/Linux – minimal init script and root file system changes are required • Boot time is one of the key factors for Automotive IVI – mentioned by “Linux Powered Clusters” and “Silver Bullet of Virtualization (Pitfalls, Challenges and Concerns) Continued” at ALS 2013 – highlighted by “Boot Time Optimizations” at ALS 2012 About this presentation • joint development efforts of the following entities – 0xlab team - http://0xlab.org/ – OSLab, National Chung Cheng University of Taiwan, led by Dr. -

Linux on the Road

Linux on the Road Linux with Laptops, Notebooks, PDAs, Mobile Phones and Other Portable Devices Werner Heuser <wehe[AT]tuxmobil.org> Linux Mobile Edition Edition Version 3.22 TuxMobil Berlin Copyright © 2000-2011 Werner Heuser 2011-12-12 Revision History Revision 3.22 2011-12-12 Revised by: wh The address of the opensuse-mobile mailing list has been added, a section power management for graphics cards has been added, a short description of Intel's LinuxPowerTop project has been added, all references to Suspend2 have been changed to TuxOnIce, links to OpenSync and Funambol synchronization packages have been added, some notes about SSDs have been added, many URLs have been checked and some minor improvements have been made. Revision 3.21 2005-11-14 Revised by: wh Some more typos have been fixed. Revision 3.20 2005-11-14 Revised by: wh Some typos have been fixed. Revision 3.19 2005-11-14 Revised by: wh A link to keytouch has been added, minor changes have been made. Revision 3.18 2005-10-10 Revised by: wh Some URLs have been updated, spelling has been corrected, minor changes have been made. Revision 3.17.1 2005-09-28 Revised by: sh A technical and a language review have been performed by Sebastian Henschel. Numerous bugs have been fixed and many URLs have been updated. Revision 3.17 2005-08-28 Revised by: wh Some more tools added to external monitor/projector section, link to Zaurus Development with Damn Small Linux added to cross-compile section, some additions about acoustic management for hard disks added, references to X.org added to X11 sections, link to laptop-mode-tools added, some URLs updated, spelling cleaned, minor changes. -

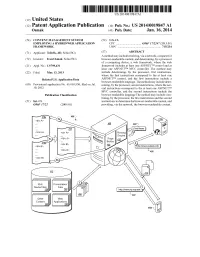

(12) Patent Application Publication (10) Pub. No.: US 2014/0019847 A1 Osmak (43) Pub

US 20140019847A1 (19) United States (12) Patent Application Publication (10) Pub. No.: US 2014/0019847 A1 Osmak (43) Pub. Date: Jan. 16, 2014 (54) CONTENT MANAGEMENT SYSTEM EMPLOYING A HYBRID WEB APPLICATION FRAMEWORK (71) Applicant: Telerik, AD, Sofia (BG) (72) Inventor: Ivan Osmak, Sofia (BG) (21) Appl. No.: 13/799,431 (22) Filed: Mar. 13, 2013 Related U.S. Application Data (60) Provisional application No. 61/669,930, filed on Jul. 10, 2012. Publication Classification (51) Int. Cl. G06F 7/22 (2006.01) (52) U.S. Cl. CPC G06F 17/2247 (2013.01) USPC 715/234 (57) ABSTRACT A method may include receiving, via a network, a request for browser-renderable content, and determining, by a processor of a computing device, a web framework, where the web framework includes at least one ASP.NET™ control and at least one ASP.NET™ MVC controller. The method may include determining, by the processor, first instructions, where the first instructions correspond to the at least one ASP.NET™ control, and the first instructions include a browser-renderable language. The method may include determining, by the processor, second instructions, where the second instructions correspond to the at least one ASP.NET™ MVC controller, and the second instructions include the browser-renderable language. The method may include combining, by the processor, the first instructions and the second instructions to determine the browser-renderable content, and providing, via the network, the browser-renderable content. [Figure: Routing Engine; Presentation; Media Files; Applications] Patent Application Publication Jan. 16, 2014 Sheet 1 of 8 US 2014/0019847 A1 -

The Best of Both Worlds with On-Demand Virtualization

The Best of Both Worlds with On-Demand Virtualization Thawan Kooburat, Michael Swift University of Wisconsin-Madison [email protected], [email protected] Abstract Virtualization offers many benefits such as live migration, resource consolidation, and checkpointing. However, there are many cases where the overhead of virtualization is too high to justify for its merits. Most desktop and laptop PCs and many large web properties run natively because the benefits of virtualization are too small compared to the overhead. We propose a new middle ground: on-demand virtualization, in which systems run natively when they need native performance or features but can be converted on-the-fly to run virtually when necessary. This enables the user or system administrator to leverage the most useful features of virtualization, such as checkpointing or consolidating workloads, during off-peak hours without paying the overhead during peak usage. We have developed a prototype of on-demand virtualization in Linux using the hibernate feature to transfer state between native execution and virtual execution, and find even networking applications can survive being virtualized. 1. Introduction System virtualization offers many benefits in both data-center and desktop environments, such as live migration, multi-tenancy, checkpointing, and the ability to run different operating systems at once. These features enable hardware maintenance with little downtime, load balancing for better throughput or power efficiency, and [...] OS can return to native execution on its original hardware. We call this approach on-demand virtualization, and it gives operating systems an ability to be virtualized without service disruption. Reaping the benefits of virtualization without its costs has been proposed previously in other forms. Microvisors [14] provided the first step in the direction toward on-demand virtualization. -

Bachelor's Thesis

BACHELOR'S THESIS Implementing Delay-Free Multi-User Web Applications Based on HTML5 WebSockets Hochschule Harz University of Applied Sciences Wernigerode Department of Automation and Computer Science, subject: Media Informatics First examiner: Prof. Jürgen K. Singer, Ph.D. Second examiner: Prof. Dr. Olaf Drögehorn Written by: Lars Häuser Date: 16.06.2011 Table of Contents 1 Introduction 1.1 Objective 1.2 Structure of the Thesis 2 Fundamentals 2.1 TCP/IP 2.2 HTTP 2.3 Request-Response Paradigm (HTTP Request Cycle) 2.4 Classic Web Application: Synchronous Data Transfer 2.5 Asynchronous Web Applications 2.6 HTML5 3 HTML5 WebSockets -

Revealing Injection Vulnerabilities by Leveraging Existing Tests

Revealing Injection Vulnerabilities by Leveraging Existing Tests Katherine Hough¹, Gebrehiwet Welearegai², Christian Hammer² and Jonathan Bell¹ ¹George Mason University, Fairfax, VA, USA ²University of Potsdam, Potsdam, Germany [email protected],[email protected],[email protected],[email protected] Abstract Code injection attacks, like the one used in the high-profile 2017 Equifax breach, have become increasingly common, now ranking #1 on OWASP’s list of critical web application vulnerabilities. Static analyses for detecting these vulnerabilities can overwhelm developers with false positive reports. Meanwhile, most dynamic analyses rely on detecting vulnerabilities as they occur in the field, which can introduce a high performance overhead in production code. This paper describes a new approach for detecting injection vulnerabilities in applications by harnessing the combined power of human developers’ test suites and automated dynamic analysis. Our new approach, Rivulet, monitors the execution of developer-written functional tests in order to detect information flows that may be vulnerable to attack. [...] just one of over 8,200 similar code injection exploits discovered in recent years in popular software [44]. Code injection vulnerabilities have been exploited in repeated attacks on US election systems [10, 18, 39, 61], in the theft of sensitive financial data [56], and in the theft of millions of credit card numbers [33]. In the past several years, code injection attacks have persistently ranked at the top of the Open Web Application Security Project (OWASP) top ten most dangerous web flaws [46]. Injection attacks can be damaging even for applications that are not traditionally considered critical targets, such as personal websites, because attackers can use them as footholds to launch more complicated attacks. In a code injection attack, an adversary crafts a malicious input that gets interpreted by the application as code rather than -

SWE 642 Software Engineering for the World Wide Web

SWE 642 Software Engineering for the World Wide Web Fall Semester, 2017 Location: AB L008 Time: Tuesday 7:20-10:00pm Professor: Dr. Vinod Dubey Email: [email protected] Class Hours: Tuesday 7:20-10:00, AB L008 Prerequisite: SWE 619 and SWE Foundation material or (CS 540 and 571) Office Hours: Anytime electronically, or by an appointment Teaching Assistant: Mr. Shravan Hyderabad, [email protected] Overview OBJECTIVE: After completing the course, students will understand the concepts and have the knowledge of how web applications are designed and constructed. Students will be able to engineer high quality building blocks for Web applications. CONTENT: Detailed study of the engineering methods and technologies for building highly interactive web sites for e-commerce and other web-based applications. Engineering principles for building web sites that exhibit high reliability, usability, security, availability, scalability and maintainability are presented. Methods such as client-server programming, component-based software development, middleware, and reusable components are covered. After the course, students should be prepared to create software for large-scale web sites. SWE 642 teaches some of the topics related to the exciting software development models that are used to support web and e-commerce applications. We will be studying the software design and development side of web applications, rather than the policy, business, or networking sides. An introductory level knowledge of HTML and Java is required. SWE 619 is a required prerequisite and SWE 632 is a good background course. The class will be very practical (how to build things) and require several programming assignments. -

Pipenightdreams Osgcal-Doc Mumudvb Mpg123-Alsa Tbb

pipenightdreams osgcal-doc mumudvb mpg123-alsa tbb-examples libgammu4-dbg gcc-4.1-doc snort-rules-default davical cutmp3 libevolution5.0-cil aspell-am python-gobject-doc openoffice.org-l10n-mn libc6-xen xserver-xorg trophy-data t38modem pioneers-console libnb-platform10-java libgtkglext1-ruby libboost-wave1.39-dev drgenius bfbtester libchromexvmcpro1 isdnutils-xtools ubuntuone-client openoffice.org2-math openoffice.org-l10n-lt lsb-cxx-ia32 kdeartwork-emoticons-kde4 wmpuzzle trafshow python-plplot lx-gdb link-monitor-applet libscm-dev liblog-agent-logger-perl libccrtp-doc libclass-throwable-perl kde-i18n-csb jack-jconv hamradio-menus coinor-libvol-doc msx-emulator bitbake nabi language-pack-gnome-zh libpaperg popularity-contest xracer-tools xfont-nexus opendrim-lmp-baseserver libvorbisfile-ruby liblinebreak-doc libgfcui-2.0-0c2a-dbg libblacs-mpi-dev dict-freedict-spa-eng blender-ogrexml aspell-da x11-apps openoffice.org-l10n-lv openoffice.org-l10n-nl pnmtopng libodbcinstq1 libhsqldb-java-doc libmono-addins-gui0.2-cil sg3-utils linux-backports-modules-alsa-2.6.31-19-generic yorick-yeti-gsl python-pymssql plasma-widget-cpuload mcpp gpsim-lcd cl-csv libhtml-clean-perl asterisk-dbg apt-dater-dbg libgnome-mag1-dev language-pack-gnome-yo python-crypto svn-autoreleasedeb sugar-terminal-activity mii-diag maria-doc libplexus-component-api-java-doc libhugs-hgl-bundled libchipcard-libgwenhywfar47-plugins libghc6-random-dev freefem3d ezmlm cakephp-scripts aspell-ar ara-byte not+sparc openoffice.org-l10n-nn linux-backports-modules-karmic-generic-pae -

Strange History

A STRANGE HISTORY. A DRAMATIC TALE, IN EIGHT CHAPTERS. BY MESSRS. SLINGSBY LAWRENCE AND CHARLES MATHEWS, Authors of "A Chain of Events," &c. &c. THOMAS HAILES LACY, WELLINGTON STREET, STRAND, LONDON. First Performed at the Royal Lyceum Theatre, on Easter Monday, March 29th, 1853. CHARACTERS. JEROME LEVERD: Mr. CHARLES MATHEWS. LEGROS: Mr. FRANK MATTHEWS. NICOLAS: Mr. ROBERT ROXBY. MAURICE BELLISLE: Mr. COOPER. DOMINIQUE: Mr. JAMES BLAND. ALFRED DE MIRECOUR: Mr. BELTON. JEAN BRIGARD: Mr. BASIL BAKER. AMEDEE: Mr. ROSIERE. HECTOR DE BEAUSIRE: Mr. H. BUTLER. CAPTAIN OF GENDARMES: Mr. ABBOTT. PIERRE: Mr. HENRY. CHRISTINE: Madame VESTRIS. MADAME LEGROS: Mrs. FRANK MATTHEWS. NICOTTE: Miss JULIA ST. GEORGE. ESTELLE: Miss M. OLIVER. COUNTESS DE MIRECOUR: Mrs. HORN. MANETTE: Miss MASON. MARGUERITE: Miss WADHAM. Time in Representation, Three Hours. If performed in Five Acts, Two Hours and Twenty Minutes. COSTUMES. In the first three chapters Tyrolean; and French soldiers. In the rest, Breton peasants and French gentlemen of 1810-15. JEROME.—1st Dress—Peasant's brown jacket, black sleeves, red vest; full brown trunks; grey leggings; shoes; felt Swiss hat and feather; over-coat for the mountains, brown camel's hair, trimmed with fur. 2nd Dress—Brown open jacket, without sleeves; holland shirt. 3rd Dress—Light blue open jacket, slashed sleeves of brown; red embroidered vest; light drab full trunks, all trimmed with white and coloured gimp; white buttons; striped silk sash. 4th Dress—Long blue dress coat (Paris cut), gilt buttons; white vest, trimmed with white fringe; white silk stockings; short blue breeches; Hessian boots, &c.; sugar-loaf hat; long hair. -

Pragmaticperl-Interviews-A4.Pdf

Pragmatic Perl Interviews pragmaticperl.com 2013—2015 Editor and interviewer: Viacheslav Tykhanovskyi Covers: Marko Ivanyk Revision: 2018-03-02 11:22 © Pragmatic Perl Contents 1 Preface 2 Alexis Sukrieh (April 2013) 3 Sawyer X (May 2013) 4 Stevan Little (September 2013) 5 chromatic (October 2013) 6 Marc Lehmann (November 2013) 7 Tokuhiro Matsuno (January 2014) 8 Randal Schwartz (February 2014) 9 Christian Walde (May 2014) 10 Florian Ragwitz (rafl) (June 2014) 11 Curtis “Ovid” Poe (September 2014) 12 Leon Timmermans (October 2014) 13 Olaf Alders (December 2014) 14 Ricardo Signes (January 2015) 15 Neil Bowers (February 2015) 16 Renée Bäcker (June 2015) 17 David Golden (July 2015) 18 Philippe Bruhat (Book) (August 2015) 19 Author 1 Preface Hello there! You have downloaded a compilation of interviews done with Perl programmers in Pragmatic Perl journal from 2013 to 2015. Since the journal itself is in Russian -

What Is Perl

Advanced Perl Techniques Day 2 Dave Cross Magnum Solutions Ltd [email protected] Schedule 09:45 – Begin 11:15 – Coffee break (15 mins) 13:00 – Lunch (60 mins) 14:00 – Begin 15:30 – Coffee break (15 mins) 17:00 – End FlossUK 24th February 2012 Resources Slides available on-line − http://mag-sol.com/train/public/2012-02/ukuug Also see Slideshare − http://www.slideshare.net/davorg/slideshows Get Satisfaction − http://getsatisfaction.com/magnum What We Will Cover Modern Core Perl − What's new in Perl 5.10, 5.12 & 5.14 Advanced Testing Database access with DBIx::Class Handling Exceptions Profiling and Benchmarking Object oriented programming with Moose MVC Frameworks − Catalyst PSGI and Plack Benchmarking & Profiling Benchmarking Ensure that your program is fast enough But how fast is fast enough? premature optimization is the root of all evil − Donald Knuth − paraphrasing Tony Hoare Don't optimise until you know what to optimise Benchmark.pm Standard Perl module for benchmarking Simple usage use Benchmark; my %methods = ( method1 => sub { ... }, method2 => sub { ... }, ); timethese(10_000, \%methods); Times 10,000 iterations of each method Benchmark.pm Output Benchmark: timing 10000 iterations of method1, method2... method1: 6 wallclock secs \ ( 2.12 usr + 3.47 sys = 5.59 CPU) \ @ 1788.91/s (n=10000) method2: 3 wallclock secs \ ( 0.85 usr + 1.70 sys = 2.55 CPU) \ @ 3921.57/s (n=10000) Timed Benchmarks Passing timethese a positive number runs each piece of code a certain number of times Passing timethese a negative number runs each piece of code for a certain number of seconds Timed Benchmarks use Benchmark; my %methods = ( method1 => sub { .. -

Modern Perl, Fourth Edition

Prepared exclusively for none ofyourbusiness Early Praise for Modern Perl, Fourth Edition A dozen years ago I was sure I knew what Perl looked like: unreadable and obscure. chromatic showed me beautiful, structured expressive code then. He’s the right guy to teach Modern Perl. He was writing it before it existed. ➤ Daniel Steinberg President, DimSumThinking, Inc. A tour de force of idiomatic code, Modern Perl teaches you not just “how” but also “why.” ➤ David Farrell Editor, PerlTricks.com If I had to pick a single book to teach Perl 5, this is the one I’d choose. As I read it, I was reminded of the first time I read K&R. It will teach everything that one needs to know to write Perl 5 well. ➤ David Golden Member, Perl 5 Porters, Autopragmatic, LLC I’m about to teach a new hire Perl using the first edition of Modern Perl. I’d much rather use the updated copy! ➤ Belden Lyman Principal Software Engineer, MediaMath It’s not the Perl book you deserve. It’s the Perl book you need. ➤ Gizmo Mathboy Co-founder, Greater Lafayette Open Source Symposium (GLOSSY) We've left this page blank to make the page numbers the same in the electronic and paper books. We tried just leaving it out, but then people wrote us to ask about the missing pages. Anyway, Eddy the Gerbil wanted to say “hello.” Modern Perl, Fourth Edition chromatic The Pragmatic Bookshelf Dallas, Texas • Raleigh, North Carolina Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks.