UNIVERSITY of CALIFORNIA SAN DIEGO Understanding the Remote

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Netcat and Trojans/Backdoors

Netcat and Trojans/Backdoors
ECE4883 – Internetwork Security
Agenda: Overview
• Netcat
• Trojans/Backdoors
Agenda: Netcat
• Netcat
  - Overview
  - Major Features
  - Installation and Configuration
  - Possible Uses
• Netcat Defenses
• Summary
Netcat – TCP/IP Swiss Army Knife
• Reads and writes data across the network using TCP/UDP connections
• Feature-rich network debugging and exploration tool
• Part of the Red Hat Power Tools collection and comes standard on SuSE Linux, Debian Linux, NetBSD and OpenBSD distributions
• UNIX and Windows versions available at: http://www.atstake.com/research/tools/network_utilities/
Netcat
• Designed to be a reliable “back-end” tool, to be used directly or easily driven by other programs/scripts
• Very powerful in combination with scripting languages (e.g. Perl)
“If you were on a desert island, Netcat would be your tool of choice!” - Ed Skoudis
Netcat – Major Features
• Outbound or inbound connections
• TCP or UDP, to or from any ports
• Full DNS forward/reverse checking, with appropriate warnings
• Ability to use any local source port
• Ability to use any locally-configured network source address
• Built-in port-scanning capabilities, with randomizer
Netcat – Major Features (contd)
• Built-in loose source-routing capability
• Can read command line arguments from standard input
• Slow-send mode, one line every N seconds
• Hex dump of transmitted and received data
• Optional ability to let another program service established connections
• Optional telnet-options responder
Netcat (called ‘nc’)
• Can run in client/server mode
• Default mode: client
• Same executable for both modes
• Client mode: nc [dest] [port_no_to_connect_to]
• Listen mode (-l option): nc -l -p [port_no_to_connect_to]
Netcat – Client mode
Computer with netcat in Client mode 1. -
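The client and listen modes described in the slides can be approximated with plain TCP sockets. The following is a minimal sketch, not Netcat's actual implementation: the function names `open_listener`, `receive_all`, and `send_to` are hypothetical, and it covers only a single one-way TCP transfer (no UDP, port scanning, or hex dump).

```python
import socket


def open_listener(host: str = "127.0.0.1", port: int = 0) -> socket.socket:
    """Listen mode, roughly `nc -l -p PORT`: bind and queue inbound TCP connections."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    srv.bind((host, port))  # port 0 asks the OS to pick any free port
    srv.listen(1)
    return srv


def receive_all(srv: socket.socket) -> bytes:
    """Accept one connection and read until the peer closes it."""
    conn, _addr = srv.accept()
    chunks = []
    with conn:
        while True:
            data = conn.recv(4096)
            if not data:  # empty read means the client closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)


def send_to(host: str, port: int, payload: bytes) -> None:
    """Client mode, roughly `nc HOST PORT`: connect, write the payload, close."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(payload)
```

A listener is opened first, its port is read back with `srv.getsockname()[1]`, and the client then connects to that port. Both roles use the same code base, mirroring the slide's point that one executable serves both modes.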

Pest Control: Taming the Rats

RESEARCH
Pest Control: Taming the Rats
Authors: Shawn Denbow (Twitter: @sdenbow_, Email: [email protected]) and Jesse Hertz (Twitter: @hectohertz, Email: [email protected])
Remote Administration Tools (RATs) allow a remote attacker to control and access the system. In this paper, we present our analysis of their protocols, explain how to decrypt their traffic, as well as present vulnerabilities we have found.
Introduction
As 2012 Matasano summer interns, we were tasked with running a research project with a couple of criteria:
• It should be something we are both interested in.
• We should be able to present our research for the company at the end of our internship.
However, on completion, we decided that it would be best if we made our findings public. With John Villamil, our advisor, we decided that given our interest in low-level analysis, we should analyze Remote Administration Tools (RATs). RATs have recently seen media attention due to their use by oppressive governments in spying on activists and other “dissidents”. We felt this to be a perfect project. Remote Administration Tools are pieces of software which, once installed on a victim’s computer, allow a remote user to control and access the system. RATs can be used legitimately by system administrators, or they can be used maliciously. There are a variety of methods by which they are installed on a computer: various social engineering tactics can be employed to get a user to open the executable, they can be bundled with other pieces of software, they can be installed as the payload of a virus or worm, or they can be installed after an attacker gains access to a system through an exploit. -

List of NMAP Scripts Use with the Nmap –Script Option

List of NMAP Scripts
Use with the nmap --script option
acarsd-info: Retrieves information from a listening acarsd daemon. Acarsd decodes ACARS (Aircraft Communication Addressing and Reporting System) data in real time. The information retrieved by this script includes the daemon version, API version, administrator e-mail address and listening frequency.
address-info: Shows extra information about IPv6 addresses, such as embedded MAC or IPv4 addresses when available.
afp-brute: Performs password guessing against Apple Filing Protocol (AFP).
afp-ls: Attempts to get useful information about files from AFP volumes. The output is intended to resemble the output of ls.
afp-path-vuln: Detects the Mac OS X AFP directory traversal vulnerability, CVE-2010-0533.
afp-serverinfo: Shows AFP server information. This information includes the server's hostname, IPv4 and IPv6 addresses, and hardware type (for example Macmini or MacBookPro).
afp-showmount: Shows AFP shares and ACLs.
ajp-auth: Retrieves the authentication scheme and realm of an AJP service (Apache JServ Protocol) that requires authentication.
ajp-brute: Performs brute force passwords auditing against the Apache JServ protocol. The Apache JServ Protocol is commonly used by web servers to communicate with back-end Java application server containers.
ajp-headers: Performs a HEAD or GET request against either the root directory or any optional directory of an Apache JServ Protocol server and returns the server response headers.
ajp-methods: Discovers which options are supported by the AJP (Apache JServ Protocol) server by sending an OPTIONS request and lists potentially risky methods.
ajp-request: Requests a URI over the Apache JServ Protocol and displays the result (or stores it in a file). -
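As an illustration of how the script names in this list are passed to the `--script` option, here is a small sketch that only assembles an nmap command line; it does not run a scan. The helper name `build_nmap_command` and the target address are hypothetical. `--script` (comma-separated script names) and `-p` (port list) are standard nmap options.

```python
from typing import List, Optional, Sequence


def build_nmap_command(target: str, scripts: Sequence[str],
                       ports: Optional[str] = None) -> List[str]:
    """Return an argv list that would run the named NSE scripts against target."""
    if not scripts:
        raise ValueError("at least one script name is required")
    # nmap accepts several script names as one comma-separated argument
    argv = ["nmap", "--script", ",".join(scripts)]
    if ports:
        argv += ["-p", ports]
    argv.append(target)
    return argv


# Example: two AFP scripts from the list, against AFP's usual TCP port 548.
# 192.0.2.10 is a documentation-only address, used here as a placeholder.
command = build_nmap_command("192.0.2.10",
                             ["afp-serverinfo", "afp-showmount"], "548")
```

The resulting list can be handed to `subprocess.run` on a machine where nmap is installed and scanning the target is authorized.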

Chapter 3 Composite Default Screen Blind Folio 3:61

Oracle9i for Windows 2000 Tips & Techniques / Jesse, Sale, Hart / 9462-6 / Chapter 3
CHAPTER 3 Configuring Windows 2000
There are three basic configurations of Oracle on Windows 2000: as a management platform, as an Oracle client, and as a database server. The first configuration is the platform from which you will manage Oracle installations across various machines on various operating systems. Most system and database administrators are given a desktop PC to perform day-to-day tasks that are not DBA specific (such as reading e-mail). From this desktop, you can also manage Oracle components installed on other operating systems (for example, Solaris, Linux, and HP-UX). Even so, you will want to configure Windows 2000 to make your system and database administrative tasks quick and easy. The Oracle client software configuration is used in more configurations than you might first suspect:
■ Web applications that connect to an Oracle database:
  ■ IIS 5 ASPs that use ADO to connect to an Oracle database
  ■ Perl DBI application running on Apache that connects to an Oracle database
  ■ Any J2EE application server that uses the thick JDBC driver
■ Client/server applications:
  ■ Desktop Visual Basic application that uses OLEDB or ODBC to connect to an Oracle Database
  ■ Desktop Java application that uses the thick JDBC to connect to Oracle
In any of these configurations, at least an Oracle client installation is required. -

Major Qualifying Project

Network Anomaly Detection Utilizing Robust Principal Component Analysis
Major Qualifying Project
Advisors: PROFESSORS LANE HARRISON, RANDY PAFFENROTH
Written By: AURA VELARDE RAMIREZ, ERIK SOLA PLEITEZ
A Major Qualifying Project, WORCESTER POLYTECHNIC INSTITUTE. Submitted to the Faculty of the Worcester Polytechnic Institute in partial fulfillment of the requirements for the Degree of Bachelor of Science in Computer Science. AUGUST 24, 2017 - MARCH 2, 2018
ABSTRACT
In this Major Qualifying Project, we focus on the development of a visualization-enabled anomaly detection system. We examine the 2011 VAST dataset challenge to efficiently generate meaningful features and apply Robust Principal Component Analysis (RPCA) to detect any data points estimated to be anomalous. This is done through an infrastructure that promotes the closing of the loop from feature generation to anomaly detection through RPCA. We enable our user to choose subsets of the data through a web application and learn through visualization systems where problems are within their chosen local data slice. In this report, we explore both feature engineering techniques along with optimizing RPCA which ultimately lead to a generalized approach for detecting anomalies within a defined network architecture.
TABLE OF CONTENTS
List of Tables
List of Figures
1 Introduction
  1.1 Introduction
2 VAST Dataset Challenge
  2.1 2011 VAST Dataset
  2.2 Attacks in the VAST Dataset
  2.3 Avoiding Data Snooping
  2.4 Previous Work
3 Anomalies in Cyber Security
  3.1 Anomaly detection methods
4 Feature Engineering
  4.1 Feature Engineering Process
  4.2 Feature Selection For a Dataset -

Ncircle IP360

VULNERABILITY MANAGEMENT TECHNOLOGY REPORT
nCircle IP360
OCTOBER 2006
www.westcoastlabs.org
CONTENTS
nCircle IP360: nCircle, 101 Second Street, Suite 400, San Francisco, CA 94105. Phone: +1 (415) 625 5900 • Fax: +1 (415) 625 5982
Test Environment and Network
Test Reports and Assessments
Checkmark Certification – Standard and Premium
Vulnerabilities
West Coast Labs Vulnerabilities Classification
The Product
Developments in the IP360 Technology
Test Report
Test Results
West Coast Labs Conclusion
Security Features Buyers Guide
West Coast Labs, William Knox House, Britannic Way, Llandarcy, Swansea, SA10 6EL, UK. Tel: +44 1792 324000, Fax: +44 1792 324001.
TEST ENVIRONMENT -

Adwind a Cross Platform

ADWIND - A CROSS-PLATFORM RAT
REPORT ON THE INVESTIGATION INTO THE MALWARE-AS-A-SERVICE PLATFORM AND ITS TARGETED ATTACKS
Vitaly Kamluk, Alexander Gostev
February 2016, V. 3.0
#TheSAS2016 #Adwind
CONTENTS
Executive summary
The history of Adwind
Frutas RAT
The Adwind RAT
UNRECOM
AlienSpy
The latest reincarnation of the malware
JSocket.org: malware-as-a-service
Registration
Online malware shop
YouTube channel
Profitability -

List of TCP and UDP Port Numbers from Wikipedia, the Free Encyclopedia

List of TCP and UDP port numbers
From Wikipedia, the free encyclopedia
This is a list of Internet socket port numbers used by protocols of the transport layer of the Internet Protocol Suite for the establishment of host-to-host connectivity. Originally, port numbers were used by the Network Control Program (NCP) in the ARPANET for which two ports were required for half-duplex transmission. Later, the Transmission Control Protocol (TCP) and the User Datagram Protocol (UDP) needed only one port for full-duplex, bidirectional traffic. The even-numbered ports were not used, and this resulted in some even numbers in the well-known port number range being unassigned. The Stream Control Transmission Protocol (SCTP) and the Datagram Congestion Control Protocol (DCCP) also use port numbers. They usually use port numbers that match the services of the corresponding TCP or UDP implementation, if they exist. The Internet Assigned Numbers Authority (IANA) is responsible for maintaining the official assignments of port numbers for specific uses.[5] However, many unofficial uses of both well-known and registered port numbers occur in practice.
Figure caption: /etc/services, a service name database file on Unix-like operating systems.[1][2][3][4]
Contents: 1 Table legend; 2 Well-known ports; 3 Registered ports; 4 Dynamic, private or ephemeral ports; 5 See also; 6 References; 7 External links
Table legend
Official: Port is registered with IANA for the application.[5]
Unofficial: Port is not registered with IANA for the application.
Multiple use: Multiple applications are known to use this port.
Well-known ports
The port numbers in the range from 0 to 1023 are the well-known ports or system ports.[6] They are used by system processes that provide widely used types of network services. -
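The three port categories named in the contents list can be expressed as a simple range check. This is a sketch with a hypothetical function name, `classify_port`. The excerpt itself states only the well-known range (0 to 1023); the registered (1024 to 49151) and dynamic (49152 to 65535) boundaries are the standard IANA ranges and are an addition here, not part of the quoted text.

```python
def classify_port(port: int) -> str:
    """Classify a TCP/UDP port number into its IANA range."""
    if not 0 <= port <= 65535:
        raise ValueError(f"port out of range: {port}")
    if port <= 1023:
        return "well-known"   # system ports, as stated in the excerpt
    if port <= 49151:
        return "registered"   # assumption: standard IANA registered range
    return "dynamic"          # assumption: dynamic, private or ephemeral range


# A few sample lookups across the three ranges.
labels = {p: classify_port(p) for p in (22, 1023, 1024, 8080, 49152)}
```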

How to Cheat at Configuring Open Source Security Tools

Visit us at www.syngress.com
Syngress is committed to publishing high-quality books for IT Professionals and delivering those books in media and formats that fit the demands of our customers. We are also committed to extending the utility of the book you purchase via additional materials available from our Web site.
SOLUTIONS WEB SITE
To register your book, visit www.syngress.com/solutions. Once registered, you can access our [email protected] Web pages. There you may find an assortment of value-added features such as free e-books related to the topic of this book, URLs of related Web sites, FAQs from the book, corrections, and any updates from the author(s).
ULTIMATE CDs
Our Ultimate CD product line offers our readers budget-conscious compilations of some of our best-selling backlist titles in Adobe PDF form. These CDs are the perfect way to extend your reference library on key topics pertaining to your area of expertise, including Cisco Engineering, Microsoft Windows System Administration, CyberCrime Investigation, Open Source Security, and Firewall Configuration, to name a few.
DOWNLOADABLE E-BOOKS
For readers who can’t wait for hard copy, we offer most of our titles in downloadable Adobe PDF form. These e-books are often available weeks before hard copies, and are priced affordably.
SYNGRESS OUTLET
Our outlet store at syngress.com features overstocked, out-of-print, or slightly hurt books at significant savings.
SITE LICENSING
Syngress has a well-established program for site licensing our e-books onto servers in corporations, educational institutions, and large organizations. -

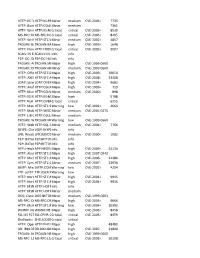

HTTP: IIS "Propfind" Rem HTTP:IIS:PROPFIND Minor Medium

HTTP: IIS "propfind" Remote DoS | HTTP:IIS:PROPFIND | Minor | medium | CVE-2003-0226 | 7735
HTTP: Ikonboard Illegal Cookie Language | HTTP:CGI:IKONBOARD-BADCOOKIE | Minor | medium | | 7361
HTTP: Windows Media Services NSIISlog.DLL Buffer Overflow | HTTP:IIS:NSIISLOG-OF | Critical | critical | CVE-2003-0349 | 8035
MS-RPC: DCOM Exploit | MS-RPC:DCOM:EXPLOIT | Critical | critical | CVE-2003-0352 | 8205
HTTP: WinHelp32.exe Remote Buffer Overrun | HTTP:STC:WINHELP32-OF2 | Minor | medium | CVE-2002-0823(2) | 4857
TROJAN: Back Orifice 2000 Client Connection | TROJAN:BACKORIFICE:BO2K-CONNECT | Major | high | CVE-1999-0660 | 1648
HTTP: Frontpage fp30reg.dll Overflow | HTTP:FRONTPAGE:FP30REG.DLL-OF | Critical | critical | CVE-2003-0822 | 9007
SCAN: IIS Enumeration | SCAN:II:IIS-ISAPI-ENUM | Info | info | |
P2P: DC: Direct Connect Plus Plus Client Hub Login | P2P:DC:HUB-LOGIN | Info | info | |
TROJAN: AOL Admin Server Response | TROJAN:MISC:AOLADMIN-SRV-RESP | Major | high | CVE-1999-0660 |
TROJAN: Digital Rootbeer Client Connect | TROJAN:MISC:ROOTBEER-CLIENT | Minor | medium | CVE-1999-0660 |
HTTP: Office Art Property Table Buffer Overflow | HTTP:STC:DL:OFFICEART-PROP | Major | high | CVE-2009-2528 | 36650
HTTP: AXIS Communications Camera Control (AxisCamControl.ocx) Unsafe ActiveX Control | HTTP:STC:ACTIVEX:AXIS-CAMERA | Major | high | CVE-2008-5260 | 33408
LDAP: Ipswitch IMail LDAP Daemon Remote Buffer Overflow | LDAP:OVERFLOW:IMAIL-ASN1 | Major | high | CVE-2004-0297 | 9682
HTTP: Anyform Semicolon | HTTP:CGI:ANYFORM-SEMICOLON | Major | high | CVE-1999-0066 | 719
HTTP: Mini SQL w3-msql File View Disclosure | HTTP:CGI:W3-MSQL-FILE-DISCLSR | Minor | medium | CVE-2000-0012 | 898
HTTP: IIS MFC ISAPI Framework Overflow (via | HTTP:IIS:MFC-EXT-OF | Major | high -

Growth and Commoditization of Remote Access Trojans

30 September - 2 October, 2020 / vblocalhost.com
GROWTH AND COMMODITIZATION OF REMOTE ACCESS TROJANS
Veronica Valeros & Sebastian García, Czech Technical University in Prague, Czech Republic
[email protected] [email protected]
www.virusbulletin.com
ABSTRACT
Remote access trojans (RATs) are an intrinsic part of traditional cybercriminal activities, and they have also become a standard tool in advanced espionage attacks and scams. There have been significant changes in the cybercrime world in terms of organization, attacks and tools in the last three decades; however, the overly specialized research on RATs has led to a seeming lack of understanding of how RATs in particular have evolved as a phenomenon. The lack of generalist research hinders the understanding and development of new techniques and methods to better detect them. This work presents the first results of a long-term research project looking at remote access trojans. Through an extensive methodological process of collection of families of RATs, we are able to present an analysis of the growth of RATs in the last 30 years. Through a closer analysis of 11 selected RATs, we discuss how they have become a commodity in the last decade. Finally, through the collected information we attempt to characterize RATs, their victims, attacks and operators. Preliminary results of our ongoing research have shown that the number of RATs has increased drastically in the past ten years and that nowadays RATs have become standardized commodity products that are not very different from each other.
INTRODUCTION
Remote access software is a type of computer program that allows an individual to have full remote control of the device on which the software is installed. -

Bugs in the Market: Creating a Legitimate, Transparent, and Vendor-Focused Market for Software Vulnerabilities

BUGS IN THE MARKET: CREATING A LEGITIMATE, TRANSPARENT, AND VENDOR-FOCUSED MARKET FOR SOFTWARE VULNERABILITIES Jay P. Kesan* & Carol M. Hayes** Ukraine, December 23, 2015. Hundreds of thousands of homes lost power. Call center communications were blocked. Authorities reported that 103 cities experienced a total blackout. The alleged cause? BlackEnergy malware. With so much of our daily lives reliant on computers, is modern civilization just a stream of ones and zeroes away from disaster? Malware like BlackEnergy relies on uncorrected security flaws in computer systems. Sometimes, the system owner fails to install a patch. Other times, there is no patch because the software vendor either did not know about or did not correct a critical security flaw. Meanwhile, the victim country’s government or its allies may have knowledge of the same flaw, but kept the information secret so that it could be used against its enemies. There is an urgent need for a new legal and economic approach to cybersecurity that will curtail socially harmful behavior by security researchers and governments. Laws aimed at curbing cyberattacks typically focus on punishment, with little to no wiggle room provided for socially beneficial hacking behavior. Around the world, governments hoard zero-day vulnerabilities while permitting software vendors to sue security researchers who plan to demonstrate critical security flaws at industry conferences. There is also a growing market for buying and selling security flaws, and the buyers do not always have society’s best interests in mind. This Article delves into the world of cybersecurity and software and provides an interdisciplinary analysis of the current crisis, contributing to the limited but growing literature addressing these new threats that cannot be contained by traditional philosophies of war and weaponry.