Future Directions in Mathematics Workshop

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

2006 Annual Report

Clay Mathematics Institute 2006. Contents: James A. Carlson, Letter from the President; Recognizing Achievement: Fields Medal Winner Terence Tao; Persi Diaconis, Mathematics & Magic Tricks; Annual Meeting: Clay Lectures at Cambridge University; Researchers, Workshops & Conferences: Summary of 2006 Research Activities; Profile: Interview with Research Fellow Ben Green; Davar Khoshnevisan, Normal Numbers are Normal; Feature Article: CMI-Göttingen Library Project; Eugene Chislenko, The Felix Klein Protocols Digitized; The Klein Protokolle; Summer School: Arithmetic Geometry at the Mathematisches Institut, Göttingen, Germany; Program Overview: The Ross Program at Ohio State University, PROMYS at Boston University; Institute News: Awards & Honors; Deadlines: Nominations, Proposals and Applications; Publications: Selected Articles by Research Fellows, Books & Videos; Activities: 2007 Institute Calendar. Letter from the President (James Carlson): Another major change this year concerns the editorial board for the Clay Mathematics Institute Monograph Series, published jointly with the American Mathematical Society. Simon Donaldson and Andrew Wiles will serve as editors-in-chief, while I will serve as managing editor. Associate editors are Brian Conrad, Ingrid Daubechies, Charles Fefferman, János Kollár, Andrei Okounkov, David Morrison, Cliff Taubes, Peter Ozsváth, and Karen Smith. The Monograph Series publishes selected expositions of recent developments, both in emerging areas and in older subjects transformed by new insights or unifying ideas. The next volume in the series will be Ricci Flow and the Poincaré Conjecture, by John Morgan and Gang Tian. Their book will appear in the summer of 2007. In related publishing news, the Institute has had the complete record of the Göttingen seminars of Felix Klein, 1872–1912, digitized and made available on ...

A Mathematical Tonic

Nature, Vol 443, 14 September 2006, Books & Arts. A mathematical tonic. Dr Euler's Fabulous Formula: Cures Many Mathematical Ills, by Paul Nahin. Princeton University Press: 2006. 404 pp. $29.95, £18.95. Reviewed by Timothy Gowers. One of the effects of the remarkable success of Stephen Hawking's A Brief History Of Time (Bantam, 1988) was a burgeoning of the market for popular science writing. It did not take ... mathematician. This is mostly a good thing: not many mathematicians know much about signal processing or the importance of complex numbers to engineers, and it is fascinating to find out about these subjects. Furthermore, it is likely that a mathematician would have got hung up on details that Nahin cheerfully, and rightly, ignores. However, his background has the occasional disadvantage as well, such as when he discusses the matrix formulation of de Moivre's theorem, the fundamental result ... reader nonplussed. For instance, he discusses a problem in which a mouse runs at constant speed round a circle, and a cat, starting at the centre of the circle, runs at constant speed directly towards the mouse at all times. Does the cat catch the mouse? Yes, if, and only if, it runs faster than the mouse. In the middle of the discussion Nahin suddenly abandons the mathematics and puts a discrete approximation into his computer to generate some diagrams. His justification is that an analytic formula for the cat's position is hard to obtain, but actually the problem can be neatly solved without such a formula (although it is good to have his diagrams as well).
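The kind of discrete approximation the review mentions can be sketched in a few lines. This is only an illustration, not Nahin's program: the unit circle, the mouse's unit speed, the forward-Euler step `dt`, the time horizon `t_max`, the `capture_radius` threshold, and the function name `cat_catches_mouse` are all assumptions made here.

```python
import math


def cat_catches_mouse(speed_ratio, dt=1e-3, t_max=50.0, capture_radius=1e-2):
    """Forward-Euler pursuit simulation (illustrative assumptions only).

    The mouse runs round the unit circle at unit speed; the cat starts at
    the centre and always heads straight at the mouse with speed
    `speed_ratio`. Returns True if the cat gets within `capture_radius`
    of the mouse before `t_max`.
    """
    cat_x, cat_y = 0.0, 0.0
    steps = int(t_max / dt)
    for step in range(steps):
        t = step * dt
        mouse_x, mouse_y = math.cos(t), math.sin(t)
        dx, dy = mouse_x - cat_x, mouse_y - cat_y
        dist = math.hypot(dx, dy)
        if dist <= capture_radius:
            return True
        # Move the cat one step along the current line of sight.
        cat_x += speed_ratio * dt * dx / dist
        cat_y += speed_ratio * dt * dy / dist
    return False


if __name__ == "__main__":
    for ratio in (0.5, 1.5):
        print(ratio, cat_catches_mouse(ratio))
```

A finite simulation can only suggest the "if and only if" statement, since it checks a capture radius over a bounded time rather than exact capture.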

2 Hilbert Spaces

2 Hilbert spaces. A complex Hilbert space $H$ is a complete normed space over $\mathbb{C}$ whose norm is derived from an inner product. That is, we assume that there is a sesquilinear form $(\cdot,\cdot)\colon H \times H \to \mathbb{C}$, linear in the first variable and conjugate linear in the second, such that $(f,g) = \overline{(g,f)}$, $(f,f) \ge 0$ for all $f \in H$, and $(f,f) = 0 \Rightarrow f = 0$. The norm and inner product are related by $(f,f) = \|f\|^2$. We will always assume that $H$ is separable (has a countable dense subset). As usual for a normed space, the distance on $H$ is given by $d(f,g) = \|f-g\| = \sqrt{(f-g,\,f-g)}$. The Cauchy-Schwarz and triangle inequalities, $|(f,g)| \le \|f\|\,\|g\|$ and $\|f+g\| \le \|f\| + \|g\|$, can be derived fairly easily from the inner product. You should have seen some examples last semester. The simplest (finite-dimensional) example is $\mathbb{C}^n$ with its standard inner product. It's worth recalling from linear algebra that if $V$ is an $n$-dimensional (complex) vector space, then from any set of $n$ linearly independent vectors we can manufacture an orthonormal basis $e_1, e_2, \ldots, e_n$ using the Gram-Schmidt process. In terms of this basis we can write any $v \in V$ in the form $v = \sum_i a_i e_i$, $a_i = (v, e_i)$, which can be derived by taking the inner product of the equation $v = \sum_i a_i e_i$ with $e_i$. We also have $\|v\|^2 = \sum_{i=1}^{n} |a_i|^2$. Standard infinite-dimensional examples are $l^2(\mathbb{N})$ or $l^2(\mathbb{Z})$, the space of square-summable sequences, and $L^2(\Omega)$ where $\Omega$ is a measurable subset of $\mathbb{R}^n$. 2.1 Orthogonality ...
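The Gram-Schmidt construction and the coefficient formula a_i = (v, e_i) from the excerpt above can be tried out numerically. The following is a minimal sketch in plain Python, assuming vectors in C^n stored as lists of complex numbers; the function names (`inner`, `norm`, `gram_schmidt`, `coefficients`) and the tolerance `1e-12` are illustrative choices, not taken from the notes.

```python
import math


def inner(u, v):
    """Standard inner product on C^n: linear in u, conjugate linear in v."""
    return sum(a * b.conjugate() for a, b in zip(u, v))


def norm(u):
    """Norm induced by the inner product: ||u|| = sqrt((u, u))."""
    return math.sqrt(inner(u, u).real)


def gram_schmidt(vectors):
    """Orthonormalise linearly independent vectors (modified Gram-Schmidt)."""
    basis = []
    for v in vectors:
        w = list(v)
        for e in basis:
            c = inner(w, e)  # component of w along e
            w = [wi - c * ei for wi, ei in zip(w, e)]
        n = norm(w)
        if n < 1e-12:
            raise ValueError("vectors are (numerically) linearly dependent")
        basis.append([wi / n for wi in w])
    return basis


def coefficients(v, basis):
    """Expansion coefficients a_i = (v, e_i) in an orthonormal basis."""
    return [inner(v, e) for e in basis]


if __name__ == "__main__":
    es = gram_schmidt([[1, 1j, 0], [1, 0, 1], [0, 1, 1]])
    v = [2 - 1j, 3, 1j]
    a = coefficients(v, es)
    # The two printed numbers should agree up to rounding: ||v||^2 = sum |a_i|^2.
    print(norm(v) ** 2, sum(abs(c) ** 2 for c in a))
```

The modified variant (subtracting each projection from the running remainder) is used because it tends to behave better in floating point; in exact arithmetic it agrees with the classical process.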

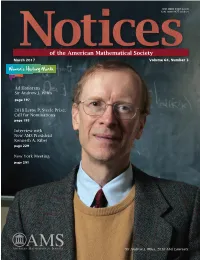

Sir Andrew J. Wiles

Notices of the American Mathematical Society, March 2017, Volume 64, Number 3. ISSN 0002-9920 (print), ISSN 1088-9477 (online). Women's History Month. On the cover: Ad Honorem Sir Andrew J. Wiles (page 197); 2018 Leroy P. Steele Prize: Call for Nominations (page 195); Interview with New AMS President Kenneth A. Ribet (page 229); New York Meeting (page 291). Sir Andrew J. Wiles, 2016 Abel Laureate: "The definition of a good mathematical problem is the mathematics it generates rather than the problem itself." Features: Ad Honorem Sir Andrew J. Wiles: Interview with Abel Laureate Sir Andrew J. Wiles by Martin Raussen and Christian Skau; Andrew Wiles's Marvelous Proof by Henri Darmon; The Mathematical Works of Andrew Wiles by Christopher Skinner. Interview with New AMS President Kenneth A. Ribet by Allyn Jackson. The Graduate Student Section: Interview with Ryan Haskett by Alexander Diaz-Lopez; WHAT IS...an Elliptic Curve? by Harris B. Daniels and Álvaro Lozano-Robledo. In this issue we honor Sir Andrew J. Wiles, prover of Fermat's Last Theorem, recipient of the 2016 Abel Prize, and star of the NOVA video The Proof. We've got the official interview, reprinted from the newsletter of our friends in the European Mathematical Society; "Andrew Wiles's Marvelous Proof" by Henri Darmon; and a collection of articles on "The Mathematical Works of Andrew Wiles" assembled by guest editor Christopher Skinner. We welcome the new AMS president, Ken Ribet (another star of The Proof). Marcelo Viana, Director of IMPA in Rio, describes "Math in Brazil" on the eve of the upcoming IMO and ICM. ...

The Bibliography

Referenced Books. [Ach92] N. I. Achieser. Theory of Approximation. Dover Publications Inc., New York, 1992. Reprint of the 1956 English translation of the 1st Russian edition; the 2nd augmented Russian edition is available, Moscow, Nauka, 1965. [AH05] Kendall Atkinson and Weimin Han. Theoretical Numerical Analysis: A Functional Analysis Framework, volume 39 of Texts in Applied Mathematics. Springer, New York, second edition, 2005. [Atk89] Kendall E. Atkinson. An Introduction to Numerical Analysis. John Wiley & Sons Inc., New York, second edition, 1989. [Axe94] Owe Axelsson. Iterative Solution Methods. Cambridge University Press, Cambridge, 1994. [Bab86] K. I. Babenko. Foundations of Numerical Analysis [Osnovy chislennogo analiza]. Nauka, Moscow, 1986. [Russian]. [BD92] C. A. Brebbia and J. Dominguez. Boundary Elements: An Introductory Course. Computational Mechanics Publications, Southampton, second edition, 1992. [Ber52] S. N. Bernstein. Collected Works. Vol. I. The Constructive Theory of Functions [1905–1930]. Izdat. Akad. Nauk SSSR, Moscow, 1952. [Russian]. [Ber54] S. N. Bernstein. Collected Works. Vol. II. The Constructive Theory of Functions [1931–1953]. Izdat. Akad. Nauk SSSR, Moscow, 1954. [Russian]. [BH02] K. Binder and D. W. Heermann. Monte Carlo Simulation in Statistical Physics: An Introduction, volume 80 of Springer Series in Solid-State Sciences. Springer-Verlag, Berlin, fourth edition, 2002. [BHM00] William L. Briggs, Van Emden Henson, and Steve F. McCormick. A Multigrid Tutorial. Society for Industrial and Applied Mathematics (SIAM), Philadelphia, PA, second edition, 2000. [Boy01] John P. Boyd. Chebyshev and Fourier Spectral Methods. Dover Publications Inc., Mineola, NY, second edition, 2001. [Bra84] Achi Brandt. Multigrid Techniques: 1984 Guide with Applications to Fluid Dynamics, volume 85 of GMD-Studien [GMD Studies]. ...

UCLA Electronic Theses and Dissertations

UCLA Electronic Theses and Dissertations. Title: Algorithms for Optimal Paths of One, Many, and an Infinite Number of Agents. Permalink: https://escholarship.org/uc/item/3qj5d7dj. Author: Lin, Alex Tong. Publication Date: 2020. Peer reviewed | Thesis/dissertation. eScholarship.org, powered by the California Digital Library, University of California. UNIVERSITY OF CALIFORNIA, Los Angeles. Algorithms for Optimal Paths of One, Many, and an Infinite Number of Agents. A dissertation submitted in partial satisfaction of the requirements for the degree Doctor of Philosophy in Mathematics by Alex Tong Lin, 2020. © Copyright by Alex Tong Lin 2020. ABSTRACT OF THE DISSERTATION. Algorithms for Optimal Paths of One, Many, and an Infinite Number of Agents, by Alex Tong Lin, Doctor of Philosophy in Mathematics, University of California, Los Angeles, 2020. Professor Stanley J. Osher, Chair. In this dissertation, we provide efficient algorithms for modeling the behavior of a single agent, multiple agents, and a continuum of agents. For a single agent, we combine the modeling framework of optimal control with advances in optimization splitting in order to efficiently find optimal paths for problems in very high dimensions, thus providing alleviation from the curse of dimensionality. For a multiple, but finite, number of agents, we take the framework of multi-agent reinforcement learning and utilize imitation learning in order to decentralize a centralized expert, thus obtaining optimal multi-agents that act in a decentralized fashion. For a continuum of agents, we take the framework of mean-field games and use two neural networks, which we train in an alternating scheme, in order to efficiently find optimal paths for high-dimensional and stochastic problems. ...

Maxwell's Equations in Differential Form

Chapter 3: Maxwell's Equations in Differential Form. In Chapter 2 we introduced Maxwell's equations in integral form. We learned that the quantities involved in the formulation of these equations are the scalar quantities, electromotive force, magnetomotive force, magnetic flux, displacement flux, charge, and current, which are related to the field vectors and source densities through line, surface, and volume integrals. Thus, the integral forms of Maxwell's equations, while containing all the information pertinent to the interdependence of the field and source quantities over a given region in space, do not permit us to study directly the interaction between the field vectors and their relationships with the source densities at individual points. It is our goal in this chapter to derive the differential forms of Maxwell's equations that apply directly to the field vectors and source densities at a given point. We shall derive Maxwell's equations in differential form by applying Maxwell's equations in integral form to infinitesimal closed paths, surfaces, and volumes, in the limit that they shrink to points. We will find that the differential equations relate the spatial variations of the field vectors at a given point to their temporal variations and to the charge and current densities at that point. In this process we shall also learn two important operations in vector calculus, known as curl and divergence, and two related theorems, known as Stokes' and divergence theorems. 3.1 Faraday's Law. We recall from the previous chapter that Faraday's law is given in integral form by $\oint_C \mathbf{E} \cdot d\mathbf{l} = -\frac{d}{dt} \int_S \mathbf{B} \cdot d\mathbf{S}$ (3.1), where $S$ is any surface bounded by the closed path $C$. In the most general case, the electric and magnetic fields have all three components ($x$, $y$, and $z$) and are dependent on all three coordinates ($x$, $y$, and $z$) in addition to time ($t$). ...
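For orientation, the point form that this chapter works toward can be previewed from (3.1) with Stokes' theorem. The sketch below is not the book's derivation (which shrinks infinitesimal paths to a point); it assumes a surface S that does not move in time and fields smooth enough to exchange the time derivative with the surface integral.

```latex
\oint_C \mathbf{E}\cdot d\mathbf{l}
  = \int_S (\nabla\times\mathbf{E})\cdot d\mathbf{S}
\quad\Longrightarrow\quad
\int_S \Bigl(\nabla\times\mathbf{E}
  + \frac{\partial\mathbf{B}}{\partial t}\Bigr)\cdot d\mathbf{S} = 0
\quad\text{for every } S
\quad\Longrightarrow\quad
\nabla\times\mathbf{E} = -\frac{\partial\mathbf{B}}{\partial t}.
```

Because the surface S is arbitrary, the integrand itself must vanish, which gives the relation between the spatial variation of E and the temporal variation of B at a point.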

Some C*-Algebras with a Single Generator

Transactions of the American Mathematical Society, Volume 215, 1976. SOME C*-ALGEBRAS WITH A SINGLE GENERATOR, by Catherine L. Olsen and William R. Zame. ABSTRACT. This paper grew out of the following question: If $X$ is a compact subset of $\mathbb{C}^n$, is $C(X) \otimes M_n$ (the C*-algebra of $n \times n$ matrices with entries from $C(X)$) singly generated? It is shown that the answer is affirmative; in fact, $A \otimes M_n$ is singly generated whenever $A$ is a C*-algebra with identity, generated by a set of $n(n+1)/2$ elements of which $n(n-1)/2$ are selfadjoint. If $A$ is a separable C*-algebra with identity, then $A \otimes K$ and $A \otimes U$ are shown to be singly generated, where $K$ is the algebra of compact operators in a separable, infinite-dimensional Hilbert space, and $U$ is any UHF algebra. In all these cases, the generator is explicitly constructed. 1. Introduction. This paper grew out of a question raised by Claude Schochet and communicated to us by J. A. Deddens: If $X$ is a compact subset of $\mathbb{C}$, is $C(X) \otimes M_n$ (the C*-algebra of $n \times n$ matrices with entries from $C(X)$) singly generated? We show that the answer is affirmative; in fact, $A \otimes M_n$ is singly generated whenever $A$ is a C*-algebra with identity, generated by a set of $n(n+1)/2$ elements of which $n(n-1)/2$ are selfadjoint. Working towards a converse, we show that $A \otimes M_2$ need not be singly generated if $A$ is generated by a set consisting of four elements. ...

Current- and Varifold-Based Registration of Lung Vessel and Airway Trees

Current- and Varifold-Based Registration of Lung Vessel and Airway Trees. Yue Pan, Gary E. Christensen, Oguz C. Durumeric, Sarah E. Gerard, Joseph M. Reinhardt, The University of Iowa, IA 52242, {yue-pan-1, gary-christensen, oguz-durumeric, sarah-gerard, joe-reinhardt}@uiowa.edu. Geoffrey D. Hugo, Virginia Commonwealth University, Richmond, VA 23298, [email protected]. Abstract. Registering lung CT images is an important problem for many applications including tracking lung motion over the breathing cycle, tracking anatomical and function changes over time, and detecting abnormal mechanical properties of the lung. This paper compares and contrasts current- and varifold-based diffeomorphic image registration approaches for registering tree-like structures of the lung. In these approaches, curve-like structures in the lung (for example, the skeletons of vessels and airways segmentation) are represented by currents or varifolds in the dual space of a Reproducing Kernel Hilbert Space (RKHS). Current and varifold representations are ... 1. Introduction. Registration of lung CT images is important for many radiation oncology applications including assessing and adapting to anatomical changes, accumulating radiation dose for planning or assessment, and managing respiratory motion. For example, variation in the anatomy during radiotherapy introduces uncertainty between the planned and delivered radiation dose and may impact the appropriateness of the originally-designed treatment plan. Frequent imaging during radiotherapy accompanied by accurate longitudinal image registration facilitates measurement of such variation and its effect on the treatment plan. The cumulative dose to the target and normal tissue can be assessed by mapping delivered dose to a common reference anatomy and comparing to the prescribed dose. ...

Boundary Value Problems for Systems That Are Not Strictly

Applied Mathematics Letters 24 (2011) 757–761. On mixed initial–boundary value problems for systems that are not strictly hyperbolic. Corentin Audiard, Institut Camille Jordan, Université Claude Bernard Lyon 1, Villeurbanne, Rhone, France. Article history: received 25 June 2010; received in revised form 23 December 2010; accepted 28 December 2010. Keywords: boundary value problem; hyperbolicity; multiple characteristics. Abstract: The classical theory of strictly hyperbolic boundary value problems has received several extensions since the 70s. One of the most noticeable is the result of Metivier establishing Majda's "block structure condition" for constantly hyperbolic operators, which implies well-posedness for the initial–boundary value problem (IBVP) with zero initial data. The well-posedness of the IBVP with non-zero initial data requires that "$L^2$ is a continuable initial condition". For strictly hyperbolic systems, this result was proven by Rauch. We prove here, by using classical matrix theory, that his fundamental a priori estimates are valid for constantly hyperbolic IBVPs. © 2011 Elsevier Ltd. All rights reserved. 1. Introduction. In his seminal paper [1] on hyperbolic initial–boundary value problems, H.O. Kreiss performed the algebraic construction of a tool, now called the Kreiss symmetrizer, that leads to a priori estimates. Namely, if $u$ is a solution of
$$\begin{cases} \partial_t u + \sum_{j=1}^{d} A_j(x,t)\,\partial_{x_j} u = f, & (t,x) \in \mathbb{R}^+ \times \Omega, \\ Bu = g, & (t,x) \in \mathbb{R}^+ \times \partial\Omega, \\ u|_{t=0} = 0, \end{cases} \tag{1}$$
where the operator $\partial_t + \sum_{j=1}^{d} A_j \partial_{x_j}$ is assumed to be strictly hyperbolic and $B$ satisfies the uniform Lopatinskiĭ condition, there is some $\gamma_0 > 0$ such that $u$ satisfies the a priori estimate
$$\sqrt{\gamma}\,\|u\|_{L^2_\gamma(\mathbb{R}^+ \times \Omega)} + \|u\|_{L^2_\gamma(\mathbb{R}^+ \times \partial\Omega)} \le C\left( \|f\|_{L^2_\gamma(\mathbb{R}^+ \times \Omega)} + \|g\|_{L^2_\gamma(\mathbb{R}^+ \times \partial\Omega)} \right), \tag{2}$$
for $\gamma \ge \gamma_0$, where $L^2_\gamma$ denotes the $L^2$ space with weight $e^{-\gamma t}$. ...

Society Reports USNC/TAM

Appendix J: 2008 Society Reports, USNC/TAM. Table of Contents: J.1 AAM: Ravi-Chandar; J.2 AIAA: Chen; J.3 AIChE: Higdon; J.4 AMS: Kinderlehrer; J.5 APS: Foss; J.6 ASA: Norris; J.7 ASCE: Iwan; J.8 ASME: Kyriakides; J.9 ASTM: Chona; J.10 SEM: Shukla; J.11 SES: Jasiuk; J.12 SIAM: Healey; J.13 SNAME: Karr ...

The Top Mathematics Award

The Top Mathematics Award, by Florin Diacu. There is no Nobel Prize for mathematics. Its top award, the Fields Medal, bears the name of a Canadian. In 1896, the Swedish inventor Alfred Nobel died rich and famous. His will provided for the establishment of a prize fund. Starting in 1901 the annual interest was awarded yearly for the most important contributions to physics, chemistry, physiology or ... Fields told me and which I later verified in Sweden, namely, that Nobel hated the mathematician Mittag-Leffler and that mathematics would not be one of the domains in which the Nobel prizes would be available." Whatever the reason, Nobel had little esteem for mathematics. He was a practical man who ignored basic research. He never understood its importance and long term consequences. But Fields did, and he meant to do his best to promote it. Fields was born in Hamilton, Ontario in 1863. At the age of 21, he graduated from the University of Toronto with a B.A. in mathematics. Three years later, he finished his Ph.D. at Johns Hopkins University and was then appointed professor at Allegheny College in Pennsylvania, where he taught from 1889 to 1892. But soon his dream of pursuing research faded away. North America was not ready to fund novel ideas in science. Then, an opportunity to leave for Europe arose. For the next 10 years, Fields studied in Paris and Berlin with some of the best mathematicians of his time. After feeling accomplished, he returned home: his country needed him. [Image captions: John Charles Fields; Fields Medal.]