Feature-Based Comparison of iSCSI Target Implementations

Recommended publications

Administració De Sistemes GNU Linux Mòdul4 Administració

Local administration. Josep Jorba Esteve. PID_00238577. GNUFDL • PID_00238577. Permission is granted to copy, distribute and modify this document under the terms of the GNU Free Documentation License, Version 1.3 or any later version published by the Free Software Foundation, with no invariant sections and no front-cover or back-cover texts. The terms of the license are available at http://www.gnu.org/licenses/fdl-1.3.html. Contents: Introduction; 1. Basic tools for the administrator; 1.1. Graphical tools and command lines; 1.2. Standards documents; 1.3. Online system documentation; 1.4. Package management tools; 1.4.1. TGZ packages; 1.4.2. Fedora/Red Hat: RPM packages; 1.4.3. Debian: DEB packages; 1.4.4. New packaging formats: Snap and Flatpak; 1.5. Generic administration tools; 1.6. Other tools

Storage Administration Guide: SUSE Linux Enterprise Server 12 SP4

Storage Administration Guide, SUSE Linux Enterprise Server 12 SP4. Provides information about how to manage storage devices on a SUSE Linux Enterprise Server. Publication Date: September 24, 2021. SUSE LLC, 1800 South Novell Place, Provo, UT 84606, USA. https://documentation.suse.com Copyright © 2006–2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”. For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof. Contents: About This Guide; 1 Available Documentation; 2 Giving Feedback; 3 Documentation Conventions; 4 Product Life Cycle and Support (Support Statement for SUSE Linux Enterprise Server; Technology Previews); I File Systems and Mounting; 1 Overview

Fibre Channel Interface

Fibre Channel Interface. ©2006, Seagate Technology LLC. All rights reserved. Publication number: 100293070, Rev. A, March 2006. Seagate and Seagate Technology are registered trademarks of Seagate Technology LLC. SeaTools, SeaFONE, SeaBOARD, SeaTDD, and the Wave logo are either registered trademarks or trademarks of Seagate Technology LLC. Other product names are registered trademarks or trademarks of their owners. Seagate reserves the right to change, without notice, product offerings or specifications. No part of this publication may be reproduced in any form without written permission of Seagate Technology LLC. Revision status summary sheet: Revision A; Date 03/08/06; Writer/Engineer C. Chalupa/J. Coomes; Sheets Affected: All. Contents: 1.0 Contents; 2.0 Publication overview; 2.1 Acknowledgements; 2.2 How to use this manual; 2.3 General interface description; 3.0 Introduction to Fibre Channel; 3.1 General information; 3.2 Channels vs. networks; 3.3 The advantages of Fibre Channel; 4.0 Fibre Channel standards; 4.1 General information; 4.1.1 Description of Fibre Channel levels; 4.1.1.1 FC-0; 4.1.1.2 FC-1; 4.1.1.3 FC-1.5; 4.1.1.4 FC-2; 4.1.1.5 FC-3; 4.1.1.6 FC-4; 4.1.2 Relationship between the levels; 4.1.3 Topology standards; 4.1.4 FC Implementation Guide (FC-IG); 4.1.5 Applicable Documents

AMD Opteron™ Shared Memory MP Systems

AMD Opteron™ Shared Memory MP Systems. Ardsher Ahmed, Pat Conway, Bill Hughes, Fred Weber. Hot Chips 14, September 22, 2002. Agenda: • Glueless MP systems • MP system configurations • Cache coherence protocol • 2-, 4-, and 8-way MP system topologies • Beyond 8-way MP systems. AMD Opteron™ Processor Architecture [block diagram: CPU, SRQ, XBAR and MCT, with a 128-bit DRAM interface at 5.3 GB/s and three HyperTransport™ (HT) links at 3.2 GB/s per direction]. Glueless MP System [block diagram: four processors, each with CPU, SRQ, XBAR, MCT and local DRAM, connected by coherent HyperTransport™ (cHT) links, with I/O attached over non-coherent HyperTransport™ (HT) links]. MP Architecture: • Programming model of memory is effectively SMP – Physical address space is flat and fully coherent – Far to near memory latency ratio in a 4P system is designed to be < 1.4 – Latency difference between remote and local memory is comparable to the difference between a DRAM page hit and a DRAM page conflict – DRAM locations can be contiguous or interleaved – No processor affinity or NUMA tuning required • MP support designed in from the beginning – Lower overall chip count results in outstanding system reliability – Memory Controller and XBAR operate at the processor frequency – Memory subsystem scales with frequency improvements. MP Architecture (contd.): • Integrated Memory Controller

Architecture and Application of Infortrend InfiniBand Design

Architecture and Application of Infortrend InfiniBand Design. Application Note. Version: 1.3. Updated: October, 2018. Abstract: Focusing on the architecture and application of InfiniBand technology, this document introduces the architecture, application scenarios and highlights of the Infortrend InfiniBand host module design. Contents: What is InfiniBand (Overview and Background; Basics of InfiniBand: Hardware, Architecture); Application Scenarios for HPC; Current Limitation; Infortrend InfiniBand Host Board Design

USB Composite Gadget Using CONFIG-FS on DRA7xx Devices

Application Report SPRACB5, September 2017. USB Composite Gadget Using CONFIG-FS on DRA7xx Devices. RaviB. ABSTRACT: This application note explains how to create a USB composite gadget, network control model (NCM) and abstract control model (ACM) from the user space using Linux® CONFIG-FS on the DRA7xx platform. Contents: 1 Introduction; 2 USB Composite Gadget Using CONFIG-FS; 3 Creating Composite Gadget From User Space; 4 References. List of Figures: 1 Block Diagram of USB Composite Gadget; 2 Selection of CONFIGFS Through menuconfig; 3 Select USB Configuration Through menuconfig; 4 Composite Gadget Configuration Items as Files and Directories; 5 VID, PID, and Manufacturer String Configuration; 6 Kernel Logs Show Enumeration of USB Composite Gadget by Host; 7 Ping

Chapter 6 MIDI, SCSI, and Sample Dumps

Chapter 6: MIDI, SCSI, and Sample Dumps. SCSI Guidelines. The following sections contain information on using SCSI with the K2600, as well as specific sections dealing with the Mac and the K2600. Disk Size Restrictions: The K2600 accepts hard disks with up to 2 gigabytes of storage capacity. If you attach an unformatted disk that is larger than 2 gigabytes, the K2600 will still be able to format it, but only as a 2 gigabyte disk. If you attach a formatted disk larger than 2 gigabytes, the K2600 will not be able to work with it; you could reformat the disk, but this, of course, would erase the disk entirely. Configuring a SCSI Chain: Here are some basic guidelines to follow when configuring a SCSI chain: 1. According to the SCSI Specification, the maximum SCSI cable length is 6 meters (19.69 feet). You should limit the total length of all SCSI cables connecting external SCSI devices with Kurzweil products to 17 feet (5.2 meters). To calculate the total SCSI cable length, add the lengths of all SCSI cables, plus eight inches for every external SCSI device connected. No single cable length in the chain should exceed eight feet. 2. The first and last devices in the chain must be terminated. There is a single exception to this rule, however. A K2600 with an internal hard drive and no external SCSI devices attached should have its termination disabled. If you later add an external device to the K2600's SCSI chain, you must enable the K2600's termination at that time.

Application Note 904: An Introduction to the Differential SCSI Interface

DS36954. Application Note 904: An Introduction to the Differential SCSI Interface. Literature Number: SNLA033. National Semiconductor, Application Note 904 (AN-904), John Goldie, August 1993. OVERVIEW: The scope of this application note is to provide an introduction to the SCSI Parallel Interface and insight into the differential option specified by the SCSI standards. This application covers the following topics: • The SCSI Interface • Why Differential SCSI? • The SCSI Bus • SCSI Bus States • SCSI Options: Fast and Wide • The SCSI Termination • SCSI Controller Requirements • Summary of SCSI Standards • References/Standards. The companion Application Note (AN-905) focuses on the features of National’s new RS-485 hex transceiver. The DS36BC956 specifically designed for use in differential SCSI applications is also optimal for use in other high speed, par- THE SCSI INTERFACE: The Small Computer System Interface is an ANSI (American National Standards Institute) interface standard defining a peer to peer generic input/output bus (I/O bus). ... different devices to be connected to the same daisy chained cable (SCSI-1 and 2 allow up to eight devices while the proposed SCSI-3 standard will allow up to 32 devices). A typical SCSI bus configuration is shown in Figure 1. WHY DIFFERENTIAL SCSI? In comparison to single-ended SCSI, differential SCSI costs more and has additional power and PC board space requirements. However, the gained benefits are well worth the additional IC cost, PCB space, and required power in many applications. Differential SCSI provides the following benefits over single-ended SCSI: • Reliable High Transfer Rates: easily capable of operating at 10MT/s (Fast SCSI) without special attention to terminations. Even higher data rates are currently being standardized (FAST-20 @ 20MT/s).

Oracle® Linux 7 Managing File Systems

Oracle® Linux 7 Managing File Systems F32760-07 August 2021 Oracle Legal Notices Copyright © 2020, 2021, Oracle and/or its affiliates. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are "commercial computer software" or "commercial computer software documentation" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. 
As such, the use, reproduction, duplication, release, display, disclosure, modification, preparation of derivative works, and/or adaptation of i) Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs), ii) Oracle computer documentation and/or iii) other Oracle data, is subject to the rights and limitations specified in the license contained in the applicable contract. -

Unionfs: User- and Community-Oriented Development of a Unification File System

Unionfs: User- and Community-Oriented Development of a Unification File System. David Quigley, Josef Sipek, Charles P. Wright, and Erez Zadok. Stony Brook University. {dquigley,jsipek,cwright,ezk}@cs.sunysb.edu Abstract: Unionfs is a stackable file system that virtually merges a set of directories (called branches) into a single logical view. Each branch is assigned a priority and may be either read-only or read-write. When the highest priority branch is writable, Unionfs provides copy-on-write semantics for read-only branches. These copy-on-write semantics have led to widespread use of Unionfs by LiveCD projects including Knoppix and SLAX. In this paper we describe our experiences distributing and maintaining an out-of-kernel module since November 2004. As of March 2006 Unionfs has been downloaded by over 6,700 unique users and is used by over two dozen other projects. If a file exists in multiple branches, the user sees only the copy in the higher-priority branch. Unionfs allows some branches to be read-only, but as long as the highest-priority branch is read-write, Unionfs uses copy-on-write semantics to provide an illusion that all branches are writable. This feature allows Live-CD developers to give their users a writable system based on read-only media. There are many uses for namespace unification. The two most common uses are Live-CDs and diskless/NFS-root clients. On Live-CDs, by definition, the data is stored on a read-only medium. However, it is very convenient for users to be able to modify the data. Unifying the read-only CD with a writable RAM

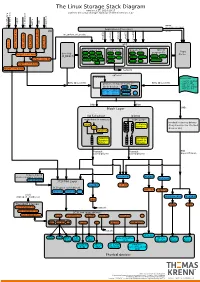

The Linux Storage Stack Diagram

The Linux Storage Stack Diagram, version 3.17 (2014-10-17), outlines the Linux storage stack as of kernel version 3.17. [Diagram, top to bottom: applications (processes) issuing calls such as open(2), read(2), write(2), stat(2), chmod(2) and mmap; the VFS with block-based file systems (ext2, ext3, ext4, xfs, btrfs, iso9660, gfs, ocfs), network file systems (NFS, coda, smbfs, ceph), pseudo file systems (proc, sysfs, pipefs, futexfs, usbfs) and special-purpose file systems (tmpfs, ramfs, devtmpfs); the page cache and direct I/O (O_DIRECT); optional stackable devices on top of “normal” block devices (drbd, LVM, device mapper, mdraid, dm-crypt, dm-mirror, dm-cache, dm-thin, bcache); the block layer, where BIOs pass through the I/O scheduler (noop, cfq, deadline) or the blkmq software and hardware dispatch queues; BIO-based and request-based drivers (null_blk, virtio_blk, mtip32xx, nvme, skd, rbd, dm-multipath); and the SCSI mid layer with its upper level drivers and transport classes. The LIO target stack (target_core_mod with target_core_file, target_core_iblock and target_core_pscsi, and the fabric modules iscsi_target_mod, tcm_fc, sbp_target, tcm_usb_gadget, tcm_vhost and tcm_qla2xxx) is shown alongside.]

ODROID-HC2: 3.5” High Powered Storage February 1, 2018

ODROID WiFi Access Point: Share Files Via Samba. February 1, 2018. How to set up an ODROID with a WiFi access point so that an ODROID’s hard drive can be accessed and modified from another computer. This is primarily aimed at allowing access to images, videos, and log files on the ODROID. ODROID-HC2: 3.5” High powered storage. February 1, 2018. The ODROID-HC2 is an affordable mini PC and perfect solution for a network attached storage (NAS) server. This device's home cloud-server capabilities centralize data and enable users to share and stream multimedia files to phones, tablets, and other devices across a network. It is an ideal tool for many use Using SquashFS As A Read-Only Root File System. February 1, 2018. This guide describes the usage of SquashFS PiFace: Control and Display 2. February 1, 2018. For those who have the PiFace Control and Display 2, and want to make it compatible with the ODROID-C2 Android Gaming: Data Wing, Space Frontier, and Retro Shooting – Pixel Space Shooter. February 1, 2018. Variations on a theme! Race, blast into space, and blast things into pieces that are racing towards us. The fun doesn’t need to stop when you take a break from your projects. Our monthly pick on Android games. Linux Gaming: Saturn Games – Part 1. February 1, 2018. I think it’s time we go into a bit more detail about Sega Saturn for the ODROID-XU3/XU4 Gaming Console: Running Your Favorite Games On An ODROID-C2 Using Android. February 1, 2018. I built a gaming console using an ODROID-C2 running Android 6 Controller Area Network (CAN) Bus: Implementation