Comparative Study of Performance Testing Tools: Apache JMeter and HP LoadRunner

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

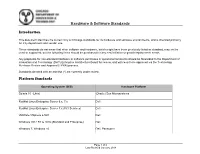

Hardware & Software Standards

Introduction: This document identifies the current City of Chicago standards for its hardware and software environments, and is intended primarily for City department and vendor use. These standards do not mean that other software and hardware, which might have been previously listed as standard, may not be used or supported, but the following items should be purchased for any new initiative or growth/replacement needs. Any proposals for non-standard hardware or software purchases, or questions/comments, should be forwarded to the Department of Innovation and Technology (DoIT) Enterprise Architecture Board for review, and will need to be approved via the Technology Purchase Review and Approval (TPRA) process. Standards denoted with an asterisk (*) are currently under review. Platform Standards (Operating System (O/S): Hardware Platform): Solaris 10 (Unix): (Oracle) Sun Microsystems; RedHat Linux Enterprise Server 6.x, 7.x: Dell; RedHat Linux Enterprise Server 7.x (PCI Services): Dell; VMWare VSphere 6.5U1: Dell; Windows 2012 R2 & 2016 (Standard and Enterprise): Dell; Windows 7, Windows 10: Dell, Panasonic. Last Revised January 2018. Enterprise Services Type Windows 2008 Server All other platforms Oracle Enterprise 11gR2, 12cR1; Postgres 9.x or 10.x (EnterpriseDB or Database N/A community) Print O/S n/a File O/S n/a Email Exchange 2016 / Office365 n/a Desktops, Laptops, & Tablets (Type: Model): Standard Users: Dell OptiPlex 5050 SFF, Dell OptiPlex 7450 All-In-One; Mobile User: Latitude 12 Rugged Extreme, Latitude 14 Rugged 5414, Latitude 12 2 in 1 with case and Doc, Latitude 5480 14" Laptop 6th gen proc; High-End Workstation: Dell Precision T5810; Laptop Accessories: Docking: for the E-5470 units, Dell Business Dock - WD15 with 130W Adapter; Monitor: Dell 23 Monitor – P2317H. Printing and Scanning: The Department of Fleet and Facility Management (2FM) oversees print services for the City of Chicago.

IT CLASSIFICATION TECHNOLOGY LIST – ISSUE DATE: April 18, 2017

Technology Definition: A set of knowledge, skills and/or abilities, taking a significant time (e.g. 6 months) to learn, and applicable to the defined classification specification assigned. Example of Tools: These are examples only, for illustration purposes, and are not meant to constitute a full and/or comprehensive list. CLASSIFICATION DISCIPLINE TECHNOLOGY DEFINITION EXAMPLE OF TOOLS Omegamon, IBM Admin Tools, Log Analyzer, Relational The relational database management system provided by IBM that runs on Unix, Linux, DBA DB2 DB2 Compare, Nsynch, TSM, Universal Database Windows and z/OS platforms including DB2 Connect and related tools. Command, SQL SQL Server Mgmt. Studio, Red Gate, Vantage, SQL Server The relational database management system and related tools provided by Microsoft Corp. Tivoli, Snap Manager, Toad, Enterprise Manager, SQL ORACLE The relational database management system and related tools provided by Oracle Corp. ASE SYBASE The relational database management system and related tools provided by Sybase. Cincom SUPRA SQL – Cincom’s relational database management system provides access to data Supra 2.X in open and proprietary environments through industry-standard SQL for standalone and client/server application solutions. Open Source Open Source database management system such as Mysql. Phpadmin, mysqladmin, Vertica Hierarchical The hierarchical database management system provided by IBM that runs on z/OS mainframe IMS BMC IMS Utilities, Strobe, Omegamon Database platform including related tools. Cincom SUPRA® PDM – Cincom’s networked, hierarchical database management system provides access to your data through a Physical Data Manager (PDM) that manages the data Supra 1 structures of the physical files that store the data.

Types of Software Testing

Software testing is the process of assessing a software product to identify differences between the output produced for a given input and the expected result, and to evaluate the characteristics of the product. The testing process evaluates the quality of the software. You know what testing does, so there is no need to explain further. But are you aware of the types of testing? It is indeed a sea. Before we get to the types, let’s look at the standards that need to be maintained. Standards of Testing: Every test should meet the user requirements. Exhaustive testing is not possible, so the right amount of testing is determined by a risk assessment of the application. Every test to be conducted should be planned before it is executed. Testing follows the 80/20 rule, which states that 80% of defects originate from 20% of program components. Start testing with small parts and extend it to larger components. Software testers know about the different sorts of software testing. In this article, we have included most of the types of software testing that testers, developers, and QA teams use most often in their everyday work. Let’s understand them. Black Box Testing: Black box testing is a category of strategy that disregards the internal mechanism of the system and focuses on the output generated against any input and on the performance of the system.

Smoke Testing What Is Smoke Testing?

What is Smoke Testing? Smoke testing is the initial testing process used to check whether the software under test is ready and stable enough for further testing. The term ‘Smoke Testing’ comes from hardware testing, where an initial pass is made to check that the device does not catch fire or smoke when it is first switched on. Before smoke testing starts, a few test cases need to be created once for reuse in smoke testing. These test cases are executed before actual testing begins, to check that the critical functionality of the program is working. The set of test cases is written so that all functionality is verified, but not in depth. The objective is not to perform exhaustive testing; the tester only needs to check navigation and simple operations by asking simple questions such as “Can the tester access the software application?”, “Can the user navigate from one window to another?”, and “Is the GUI responsive?”. Here is a graphical representation of smoke testing and sanity testing in software testing: [Figure: Smoke Sanity Testing Diagram]. The test cases can be executed manually or automated, depending on the project requirements. This type of testing focuses mainly on the important functionality of the application; the tester does not perform detailed testing of each software component, which can be covered in further testing of the application. Smoke testing is typically executed by testers after every build is received, to check that the build is in a testable condition. This type of testing is applicable at the Integration Testing, System Testing, and Acceptance Testing levels.

WebLOAD IDE User's Guide

IDE User’s Guide, Version 10.3. The software supplied with this document is the property of RadView Software and is furnished under a licensing agreement. Neither the software nor this document may be copied or transferred by any means, electronic or mechanical, except as provided in the licensing agreement. The information in this document is subject to change without prior notice and does not represent a commitment by RadView Software or its representatives. WebLOAD IDE User’s Guide. © Copyright 2015 by RadView Software. All rights reserved. August, 2015, RadView Publication Number WL-OSSPRO-0913-IUG10. WebLOAD, TestTalk, Authoring Tools, ADL, AppletLoad, and WebExam are trademarks or registered trademarks of RadView Software. IBM and OS/2 are trademarks of International Business Machines Corporation. Microsoft Windows, Microsoft Windows 95, Microsoft Windows NT, Microsoft Word for Windows, Microsoft Internet Explorer, Microsoft Excel for Windows, Microsoft Access for Windows and Microsoft Access Runtime are trademarks or registered trademarks of Microsoft Corporation. SPIDERSESSION is a trademark of NetDynamics. UNIX is a registered trademark of AT&T Bell Laboratories. Solaris, Java and Java-based marks are registered trademarks of Sun Microsystems, Inc. HP-UX is a registered trademark of Hewlett-Packard. SPARC is a registered trademark of SPARC International, Inc. Netscape Navigator and LiveConnect are registered trademarks of Netscape Communications Corporation. Any other trademark name appearing in this book is used for editorial purposes only and to the benefit of the trademark owner with no intention of infringing upon that trademark. For product assistance or information, contact: Toll free in the US: 1-888-RadView Fax: +1-908-864-8099 World Wide Web: www.RadView.com North American Headquarters: International Headquarters: RadView Software Inc.

Parasoft Static Application Security Testing (SAST) for .Net - C/C++ - Java Platform

Parasoft® dotTEST™ / Jtest (for Java) / C/C++test is an integrated Development Testing solution for automating a broad range of testing best practices proven to improve development team productivity and software quality. dotTEST / Java Test / C/C++ Test also seamlessly integrates with Parasoft SOAtest as an option, which enables end-to-end functional and load testing for complex distributed applications and transactions. Capabilities Overview. STATIC ANALYSIS ● Broad support for languages and standards: Security | C/C++ | Java | .NET | FDA | Safety-critical ● Static analysis tool industry leader since 1994 ● Simple out-of-the-box integration into your SDLC ● Prevent and expose defects via multiple analysis techniques ● Find and fix issues rapidly, with minimal disruption ● Integrated with Parasoft's suite of development testing capabilities, including unit testing, code coverage analysis, and code review. CODE COVERAGE ANALYSIS ● Track coverage during unit test execution and merge the data with coverage captured during functional and manual testing in Parasoft Development Testing Platform to measure true test coverage ● Integrate coverage data with static analysis violations, unit testing results, and other testing practices in Parasoft Development Testing Platform for a complete view of the risk associated with your application ● Achieve test traceability to understand the impact of change, focus testing activities based on risk, and meet compliance

LoadRunner Enterprise Installation Guide

LoadRunner Enterprise, Software Version: 2020. Installation Guide. Go to Help Center online: http://admhelp.microfocus.com/pc/ Document Release Date: December 31, 2019 | Software Release Date: December 2019. Legal Notices. Disclaimer: Certain versions of software and/or documents (“Material”) accessible here may contain branding from Hewlett-Packard Company (now HP Inc.) and Hewlett Packard Enterprise Company. As of September 1, 2017, the Material is now offered by Micro Focus, a separately owned and operated company. Any reference to the HP and Hewlett Packard Enterprise/HPE marks is historical in nature, and the HP and Hewlett Packard Enterprise/HPE marks are the property of their respective owners. Warranty: The only warranties for products and services of Micro Focus and its affiliates and licensors (“Micro Focus”) are set forth in the express warranty statements accompanying such products and services. Nothing herein should be construed as constituting an additional warranty. Micro Focus shall not be liable for technical or editorial errors or omissions contained herein. The information contained herein is subject to change without notice. Restricted Rights Legend: Contains Confidential Information. Except as specifically indicated otherwise, a valid license is required for possession, use or copying. Consistent with FAR 12.211 and 12.212, Commercial Computer Software, Computer Software Documentation, and Technical Data for Commercial Items are licensed to the U.S. Government under vendor's standard commercial license.

Enterprise Development with Flex

Enterprise Development with Flex, by Yakov Fain, Victor Rasputnis, and Anatole Tartakovsky. Beijing • Cambridge • Farnham • Köln • Sebastopol • Taipei • Tokyo. Copyright © 2010 Yakov Fain, Victor Rasputnis, and Anatole Tartakovsky. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://my.safaribooksonline.com). For more information, contact our corporate/institutional sales department: (800) 998-9938 or [email protected]. Editor: Mary E. Treseler; Development Editor: Linda Laflamme; Production Editor: Adam Zaremba; Copyeditor: Nancy Kotary; Proofreader: Sada Preisch; Indexer: Ellen Troutman; Cover Designer: Karen Montgomery; Interior Designer: David Futato; Illustrator: Robert Romano. Printing History: March 2010: First Edition. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. Enterprise Development with Flex, the image of red-crested wood-quails, and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc. was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and authors assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein.

A Framework for Evaluating Performance of Software Testing Tools

INTERNATIONAL JOURNAL OF SCIENTIFIC & TECHNOLOGY RESEARCH, VOLUME 9, ISSUE 02, FEBRUARY 2020, ISSN 2277-8616. Pramod Mathew Jacob, Prasanna Mani. Abstract: Software plays a pivotal role in this technology era. Because its applicable domains keep growing, the quality of the software being developed must be monitored and controlled. Software organizations follow many testing methodologies to perform quality management. Testing methodologies can be either manual or automated. Automated testing tools have gained wide acceptance among software professionals because they offer more features and functionality than manual testing. There are hundreds of test automation tools available, among which some perform exceptionally well. Because so many tools are available, it is a herculean task for the project manager to choose the automation tool that is suitable for their project domain. In this paper, we derive a software testing tool selection model which evaluates the performance aspects of a script-based testing tool. Experimental evaluation shows that it can be used to compare and evaluate various performance characteristics of commercially accepted test automation tools based on user experience as well as system performance. Index Terms: Automated testing, Software testing, Test script, Testing Tool, Test bed, Verification and Validation. 1 INTRODUCTION: Software has advanced in recent years, expanding its applicable domains. Software is embedded in almost all electronic gadgets and systems. In this scenario the quality of the software plays a significant role. The customer or end-user should be satisfied, which primarily depends on the quality and capability of the software being developed.

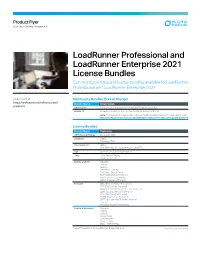

LoadRunner Professional and LoadRunner Enterprise 2021

Product Flyer, Application Delivery Management. LoadRunner Professional and LoadRunner Enterprise 2021 License Bundles: Community bundles and license bundles available for LoadRunner Professional and LoadRunner Enterprise 2021. Learn more at https://software.microfocus.com/products Community Bundles (Free of Charge) (Bundle Name: Description): Community: 50 Vusers for all protocols, except for Templates and GUI. Mobile UI: Unlimited Vusers for the TruClient - Native Mobile protocol. Note: This bundle requires a Micro Focus Mobile Center license. For more details, see: https://software.microfocus.com/software/customer-technical-support-services License Bundles Bundle Name Protocols .NET Record/Replay Microsoft .NET Database ODBC Oracle - 2 Tier Development SDK Unit Test - NUnit, Junit Selenium, LeanFT* GUI Unified Functional Testing (UFT) Java Java Record Replay Java over HTTP Mobile and IoT DevWeb JMeter Gatling Web - HTTP/HTML TruClient - Mobile Web SMP (SAP Mobile Platform) MQTT (Internet of Things) CoAP (Internet of Things) Network DNS (Domain Name Resolution) FTP (File Transfer Protocol) IMAP (Internet Message Access Protocol) LDAP (Listing Directory Service) MAPI (Microsoft Exchange) POP3 (Post Office Protocol) SMTP (Simple Mail Transfer Protocol) Tuxedo Windows Sockets (Winsock) Oracle E-Business DevWeb JMeter Gatling Oracle NCA Oracle - Web Siebel - Web Web - HTTP/HTML Remote Access Citrix ICA. * LeanFT support is for LoadRunner Enterprise only. Contact us at: www.microfocus.com

Automated Testing of Firmware Installation and Update Scenarios for Peripheral Devices

DEGREE PROJECT IN COMPUTER SCIENCE AND ENGINEERING, SECOND CYCLE, 30 CREDITS. STOCKHOLM, SWEDEN 2019. DAG REUTERSKIÖLD. KTH ROYAL INSTITUTE OF TECHNOLOGY, SCHOOL OF ELECTRICAL ENGINEERING AND COMPUTER SCIENCE. Master in Computer Science. Date: August 12, 2019. Supervisor: Hamid Faragardi. Examiner: Elena Troubitsyna. Host company: Tobii AB. Swedish title: Automatisering av enhetsinstallation, uppdatering och testning med hjälp av virtuella maskiner. Abstract: This research presents an approach to transition from manual to automated testing of hardware specific firmware. The manual approach for firmware testing can be repetitive and time consuming. A significant proportion of the time is spent on cleaning and re-installing operating systems so that old firmware does not interfere with the newer firmware that is being tested. The approach in this research utilizes virtual machines and presents an automation framework. One component of the automation framework is an application to imitate connected peripheral devices to bypass hardware dependencies of firmware installers. The framework also consists of automation and pipeline scripts with the objective to execute firmware installers and detect errors and abnormalities in the installation and updating processes. The framework can run on locally hosted virtual machines, but is most applicable using cloud hosted virtual machines, where it is part of a continuous integration that builds, downloads, installs, updates and tests new firmware versions, in a completely automated manner.
The framework is evaluated by measuring and comparing execution times with manually conducted installation and updating tests, and the result shows that the framework completes tests much faster than the manual approach.

GUI-Guided Test Script Repair for Mobile Apps

This is the author's version of an article that has been published in this journal. Changes were made to this version by the publisher prior to publication. The final version of record is available at http://dx.doi.org/10.1109/TSE.2020.3007664 Authors: Minxue Pan, Tongtong Xu, Yu Pei, Zhong Li, Tian Zhang, Xuandong Li. Abstract: Graphical User Interface (GUI) testing is widely used to test mobile apps. As mobile apps are frequently updated and need repeated testing, to reduce the test cost, their test cases are often coded as scripts to enable automated execution using test harnesses/tools. When those mobile apps evolve, however, many of the test scripts may become broken due to changes made to the app GUIs. While it is desirable that the broken scripts get repaired, doing it manually can be prohibitively expensive if the number of tests needing repair is large. We propose in this paper a novel approach named METER for repairing broken GUI test scripts automatically when mobile apps evolve. METER leverages computer vision techniques to infer GUI changes between two versions of a mobile app and uses the inferred changes to guide the repair of GUI test scripts. Since METER only relies on screenshots to repair GUI tests, it is applicable to apps targeting open or closed source mobile platforms. In experiments conducted on 22 Android apps and 6 iOS apps, repairs produced by METER helped preserve 63.7% and 38.8% of all the test actions broken by the GUI changes, respectively.