Speech and Audio Processing for Coding, Enhancement and Recognition Speech and Audio Processing for Coding, Enhancement and Recognition

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Speech Coding and Compression

Introduction to voice coding/compression PCM coding Speech vocoders based on speech production LPC based vocoders Speech over packet networks Speech coding and compression Corso di Networked Multimedia Systems Master Universitario di Primo Livello in Progettazione e Gestione di Sistemi di Rete Carlo Drioli Università degli Studi di Verona Facoltà di Scienze Matematiche, Dipartimento di Informatica Fisiche e Naturali Speech coding and compression Introduction to voice coding/compression PCM coding Speech vocoders based on speech production LPC based vocoders Speech over packet networks Speech coding and compression: OUTLINE Introduction to voice coding/compression PCM coding Speech vocoders based on speech production LPC based vocoders Speech over packet networks Speech coding and compression Introduction to voice coding/compression PCM coding Speech vocoders based on speech production LPC based vocoders Speech over packet networks Introduction Approaches to voice coding/compression I Waveform coders (PCM) I Voice coders (vocoders) Quality assessment I Intelligibility I Naturalness (involves speaker identity preservation, emotion) I Subjective assessment: Listening test, Mean Opinion Score (MOS), Diagnostic acceptability measure (DAM), Diagnostic Rhyme Test (DRT) I Objective assessment: Signal to Noise Ratio (SNR), spectral distance measures, acoustic cues comparison Speech coding and compression Introduction to voice coding/compression PCM coding Speech vocoders based on speech production LPC based vocoders Speech over packet networks -

Space Application Development Board

Space Application Development Board The Space Application Development Board (SADB-C6727B) enables developers to implement systems using standard and custom algorithms on processor hardware similar to what would be deployed for space in a fully radiation hardened (rad-hard) design. The SADB-C6727B is devised to be similar to a space qualified rad-hardened system design using the TI SMV320C6727B-SP DSP and high-rel memories (SRAM, SDRAM, NOR FLASH) from Cobham. The VOCAL SADB- C6727B development board does not use these high reliability space qualified components but rather implements various common bus widths and memory types/speeds for algorithm development and performance evaluation. VOCAL Technologies, Ltd. www.vocal.com 520 Lee Entrance, Suite 202 Tel: (716) 688-4675 Buffalo, New York 14228 Fax: (716) 639-0713 Audio inputs are handled by a TI ADS1278 high speed multichannel analog-to-digital converter (ADC) which has a variant qualified for space operation. Audio outputs may be generated from digital signal Pulse Density Modulation (PDM) or Pulse Width Modulation (PWM) signals to avoid the need for a space qualified digital-to-analog converter (DAC) hardware or a traditional audio codec circuit. A commercial grade AIC3106 audio codec is provided for algorithm development and for comparison of audio performance to PDM/PWM generated audio signals. Digital microphones (PDM or I2S formats) can be sampled by the processor directly or by the AIC3106 audio codec (PDM format only). Digital audio inputs/outputs can also be connected to other processors (using the McASP signals directly). Both SRAM and SDRAM memories are supported via configuration resistors to allow for developing and benchmarking algorithms using either 16-bit wide or 32-bit wide busses (also via configuration resistors). -

Concatenation of Compression Codecs – the Need for Objective Evaluations

Concatenation of compression codecs – The need for objective evaluations C.J. Dalton (UKIB) In this article the Author considers, firstly, a hypothetical broadcast network in which compression equipments have replaced several existing functions – resulting in multiple-cascading. Secondly, he describes a similar network that has been optimized for compression technology. Picture-quality assessment methods – both conventional and new, fied for still-picture applications in the printing in- subjective and objective – are dustry and was adapted to motion video applica- discussed with the aim of providing tions, by encoding on a picture-by-picture basis. background information. Some Various proprietary versions of JPEG, with higher proposals are put forward for compression ratios, were introduced to reduce the objective evaluation together with storage requirements, and alternative systems based on Wavelets and Fractals have been imple- initial observations when concaten- mented. Due to the availability of integrated hard- ating (cascading) codecs of similar ware, motion JPEG is now widely used for off- and different types. and on-line editing, disc servers, slow-motion sys- tems, etc., but there is little standardization and few interfaces at the compressed level. The MPEG-1 compression standard was aimed at 1. Introduction progressively-scanned moving images and has been widely adopted for CD-ROM and similar ap- Bit-rate reduction (BRR) techniques – commonly plications; it has also found limited application for referred to as compression – are now firmly estab- broadcast video. The MPEG-2 system is specifi- lished in the latest generation of broadcast televi- cally targeted at the complete gamut of video sys- sion equipments. tems; for standard-definition television, the MP@ML codec – with compression ratios of 20:1 Original language: English Manuscript received 15/1/97. -

MIGRATING RADIO CALL-IN TALK SHOWS to WIDEBAND AUDIO Radio Is the Original Social Network

Established 1961 2013 WBA Broadcasters Clinic Madison, WI MIGRATING RADIO CALL-IN TALK SHOWS TO WIDEBAND AUDIO Radio is the original Social Network • Serves local or national audience • Allows real-time commentary from the masses • The telephone becomes the medium • Telephone technical factors have limited the appeal of the radio “Social Network” Telephones have changed over the years But Telephone Sound has not changed (and has gotten worse) This is very bad for Radio Why do phones sound bad? • System designed for efficiency not comfort • Sampling rate of 8kHz chosen for all calls • 4 kHz max response • Enough for intelligibility • Loses depth, nuance, personality • Listener fatigue Why do phones sound so bad ? (cont) • Low end of telephone calls have intentional high- pass filtering • Meant to avoid AC power hum pickup in phone lines • Lose 2-1/2 Octaves of speech audio on low end • Not relevant for digital Why Phones Sound bad (cont) Los Angeles Times -- January 10, 2009 Verizon Communications Inc., the second-biggest U.S. telephone company, plans to do away with traditional phone lines within seven years as it moves to carry all calls over the Internet. An Internet-based service can be maintained at a fraction of the cost of a phone network and helps Verizon offer a greater range of services, Stratton said. "We've built our business over the years with circuit-switched voice being our bread and butter...but increasingly, we are in the business of selling, basically, data connectivity," Chief Marketing Officer John Stratton said. VoIP -

Digital Speech Processing— Lecture 17

Digital Speech Processing— Lecture 17 Speech Coding Methods Based on Speech Models 1 Waveform Coding versus Block Processing • Waveform coding – sample-by-sample matching of waveforms – coding quality measured using SNR • Source modeling (block processing) – block processing of signal => vector of outputs every block – overlapped blocks Block 1 Block 2 Block 3 2 Model-Based Speech Coding • we’ve carried waveform coding based on optimizing and maximizing SNR about as far as possible – achieved bit rate reductions on the order of 4:1 (i.e., from 128 Kbps PCM to 32 Kbps ADPCM) at the same time achieving toll quality SNR for telephone-bandwidth speech • to lower bit rate further without reducing speech quality, we need to exploit features of the speech production model, including: – source modeling – spectrum modeling – use of codebook methods for coding efficiency • we also need a new way of comparing performance of different waveform and model-based coding methods – an objective measure, like SNR, isn’t an appropriate measure for model- based coders since they operate on blocks of speech and don’t follow the waveform on a sample-by-sample basis – new subjective measures need to be used that measure user-perceived quality, intelligibility, and robustness to multiple factors 3 Topics Covered in this Lecture • Enhancements for ADPCM Coders – pitch prediction – noise shaping • Analysis-by-Synthesis Speech Coders – multipulse linear prediction coder (MPLPC) – code-excited linear prediction (CELP) • Open-Loop Speech Coders – two-state excitation -

PXC 550 Wireless Headphones

PXC 550 Wireless headphones Instruction Manual 2 | PXC 550 Contents Contents Important safety instructions ...................................................................................2 The PXC 550 Wireless headphones ...........................................................................4 Package includes ..........................................................................................................6 Product overview .........................................................................................................7 Overview of the headphones .................................................................................... 7 Overview of LED indicators ........................................................................................ 9 Overview of buttons and switches ........................................................................10 Overview of gesture controls ..................................................................................11 Overview of CapTune ................................................................................................12 Getting started ......................................................................................................... 14 Charging basics ..........................................................................................................14 Installing CapTune .....................................................................................................16 Pairing the headphones ...........................................................................................17 -

Audio Coding for Digital Broadcasting

Recommendation ITU-R BS.1196-7 (01/2019) Audio coding for digital broadcasting BS Series Broadcasting service (sound) ii Rec. ITU-R BS.1196-7 Foreword The role of the Radiocommunication Sector is to ensure the rational, equitable, efficient and economical use of the radio- frequency spectrum by all radiocommunication services, including satellite services, and carry out studies without limit of frequency range on the basis of which Recommendations are adopted. The regulatory and policy functions of the Radiocommunication Sector are performed by World and Regional Radiocommunication Conferences and Radiocommunication Assemblies supported by Study Groups. Policy on Intellectual Property Right (IPR) ITU-R policy on IPR is described in the Common Patent Policy for ITU-T/ITU-R/ISO/IEC referenced in Resolution ITU-R 1. Forms to be used for the submission of patent statements and licensing declarations by patent holders are available from http://www.itu.int/ITU-R/go/patents/en where the Guidelines for Implementation of the Common Patent Policy for ITU-T/ITU-R/ISO/IEC and the ITU-R patent information database can also be found. Series of ITU-R Recommendations (Also available online at http://www.itu.int/publ/R-REC/en) Series Title BO Satellite delivery BR Recording for production, archival and play-out; film for television BS Broadcasting service (sound) BT Broadcasting service (television) F Fixed service M Mobile, radiodetermination, amateur and related satellite services P Radiowave propagation RA Radio astronomy RS Remote sensing systems S Fixed-satellite service SA Space applications and meteorology SF Frequency sharing and coordination between fixed-satellite and fixed service systems SM Spectrum management SNG Satellite news gathering TF Time signals and frequency standards emissions V Vocabulary and related subjects Note: This ITU-R Recommendation was approved in English under the procedure detailed in Resolution ITU-R 1. -

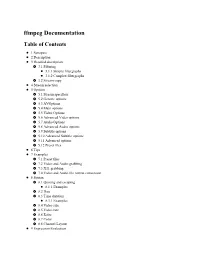

Ffmpeg Documentation Table of Contents

ffmpeg Documentation Table of Contents 1 Synopsis 2 Description 3 Detailed description 3.1 Filtering 3.1.1 Simple filtergraphs 3.1.2 Complex filtergraphs 3.2 Stream copy 4 Stream selection 5 Options 5.1 Stream specifiers 5.2 Generic options 5.3 AVOptions 5.4 Main options 5.5 Video Options 5.6 Advanced Video options 5.7 Audio Options 5.8 Advanced Audio options 5.9 Subtitle options 5.10 Advanced Subtitle options 5.11 Advanced options 5.12 Preset files 6 Tips 7 Examples 7.1 Preset files 7.2 Video and Audio grabbing 7.3 X11 grabbing 7.4 Video and Audio file format conversion 8 Syntax 8.1 Quoting and escaping 8.1.1 Examples 8.2 Date 8.3 Time duration 8.3.1 Examples 8.4 Video size 8.5 Video rate 8.6 Ratio 8.7 Color 8.8 Channel Layout 9 Expression Evaluation 10 OpenCL Options 11 Codec Options 12 Decoders 13 Video Decoders 13.1 rawvideo 13.1.1 Options 14 Audio Decoders 14.1 ac3 14.1.1 AC-3 Decoder Options 14.2 ffwavesynth 14.3 libcelt 14.4 libgsm 14.5 libilbc 14.5.1 Options 14.6 libopencore-amrnb 14.7 libopencore-amrwb 14.8 libopus 15 Subtitles Decoders 15.1 dvdsub 15.1.1 Options 15.2 libzvbi-teletext 15.2.1 Options 16 Encoders 17 Audio Encoders 17.1 aac 17.1.1 Options 17.2 ac3 and ac3_fixed 17.2.1 AC-3 Metadata 17.2.1.1 Metadata Control Options 17.2.1.2 Downmix Levels 17.2.1.3 Audio Production Information 17.2.1.4 Other Metadata Options 17.2.2 Extended Bitstream Information 17.2.2.1 Extended Bitstream Information - Part 1 17.2.2.2 Extended Bitstream Information - Part 2 17.2.3 Other AC-3 Encoding Options 17.2.4 Floating-Point-Only AC-3 Encoding -

Surround Sound Processed by Opus Codec: a Perceptual Quality Assessment

28. Konferenz Elektronische Sprachsignalverarbeitung 2017, Saarbrücken SURROUND SOUND PROCESSED BY OPUS CODEC: APERCEPTUAL QUALITY ASSESSMENT Franziska Trojahn, Martin Meszaros, Michael Maruschke and Oliver Jokisch Hochschule für Telekommunikation Leipzig, Germany [email protected] Abstract: The article describes the first perceptual quality study of 5.1 surround sound that has been processed by the Opus codec standardised by the Internet Engineering Task Force (IETF). All listening sessions with up to five subjects took place in a slightly sound absorbing laboratory – simulating living room conditions. For the assessment we conducted a Degradation Category Rating (DCR) listening- opinion test according to ITU-T P.800 recommendation with stimuli for six channels at total bitrates between 96 kbit/s and 192 kbit/s as well as hidden references. A group of 27 naive listeners compared a total of 20 sound samples. The differences between uncompressed and degraded sound samples were rated on a five-point degradation category scale resulting in Degradation Mean Opinion Score (DMOS). The overall results show that the average quality correlates with the bitrates. The quality diverges for the individual test stimuli depending on the music characteristics. Under most circumstances, a bitrate of 128 kbit/s is sufficient to achieve acceptable quality. 1 Introduction Nowadays, a high number of different speech and audio codecs are implemented in several kinds of multimedia applications; including audio / video entertainment, broadcasting and gaming. In recent years the demand for low delay and high quality audio applications, such as remote real-time jamming and cloud gaming, has been increasing. Therefore, current research objectives do not only include close to natural speech or audio quality, but also the requirements of low bitrates and a minimum latency. -

(A/V Codecs) REDCODE RAW (.R3D) ARRIRAW

What is a Codec? Codec is a portmanteau of either "Compressor-Decompressor" or "Coder-Decoder," which describes a device or program capable of performing transformations on a data stream or signal. Codecs encode a stream or signal for transmission, storage or encryption and decode it for viewing or editing. Codecs are often used in videoconferencing and streaming media solutions. A video codec converts analog video signals from a video camera into digital signals for transmission. It then converts the digital signals back to analog for display. An audio codec converts analog audio signals from a microphone into digital signals for transmission. It then converts the digital signals back to analog for playing. The raw encoded form of audio and video data is often called essence, to distinguish it from the metadata information that together make up the information content of the stream and any "wrapper" data that is then added to aid access to or improve the robustness of the stream. Most codecs are lossy, in order to get a reasonably small file size. There are lossless codecs as well, but for most purposes the almost imperceptible increase in quality is not worth the considerable increase in data size. The main exception is if the data will undergo more processing in the future, in which case the repeated lossy encoding would damage the eventual quality too much. Many multimedia data streams need to contain both audio and video data, and often some form of metadata that permits synchronization of the audio and video. Each of these three streams may be handled by different programs, processes, or hardware; but for the multimedia data stream to be useful in stored or transmitted form, they must be encapsulated together in a container format. -

Speech Compression Using Discrete Wavelet Transform and Discrete Cosine Transform

International Journal of Engineering Research & Technology (IJERT) ISSN: 2278-0181 Vol. 1 Issue 5, July - 2012 Speech Compression Using Discrete Wavelet Transform and Discrete Cosine Transform Smita Vatsa, Dr. O. P. Sahu M. Tech (ECE Student ) Professor Department of Electronicsand Department of Electronics and Communication Engineering Communication Engineering NIT Kurukshetra, India NIT Kurukshetra, India Abstract reproduced with desired level of quality. Main approaches of speech compression used today are Aim of this paper is to explain and implement waveform coding, transform coding and parametric transform based speech compression techniques. coding. Waveform coding attempts to reproduce Transform coding is based on compressing signal input signal waveform at the output. In transform by removing redundancies present in it. Speech coding at the beginning of procedure signal is compression (coding) is a technique to transform transformed into frequency domain, afterwards speech signal into compact format such that speech only dominant spectral features of signal are signal can be transmitted and stored with reduced maintained. In parametric coding signals are bandwidth and storage space respectively represented through a small set of parameters that .Objective of speech compression is to enhance can describe it accurately. Parametric coders transmission and storage capacity. In this paper attempts to produce a signal that sounds like Discrete wavelet transform and Discrete cosine original speechwhether or not time waveform transform -

Polycom Voice

Level 1 Technical – Polycom Voice Level 1 Technical Polycom Voice Contents 1 - Glossary .......................................................................................................................... 2 2 - Polycom Voice Networks ................................................................................................. 3 Polycom UC Software ...................................................................................................... 3 Provisioning ..................................................................................................................... 3 3 - Key Features (Desktop and Conference Phones) ............................................................ 5 OpenSIP Integration ........................................................................................................ 5 Microsoft Integration ........................................................................................................ 5 Lync Qualification ............................................................................................................ 5 Better Together over Ethernet ......................................................................................... 5 BroadSoft UC-One Integration ......................................................................................... 5 Conference Link / Conference Link2 ................................................................................ 6 Polycom Desktop Connector ..........................................................................................