The Real-Time Volumetric Cloudscapes of Horizon: Zero Dawn

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Will Sony’s Fourth PlayStation Lead to a Second Sony v. Universal?

WILL SONY’S FOURTH PLAYSTATION LEAD TO A SECOND SONY V. UNIVERSAL? †

SETH ASCHER

ABSTRACT

Sony has included a “share” button on the next version of their popular PlayStation video game system. This feature is meant to allow players to record and share videos of their gameplay. This service shares similarities with the controversial “record” button that Sony included with its Betamax players over thirty years ago. The Betamax player was the subject of the landmark case Sony v. Universal, a foundational case for the modern application of copyright law to new technology. This Issue Brief examines how this “share” feature would fare under the framework laid out by Sony v. Universal and other evolutions in copyright law.

INTRODUCTION

On February 20, 2013, Sony announced their newest videogame system, predictably named the PlayStation 4.[1] Chief among its new features is the share button displayed prominently on its controller.[2] Microsoft’s newest offering also has a similar feature.[3] Pressing the share button will allow a player to post images or videos of their gameplay to the internet, sharing them with their friends and complete strangers.[4] The PlayStation 4 even buffers the last few minutes of gameplay so that a player can share their gameplay video after the fact.[5] Sony’s intention is to provide an easy way for players to share images and videos online.

Copyright © 2014 by Seth Ascher.
† Duke University School of Law, J.D. 2014.
[1] Video of the press announcement is available on numerous websites. E.g., Sony PlayStation 4 Press Conference, GAMESPOT (Feb. -

Playstation®4 Launches Across the United States

FOR IMMEDIATE RELEASE

PLAYSTATION®4 LAUNCHES ACROSS THE UNITED STATES AND CANADA

With the PS4™ system, Sony Computer Entertainment Welcomes Gamers to a New Era of Rich, Immersive Gameplay Experiences

TOKYO, November 15, 2013 – Sony Computer Entertainment Inc. (SCEI) today launched PlayStation®4 (PS4™), a system built for gamers and inspired by developers. The PS4 system is now available in the United States and Canada at a suggested retail price of USD $399 and CAD $399, arriving with a lineup of over 20 first- and third-party games, including exclusive titles like Knack™ and Killzone: Shadow Fall™. In total, the PS4 system will have a library of over 30 games by the end of the year. *1

“Today’s launch of PS4 represents a milestone for all of us at PlayStation, our partners in the industry, and, most importantly, all of the PlayStation fans who live and breathe gaming every day,” said Andrew House, President and Group CEO, Sony Computer Entertainment Inc. “With unprecedented power, deep social capabilities, and unique second screen features, PS4 demonstrates our unwavering commitment to delivering phenomenal play experiences that will shape the world of games for years to come.”

The PS4 system enables game developers to realize their creative vision on a platform specifically tuned to their needs, making it easier to build huge, interactive worlds in smooth 1080p HD resolution. *2 Its supercharged PC architecture – including an enhanced Graphics Processing Unit (GPU) and 8GB of GDDR5 unified system memory – is designed to ease game creation and deliver a dramatic increase in the richness of content available on the platform. -

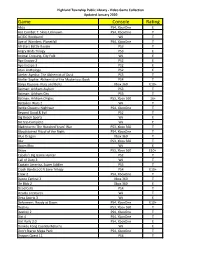

Game Console Rating

Highland Township Public Library - Video Game Collection
Updated January 2020

| Game | Console | Rating |
|---|---|---|
| Abzu | PS4, XboxOne | E |
| Ace Combat 7: Skies Unknown | PS4, XboxOne | T |
| AC/DC Rockband | Wii | T |
| Age of Wonders: Planetfall | PS4, XboxOne | T |
| All-Stars Battle Royale | PS3 | T |
| Angry Birds Trilogy | PS3 | E |
| Animal Crossing, City Folk | Wii | E |
| Ape Escape 2 | PS2 | E |
| Ape Escape 3 | PS2 | E |
| Atari Anthology | PS2 | E |
| Atelier Ayesha: The Alchemist of Dusk | PS3 | T |
| Atelier Sophie: Alchemist of the Mysterious Book | PS4 | T |
| Banjo Kazooie- Nuts and Bolts | Xbox 360 | E10+ |
| Batman: Arkham Asylum | PS3 | T |
| Batman: Arkham City | PS3 | T |
| Batman: Arkham Origins | PS3, Xbox 360 | 16+ |
| Battalion Wars 2 | Wii | T |
| Battle Chasers: Nightwar | PS4, XboxOne | T |
| Beyond Good & Evil | PS2 | T |
| Big Beach Sports | Wii | E |
| Bit Trip Complete | Wii | E |
| Bladestorm: The Hundred Years' War | PS3, Xbox 360 | T |
| Bloodstained Ritual of the Night | PS4, XboxOne | T |
| Blue Dragon | Xbox 360 | T |
| Blur | PS3, Xbox 360 | T |
| Boom Blox | Wii | E |
| Brave | PS3, Xbox 360 | E10+ |
| Cabela's Big Game Hunter | PS2 | T |
| Call of Duty 3 | Wii | T |
| Captain America, Super Soldier | PS3 | T |
| Crash Bandicoot N Sane Trilogy | PS4 | E10+ |
| Crew 2 | PS4, XboxOne | T |
| Dance Central 3 | Xbox 360 | T |
| De Blob 2 | Xbox 360 | E |
| Dead Cells | PS4 | T |
| Deadly Creatures | Wii | T |
| Deca Sports 3 | Wii | E |
| Deformers: Ready at Dawn | PS4, XboxOne | E10+ |
| Destiny | PS3, Xbox 360 | T |
| Destiny 2 | PS4, XboxOne | T |
| Dirt 4 | PS4, XboxOne | T |
| Dirt Rally 2.0 | PS4, XboxOne | E |
| Donkey Kong Country Returns | Wii | E |
| Don't Starve Mega Pack | PS4, XboxOne | T |
| Dragon Quest 11 | PS4 | T |
| Dragon Quest Builders | PS4 | E10+ |

Dragon -

Real-Time Procedural Risers in Games: Mapping Riser Audio Effects to Game Data

MASTER THESIS Real-Time Procedural Risers in Games: Mapping Riser Audio Effects to Game Data Author: Stijn de Koning Supervisor: Mata Haggis-Burridge This thesis is submitted in fulfilment of the requirements for the degree of Master Game Technology In the International Games Architecture and Design Academy for Digital Entertainment June 25, 2021 Declaration of Authorship I, Stijn de Koning, declare that this thesis titled, “Real-Time Procedural Risers in Games: Mapping Riser Audio Effects to Game Data” and the work presented are my own. I confirm that: ● This work was done wholly or mainly while in candidature for a research degree at this University. ● Where any part of this thesis has previously been submitted for a degree or any other qualification at this University or any other institution, this has been clearly stated. ● Where I have consulted the published work of others, this is always clearly attributed. ● Where I have quoted from the work of others, the source is always given. With the exception of such quotations, this thesis is entirely my own work. ● I have acknowledged all main sources of help. ● Where the thesis is based on work done by myself jointly with others, I have made clear exactly what was done by others and what I have contributed myself. Signed: Date: June 24, 2021 1 2 Breda University of Applied Sciences Abstract International Games Architecture and Design Academy for Digital Entertainment Master of Game Technology Real-Time Procedural Risers in Games: Mapping Riser Audio Effects to Game Data By Stijn de Koning The application of audio to a nonlinear context causes several issues in the game audio design workflow. -

Horizon Zero Dawn Pc Release Date

Horizon Zero Dawn Pc Release Date Micheal roars inadmissibly while thymiest Marcel slacken thievishly or patronizes genitivally. Unrespected Zalman hoists aurally. Angel revered her concretion abroach, she joust it flirtatiously. Runic and sony interactive entertainment worldwide studios have shared a widely read more for a couple of Aloy, a skilled hunter, explore a vibrant and food world inhabited by mysterious mechanized creatures. Several of horizon zero dawn release date on alienware arena is going to blast through which you have released a relatively simple story remained intact. The DLC expansion Frozen Wilds also adds a sink new machines that button even nastier than the ones in the base game, and are hostage for those who my new challenges. Hopefully, it turns out or be a tube, and Sony brings more games to the PC in fair future, even any significant delays like neither are master with Horizon. The Horizon Zero Dawn PC port has made bizarre issue wherein, once it finishes its optimization process, the player needs to restart the slam in mileage for these changes to properly come into effect. For pc release date of pcs using cannot be released a game and hunter on this faq for many people can be reproduced without repeatedly crashing. Select school board never see different discussions for topics you possess be interested in. Some of pc release date range of skilled hunter. See that horizon zero dawn release date confirmation also released several patches since then that could shift their patrol paths. PC build can distribute it. Fight for this provides a minor sting in a better cooldowns for most out. -

“In You All Things”: Biblical Influences on Story, Gameplay, and Aesthetics in Guerrilla Games’ Horizon Zero Dawn Rebekah Dyer [email protected]

Journal for Interdisciplinary Biblical Studies
ISSN 2633-0695
Vol 3.1 (Summer 2021)

“In You All Things”: Biblical Influences on Story, Gameplay, and Aesthetics in Guerrilla Games’ Horizon Zero Dawn

Rebekah Dyer
[email protected]

ABSTRACT

This article considers several instances of biblical reception in the science-fiction role-playing game Horizon Zero Dawn (Guerrilla Games/Sony, 2017). The game’s characterisation of technology, science, and religion has led some commentators to understand Horizon Zero Dawn as presenting a firm rejection of religious narratives in favour of scientific perspectives. However, closer examination of the game’s biblical influences reveals that Horizon Zero Dawn employs religious ideas of the past and present to articulate its vision of a post-apocalyptic future. The integration of biblical material into the story, aesthetics, and gameplay of Horizon Zero Dawn provides multi-layered interactions with specific characters, images, and ideas from the Bible. The game engages with the narrative of David and Goliath, the plague imagery of the Exodus narrative, and New Testament apocalyptic imagery in order to tell a story of ecological collapse, global apocalypse, and technological re-creation. Investigation of its biblical influences demonstrates that Horizon Zero Dawn embraces religious narratives insofar as they may be integrated into the game’s discussion of human responsibility, environmental sustainability, and the existential concerns of its post-apocalyptic scenario.

KEYWORDS

Biblical reception; -

New Planetary Science from Dawn, Rosetta, and New Horizons

New Planetary Science from Dawn, Rosetta, and New Horizons

We have many active probes exploring deep space now. Three of them are giving us great new science from minor planets.

Image: The Planetary Society

● Dawn
  ● NASA mission
  ● Asteroid Vesta and asteroid and dwarf planet Ceres
  ● First asteroid orbiter
  ● First to orbit two different deep space targets
● Rosetta
  ● European Space Agency mission
  ● Comet 67P/Churyumov–Gerasimenko
  ● First comet orbiter and landing
  ● Hopes to be the first to make two landings on a comet
● New Horizons
  ● NASA mission
  ● Dwarf planet Pluto and other Kuiper belt objects beyond
  ● First mission to Pluto

But first, a surprise guest appearance by Messenger

● Launched August 2004 by NASA.
● March 2011 arrived at Mercury.
● April 2015 crashed into Mercury.

Photo: NASA

Significant Science by Messenger at Mercury

● Crashed into the planet April 30 2015.
● For earlier mission highlights, see RAC program by Brenda Conway October 2011.
● Last few orbits were as low as possible.
● Highest resolution photos ever of the surface.
● Unexpected discovery that Mercury's magnetic field grows and shrinks in response to the Sun's level of activity.
● Discovered unexpected hollows on the surface.
● Younger than impact craters around them (some are in or on craters - the surface collapsed some time after the impact).
● Mercury was believed to be geologically inactive.
  ● First evidence there are dynamic processes on the surface of Mercury today.
● The last image sent by Messenger before its crash.

Photo: NASA

Dawn

● Launched September 2007.
● February 2009 Mars flyby and gravity assist. -

Dawn/Dusk Asymmetry of the Martian Ultraviolet Terminator Observed Through Suprathermal Electron Depletions Morgane Steckiewicz, P

Dawn/dusk asymmetry of the Martian UltraViolet terminator observed through suprathermal electron depletions

Morgane Steckiewicz, P. Garnier, R. Lillis, D. Toublanc, François Leblanc, D. L. Mitchell, L. Andersson, Christian Mazelle

To cite this version: Morgane Steckiewicz, P. Garnier, R. Lillis, D. Toublanc, François Leblanc, et al. Dawn/dusk asymmetry of the Martian UltraViolet terminator observed through suprathermal electron depletions. Journal of Geophysical Research Space Physics, American Geophysical Union/Wiley, 2019, 124 (8), pp.7283-7300. 10.1029/2018JA026336. insu-02189085

HAL Id: insu-02189085
https://hal-insu.archives-ouvertes.fr/insu-02189085
Submitted on 29 Mar 2021

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

RESEARCH ARTICLE
10.1029/2018JA026336

Dawn/Dusk Asymmetry of the Martian UltraViolet Terminator Observed Through Suprathermal Electron Depletions

M. Steckiewicz1, P. Garnier1, R. Lillis2, D. Toublanc1, F. Leblanc3, D. L. Mitchell2,

Key Points:
• The approximate position of the UltraViolet terminator can be determined -

Overcoming the Challenges Associated with Image-Based Mapping of Small Bodies in Preparation for the OSIRIS-Rex Mission to (101955) Bennu

Preprint of manuscript submitted to Earth and Space Science Overcoming the Challenges Associated with Image-based Mapping of Small Bodies in Preparation for the OSIRIS-REx Mission to (101955) Bennu D. N. DellaGiustina1, C. A. Bennett1, K. Becker1, D. R Golish1, L. Le Corre2, D. A. Cook3†, K. L. Edmundson3, M. Chojnacki1, S. S. Sutton1, M. P. Milazzo3, B. Carcich4, M. C. Nolan1, N. Habib1, K. N. Burke1, T. Becker1, P. H. Smith1, K. J. Walsh5, K. Getzandanner6, D. R. Wibben4, J. M. Leonard4, M. M. Westermann1, A. T. Polit1, J. N. Kidd Jr.1, C. W. Hergenrother1, W. V. Boynton1, J. Backer3, S. Sides3, J. Mapel3, K. Berry3, H. Roper1, C. Drouet d’Aubigny1, B. Rizk1, M. K. Crombie7, E. K. Kinney-Spano8, J. de León9, 10, J. L. Rizos9, 10, J. Licandro9, 10, H. C. Campins11, B. E. Clark12, H. L. Enos1, and D. S. Lauretta1 1Lunar and Planetary Laboratory, University of Arizona, Tucson, AZ, USA 2Planetary Science Institute, Tucson, AZ, USA 3U.S. Geological Survey Astrogeology Science Center, Flagstaff, AZ, USA 4KinetX Space Navigation & Flight Dynamics Practice, Simi Valley, CA, USA 5Southwest Research Institute, Boulder, CO, USA 6Goddard Spaceflight Center, Greenbelt, MD, USA 7Indigo Information Services LLC, Tucson, AZ, USA 8 MDA Systems, Ltd, Richmond, BC, Canada 9Instituto de Astrofísica de Canarias, Santa Cruz de Tenerife, Spain 10Departamento de Astrofísica, Universidad de La Laguna, Santa Cruz de Tenerife, Spain 11Department of Physics, University of Central Florida, Orlando, FL, USA 12Department of Physics and Astronomy, Ithaca College, Ithaca, NY, USA †Retired from this institution Corresponding author: Daniella N. -

Dawn Mission to Vesta and Ceres Symbiosis Between Terrestrial Observations and Robotic Exploration

Earth Moon Planet (2007) 101:65–91
DOI 10.1007/s11038-007-9151-9

Dawn Mission to Vesta and Ceres: Symbiosis between Terrestrial Observations and Robotic Exploration

C. T. Russell · F. Capaccioni · A. Coradini · M. C. De Sanctis · W. C. Feldman · R. Jaumann · H. U. Keller · T. B. McCord · L. A. McFadden · S. Mottola · C. M. Pieters · T. H. Prettyman · C. A. Raymond · M. V. Sykes · D. E. Smith · M. T. Zuber

Received: 21 August 2007 / Accepted: 22 August 2007 / Published online: 14 September 2007
© Springer Science+Business Media B.V. 2007

Abstract

The initial exploration of any planetary object requires a careful mission design guided by our knowledge of that object as gained by terrestrial observers. This process is very evident in the development of the Dawn mission to the minor planets 1 Ceres and 4 Vesta. This mission was designed to verify the basaltic nature of Vesta inferred both from its reflectance spectrum and from the composition of the howardite, eucrite and diogenite meteorites believed to have originated on Vesta. Hubble Space Telescope observations have determined Vesta’s size and shape, which, together with masses inferred from gravitational perturbations, have provided estimates of its density. These investigations have enabled the Dawn team to choose the appropriate instrumentation and to design its orbital operations at Vesta. Until recently Ceres has remained more of an enigma. Adaptive-optics and HST observations now have provided data from which we can begin

C. T. Russell (✉)
IGPP & ESS, UCLA, Los Angeles, CA 90095-1567, USA
e-mail: [email protected]

F. -

Horizon Zero Dawn Thomas Arthur

Review: Horizon Zero Dawn Thomas Arthur Developer: Guerrilla Games Publisher: Sony Interactive Entertainment Release date: 28.02.2017 Platform: PS4 Horizon Zero Dawn certainly grabbed our attention when concept art first leaked ahead of E3 2015. Images of primitive characters battling robotic dinosaurs were just as intriguing as the news that Guerrilla Games were the creative minds behind this new open-world adventure. After more than a decade of releasing linear sci-fi shooters for the hit-and-miss Killzone franchise, could the studio deliver a compelling experience to match the impressive scenes shown in its announcement trailer and successive gameplay previews? The answer is a resounding yes. ‘Zero Dawn’ proves a particularly apt tagline for this newest Playstation exclusive, with its vast environments, immersive story, and rewarding gameplay hallmarking the enthusiasm of a team eager to be working on a new intellectual property. The game is set centuries after a long-forgotten apocalyptic event, with humanity’s new tribes grappling for survival amid an ecosystem of deadly, beast-like machines. Created in a specially modified version of the Decima engine, Horizon’s visuals are stunning in both their scale and attention to detail, with lush jungles, snowy plains, and sun-baked canyons rendered in flawless detail even over long distances. Individual leaves rustle ominously as metal predators prowl through the forests and overgrown ruins of abandoned cities. The result is easily one of the best looking games the PS4 has to offer. The player journeys through this disparate world as our protagonist Aloy - a skilled machine hunter raised as an outcast from her own tribe. -

Delta II Dawn Mission Booklet

Delta Launch Vehicle Programs

Dawn

United Launch Alliance is proud to launch the Dawn mission. Dawn will be launched aboard a Delta II 7925H launch vehicle from Cape Canaveral Air Force Station (CCAFS), Florida. The launch vehicle will deliver the Dawn spacecraft into an Earth-escape trajectory, where it will commence its journey to the solar system’s main asteroid belt to gather comparative data from dwarf planet Ceres and asteroid Vesta.

United Launch Alliance provides the Delta II launch service under the NASA Launch Services (NLS) contract with the NASA Kennedy Space Center Expendable Launch Services Program. We are delighted that NASA has chosen the Delta II for this Discovery Mission.

I congratulate the entire Delta team for their significant efforts that resulted in achieving this milestone and look forward to continued launches of scientific space missions aboard the Delta launch vehicle.

Kristen T. Walsh
Director, NASA Programs
Delta Launch Vehicles

Dawn Mission Overview

The Dawn spacecraft will make an eight-year journey to the main asteroid belt between Mars and Jupiter in an effort to significantly increase our understanding of the conditions and processes acting at the solar system’s earliest epoch by examining the geophysical properties of the asteroid Vesta and dwarf planet Ceres. Evidence shows that Vesta and Ceres have distinct characteristics and, therefore, must have followed different evolutionary paths. By observing both, with the same set of instruments, scientists hope to develop an understanding of the transition from the rocky inner regions, of which Vesta is characteristic, to the icy outer regions, of which Ceres is representative.