An Object's Smell in the Multisensory Brain

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Billboard.com “Finesse” Hits a New High After Cardi B Hopped on the Track’s Remix, Which Arrived on Jan

GRAMMYS 2018: ‘You don’t get to un-have this moment.’ LORDE on a historic Grammys race, her album of the year nod and the #MeToo movement. PLUS Rapsody’s underground takeover and critics’ predictions for the Big Four categories. January 20, 2018 | billboard.com

“Finesse” hits a new high after Cardi B hopped on the track’s remix, which arrived on Jan. 4. Photo: courtesy of Atlantic Records.

Bruno Mars And Cardi B ‘Finesse’ Their Way Up

BRUNO MARS AND CARDI B bring new jack swing back to the top 10 of the ... achieve the career-opening feat, and the second male; Lionel Richie landed at least three from each of his ...

The Hot 100 (chart excerpt; the position columns are not recoverable from the extraction). Columns: title, artist, producer (songwriter), imprint/promotion label.

Perfect, Ed Sheeran (#1, 6 weeks). W.HICKS, E.SHEERAN (E.C.SHEERAN). ATLANTIC
Havana, Camila Cabello Feat. Young Thug. FRANK DUKES (K.C.CABELLO, J.L.WILLIAMS, A.FEENY, B.T.HAZZARD, A.TAMPOSI, B.LEE, A.WOTMAN, P.L.WILLIAMS, L.BELL, R.L.AYALA RODRIGUEZ, K.GUNESBERK). SYCO/EPIC
Finesse, Bruno Mars & Cardi B. SHAMPOO PRESS & CURL, STEREOTYPES (BRUNO MARS, P.M.LAWRENCE II, C.B.BROWN, J.E.FAUNTLEROY II, J.YIP, R.ROMULUS, J.REEVES, R.C.MCCULLOUGH II). ATLANTIC
Rockstar, Post Malone Feat.

... sales data as compiled by Nielsen Music and streaming activity data by online music sources tracked by Nielsen Music. ... the first time. See Charts Legend on billboard.com/biz for complete rules and explanations. © 2018, Prometheus Global Media, LLC and Nielsen Music, Inc. All rights reserved.

Jennifer Hudson SEPTEMBER 3 0 OCTOBER OCTOBER 7 OCTOBER

... Chemical" and "No News Is Bad News." "It's a nice marriage of where I've come from and where I've gotten to. For me, it's the track that ties all the ends together," he says of the latter.

Jennifer Hudson

"I think people will be pleasantly surprised, because it shows a side of my work that no one has heard before," Jennifer Hudson says of her long-in-the-works debut. First single "Spotlight," penned by Ne-Yo, is top 40 on Billboard's Hot R&B/Hip-Hop Songs chart after seven weeks. While a follow-up hasn't been chosen, some tracks in contention are the Timbaland-produced "Pocketbook" featuring Ludacris and "Can't Stop the Rain," also written by Ne-Yo. Additional contributors to the album include Robin Thicke, the Underdogs, Diane Warren, Christopher "Tricky" Stewart and Jack Splash. R. Kelly and Akon are expected to contribute as well.

Xtreme: TBA (Universal)

The Bronx urban bachata duo's breakout, "Haciendo Historia," has sold 125,000 copies in the United States and Puerto Rico, according to Nielsen SoundScan, and spawned hits "Shorty Shorty" and "No Me Digas Que No." Steve Styles and Danny D. (the former won an ASCAP Latino Award this year for penning "Shorty Shorty") are producing and writing their follow-up with producers Sergio George, George Zamora and manager Ben de Jesus. It's "still within the urban bachata realm but a little more traditional," de Jesus says. Referencing everything from salsa to classic Dominican bachata to hip-hop and Sean Kingston, "the fusion is going even deeper between modern and retro," he says.

Summer I Give G Ager Johnny Wright Says

"The key to any success is to be creative and to take chances," her recently appointed manager Johnny Wright says. "This is how artists stay on top: by challenging themselves and increasing their fan base, and by bringing something unexpected to you." For "Fantasy Ride," Ciara is pushing the envelope creatively by having the set feature three distinct musical styles. As of now, the three sounds will tentatively be called "Groove City," full of sensual songs; "Crunk Town," with Atlanta-based crunk music similar to her introductory single, "Goodies"; and "Kingdom of Dance," with uptempo, house-leaning, freestyle-tinged cuts.

... Yo-assisted "Super Natural." "The music flows together. It's about the journey and having fun through music. I can give you a basic album in a 12-song layout, but I wanted to be more creative," Ciara says. The format presents challenges and opportunities alike for marketing, says Lisa Cambridge, VP of marketing at Zomba Label Group. "The dance aspect of this album has made international want to get their hands on it, especially the U.K.," Cambridge says. "She has [had] some success overseas, but this is her real breakthrough. We're really hoping to set up opportunities to spend time in the territories and ...

It's a scalding summer day in Los Angeles as some of the music industry's most distinguished urban artists made their way to the Shrine Auditorium for the 2008 BET Awards. This year's bill featured all the heavy-hitters: Usher, Lil Wayne, Alicia Keys and T Pain assisted by DJ Khaled, Ludacris and Rick Ross.

Missy Elliott Supa Dupa Fly Torrent Download Supa Dupa Fly

Supa Dupa Fly. Arguably the most influential album ever released by a female hip-hop artist, Missy "Misdemeanor" Elliott's debut album, Supa Dupa Fly, is a boundary-shattering postmodern masterpiece. It had a tremendous impact on hip-hop, and an even bigger one on R&B, as its futuristic, nearly experimental style became the de facto sound of urban radio at the close of the millennium. A substantial share of the credit has to go to producer Timbaland, whose lean, digital grooves are packed with unpredictable arrangements and stuttering rhythms that often resemble slowed-down drum'n'bass breakbeats. The results are not only unique, they're nothing short of revolutionary, making Timbaland a hip name to drop in electronica circles as well. For her part, Elliott impresses with her versatility -- she's a singer, a rapper, and an equal songwriting partner, and it's clear from the album's accompanying videos that the space-age aesthetic of the music doesn't just belong to her producer. She's no technical master on the mic; her raps are fairly simple, delivered in the slow purr of a heavy-lidded stoner. Yet they're also full of hilariously surreal free associations that fit the off-kilter sensibility of the music to a tee. Actually, Elliott sings more on Supa Dupa Fly than she does on her subsequent albums, making it her most R&B-oriented effort; she's more unique as a rapper than she is as a singer, but she has a smooth voice and harmonizes well.

Designing for the Ears As Well As for the Eyes

Inspicio architecture

26 Sushi & Tapas restaurant, Surfside, Florida, 2015. Photo: WSDG

Designing for the Ears as Well as for the Eyes
By Howard Sherman

Morris J. Kaplan is a very hands-on businessman. A successful real estate developer and innovative restaurateur/entrepreneur, his career track is marked by imaginative ventures. Last year, Kaplan and his wife Sandra began laying the groundwork for a new Miami-area restaurant. A longtime fan of master chef Fernando Chang’s culinary talents, Kaplan was confident there would be a strong demand for Chef Chang’s specialty: Kosher sushi and Peruvian dishes. Kaplan invested considerable time collaborating on the bill of fare and the interior design of his new restaurant. In addition to the menu, one of his primary concerns was acoustics. An avid “foodie,” Kaplan had long avoided dining in restaurants with noise levels so high they intruded on his guests’ ability to enjoy a simple conversation along with their meal. Kaplan made a promise to himself. Diners at his new restaurant would be able to discuss their food (and other topics) without having to shout at the top of their lungs.

Coincidentally, as he formulated his plans for what was to become “26 Sushi & Tapas” in Surfside, Florida, Kaplan was also involved in building two new Miami-area synagogues. During the course of a series of visits to those construction sites, he became aware of an unusually quiet ambience. Building sites are typically noisy environments and neither of these work sites would ever be mistaken for a library, but Kaplan discovered a sound clarity that was unusual enough to spark an investigation.

Download Khalid Album 2017 Download Khalid Album 2017

DJ Khaled - Grateful. Artist: DJ Khaled. Album: Grateful. Year: 2017. Genre: Hip Hop, R&B, Rap.

Madonna Miles Away Mp3, Flac, Wma

Madonna Miles Away mp3, flac, wma

Genre: Electronic / Pop
Album: Miles Away
Released: 2008
Style: House, Europop
MP3 version RAR size: 1850 mb
FLAC version RAR size: 1967 mb
WMA version RAR size: 1972 mb
Rating: 4.2
Votes: 361
Other Formats: APE DMF MMF DXD MPC MP2 XM

Tracklist

1. Miles Away (Peter Rauhofer Mix). Remix – Peter Rauhofer
2. Miles Away (Marcos "Demo" Castellon Mix). Remix – Marcos "Demo" Castellon*
3. Miles Away (Kaskade Remix). Remix – Kaskade
4. Miles Away (Headcleanr). Remix – Headcleanr
5. Miles Away (DJ Johnny Vicious). Remix – DJ Johnny Vicious*
6. Miles Away (Morgan Page). Remix – Morgan Page
7. Miles Away (Stuart Price!). Remix – Stuart Price!*
8. Miles Away (Radio Edit)

Credits

Producer – Danja, Justin Timberlake, Madonna, Timbaland
Written-By – Justin Timberlake, Madonna, Nate Hills, Tim Mosley*

Notes

Released in a cardboard sleeve.

Barcode and Other Identifiers

Barcode: 9362498536

Other versions

| Category | Artist | Title (Format) | Label | Category | Country | Year |
|---|---|---|---|---|---|---|
| 9362-49800-8, 9362498008 | Madonna | Miles Away (CD, Maxi) | Warner Bros. Records | 9362-49800-8, 9362498008 | Europe | 2008 |
| none | Madonna | Miles Away (Radio Edit) (CDr, Single, Promo) | Warner Bros. Records | none | US | 2009 |
| none | Madonna | Miles Away (CDr, Single, Promo) | Warner Bros. Records | none | Denmark | 2008 |
| 9362-49807-0 | Madonna | Miles Away (CD, Single) | Warner Bros. Records | 9362-49807-0 | Europe | 2008 |
| none | Madonna | Miles Away (CDr, Single, Promo) | Warner Bros. Records | none | UK | 2008 |

Related Music albums to Miles Away by Madonna

Miles Davis - Workin' with the Miles Davis Quintet
Madonna - Miles Away Vol. 2
Miles Davis - Miles Davis At Plugged Nickel, Chicago Vol.2
Seven Miles - Seven Miles
Miles Davis - Miles Davis - Miles Davis: The Complete 1963-64 Columbia Recordings
Robert Miles - Paths
Madonna Feat.

Naughty and Nice List, 2020

North Pole Government
NAUGHTY & NICE LIST 2020

This is the Secretary’s Naughty and Nice list to the Minister for Christmas Affairs for the financial year ended 30 June 2020.

© Copyright North Pole Government 2020 | christmasaffairs.com | North Pole Government, Department of Christmas Affairs | Naughty and Nice List, 2020

Contents

About this list
Official list (in alphabetical order)
Disputes
Rehabilitation

About this list

This list relates to the people of the world’s performance for 2020 against the measures outlined in the Christmas Behaviour Statements. In addition to providing an alphabetised list of all naughty and nice people for the 2020 financial year, this document contains details of how to rectify a naughty reputation.

Official list in alphabetical order

A.J. Nice
Aaden Nice
Aalani Naughty
Aalia Naughty
Aalis Nice
Abbott Nice
Abby Nice
Abbygail Nice
Abbygale Nice
Abdiel

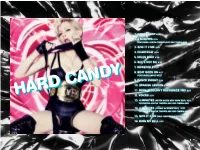

1. Candy Shop 4:16 2. 4 Minutes 4:04 3. Give It 2 Me 4:48 4

1. CANDY SHOP 4:16
2. 4 MINUTES 4:04 FEATURING JUSTIN TIMBERLAKE AND TIMBALAND
3. GIVE IT 2 ME 4:48
4. HEARTBEAT 4:04
5. MILES AWAY 4:49
6. SHE’S NOT ME 6:05
7. INCREDIBLE 6:20
8. BEAT GOES ON 4:27 FEATURING KANYE WEST
9. DANCE 2NIGHT 5:03
10. SPANISH LESSON 3:38
11. DEVIL WOULDN’T RECOGNIZE YOU 5:09
12. VOICES 3:39
13. 4 MINUTES (PETER SAVES NEW YORK EDIT) 4:56 FEATURING JUSTIN TIMBERLAKE AND TIMBALAND
14. 4 MINUTES (JUNKIE XL REMIX EDIT) 4:39 FEATURING JUSTIN TIMBERLAKE AND TIMBALAND
15. GIVE IT 2 ME (PAUL OAKENFOLD EDIT) 4:59
16. RING MY BELL 3:53

LOLLIPOP
TURKISH DELIGHT
MY SUGAR IS RAW
STICKY & SWEET
CANDY GALORE
PLENTY TO EAT

1. CANDY SHOP (4:16)
(P. WILLIAMS, MADONNA)
WATERS OF NAZARETH PUBLISHING/EMI-BLACKWOOD MUSIC, INC. (BMI)/WEBO GIRL PUBLISHING, INC. (ASCAP), ALL RIGHTS O/B/O WEBO GIRL PUBLISHING, INC. ADMIN. BY WB MUSIC CORP. (ASCAP)
PRODUCED BY THE NEPTUNES
CO-PRODUCED BY MADONNA

3. GIVE IT 2 ME (4:48)
(P. WILLIAMS, MADONNA)
WATERS OF NAZARETH PUBLISHING/EMI-BLACKWOOD MUSIC, INC. (BMI)/WEBO GIRL PUBLISHING, INC. (ASCAP), ALL RIGHTS O/B/O WEBO GIRL PUBLISHING, INC. ADMIN. BY WB MUSIC CORP. (ASCAP)
PRODUCED BY THE NEPTUNES
CO-PRODUCED BY MADONNA

... CASTELLON FOR STORM-CIRCLE ENT.
PROTOOLS EDITING BY RON TAYLOR
ASSISTANT ENGINEERS: JULIAN VASQUEZ, VADIM CHISLOV, GRAHAM ARCHER, FAREED SALAMAH
SCE ASST.: JOSE AND JOSEPH CASTELLON
STUDIOS: SARM STUDIOS UK AND THE HIT FACTORY CRITERIA MIAMI
BG VOCALS BY JUSTIN TIMBERLAKE
GUITARS BY DAN WARNER AND JUSTIN

January 14, 2020: Papa's Got a Brand-New Bag: the Current State of Hip

January 14th, 2020
Papa’s Got a Brand-New Bag: The Current State of Hip Hop

NEWSLETTER
An Entertainment Industry Organization

President’s Corner

Dear Friends and Members,

Happy New Year to you all! We're looking forward to another exciting year of CCC panels and networking events. As we start off 2020, I cordially invite you to our next panel discussion on January 14th: “Papa’s Got A Brand-New Bag: The Current State of Hip Hop” featuring major movers-and-shakers from the performing and business sides of the genre. Hip Hop continues to dominate the charts and has created many of today’s music superstars. Join our moderator, James Leach, and this panel of industry experts to learn more about this ever-evolving genre.

Please note, the reservation deadline has been EXTENDED to Tuesday, January 14th at 12pm! Please register in advance below. I look forward to seeing you then.

Sincerely,
David Quan, MBA
California Copyright Conference President 2019-2020

January Meeting: Tuesday, January 14th, 2020, 6:15 PM - 9:00 PM
"PAPA'S GOT A BRAND-NEW BAG: THE CURRENT STATE OF HIP HOP"

This panel takes an in-depth look at the Hip Hop genre, delving into its humble beginnings as a genre fighting for chart recognition, then on to its legal battles, and finally to its overtaking rock as the most popular genre among music fans.

I Think She Knows

LoveStoned is a song recorded by American singer and songwriter Justin Timberlake for his "LoveStoned / I Think She Knows" was written by Justin Timberlake, Timbaland, and Nate "Danja" Hills as a sexual love song. In an interview with. Sep 8, LoveStoned / I Think She Knows Interlude Lyrics: Hey! / She's freaky and she knows it / She's freaky and I like it / Listen / She grabs the yellow. I Think She Knows Lyrics: Those flashing lights come from everywhere / The way they hit I just stop and stare / She's got me lovestoned / I think I'm lovestoned. Lyrics to "Lovestoned / I Think She Knows Interlude" song by Justin Timberlake: She's freaky and she knows it She's freaky and I like it Listen She grabs the. Discover releases, reviews, track listings, recommendations, and more about Justin Timberlake - Lovestoned / I Think She Knows at Discogs. Complete your. Find a Justin Timberlake - LoveStoned / I Think She Knows (Push 24 Extended Mix) first pressing or reissue. Complete your Justin Timberlake collection. [Intro] Em D C G D Em Em D C G D Em Those flashing lights come from everywhere. Em D C G D Em The way they hit her, I just stop and stare. Em D C She's. Justin Timberlake - Lovestoned/I Think She Knows by Justin Timberlake - www.doorway.ru Music. LoveStoned/I Think She Knows Songtext von Justin Timberlake mit Lyrics, deutscher Übersetzung, Musik-Videos und Liedtexten kostenlos auf Songtexte.com. Justin Timberlake: LoveStoned/I Think She Knows (). Not Rated | 5min | Short, Music | 13 June (USA) · Justin Timberlake: LoveStoned/I Think She.

Justin Timberlake Futuresex Lovesounds Rar Download

FutureSex/LoveSounds Deluxe Edition | Justin Timberlake to stream in hi-fi, or to download in True CD Quality on renuzap.podarokideal.ru Justin Timberlake, Associated Performer, Composer, Lyricist, Main Artist, Producer, Associated Performer, Composer, Lyricist, Producer. /9/12 · FutureSex / LoveSounds (Deluxe Version) Justin Timberlake Pop · Preview SONG TIME FutureSex / LoveSound 1 PREVIEW SexyBack (feat. Timbaland) Justin Timberlake & Timbaland 2 PREVIEW Released on: September 12, Justin Timberlake - Futuresex/Lovesounds () {Jive/Zomba} EAC Rip | FLAC with CUE and log | scans | mb MP3 CBR kbps | RAR | mb Genre: R&B, soul, pop, dance, hip-hop, rap Futuresex/Lovesounds is the CD by former boy band. Simple Way to Listen / Download FutureSex/LoveSounds by Justin Timberlake in mp3, FLAC, ogg, zip album and other supported formats. How to Listen / Download FutureSex/LoveSounds: Scroll down to comments - Click the link. - Get your file - Wish you have. Listen to FutureSex/LoveSounds songs Online on JioSaavn. English music album by Justin Timberlake 1. FutureSex / LoveSound - Justin Timberlake, 2. SexyBack - Justin Timberlake, Timbaland, 3. Sexy Ladies - Justin Timberlake, 4. Medley: Let Me Talk to You. /1/29 · (Another Song) ALL OVER AGAIN - Justin Timberlake [FUTURESEX_⁄LOVESOUNDS].mp3 download M Justin Timberlake - Cry Me A River + download renuzap.podarokideal.ru3 download M Justin Timberlake - Nothin' Else + download renuzap.podarokideal.ru3. Justin Timberlake discography download: he is a singer and songwriter of American origin in the pop genre, beginning his musical career in the year and continuing to the present.