Understanding Challenges and Facilitators in Investigating and Prosecuting Child Sexual Abuse Materials in the United States

Total pages: 16

File type: PDF, Size: 1020Kb

Recommended publications

Microsoft's Yu Zheng: Big Data Solves Big City Problems; A Smarter Home; Weibo Highlights

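Microsoft Research Asia, January 2015, Issue 33.

Contents: Forging a “New” Microsoft in the Spirit of “Openness” and “Geek Innovation”; The 16th “Computing in the 21st Century” Conference Successfully Held; Microsoft and Tsinghua University Jointly Host the 2014 Microsoft Asia-Pacific Education Summit; Do You Remember Xiaoice, the Chatbot That Became Famous Overnight?; Peter Lee: Riding the Roller Coaster with Microsoft; An Interview with Computer Scientist Jeannette Wing; WWT: A Digital Universe, a Virtual Starry Sky; Microsoft's Yu Zheng: Big Data Solves Big City Problems; A Smarter Home; Weibo Highlights: Selected Posts from the Official @微软亚洲研究院 Weibo Account; Making the Virtual World More Real; HEVC: What You Need to Know in the 4K Era; How Were Xiaoice's Three Signature Skills Forged?; Growing Up with Research: The Happy Internship Life of Shanghai Jiao Tong University ACM Class Students at Microsoft Research Asia; Young Hearts and a Dream Drawing Nearer: The Microsoft-Stanford Product Design Innovation Course ME310; How to Write a Good Résumé and Land the Summer Internship You Want; Microsoft Researcher Eric Horvitz Explains the “One Hundred Year Study on Artificial Intelligence”.

Forging a “New” Microsoft in the Spirit of “Openness” and “Geek Innovation”

Firecrackers bid farewell to the old year, and we welcome the Year of the Sheep with joy. In the blink of an eye, this year is about to gallop away to the clatter of horses' hooves. At this moment of ringing out the old and ringing in the new, we cherish the hope that technology will change the future and, together with all the technology enthusiasts who strive to advance the internet and human scientific progress, we ring in the new year. Let us wave goodbye to 2014 and look forward together to a new year full of hope!

The departing year was an extraordinary one. The internet was turbulent, with one player taking the stage as soon as another left it, even more eventful than in years past: wearable devices and smart homes became hotly contested high ground; artificial intelligence came gradually within reach while stirring constant controversy; wave after wave of startups rose, and outstanding new companies and young people kept emerging. Microsoft, too, changed dramatically under everyone's gaze: its third CEO, Satya Nadella, took office, set a company strategy centered on mobile and cloud, embraced the new era of the mobile internet with an open stance, and announced the next-generation Windows 10 operating system. For me personally, becoming chairman of the Microsoft Asia-Pacific R&D Group this year opened a new chapter in my 20-year journey at Microsoft. The responsibility on my shoulders is heavier, but my passion is undiminished.

The spirit of “openness” and “geek innovation” is an evolution of Microsoft's culture that we have seen in the Satya era. “Openness” means not clinging to old rules and not being bound by the past: wherever users need us, Microsoft's services go. Releasing Office for iOS/Android and open-sourcing .NET are both commitments that the open, “new” Microsoft has made to its users. As for “geek innovation,” you have probably already heard the word “Garage”; Bill Gates first started his business in a garage. In 2014, Microsoft held a worldwide Hackathon and encouraged employees to join the “Microsoft Garage” project in their spare time to turn their ideas into reality. These measures are all meant to spark the spirit of “geek innovation” among Microsoft employees, and we are pleased to see change quietly taking hold inside Microsoft. The “Microsoft Garage” has already published a number of interesting projects, and I believe more eye-catching innovations will follow.

In 2015, I hope that colleagues at Microsoft Research Asia and the Microsoft Asia-Pacific R&D Group will maintain and carry forward the spirit of “openness” and “geek innovation.” Together, let us forge a “new” Microsoft and create more surprises for our users! Managing Director, Microsoft Research Asia

The 16th “Computing in the 21st Century” Conference Successfully Held

On October 29, 2014, the “Computing in the 21st Century” academic conference, jointly hosted by Microsoft Research Asia and Peking University, was held at Peking University. Including a winner of the Turing Award, known as the “Nobel Prize of computer science,” and [...] from Microsoft and academia

[...] breakthroughs in fields such as graphics and imaging: behind Cortana, Microsoft's voice assistant, lies a series of artificial intelligence technologies; big data and machine learning technologies help us better understand cities and -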

Operating System Support for Redundant Multithreading

Operating System Support for Redundant Multithreading. Dissertation submitted for the academic degree of Doktoringenieur (Dr.-Ing.) to Technische Universität Dresden, Faculty of Computer Science, by Dipl.-Inf. Björn Döbel, born 17 December 1980 in Lauchhammer. Supervisor: Prof. Dr. Hermann Härtig, Technische Universität Dresden. Reviewer: Prof. Frank Mueller, Ph.D., North Carolina State University. Subject referee (Fachreferent): Prof. Dr. Christof Fetzer, Technische Universität Dresden. Status talk: 29 February 2012. Submitted: 21 August 2014. Defended: 25 November 2014. For Jakob, *† 15 February 2013.

Contents: 1 Introduction; 1.1 Hardware meets Soft Errors; 1.2 An Operating System for Tolerating Soft Errors; 1.3 Whom can you Rely on?; 2 Why Do Transistors Fail And What Can Be Done About It?; 2.1 Hardware Faults at the Transistor Level; 2.2 Faults, Errors, and Failures – A Taxonomy; 2.3 Manifestation of Hardware Faults; 2.4 Existing Approaches to Tolerating Faults; 2.5 Thesis Goals and Design Decisions; 3 Redundant Multithreading as an Operating System Service; 3.1 Architectural Overview; 3.2 Process Replication; 3.3 Tracking Externalization Events; 3.4 Handling Replica System Calls; 3.5 Managing Replica Memory; 3.6 Managing Memory Shared with External Applications; 3.7 Hardware-Induced Non-Determinism; 3.8 Error Detection and Recovery; 4 Can We Put the Concurrency Back Into Redundant Multithreading?; 4.1 What is the Problem with Multithreaded Replication?; 4.2 Can we make Multithreading -

High Velocity Kernel File Systems with Bento

High Velocity Kernel File Systems with Bento. Samantha Miller, Kaiyuan Zhang, Mengqi Chen, and Ryan Jennings, University of Washington; Ang Chen, Rice University; Danyang Zhuo, Duke University; Thomas Anderson, University of Washington. https://www.usenix.org/conference/fast21/presentation/miller This paper is included in the Proceedings of the 19th USENIX Conference on File and Storage Technologies, February 23–25, 2021, 978-1-939133-20-5. Open access to the Proceedings of the 19th USENIX Conference on File and Storage Technologies is sponsored by USENIX.

Abstract: High development velocity is critical for modern systems. This is especially true for Linux file systems which are seeing increased pressure from new storage devices and new demands on storage systems. However, high velocity Linux kernel development is challenging due to the ease of introducing bugs, the difficulty of testing and debugging, and the lack of support for redeployment without service disruption. Existing approaches to high-velocity development of file systems for

[...] kernel-level debuggers and kernel testing frameworks makes this worse. The restricted and different kernel programming environment also limits the number of trained developers. Finally, upgrading a kernel module requires either rebooting the machine or restarting the relevant module, either way rendering the machine unavailable during the upgrade. In the cloud setting, this forces kernel upgrades to be batched to meet cloud-level availability goals. Slow development cycles are a particular problem for file systems. -

Microsoft 2012 Citizenship Report

Microsoft 2012 Citizenship Report. Contents:

Citizenship at Microsoft: Serving communities; Working responsibly; Citizenship governance; Setting priorities and stakeholder engagement; External frameworks; FY12 highlights and achievements.

Our Company: Our business; Where we are; Engaging our customers and partners; Our products; Investing in innovation.

Serving Communities: Creating opportunities for youth; Empowering youth through education and technology; Inspiring young imaginations; Realizing potential with new skills; Supporting youth-focused nonprofits; Empowering nonprofits; Donating software to nonprofits worldwide; Providing hardware to more people; Sharing knowledge to build capacity; Solutions in action; Employee giving; Helping employees make a difference; Humanitarian response; Providing assistance in times of need; Accessibility; Empowering people with disabilities; Engaging students with special needs; Improving seniors’ well-being.

Working Responsibly: Our people; Compensation and benefits; Diversity and inclusion; Training and development; Health and safety; Environment; Impact of our operations; Technology for the environment; Human rights; Affirming our commitment; Privacy and data security; Online safety; Freedom of expression; Responsible sourcing; Hardware production; Conflict minerals; Expanding our efforts; Governance; Corporate governance; Maintaining strong practices and performance; Public policy engagement; Compliance.

About this Report: Reporting year; Scope; Additional reporting; Feedback; United Nations Global Compact.

Cover: Participants at the 2012 Imagine Cup, Sydney, Australia. -

FY15 Safer Cities Whitepaper

Employing Information Technology to Combat Human Trafficking. Arthur T. Ball, Managing Director, Asia, Public Safety and National Security, Microsoft Corporation; Clyde W. Ford, CEO, Entegra Analytics.

Anti-trafficking advocates, law enforcement agencies (LEAs), governments, nongovernmental organizations (NGOs), and intergovernmental organizations (IGOs) have worked for years to combat human trafficking, but their progress to date has been limited. The issues are especially complex, and the solutions are not simple. Although technology facilitates this sinister practice, technology can also help fight it.

Advances in sociotechnical research, privacy, interoperability, cloud and mobile technology, and data sharing offer great potential to bring state-of-the-art technology to bear on human trafficking across borders, between jurisdictions, and among the various agencies on the front lines of this effort. As Microsoft shows in fighting other forms of digital crime, it believes technology companies should play an important role in efforts to disrupt human trafficking, by funding and facilitating the research that fosters innovation and harnesses advanced technology to more effectively disrupt this trade.

Microsoft and its partners are applying their industry experience to address technology-facilitated crime. They are investing in research, programs, and partnerships to support human rights and advance the fight against human trafficking. Progress is possible through increased public awareness, strong public-private partnerships (PPPs), and cooperation with intervention efforts that increase the risk for traffickers. All of these efforts must be based on a nuanced understanding of the influence of innovative technology on the problem and on the implications and consequences of employing such technology by the community of interest (COI) in stopping human trafficking. -

Summary Paper on Online Child Sexual Exploitation

SUMMARY PAPER ON ONLINE CHILD SEXUAL EXPLOITATION

ECPAT International is a global network of civil society organisations working together to end the sexual exploitation of children (SEC). ECPAT comprises member organisations in over 100 countries who generate knowledge, raise awareness, and advocate to protect children from all forms of sexual exploitation.

Key manifestations of sexual exploitation of children (SEC) include the exploitation of children in prostitution, the sale and trafficking of children for sexual purposes, online child sexual exploitation (OCSE), the sexual exploitation of children in travel and tourism (SECTT) and some forms of child, early and forced marriages (CEFM). None of these contexts or manifestations are isolated, and any discussion of one must be a discussion of SEC altogether.

Notably, these contexts and manifestations of SEC are becoming increasingly complex and interlinked as a result of drivers like greater mobility of people, evolving digital technology and rapidly expanding access to communications.

The Internet is a part of children’s lives. Information and Communication Technologies (ICT) are now as important to the education and social development of children and young people as they are to the overall global economy. According to research, children and adolescents under 18 account for an estimated one in three Internet users around the world [1] and global data indicates that children’s usage is increasing – both in terms of the number of children with access, and their time spent online. [2] While there is still disparity in access to new technologies between and within countries in the developed and developing world, technology now mediates almost all human activities in some way. Our lives exist on a continuum that includes online and offline domains simultaneously. It can therefore be argued that children are living in a digital world where on/offline -

Reining in Online Abuses

Technology and Innovation, Vol. 19, pp. 593-599, 2018. ISSN 1949-8241 • E-ISSN 1949-825X. Printed in the USA. All rights reserved. http://dx.doi.org/10.21300/19.3.2018.593 Copyright © 2018 National Academy of Inventors. www.technologyandinnovation.org

REINING IN ONLINE ABUSES. Hany Farid, Dartmouth College, Hanover, NH, USA.

Online platforms today are being used in deplorably diverse ways: recruiting and radicalizing terrorists; exploiting children; buying and selling illegal weapons and underage prostitutes; bullying, stalking, and trolling on social media; distributing revenge porn; stealing personal and financial data; propagating fake and hateful news; and more. Technology companies have been and continue to be frustratingly slow in responding to these real threats with real consequences. I advocate for the development and deployment of new technologies that allow for the free flow of ideas while reining in abuses. As a case study, I will describe the development and deployment of two such technologies, photoDNA and eGlyph, that are currently being used in the global fight against child exploitation and extremism.

Key words: Social media; Child protection; Counter-extremism

INTRODUCTION. Here are some sobering statistics: In 2016, the National Center for Missing and Exploited Children (NCMEC) received 8,000,000 reports of child pornography (CP), 460,000 reports of missing children, and 220,000 reports of sexual exploitation.

[...] number of CP reports. In the early 1980s, it was illegal in New York State for an individual to “promote any performance which includes sexual conduct by a child less than sixteen years of age.” In 1982, Paul Ferber was charged under this law with selling material that depicted underage children involved -

PhotoDNA & PhotoDNA Cloud Service

PhotoDNA & PhotoDNA Cloud Service Fact Sheet

PhotoDNA

Microsoft believes its customers are entitled to safer and more secure online experiences that are free from illegal, objectionable and unwanted content. PhotoDNA is a core element of Microsoft’s voluntary business strategy to protect its customers, systems and reputation by helping to create a safer online environment.

• Every single day, there are over 1.8 billion unique images uploaded and shared online. Stopping the distribution of illegal images of sexually abused children is like finding a needle in a haystack.
• In 2009 Microsoft partnered with Dartmouth College to develop PhotoDNA, a technology that aids in finding and removing some of the “worst of the worst” images of child sexual abuse from the Internet.
• Microsoft donated the PhotoDNA technology to the National Center for Missing & Exploited Children (NCMEC), which established a PhotoDNA-based program for online service providers to help disrupt the spread of child sexual abuse material online.
• PhotoDNA has become a leading best practice for combating child sexual abuse material on the Internet.
• PhotoDNA is provided free of charge to qualified companies and developers. Currently, more than 50 companies, including Facebook and Twitter, non-governmental organizations and law enforcement use PhotoDNA.

PhotoDNA Technology

PhotoDNA technology converts images into a greyscale format with uniform size, then divides the image into squares and assigns a numerical value that represents the unique shading found within each square.

• Together, those numerical values represent the “PhotoDNA signature,” or “hash,” of an image, which can then be compared to signatures of other images to find copies of a given image with incredible accuracy and at scale. -
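The steps the fact sheet describes (greyscale image, uniform size, division into squares, one numerical value per square, comparison of signatures) can be illustrated with a toy block-mean signature. This is only a sketch of the general idea and is not the PhotoDNA algorithm, whose details the fact sheet does not give. The function names, the nearest-neighbour resize, the 32-pixel size, the 4x4 grid, the use of the mean as the per-square value, and the sum-of-absolute-differences comparison are all assumptions made for illustration.

```python
from typing import List, Sequence

# A greyscale image as rows of pixel intensities in the range 0-255.
Gray = Sequence[Sequence[int]]


def resize_nearest(img: Gray, size: int) -> List[List[int]]:
    """Scale an image to size x size with nearest-neighbour sampling."""
    h, w = len(img), len(img[0])
    return [[img[r * h // size][c * w // size] for c in range(size)]
            for r in range(size)]


def block_signature(img: Gray, size: int = 32, grid: int = 4) -> List[int]:
    """Return one mean intensity per square of a grid x grid partition.

    Assumes size is divisible by grid so every square has the same area.
    """
    norm = resize_nearest(img, size)
    step = size // grid
    signature = []
    for br in range(grid):
        for bc in range(grid):
            cells = [norm[r][c]
                     for r in range(br * step, (br + 1) * step)
                     for c in range(bc * step, (bc + 1) * step)]
            signature.append(sum(cells) // len(cells))
    return signature


def signature_distance(a: Sequence[int], b: Sequence[int]) -> int:
    """Sum of absolute differences; 0 means the signatures are identical."""
    return sum(abs(x - y) for x, y in zip(a, b))
```

In this sketch a resized copy of an image maps to the same or a nearby signature, so a small distance suggests a copy while a large distance suggests a different image. A real system would need a far more robust signature and a carefully chosen threshold.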

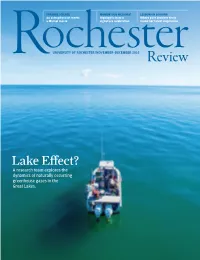

Lake Effect? A Research Team Explores the Dynamics of Naturally Occurring Greenhouse Gases in the Great Lakes

STRANGE SCIENCE: An astrophysicist meets a Marvel movie. MOMENTOUS MELIORA!: Highlights from a signature celebration. LESSONS IN LOOKING: Where poet Jennifer Grotz found her latest inspiration. UNIVERSITY OF ROCHESTER / November–December 2016. Lake Effect? A research team explores the dynamics of naturally occurring greenhouse gases in the Great Lakes.

PAYING IT FORWARD FOR FUTURE GENERATIONS. “You have to feel strongly about where you give. I really feel I owe the University for a lot of my career. My experience was due in part to someone else’s generosity. Through the George Eastman Circle, I can help students with fewer resources pursue their career aspirations at Rochester.” Virgil Joseph ’01, Vice President-Relationship Manager, Canandaigua National Bank & Trust, Rochester, New York. Member, George Eastman Circle Rochester Leadership Council. Supports: School of Arts and Sciences.

Invest in what you love. “WE’RE BOOMERS. We think we’ll be around forever, but finalizing our estate gifts felt good,” said Judy Ricker. Her husband, Ray, agrees. “It’s a win-win for us and the University,” he said. “We can provide for ourselves in retirement, then our daughter, and the school we both love.” The Rickers’ charitable remainder unitrust provides income for their family before creating three named funds at the Eastman School of Music. Those endowed funds (two professorships and one scholarship) will be around forever. They acknowledge Eastman’s celebrated history, and Ray and Judy’s confidence in the School’s future. Judy’s message to other boomers? “Get on this bus! Join us in ensuring the future of what you love most about the University.” Judith Ricker ’76E, ’81E (MM), ’91S (MBA) is a freelance oboist, a business consultant, and former executive vice president of brand research at Market Probe. -

The Power of Public-Private Partnerships in Eradicating Child Sexual Exploitation

EXPERT PAPER: THE POWER OF PUBLIC-PRIVATE PARTNERSHIPS IN ERADICATING CHILD SEXUAL EXPLOITATION. ERNIE ALLEN FOR ECPAT INTERNATIONAL. Ernie Allen, Principal, Allen Global Consulting, LLC and Senior Advisor with ECPAT International.

INTRODUCTION. Twenty years ago, the first World Congress Against Commercial Sexual Exploitation of Children awakened the world to the depth and extent of the insidious problem of child sexual exploitation. Since then, progress has been remarkable. Through efforts led by a global network to End Child Prostitution, Child Pornography and Trafficking of Children for Sexual Purposes or ECPAT, more victims are being identified; more offenders are being prosecuted; new laws are being enacted; and public awareness has reached its highest level. Yet child sexual exploitation is a bigger problem today than it was twenty years ago and it remains underreported and underappreciated. Further, with the advent of the internet, it has exploded to unprecedented levels. Prior to the internet, people with sexual interest in children felt isolated, aberrant, and alone. Today, they are part of a global community, able to interact online with those with similar interests worldwide. They share images, fantasies, techniques, even real children. And they do it all with virtual anonymity. In 2013, a man in Sweden was convicted of raping children even though he wasn’t in the same country as his victims when the assault took place. How is that possible? He hired men in the Philippines to obtain children as young as five and assault them while he directed the action and watched via webcam from the comfort of his home. Swedish authorities called it ‘virtual trafficking.’ [1]

Last year, law enforcement in the United Kingdom arrested 29 people for the use of children in Asia as young as six for

• The Netherlands has worked with social media including Twitter to remove child exploitation images quickly. -

PhotoDNA Cloud Service Customer Presentation Deck

2018/TEL58/LSG/IR/005 Session: 2. Microsoft Digital Crimes Unit. Submitted by: Microsoft. Roundtable on Best Practice for Enhancing Citizens’ Digital Literacy, Taipei, Chinese Taipei, 1 October 2018.

1 in 5 girls and 1 in 10 boys will be sexually abused. 500 images of sexually abused children will be traded online approximately every 60 seconds. www.microsoft.com/photodna

PhotoDNA Cloud Service: An intelligent solution for combatting Child Sexual Abuse Material (CSAM) in the Enterprise

Secure: Images are instantly converted to secure hashes and cannot be recreated. Images are never retained by Microsoft.
Efficient: Reduce the cost and increase the speed of detecting and reporting child sexual exploitation images.
Interoperability: Integrate via REST API on any platform or environment.

Reporting: For U.S.-based customers, the PhotoDNA Cloud Service provides an API to submit reports to the National Center for Missing and Exploited Children. Internationally based customers will need to determine how to submit reports on their own. Microsoft does not provide advice or counsel related to these legal requirements or obligations.

Visit the www.Microsoft.com/photodna webpage and select “Apply”. Identify images to verify. Compute PhotoDNA hashes for all images. Compare to hashes in the database. Takes care of most of the necessary functions -

Building a Better Mousetrap

SUPER-INTERMEDIARIES, CODE, HUMAN RIGHTS. IRA STEVEN NATHENSON*

Abstract: We live in an age of intermediated network communications. Although the internet includes many intermediaries, some stand heads and shoulders above the rest. This article examines some of the responsibilities of “Super-Intermediaries” such as YouTube, Twitter, and Facebook, intermediaries that have tremendous power over their users’ human rights. After considering the controversy arising from the incendiary YouTube video Innocence of Muslims, the article suggests that Super-Intermediaries face a difficult and likely impossible mission of fully servicing the broad tapestry of human rights contained in the International Bill of Human Rights. The article further considers how intermediary content-control procedures focus too heavily on intellectual property, and are poorly suited to balancing the broader and often-conflicting set of values embodied in human rights law. Finally, the article examines a number of steps that Super-Intermediaries might take to resolve difficult content problems and ultimately suggests that intermediaries subscribe to a set of process-based guiding principles, a form of Digital Due Process, so that intermediaries can better foster human dignity.

* Associate Professor of Law, St. Thomas University School of Law, inathen- [email protected]. I would like to thank the editors of the Intercultural Human Rights Law Review, particularly Amber Bounelis and Tina Marie Trunzo Lute, as well as symposium editor Lauren Smith. Additional thanks are due to Daniel Joyce and Molly Land for their comments at the symposium. This article also benefitted greatly from suggestions made by the participants of the 2013 Third Annual Internet Works-in-Progress conference, including Derek Bambauer, Marc Blitz, Anupam Chander, Chloe Georas, Andrew Gilden, Eric Goldman, Dan Hunter, Fred von Lohmann, Mark Lemley, Rebecca Tushnet, and Peter Yu.