Compression Rate Comparison Between Elias Delta and Unary Codes

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Error Correction Capacity of Unary Coding

Pushpa Sree Potluri

Abstract

Unary coding has found applications in data compression, neural network training, and in explaining the production mechanism of birdsong. Unary coding is redundant; therefore it should have inherent error correction capacity. An expression for the error correction capability of unary coding for the correction of single errors has been derived in this paper.

1. Introduction

The unary number system is the base-1 system. It is the simplest number system to represent natural numbers. The unary code of a number n is represented by n ones followed by a zero or by n zero bits followed by 1 bit [1]. Unary codes have found applications in data compression [2],[3], neural network training [4]-[11], and biology in the study of avian birdsong production [12]-[14]. One can also claim that the additivity of physics is somewhat like the tallying of unary coding [15],[16]. Unary coding has also been seen as the precursor to the development of number systems [17]. Some representations of unary number system use n-1 ones followed by a zero or with the corresponding number of zeroes followed by a one. Here we use the mapping of the left column of Table 1.

Table 1. An example of the unary code

| N | Unary code | Alternative code |
|---|---|---|
| 0 | 0 | 0 |
| 1 | 10 | 01 |
| 2 | 110 | 001 |
| 3 | 1110 | 0001 |
| 4 | 11110 | 00001 |
| 5 | 111110 | 000001 |
| 6 | 1111110 | 0000001 |
| 7 | 11111110 | 00000001 |
| 8 | 111111110 | 000000001 |
| 9 | 1111111110 | 0000000001 |
| 10 | 11111111110 | 00000000001 |

The unary number system may also be seen as a space coding of numerical information where the location determines the value of the number. -
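The left-column mapping of Table 1 (n ones followed by a zero) can be sketched in a few lines. This is only an illustration of that mapping, not code from the paper; the function names `unary_encode` and `unary_decode` are hypothetical, and bits are held in a string for readability.

```python
def unary_encode(n):
    """Return the unary codeword for n: n ones followed by a single zero."""
    if n < 0:
        raise ValueError("unary coding is defined for non-negative integers")
    return "1" * n + "0"


def unary_decode(bits):
    """Split a bit string of concatenated unary codewords back into integers."""
    values = []
    run = 0
    for bit in bits:
        if bit == "1":
            run += 1          # still inside a run of ones
        elif bit == "0":
            values.append(run)  # a zero terminates the current codeword
            run = 0
        else:
            raise ValueError("bit string may only contain '0' and '1'")
    if run:
        raise ValueError("trailing ones without a terminating zero")
    return values


stream = "".join(unary_encode(n) for n in [3, 0, 1])
print(stream)                # 1110010
print(unary_decode(stream))  # [3, 0, 1]
```

Because every codeword ends at its first zero, the concatenated stream needs no separators, at the cost of n + 1 bits for the value n.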

Image Data Compression Introduction to Coding

© 2018-19 Alexey Pak, Lehrstuhl für Interaktive Echtzeitsysteme, Fakultät für Informatik, KIT

Review: data reduction steps (discretization / digitization)

[Figure: signal chain from the continuous 2D signal g(x, y, t) (light intensity on sensor) through spatial discretization, temporal discretization, and digitization to the fully digital signal gq(xa, yb, ti). Labeled stages: analog signal (light intensity at a pixel), spatially discrete signal (pixel-averaged intensity), discrete time signal (pixel voltage readings), discrete value signal (e.g., number of electrons at each pixel of the CCD matrix).]

Review: data reduction steps (discretization / digitization)

Discretization of 1D continuous-time signals (sampling)
• Important signal transformations: up- and down-sampling
• Information-preserving down-sampling: rate determined based on signal bandwidth
• Fourier space allows simple interpretation of the effects due to decimation and interpolation (techniques of up-/down-sampling)

Scalar (one-dimensional) signal quantization of continuous-value signals
• Quantizer types: uniform, simple non-uniform (with a dead zone, with a limited amplitude)
• Advanced quantizers: PDF-optimized (Max-Lloyd algorithm), perception-optimized, SNR-optimized
• Implementation: pre-processing with a compander function + simple quantization

Vector (multi-dimensional) signal quantization
• Terminology: quantization, reconstruction, codebook, distance metric, Voronoi regions, space partitioning
• Relation to the general classification problem (from Machine Learning)
• Linde-Buzo-Gray algorithm of constructing (sub-optimal) codebooks (aka k-means)

LBG vector quantization – 2D example [Linde, Buzo, Gray ‘80]: 1. -

The Pillars of Lossless Compression Algorithms a Road Map and Genealogy Tree

International Journal of Applied Engineering Research ISSN 0973-4562 Volume 13, Number 6 (2018) pp. 3296-3414 © Research India Publications. http://www.ripublication.com

The Pillars of Lossless Compression Algorithms a Road Map and Genealogy Tree

Evon Abu-Taieh, PhD, Information System Technology Faculty, The University of Jordan, Aqaba, Jordan.

Abstract

This paper presents the pillars of lossless compression algorithms, methods and techniques. The paper counted more than 40 compression algorithms. Although each algorithm is an independent in its own right, still; these algorithms interrelate genealogically and chronologically. The paper then presents the genealogy tree suggested by researcher. The tree shows the interrelationships between the 40 algorithms. Also, the tree showed the chronological order the algorithms came to life. The time relation shows the cooperation among the scientific society and how the amended each other's work. The paper presents the 12 pillars researched in this paper, and a comparison table is to be developed.

[...] tree is presented in the last section of the paper after presenting the 12 main compression algorithms each with a practical example.

The paper first introduces Shannon–Fano code showing its relation to Shannon (1948), Huffman coding (1952), FANO (1949), Run Length Encoding (1967), Peter's Version (1963), Enumerative Coding (1973), LIFO (1976), FiFO Pasco (1976), Stream (1979), P-Based FIFO (1981). Two examples are to be presented one for Shannon-Fano Code and the other is for Arithmetic Coding. Next, Huffman code is to be presented with simulation example and algorithm. The third is Lempel-Ziv-Welch (LZW) Algorithm which hatched more than 24 -

The Deep Learning Solutions on Lossless Compression Methods for Alleviating Data Load on IoT Nodes in Smart Cities

sensors

Article

The Deep Learning Solutions on Lossless Compression Methods for Alleviating Data Load on IoT Nodes in Smart Cities

Ammar Nasif *, Zulaiha Ali Othman and Nor Samsiah Sani

Center for Artificial Intelligence Technology (CAIT), Faculty of Information Science & Technology, University Kebangsaan Malaysia, Bangi 43600, Malaysia; [email protected] (Z.A.O.); [email protected] (N.S.S.)
* Correspondence: [email protected]

Abstract: Networking is crucial for smart city projects nowadays, as it offers an environment where people and things are connected. This paper presents a chronology of factors on the development of smart cities, including IoT technologies as network infrastructure. Increasing IoT nodes leads to increasing data flow, which is a potential source of failure for IoT networks. The biggest challenge of IoT networks is that the IoT may have insufficient memory to handle all transaction data within the IoT network. We aim in this paper to propose a potential compression method for reducing IoT network data traffic. Therefore, we investigate various lossless compression algorithms, such as entropy or dictionary-based algorithms, and general compression methods to determine which algorithm or method adheres to the IoT specifications. Furthermore, this study conducts compression experiments using entropy (Huffman, Adaptive Huffman) and Dictionary (LZ77, LZ78) as well as five different types of datasets of the IoT data traffic. Though the above algorithms can alleviate the IoT data traffic, adaptive Huffman gave the best compression algorithm. Therefore, in this paper, we aim to propose a conceptual compression method for IoT data traffic by improving an adaptive

Citation: Nasif, A.; Othman, Z.A.; Sani, N.S. -

Habilitation à Diriger des Recherches: From Image Coding And

Habilitation à Diriger des Recherches

From image coding and representation to robotic vision

Marie BABEL, Université de Rennes 1, June 29th 2012

Bruno Arnaldi, Professor, INSA Rennes, Committee chairman
Ferran Marques, Professor, Technical University of Catalonia, Reviewer
Benoît Macq, Professor, Université Catholique de Louvain, Reviewer
Frédéric Dufaux, CNRS Research Director, Telecom ParisTech, Reviewer
Charly Poulliat, Professor, INP-ENSEEIHT Toulouse, Examiner
Claude Labit, Inria Research Director, Inria Rennes, Examiner
François Chaumette, Inria Research Director, Inria Rennes, Examiner
Joseph Ronsin, Professor, INSA Rennes, Examiner

IRISA UMR CNRS 6074 / INRIA - Equipe Lagadic
IETR UMR CNRS 6164 - Equipe Images

Contents

1 Introduction
  1.1 An overview of my research project
  1.2 Coding and representation tools: QoS/QoE context
  1.3 Image and video representation: towards pseudo-semantic technologies
  1.4 Organization of the document
2 Still image coding and advanced services
  2.1 JPEG AIC calls for proposal: a constrained applicative context
    2.1.1 Evolution of codecs: JPEG committee
    2.1.2 Response to the call for JPEG-AIC
  2.2 Locally Adaptive Resolution compression framework: an overview
    2.2.1 Principles and properties
    2.2.2 Lossy to lossless scalable solution
    2.2.3 Hierarchical colour region representation and coding
    2.2.4 Interoperability
  2.3 Quadtree Partitioning: principles
    2.3.1 Basic homogeneity criterion: morphological gradient
    2.3.2 Enhanced color-oriented homogeneity criterion
      2.3.2.1 Motivations
      2.3.2.2 Results
  2.4 Interleaved S+P: the pyramidal profile -

Efficient Inverted Index Compression Algorithm Characterized by Faster

entropy

Article

Efficient Inverted Index Compression Algorithm Characterized by Faster Decompression Compared with the Golomb-Rice Algorithm

Andrzej Chmielowiec 1,* and Paweł Litwin 2

1 The Faculty of Mechanics and Technology, Rzeszow University of Technology, Kwiatkowskiego 4, 37-450 Stalowa Wola, Poland
2 The Faculty of Mechanical Engineering and Aeronautics, Rzeszow University of Technology, Powstańców Warszawy 8, 35-959 Rzeszow, Poland; [email protected]
* Correspondence: [email protected]

Abstract: This article deals with compression of binary sequences with a given number of ones, which can also be considered as a list of indexes of a given length. The first part of the article shows that the entropy H of random n-element binary sequences with exactly k elements equal one satisfies the inequalities k log2(0.48 · n/k) < H < k log2(2.72 · n/k). Based on this result, we propose a simple coding using fixed length words. Its main application is the compression of random binary sequences with a large disproportion between the number of zeros and the number of ones. Importantly, the proposed solution allows for a much faster decompression compared with the Golomb-Rice coding with a relatively small decrease in the efficiency of compression. The proposed algorithm can be particularly useful for database applications for which the speed of decompression is much more important than the degree of index list compression.

Keywords: inverted index compression; Golomb-Rice coding; runs coding; sparse binary sequence compression

Citation: Chmielowiec, A.; Litwin, P. Efficient Inverted Index Compression Algorithm Characterized by Faster Decompression Compared with the Golomb-Rice Algorithm. -
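The inequality quoted in the abstract can be checked numerically for a few sample sizes. The sketch below assumes that the sequences are drawn uniformly at random, so that H equals log2 of the binomial coefficient C(n, k); that reading, the helper names, and the sample values of n and k are assumptions made for illustration and are not taken from the article.

```python
import math


def entropy_bits(n, k):
    """Entropy in bits of a uniformly random n-bit sequence with exactly k ones."""
    return math.log2(math.comb(n, k))


def quoted_bounds(n, k):
    """Lower and upper bounds k*log2(0.48*n/k) and k*log2(2.72*n/k) from the abstract."""
    return k * math.log2(0.48 * n / k), k * math.log2(2.72 * n / k)


for n, k in [(1_000, 10), (1_000_000, 100), (64, 32)]:
    low, high = quoted_bounds(n, k)
    h = entropy_bits(n, k)
    print(f"n={n}, k={k}: {low:.1f} < {h:.1f} < {high:.1f} -> {low < h < high}")
```

For sparse sequences (k much smaller than n) the two bounds differ by only about 2.5 bits per one, which is what makes a simple fixed-length scheme plausible.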

Techniques for Inverted Index Compression

Techniques for Inverted Index Compression GIULIO ERMANNO PIBIRI, ISTI-CNR, Italy ROSSANO VENTURINI, University of Pisa, Italy The data structure at the core of large-scale search engines is the inverted index, which is essentially a collection of sorted integer sequences called inverted lists. Because of the many documents indexed by such engines and stringent performance requirements imposed by the heavy load of queries, the inverted index stores billions of integers that must be searched efficiently. In this scenario, index compression is essential because it leads to a better exploitation of the computer memory hierarchy for faster query processing and, at the same time, allows reducing the number of storage machines. The aim of this article is twofold: first, surveying the encoding algorithms suitable for inverted index compression and, second, characterizing the performance of the inverted index through experimentation. CCS Concepts: • Information systems → Search index compression; Search engine indexing. Additional Key Words and Phrases: Inverted Indexes; Data Compression; Efficiency ACM Reference Format: Giulio Ermanno Pibiri and Rossano Venturini. 2020. Techniques for Inverted Index Compression. ACM Comput. Surv. 1, 1 (August 2020), 35 pages. https://doi.org/10.1145/nnnnnnn.nnnnnnn 1 INTRODUCTION Consider a collection of textual documents each described, for this purpose, as a set of terms. For each distinct term t appearing in the collection, an integer sequence St is built and lists, in sorted order, all the identifiers of the documents (henceforth, docIDs) where the term appears. The sequence St is called the inverted list, or posting list, of the term t and the set of inverted lists for all the distinct terms is the subject of this article – the data structure known as the inverted index. -
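The construction described in the introduction above (one sorted list of docIDs per distinct term) can be sketched as follows. This is a toy illustration under simplifying assumptions: terms are obtained by lowercasing and splitting on whitespace, and the function name `build_inverted_index` and the sample collection are hypothetical.

```python
from collections import defaultdict


def build_inverted_index(docs):
    """Map each distinct term to the sorted list of docIDs in which it appears.

    `docs` maps an integer docID to the document text.
    """
    postings = defaultdict(set)
    for doc_id, text in docs.items():
        # Treat each document as a set of terms, as in the excerpt above.
        for term in set(text.lower().split()):
            postings[term].add(doc_id)
    return {term: sorted(ids) for term, ids in postings.items()}


collection = {
    1: "unary codes are simple",
    2: "elias codes are universal",
    3: "simple universal codes",
}
index = build_inverted_index(collection)
print(index["codes"])      # [1, 2, 3]
print(index["universal"])  # [2, 3]
```

Each value in `index` plays the role of an inverted list S_t; it is these sorted integer sequences that the compression techniques surveyed in the article operate on.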

Compressing Integers for Fast File Access

Hugh E. Williams and Justin Zobel
Department of Computer Science, RMIT University, GPO Box 2476V, Melbourne 3001, Australia
Email: {hugh,jz}@cs.rmit.edu.au

Fast access to files of integers is crucial for the efficient resolution of queries to databases. Integers are the basis of indexes used to resolve queries, for example, in large internet search systems and numeric data forms a large part of most databases. Disk access costs can be reduced by compression, if the cost of retrieving a compressed representation from disk and the CPU cost of decoding such a representation is less than that of retrieving uncompressed data. In this paper we show experimentally that, for large or small collections, storing integers in a compressed format reduces the time required for either sequential stream access or random access. We compare different approaches to compressing integers, including the Elias gamma and delta codes, Golomb coding, and a variable-byte integer scheme. As a conclusion, we recommend that, for fast access to integers, files be stored compressed.

Keywords: Integer compression, variable-bit coding, variable-byte coding, fast file access, scientific and numeric databases.

1. INTRODUCTION

Many data processing applications depend on efficient access to files of integers. For example, integer data is prevalent in scientific and financial databases, and the indexes to databases can consist of large sequences of integer record identifiers.

[...] codes [2], and parameterised Golomb codes [3]; second, we evaluate byte-wise storage using standard four-byte integers and a variable-byte scheme. We have found in our experiments that coding using a tailored integer compression scheme can allow retrieval to be up to twice as fast than with integers -
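The Elias gamma and delta codes named in the abstract above can be sketched as follows. This is a minimal illustration based on the standard textbook definitions, not the authors' implementation; the function names are hypothetical, and codewords are built as strings of '0' and '1' for readability rather than as packed bits.

```python
def elias_gamma(n):
    """Elias gamma code of n >= 1: (len - 1) zeros, then n in binary."""
    if n < 1:
        raise ValueError("Elias gamma is defined for integers >= 1")
    binary = bin(n)[2:]  # binary representation without the '0b' prefix
    return "0" * (len(binary) - 1) + binary


def elias_delta(n):
    """Elias delta code of n >= 1: gamma code of the bit length, then n without its leading 1."""
    if n < 1:
        raise ValueError("Elias delta is defined for integers >= 1")
    binary = bin(n)[2:]
    return elias_gamma(len(binary)) + binary[1:]


for value in (1, 2, 10, 17, 1000):
    gamma, delta = elias_gamma(value), elias_delta(value)
    print(value, gamma, delta, len(gamma), len(delta))
```

Gamma is shorter for small values, while delta overtakes it as values grow (for 1000, 19 bits versus 16 bits), which is why the choice between them depends on the distribution of the integers being stored.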

Start, Step, Stop Unary Coding for Data Compression

Europäisches Patentamt / European Patent Office / Office européen des brevets

Publication number: 0 340 041 A2

EUROPEAN PATENT APPLICATION

Application number: 89304352.1
Int. Cl.4: H 03 M 7/40, G 06 F 15/40
Date of filing: 28.04.89
Priority: 29.04.88 US 187697
Date of publication of application: 02.11.89 Bulletin 89/44
Designated Contracting States: DE FR GB
Applicant: XEROX CORPORATION, Xerox Square - 020, Rochester New York 14644 (US)
Inventor: Fiala, Edward R., 1018 Robin Way, Sunnyvale California 94087 (US)
Inventor: Greene, Daniel H., 942 Aster Court, Sunnyvale California 94086 (US)
Representative: Goode, Ian Roy et al, Rank Xerox Limited Patent Department, 364 Euston Road, London NW1 3BL (GB)

Start, step, stop unary coding for data compression.

Start, Step, Stop unary coding provides a family of variable length codewords for encoding compressed data, such as the copies and literals of the various textual substitution data compression processes disclosed herein. Textual substitution data compression is, however, only one example of one type of data compression in which this <start, step, stop> unary coding may be used to advantage.

[Figure: FIG. 3]

EP 0 340 041 A2

Description

START, STEP, STOP UNARY CODING FOR DATA COMPRESSION

This invention relates to digital data compression systems and, more particularly, to adaptive and invertible or lossless digital data compression systems.

Reference is made to our concurrently filed EP application (D/88068), entitled "Search Tree Data Structure Encoding for Textual Substitution Data Compression Systems". A common detailed description has been used because the inventions covered by the different applications may be combined in various ways. -

Entropy Coding and Different Coding Techniques

Journal of Network Communications and Emerging Technologies (JNCET) www.jncet.org Volume 6, Issue 5, May (2016)

Entropy Coding and Different Coding Techniques

Sandeep Kaur, Asst. Prof. in CSE Dept, CGC Landran, Mohali
Sukhjeet Singh, Asst. Prof. in CS Dept, TSGGSK College, Amritsar.

Abstract – In today’s digital world information exchange is being held electronically. So there arises a need for secure transmission of the data. Besides security there are several other factors such as transfer speed, cost, transmission errors etc. that play a vital role in the transmission process. The need for an efficient technique for compression of images is ever increasing because raw images need large amounts of disk space, which is a big disadvantage during transmission and storage. This paper provides a basic introduction to entropy encoding and different coding techniques. It also gives a comparison between various coding techniques.

Index Terms – Huffman coding, DEFLATE, VLC, UTF-8, Golomb coding.

1. INTRODUCTION

1.1 Entropy Encoding

In information theory an entropy encoding is a lossless data [...]

2. CODING TECHNIQUES

1. Huffman Coding-

In computer science and information theory, a Huffman code is a particular type of optimal prefix code that is commonly used for lossless data compression.

1.1 Basic principles of Huffman Coding-

Huffman coding is a popular lossless Variable Length Coding (VLC) scheme, based on the following principles:
(a) Shorter code words are assigned to more probable symbols and longer code words are assigned to less probable symbols.
(b) No code word of a symbol is a prefix of another code word. This makes Huffman coding uniquely decodable.
(c) Every source symbol must have a unique code word assigned to it. -
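Principles (a) to (c) of Huffman coding listed in the excerpt above can be seen in a small construction. The sketch below is a common heap-based way to build a Huffman code, given here as an illustration rather than as the paper's algorithm; the function name `huffman_code` and the example probabilities are assumptions.

```python
import heapq
from itertools import count


def huffman_code(weights):
    """Build a Huffman code for a dict mapping symbols to positive weights."""
    tie = count()  # tie-breaker so the heap never has to compare dicts
    heap = [(w, next(tie), {sym: ""}) for sym, w in weights.items()]
    heapq.heapify(heap)
    if len(heap) == 1:
        # A single symbol still needs a one-bit code word.
        return {sym: "0" for sym in weights}
    while len(heap) > 1:
        # Merge the two least probable subtrees, prefixing their code words.
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        merged = {sym: "0" + code for sym, code in left.items()}
        merged.update({sym: "1" + code for sym, code in right.items()})
        heapq.heappush(heap, (w1 + w2, next(tie), merged))
    return heap[0][2]


probabilities = {"a": 0.5, "b": 0.25, "c": 0.125, "d": 0.125}
code = huffman_code(probabilities)
for symbol in sorted(code):
    print(symbol, code[symbol])
```

With these probabilities the most likely symbol receives a 1-bit code word and the two least likely ones receive 3-bit code words, so the average length is 1.75 bits per symbol, and no code word is a prefix of another.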

Entropy and Lossless Coding No

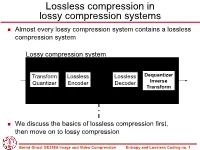

Bernd Girod: EE398A Image and Video Compression, Entropy and Lossless Coding

Lossless compression in lossy compression systems
• Almost every lossy compression system contains a lossless compression system
• [Figure: lossy compression system: Transform, Quantizer, Lossless Encoder, Lossless Decoder, Dequantizer, Inverse Transform; the lossless encoder and decoder together form the lossless compression system]
• We discuss the basics of lossless compression first, then move on to lossy compression

Topics in lossless compression
• Binary decision trees and variable length coding
• Entropy and bit-rate
• Prefix codes, Huffman codes, Golomb codes
• Joint entropy, conditional entropy, sources with memory
• Fax compression standards
• Arithmetic coding

Example: 20 Questions
• Alice thinks of an outcome (from a finite set), but does not disclose her selection.
• Bob asks a series of yes/no questions to uniquely determine the outcome chosen. The goal of the game is to ask as few questions as possible on average.
• Our goal: Design the best strategy for Bob.

Example: 20 Questions (cont.)
• Which strategy is better?
• [Figure: two binary decision trees over the outcomes A to F, with branches labeled 0 (=no) and 1 (=yes)]
• Observation: The collection of questions and answers yield a binary code for each outcome.

Fixed length codes
• [Figure: balanced binary tree with branches labeled 0 and 1 and leaves A to H]
• Average description length for K outcomes: l_av = log2 K
• Optimum for equally likely outcomes
• Verify by modifying tree -

STUDIES on GOPALA-HEMACHANDRA CODES and THEIR APPLICATIONS. by Logan Childers December, 2020

Director of Thesis: Krishnan Gopalakrishnan, PhD
Major Department: Computer Science

Gopala-Hemachandra codes are a variation of the Fibonacci universal code and have applications in data compression and cryptography. We study a specific parameterization of Gopala-Hemachandra codes and present several results pertaining to these codes. We show that GHa(n) always exists for n ≥ 1 when −2 ≥ a ≥ −4, meaning that these are universal codes. We develop two new algorithms to determine whether a GH code exists for a given a and n, and to construct them if they exist. We also prove that when a = −(4 + k) where k ≥ 1, that there are at most k consecutive integers for which GH codes do not exist. In 2014, Nalli and Ozyilmaz proposed a stream cipher based on GH codes. We show that this cipher is insecure and provide experimental results on the performance of our program that cracks this cipher.

A Thesis Presented to The Faculty of the Department of Computer Science, East Carolina University, In Partial Fulfillment of the Requirements for the Degree Master of Science in Computer Science

Copyright Logan Childers, 2020

APPROVED BY:
DIRECTOR OF THESIS: Krishnan Gopalakrishnan, PhD
COMMITTEE MEMBER: Venkat Gudivada, PhD
COMMITTEE MEMBER: Karl Abrahamson, PhD
CHAIR OF THE DEPARTMENT OF COMPUTER SCIENCE: Venkat Gudivada, PhD
DEAN OF THE GRADUATE SCHOOL: Paul J. Gemperline, PhD

Table of Contents
LIST OF TABLES