Cross-Device Computation Coordination for Mobile Collocated Interactions with Wearables

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


New Nokia Strengthens Brand with Google's Android One & a Curved

Publication date: 25 Feb 2018. Author: Omdia Analyst. Brought to you by Informa Tech.

New Nokia strengthens brand with Google’s Android One & a curved display smartphone

At MWC 2018, Nokia-brand licensee HMD Global unveiled a new Google relationship and five striking new handsets. Notable features include:

- Pure Android One platform as standard: HMD has extended its existing focus on delivering a “pure Android” experience with monthly security updates, with a commitment that all new smartphones would be part of Google’s Android One program, or at the lowest tier, Android Oreo (Go edition).
- New Nokia 8110: a colorful modern version of the original “Matrix” phone; 4G LTE featurephone including VoLTE & mobile hotspot; running Kai OS, Qualcomm 205; expected availability: MENA, China, Europe; Euro 79.
- Nokia 1: Google Android Oreo (Go Edition); the return of colorful Nokia Xpress-on swappable covers; MTK 6737M; 1GB RAM; 8GB storage; 4.5” FWVGA IPS display; availability: India, Australia, rest of APAC, Europe, Latin America; dual & single SIM; $85.
- Nokia 6 (2018): dual anodized metal design; 5.5” 1080P IPS display; 16MP rear camera with Zeiss optics; Snapdragon 630; LTE Cat 4; Android One; availability: Latin America, Europe, Hong Kong, Taiwan, rest of APAC; dual & single SIM; Euro 279.
- Nokia 7 Plus: dual anodized metal design; 6” 18:9 HD+ display; 12MP rear camera with Zeiss; 16MP front camera with Zeiss; return of Pro camera UX from Lumia 1020; Snapdragon 660; LTE Cat 6; Android One; availability: China, Hong Kong, Taiwan, rest of APAC, Europe; dual & single SIM; Euro 399.
- Nokia 8 Sirocco: dual edge curved display, LG 5.5” pOLED; super compact design; steel frame; Gorilla Glass 5 front & back; 12/13MP rear dual camera with Zeiss; Pro Camera UX; Qi wireless charging; IP67; Android One; Snapdragon 835; LTE Cat 12 down, Cat 13 up; availability: Europe, China, MENA; dual & single SIM; Euro 749.

Flash Yellow Powered by the Nationwide Sprint 4G LTE Network Table of Contents

How to Build a Successful Business with Flash Yellow powered by the Nationwide Sprint 4G LTE Network

Table of Contents

This playbook contains everything you need to build a successful business with Flash Wireless!

1. Understand your customer’s needs (page 3)
2. Recommend Flash Yellow in strong service areas (page 4)
   a. Strong service map (page 5)
3. Help your customer decide on a service plan (page 6)
4. Ensure your customer is on the right device (page 8)
   a. If they are buying a new device (page 9)
   b. If they are bringing their own device (page 15)
5. Device Appendix: Is your customer’s device compatible? (page 28)

Step 1. Understand your customer’s needs

- Mostly voice and text: the Flash Yellow network would be a good fit in most metropolitan areas. For suburban / rural areas, check to see if they live in a strong Flash Yellow service area.
- Average to heavy data user: check to see if they live in a strong Flash Yellow service area. See page 5. If not, guide them to the Flash Green or Flash Purple network to ensure they get the best customer experience.

Remember, a good customer experience is the key to keeping a long-term customer!

Step 2. Recommend Flash Yellow in strong areas

- Review Flash Yellow’s strong service areas. Focus your Flash Yellow customer acquisition efforts on these areas to ensure high customer satisfaction and retention. See page 5.
  - This is a top 24 list; the network is strong in other areas too.

Xiaomi Mi A1 User Manual

Xiaomi Mi A1 User Manual

This user manual was written for software version A1/7.1.2 N2G47H. Some functions may change after updates!

Introduction

Thank you for choosing a Xiaomi product! Please read this user manual carefully before use so that you can operate the device easily. To provide better service, the contents of this manual may change! The functions and features of the device may change without prior notice! If you notice a discrepancy, visit our website for the latest information!

Getting started

Safety warnings

Always follow the instructions below when using the device. Doing so reduces the risk of fire, electric shock, and accidents.

Warning: To avoid electric shock, do not use the product in the rain or near water. Unplug the power adapter from the device before maintenance. Keep the device away from strong heat sources and avoid direct sunlight. Protect the adapter cable. Route the cable so that it cannot be stepped on and cannot be damaged by other equipment placed on or next to it. Pay particular attention to the cable at the device's connector. Use only the power adapter supplied with the device. Using another adapter voids the warranty. Unplug the adapter from the wall socket when you are not using it to charge the device.

- Keep the phone and its accessories out of the reach of children.
- Keep the phone away from moisture, which can damage the device. Do not use chemicals to clean the device.
- The device may be disassembled only by an authorized person (brand service center).
- If the device gets wet, switch it off immediately. Do not remove the back cover, and do not use a heating or drying appliance (for example a hair dryer or microwave oven) to dry it.
- If you have any questions, contact the service center.

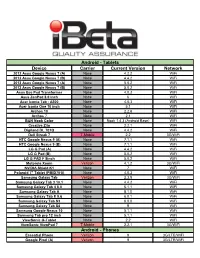

Ibeta-Device-Invento

Android - Tablets

| Device | Carrier | Current Version | Network |
| --- | --- | --- | --- |
| 2012 Asus Google Nexus 7 (A) | None | 4.2.2 | WiFi |
| 2012 Asus Google Nexus 7 (B) | None | 4.4.2 | WiFi |
| 2013 Asus Google Nexus 7 (A) | None | 5.0.2 | WiFi |
| 2013 Asus Google Nexus 7 (B) | None | 5.0.2 | WiFi |
| Asus Eee Pad Transformer | None | 4.0.3 | WiFi |
| Asus ZenPad 8.0 inch | None | 6 | WiFi |
| Acer Iconia Tab - A500 | None | 4.0.3 | WiFi |
| Acer Iconia One 10 inch | None | 5.1 | WiFi |
| Archos 10 | None | 2.2.6 | WiFi |
| Archos 7 | None | 2.1 | WiFi |
| B&N Nook Color | None | Nook 1.4.3 (Android Base) | WiFi |
| Creative Ziio | None | 2.2.1 | WiFi |
| Digiland DL 701Q | None | 4.4.2 | WiFi |
| Dell Streak 7 | T-Mobile | 2.2 | 3G/WiFi |
| HTC Google Nexus 9 (A) | None | 7.1.1 | WiFi |
| HTC Google Nexus 9 (B) | None | 7.1.1 | WiFi |
| LG G Pad (A) | None | 4.4.2 | WiFi |
| LG G Pad (B) | None | 5.0.2 | WiFi |
| LG G PAD F 8inch | None | 5.0.2 | WiFi |
| Motorola Xoom | Verizon | 4.1.2 | 3G/WiFi |
| NVIDIA Shield K1 | None | 7 | WiFi |
| Polaroid 7" Tablet (PMID701i) | None | 4.0.3 | WiFi |
| Samsung Galaxy Tab | Verizon | 2.3.5 | 3G/WiFi |
| Samsung Galaxy Tab 3 10.1 | None | 4.4.2 | WiFi |
| Samsung Galaxy Tab 4 8.0 | None | 5.1.1 | WiFi |
| Samsung Galaxy Tab A | None | 8.1.0 | WiFi |
| Samsung Galaxy Tab E 9.6 | None | 6.0.1 | WiFi |
| Samsung Galaxy Tab S3 | None | 8.0.0 | WiFi |
| Samsung Galaxy Tab S4 | None | 9 | WiFi |
| Samsung Google Nexus 10 | None | 5.1.1 | WiFi |
| Samsung Tab pro 12 inch | None | 5.1.1 | WiFi |
| ViewSonic G-Tablet | None | 2.2 | WiFi |
| ViewSonic ViewPad 7 | T-Mobile | 2.2.1 | 3G/WiFi |

Android - Phones

| Device | Carrier | Current Version | Network |
| --- | --- | --- | --- |
| Essential Phone | Verizon | 9 | 3G/LTE/WiFi |
| Google Pixel (A) | Verizon | 9 | 3G/LTE/WiFi |
| Google Pixel (B) | Verizon | 8.1 | 3G/LTE/WiFi |
| Google Pixel (C) | Factory Unlocked | 9 | 3G/LTE/WiFi |
| Google Pixel 2 | Verizon | 8.1 | 3G/LTE/WiFi |
| Google Pixel | | | |

In the United States District Court for the Eastern District of Texas: LOGANTREE LP, Plaintiff, v. LG ELECTRONICS, INC., and LG

Case 4:21-cv-00332 Document 1 Filed 04/27/21 Page 1 of 14 PageID #: 1

IN THE UNITED STATES DISTRICT COURT FOR THE EASTERN DISTRICT OF TEXAS

LOGANTREE LP, Plaintiff, v. LG ELECTRONICS, INC., and LG ELECTRONICS USA, INC., Defendants.

CIVIL ACTION NO.

JURY DEMAND

PLAINTIFF’S ORIGINAL COMPLAINT

TABLE OF CONTENTS

I. PARTIES
II. JURISDICTION AND VENUE
III. THE PATENT-IN-SUIT
IV. THE REEXAMINATION
V. COUNT ONE: INFRINGEMENT OF THE REEXAMINED ‘576 PATENT
VI. PRAYER FOR RELIEF
VII. DEMAND FOR JURY TRIAL

TABLE OF AUTHORITIES

Cases

TC Heartland LLC v. Kraft Foods Grp. Brands LLC, 137 S. Ct. 1514 (2017)

Statutes

28 U.S.C. § 1331
28 U.S.C. § 1338

Sprint Complete

Sprint Complete

Equipment Replacement Insurance Program (ERP)
Equipment Service and Repair Service Contract Program (ESRP)

Effective July 2021

This device schedule is updated regularly to include new models. Check this document any time your equipment changes and before visiting an authorized repair center for service. If you are not certain of the model of your phone, refer to your original receipt, or it may be printed on the white label located under the battery of your device. Repair eligibility is subject to change.

Models Eligible for $29 Cracked Screen Repair*

- Apple: iPhone 5, iPhone 5C, iPhone 5S, iPhone 6, iPhone 6 Plus, iPhone 6S, iPhone 6S Plus, iPhone SE, iPhone SE2, iPhone 7, iPhone 7 Plus, iPhone 8, iPhone 8 Plus, iPhone X, iPhone XS, iPhone XS Max, iPhone XR, iPhone 11, iPhone 11 Pro, iPhone 11 Pro Max, iPhone 12, iPhone 12 Pro, iPhone 12 Pro Max, iPhone 12 Mini
- Samsung: GS5, GS6, GS6 Edge, GS6 Edge+, GS7, GS7 Edge, GS8, GS8+, GS9, GS9+, A50, A51, Note 4, Note 5, Note 8, Note 9, Note 20 5G, Note 20 Ultra 5G, GS10, GS10e, GS10+, GS10 5G, Note 10, Note 10+, GS20 5G, GS20+ 5G, GS20 Ultra 5G, Galaxy S20 FE 5G, GS21 5G, GS21+ 5G, GS21 Ultra 5G
- HTC: One M8, One E8, One M9, One M10, Bolt, HTC U11
- LG: G Flex, G Flex II, G Stylo, Stylo 2, Stylo 3, Stylo 6, G7 ThinQ, G8 ThinQ, G8X ThinQ, V60 ThinQ 5G Dual Screen, Velvet 5G, G3 Vigor, G4, G5, G6, V20, X power, V40 ThinQ, V50 ThinQ, V60 ThinQ 5G

Monthly Charge, Deductible/Service Fee, and Repair Schedule

Essential Phone on Its Way to Early Adopters 24 August 2017, by Seung Lee, the Mercury News

Essential Phone on its way to early adopters

24 August 2017, by Seung Lee, The Mercury News

Essential, the new smartphone company founded by Android operating system creator Andy Rubin, is planning to ship its first pre-ordered flagship smartphones soon. The general launch date for the Essential Phone remains unknown, despite months of publicity and continued intrigue among Silicon Valley's gadget-loving circles. Recently, Essential's exclusive carrier Sprint announced it will open Phone pre-orders on its own website and stores. Essential opened up pre-orders on its website in May when the product was first

this week in a press briefing in Essential's headquarters in Palo Alto. "People have neglected hardware for years, decades. The rest of the venture business is focused on software, on service."

Essential wanted to make a timeless, high-powered phone, according to Rubin. Its bezel-less and logo-less design is reinforced by titanium parts, stronger than the industry standard aluminum parts, and a ceramic exterior. It has no buttons in its front display but has a fingerprint scanner on the back. The company is also making accessories, like an attachable 360-degree camera, and the Phone will work with products from its competition, such as the Apple Homekit.

"How do you build technology that consumers are willing to invest in?" asked Rubin. "Inter-operability is really, really important. We acknowledge that, and we inter-operate with companies even if they are our competitors."

While the 5.7-inch phone feels denser than its Apple and Samsung counterparts, it is bereft of bloatware - rarely used default apps that are common in new smartphones.

Barometer of Mobile Internet Connections in Switzerland

Barometer of mobile internet connections in Switzerland

Publication of March 06th 2020

2019 report

nPerf is a trademark owned by nPerf SAS, 87 rue de Sèze 69006 LYON – France.

Contents

1 Summary of overall results
1.1 nPerf score, all technologies combined, [2G->4G]
1.2 Our analysis
2 Overall results
2.1 Data amount and distribution
2.2 Success rate [2G->4G]
2.3 Download speed [2G->4G]
2.4 Upload speed [2G->4G]
2.5 Latency [2G->4G]
2.6 Browsing test [2G->4G]
2.7 Streaming test [2G->4G]

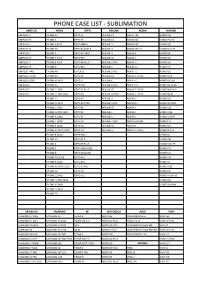

Qikink Product & Price List

PHONE CASE LIST - SUBLIMATION

| ONEPLUS | APPLE | OPPO | REALME | NOKIA | HUAWEI |
| --- | --- | --- | --- | --- | --- |
| ONEPLUS 3 | IPHONE SE | OPPO F3 | REALME C1 | NOKIA 730 | HONOR 6X |
| ONEPLUS 3T | IPHONE 6 | OPPO F5 | REALME C2 | NOKIA 640 | HONOR 9 LITE |
| ONEPLUS 5 | IPHONE 6 PLUS | OPPO FIND X | REALME 3 | NOKIA 540 | HONOR Y9 |
| ONEPLUS 5T | IPHONE 6S | OPPO REALME X | REALME 3i | NOKIA 7 PLUS | HONOR 10 LITE |
| ONEPLUS 6 | IPHONE 7 | OPPO F11 PRO | REALME 5i | NOKIA 8 | HONOR 8C |
| ONEPLUS 6T | IPHONE 7 PLUS | OPPO F15 | REALME 5S | NOKIA 6 | HONOR 8X |
| ONEPLUS 7 | IPHONE 8 PLUS | OPPO RENO 2F | REALME 2 PRO | NOKIA 3.1 | HONOR 10 |
| ONEPLUS 7T | IPHONE X | OPPO F11 | REALME 3 | NOKIA 2.1 | HONOR 7C |
| ONEPLUS 7PRO | IPHONE XR | OPPO F13 | REALME 3 PRO | NOKIA 7.1 | HONOR 5C |
| ONEPLUS 7T PRO | IPHONE XS | OPPO F1 | REALME C3 | NOKIA 3.1 PLUS | HONOR P20 |
| ONEPLUS NORD | IPHONE XS MAX | OPPO F7 | REALME 6 | NOKIA 5.1 | HONOR 6PLUS |
| ONEPLUS X | IPHONE 11 | OPPO A57 | REALME 6 PRO | NOKIA 7.2 | HONOR PLAY 8A |
| ONEPLUS 2 | IPHONE 11 PRO | OPPO F1 PLUS | REALME X2 | NOKIA 7.1 PLUS | HONOR NOVA 3i |
| ONEPLUS 1 | IPHONE 11 PRO MAX | OPPO F9 | REALME X2 PRO | NOKIA 6.1 PLUS | HONOR PLAY |
| | IPHONE 12 | OPPO A7 | REALME 5 | NOKIA 6.1 | HONOR 8X |
| | IPHONE 12 MINI | OPPO R17 PRO | REALME 5 PRO | NOKIA 8.1 | HONOR 8X MAX |
| | IPHONE 12 PRO | OPPO K1 | REALME XT | NOKIA 2 | HONOR 20i |
| | IPHONE 12 PRO MAX | OPPO F9 | REALME 1 | NOKIA 3 | HONOR V20 |
| | IPHONE X LOGO | OPPO F3 | REALME X | NOKIA 5 | HONOR 6 PLAY |
| | IPHONE 7 LOGO | OPPO A3 | REALME 7 PRO | NOKIA 6 (2018) | HONOR 7X |
| | IPHONE 6 LOGO | OPPO A5 | REALME 5S | NOKIA 8 | HONOR 5X |
| | IPHONE XS MAX LOGO | OPPO A9 | REALME 5i | NOKIA 2.1 PLUS | HONOR 8 LITE |
| | IPHONE 8 LOGO | OPPO R98 | | | HONOR 8 |
| | IPHONE 5S | OPPO F1 S | | | HONOR 9N |
| | IPHONE 4 | OPPO F3 PLUS | | | HONOR 10 LITE |
| | IPHONE 5 | OPPO A83 (2018) | | | HONOR 7S |
| | IPHONE 8 | | | | |

User Guide

Nokia 7 Plus User Guide

Issue 2018-07-31 nl-NL

About this user guide

Important: Before using the device, read the "For your safety" and "Product and safety information" sections in the printed user guide or at www.nokia.com/support for important information on the safe use of your device and battery. Read the printed guide to get started with your new device.

© 2018 HMD Global Oy. All rights reserved.

Table of contents

- About this user guide
- Get started
  - Keep your phone up to date
  - Keys and parts
  - Insert or remove the SIM card and memory card
  - Charge your phone
  - Switch on and set up your phone
  - Dual SIM settings
  - Set up fingerprint ID
  - Lock or unlock your phone
  - Use the touch screen
- Basics
  - Personalize your phone
  - Open and close an app
  - Notifications
  - Control the volume
  - Screenshots
  - Battery life
  - Save on data roaming costs
  - Write text
  - Date and time
  - Clock and alarm
  - Calculator
  - Accessibility
- Connect with your family and friends
  - Calls
  - Contacts
  - Send and receive messages
  - Email
  - Get social
- Camera
  - Camera basics
  - Record a video
  - Use your camera like a pro
  - Your photos

HR-imotion Compatibility Overview: How to Check Whether Your Smartphone or Tablet Fits the Device Holder*

HR-imotion compatibility overview

How to check whether your smartphone or tablet fits the device holder*.

1. Check the item number of the device holder. You can find the number on the underside of the packaging above the barcode, or on the Amazon website in the product title or the product information section.
2. Look for your phone or tablet in the device overview that starts on the next page. If your tablet or smartphone is not listed, measure its length and width and compare them with the dimensions given in the description / bullet points.
3. Look in the column for the item number you identified to see whether your phone or tablet fits:
   = product fits the holder
   = product does not fit the holder

*All information is provided without guarantee. Only the dimensions of the devices are checked.

Example: You own a Google Nexus 6P and want to know either which holder you can use or whether the Quicky vent mount (item no. 22110101) fits.

Compatibility overview

HR-imotion Kompatibilität/Compatibility 2019 / 11

Columns: Device type, Phone, then one column per item number: 22410001 23010201 22110001 23010001 23010101 22010401 22010501 22010301 22010201 22110101 22010701 22011101 22010101 22210101 22210001 23510101 23010501 23010601 23010701 23510320 22610001 23510420

Smartphone Acer Liquid Zest Plus
Smartphone AEG Voxtel M250
Smartphone Alcatel 1X
Smartphone Alcatel 3
Smartphone Alcatel 3C
Smartphone Alcatel 3V
Smartphone Alcatel 3X
Smartphone Alcatel 5
Smartphone Alcatel 5v
Smartphone

Electronic 3D Models Catalogue (On July 26, 2019)

Electronic 3D models Catalogue (on July 26, 2019)

Acer

001 Acer Iconia Tab A510
002 Acer Liquid Z5
003 Acer Liquid S2 Red
004 Acer Liquid S2 Black
005 Acer Iconia Tab A3 White
006 Acer Iconia Tab A1-810 White
007 Acer Iconia W4
008 Acer Liquid E3 Black
009 Acer Liquid E3 Silver
010 Acer Iconia B1-720 Iron Gray
011 Acer Iconia B1-720 Red
012 Acer Iconia B1-720 White
013 Acer Liquid Z3 Rock Black
014 Acer Liquid Z3 Classic White
015 Acer Iconia One 7 B1-730 Black
016 Acer Iconia One 7 B1-730 Red
017 Acer Iconia One 7 B1-730 Yellow
018 Acer Iconia One 7 B1-730 Green
019 Acer Iconia One 7 B1-730 Pink
020 Acer Iconia One 7 B1-730 Orange
021 Acer Iconia One 7 B1-730 Purple
022 Acer Iconia One 7 B1-730 White
023 Acer Iconia One 7 B1-730 Blue
024 Acer Iconia One 7 B1-730 Cyan
025 Acer Aspire Switch 10
026 Acer Iconia Tab A1-810 Red
027 Acer Iconia Tab A1-810 Black
028 Acer Iconia A1-830 White
029 Acer Liquid Z4 White
030 Acer Liquid Z4 Black
031 Acer Liquid Z200 Essential White
032 Acer Liquid Z200 Titanium Black
033 Acer Liquid Z200 Fragrant Pink
034 Acer Liquid Z200 Sky Blue
035 Acer Liquid Z200 Sunshine Yellow
036 Acer Liquid Jade Black
037 Acer Liquid Jade Green
038 Acer Liquid Jade White
039 Acer Liquid Z500 Sandy Silver
040 Acer Liquid Z500 Aquamarine Green
041 Acer Liquid Z500 Titanium Black
042 Acer Iconia Tab 7 (A1-713)
043 Acer Iconia Tab 7 (A1-713HD)
044 Acer Liquid E700 Burgundy Red
045 Acer Liquid E700 Titan Black
046 Acer Iconia Tab 8
047 Acer Liquid X1 Graphite Black
048 Acer Liquid X1 Wine Red
049 Acer Iconia Tab 8 W
050 Acer