Agent-Based Collaborative Affective E-Learning Framework

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Neural Networks for Emotion Classification

Neural Networks for Emotion Classification. For obtaining master degree in computer science at Leiden Institute of Advanced Computer Science, under supervision of Dr. Michael S. Lew and Dr. Nicu Sebe, by Yafei Sun, August 2003. ACKNOWLEDGMENTS: I would like to express my heartfelt gratitude to Dr. Michael S. Lew for his support, invaluable guidance, time, and encouragement. I’d like to express my sincere appreciation to Dr. Nicu Sebe for his countless ideas and advice, his encouragement and sharing his valuable knowledge with me. Thanks to Ernst Lindoorn and Jelle Westbroek for their help on the experiments setup. Thanks to all the friends who participated in the construction of the authentic emotion database. I would also like to thank Dr. Andre Deutz, Miss Riet Derogee and Miss Rachel Van Der Waal for helping me out with a problem on the scheduling of my oral presentation of master thesis. Finally a special word of thanks to Dr. Erwin Bakker for attending my graduation exam ceremony. Table of Contents: ABSTRACT; 1 INTRODUCTION; 2 BACKGROUND RESEARCH IN EMOTION RECOGNITION; 2.1 AN IDEAL SYSTEM FOR FACIAL EXPRESSION RECOGNITION: CURRENT PROBLEMS; 2.2 FACE DETECTION AND FEATURE EXTRACTION

CITY MOOD How Does Your City Feel?

CITY MOOD: how does your city feel? Luisa Fabrizi. Interaction Design, One-year Master, 15 Credits. Master Thesis, August 2014. Supervisor: Jörn Messeter. Thesis Project I, Interaction Design Master 2014, Malmö University. Luisa Fabrizi, [email protected]. INDEX: 01. INDEX; 02. ABSTRACT; 03. INTRODUCTION; 04. METHODOLOGY; 05. THEORETICAL FRAMEWORK; 06. DESIGN PROCESS; 07. GENERAL CONCLUSIONS; 08. FURTHER IMPLEMENTATIONS; 09. APPENDIX

Truth Be Told

Truth Be Told. washingtonpost.com, Print Edition, Sunday Source. Sunday, November 25, 2007; Page N06. In the arena of lie detecting, it's important to remember that no emotion tells you its source. Think of Othello suffocating Desdemona because he interpreted her tears (over Cassio's death) as the reaction of an adulterer, not a friend. Othello made an assumption and killed his wife. Oops. The moral of the story: Just because someone exhibits the behavior of a liar does not make them one. "There isn't a silver bullet," psychologist Paul Ekman says. "That's because we don't have Pinocchio's nose. There is nothing in demeanor that is specific to a lie." Nevertheless, there are indicators that should prompt you to make further inquiries that may lead to discovering a lie. Below is a template for taking the first steps, gleaned from interviews with Ekman and several other experts, as well as their works. They are psychology professors Maureen O'Sullivan (University of San Francisco), Robert Feldman (University of Massachusetts) and Bella DePaulo (University of California at Santa Barbara); communication professor Mark Frank (University at Buffalo); and body language trainer Janine Driver (a.k.a. the Lyin' Tamer). How to Detect a Lie. http://www.washingtonpost.com/wp-dyn/content/article/2007/11/21/AR2007112102027...

Ekman, Emotional Expression, and the Art of Empirical Epiphany

Journal of Research in Personality 38 (2004) 37–44, www.elsevier.com/locate/jrp. Ekman, emotional expression, and the art of empirical epiphany. Dacher Keltner, Department of Psychology, University of California, Berkeley, 3319 Tolman, 94720 Berkeley, CA, USA. Introduction: In the mid and late 1960s, Paul Ekman offered a variety of bold assertions, some seemingly more radical today than others (Ekman, 1984, 1992, 1993). Emotions are expressed in a limited number of particular facial expressions. These expressions are universal and evolved. Facial expressions of emotion are remarkably brief, typically lasting 1 to 5 s. And germane to the interests of the present article, these brief facial expressions of emotion reveal a great deal about people's lives. In the present article I will present evidence that supports this last notion advanced by Ekman, that brief expressions of emotion reveal important things about the individual's life course. To do so I first theorize about how individual differences in emotion shape the life context. With this reasoning as backdrop, I then review four kinds of evidence that indicate that facial expression is revealing of the life that the individual has led and is likely to continue leading. Individual differences in emotion and the shaping of the life context: People, as a function of their personality or psychological disorder, create the situations in which they act (e.g., Buss, 1987). Individuals selectively attend to certain features of complex situations, thus endowing contexts with idiosyncratic meaning. Individuals evoke responses in others, thus shaping the shared, social meaning of the situation.

E Motions in Process Barbara Rauch

e_motions in process. Barbara Rauch. Abstract: This research project maps virtual emotions. Rauch uses 3D-surface capturing devices to scan facial expressions in (stuffed) animals and humans, which she then sculpts with the Phantom Arm/SensAble FreeForm device in 3D virtual space. The results are rapidform printed objects and 3D animations of morphing faces and gestures. Building on her research into consciousness studies and emotions, she has developed a new artwork to reveal characteristic aspects of human emotions (i.e. laughing, crying, frowning, sneering, etc.), which utilises new technology, in particular digital scanning devices and special effects animation software. The proposal is to use a 3D high-resolution laser scanner to capture animal faces and, using the data of these faces, animate and then combine them with human emotional facial expressions. The morphing of the human and animal facial data are not merely layers of the different scans but by applying an algorithmic programme to the data, crucial landmarks in the animal face are merged in order to match with those of the human. The results are morphings of the physical characteristics of animals with the emotional characteristics of the human face in 3D. The focus of this interdisciplinary research project is a collaborative practice that brings together researchers from UCL in London and researchers at OCAD University’s data and information visualization lab. Rauch uses Darwin’s metatheory of the continuity of species and other theories on evolution and internal physiology (Ekman et al) in order to re-examine previous and new theories with the use of new technologies, including the SensAble FreeForm Device, which, as an interface, allows for haptic feedback from digital data. Keywords: interdisciplinary research, 3D-surface capturing, animated facial expressions, evolution of emotions and feelings, technologically transformed realities.

Playing God? Towards Machines That Deny Their Maker

Lecture with Prof. Rosalind Picard, Massachusetts Institute of Technology (MIT): Playing God? Towards Machines that Deny Their Maker. 22 April 2016, 14:15, UniS, Hörsaal A003 (Schanzeneckstrasse 1, Bern). CAMPUS live, Christlicher Studentenverein, www.campuslive.ch/bern; VBG Bern, Christliche Hochschulgruppe, www.bern.vbg.net; Institut für Philosophie, Länggassstrasse 49a, 3000 Bern 9, www.philosophie.unibe.ch. "Today, technology is acquiring the capability to sense and respond skilfully to human emotion, and in some cases to act as if it has emotional processes that make it more intelligent. I'll give examples of the potential for good with this technology in helping people with autism, epilepsy, and mental health challenges such as anxiety and depression. However, the possibilities are much larger: A few scientists want to build computers that are vastly superior to humans, capable of powers beyond reproducing their own kind. What do we want to see built? And, how might we make sure that new affective technologies make human lives better?" Professor Rosalind W. Picard, Sc.D., is founder and director of the Affective Computing Research Group at the Massachusetts Institute of Technology (MIT) Media Lab where she also chairs MIT's Mind+Hand+Heart initiative. Picard has co-founded two businesses, Empatica, Inc. creating wearable sensors and analytics to improve health, and Affectiva, Inc. delivering software to measure and communicate emotion through facial expression analysis. She has authored or co-authored over 200 scientific articles, together with the book Affective Computing, which was instrumental in giving rise to the field by that name.

Facial Expression and Emotion Paul Ekman

1992 Award Addresses. Facial Expression and Emotion. Paul Ekman. Cross-cultural research on facial expression and the developments of methods to measure facial expression are briefly summarized. What has been learned about emotion from this work on the face is then elucidated. Four questions about facial expression and emotion are discussed: What information does an expression typically convey? Can there be emotion without facial expression? Can there be a facial expression of emotion without emotion? How do individuals differ in their facial expressions of emotion? In 1965 when I began to study facial expression, few thought there was much to be learned. Goldstein (1981) pointed out that a number of famous psychologists—F. and G. Allport, Brunswik, Hull, Lindzey, Maslow, Osgood, Titchner—did only one facial study, which was not what earned them their reputations. We found evidence of universality in spontaneous expressions and in expressions that were deliberately posed. We postulated display rules—culture-specific prescriptions about who can show which emotions, to whom, and when—to explain how cultural differences may conceal universals in expression, and in an experiment we showed how that could occur. In the last five years, there have been a few challenges to the evidence of universals, particularly from anthropologists (see review by Lutz & White, 1986). There is, however, no quantitative data to support the claim that expressions are culture specific. The accounts are more anecdotal, without control for the possibility of observer bias and without evidence of interobserver reliability. There have been recent challenges also from psychologists (J. A. Russell, personal communication, June 1992) who study how words are used to judge photographs of facial

Emotion Classification Based on Biophysical Signals and Machine Learning Techniques

Symmetry, Article. Emotion Classification Based on Biophysical Signals and Machine Learning Techniques. Oana Bălan 1,*, Gabriela Moise 2, Livia Petrescu 3, Alin Moldoveanu 1, Marius Leordeanu 1 and Florica Moldoveanu 1. 1 Faculty of Automatic Control and Computers, University POLITEHNICA of Bucharest, Bucharest 060042, Romania; [email protected] (A.M.); [email protected] (M.L.); fl[email protected] (F.M.). 2 Department of Computer Science, Information Technology, Mathematics and Physics (ITIMF), Petroleum-Gas University of Ploiesti, Ploiesti 100680, Romania; [email protected]. 3 Faculty of Biology, University of Bucharest, Bucharest 030014, Romania; [email protected]. * Correspondence: [email protected]; Tel.: +40722276571. Received: 12 November 2019; Accepted: 18 December 2019; Published: 20 December 2019. Abstract: Emotions constitute an indispensable component of our everyday life. They consist of conscious mental reactions towards objects or situations and are associated with various physiological, behavioral, and cognitive changes. In this paper, we propose a comparative analysis between different machine learning and deep learning techniques, with and without feature selection, for binarily classifying the six basic emotions, namely anger, disgust, fear, joy, sadness, and surprise, into two symmetrical categorical classes (emotion and no emotion), using the physiological recordings and subjective ratings of valence, arousal, and dominance from the DEAP (Dataset for Emotion Analysis using EEG, Physiological and Video Signals) database. The results showed that the maximum classification accuracies for each emotion were: anger: 98.02%, joy: 100%, surprise: 96%, disgust: 95%, fear: 90.75%, and sadness: 90.08%. In the case of four emotions (anger, disgust, fear, and sadness), the classification accuracies were higher without feature selection.

Membership Information

ACM, 1515 Broadway, New York, NY 10036-5701, USA. The CHI 2002 Conference is sponsored by ACM’s Special Interest Group on Computer-Human Interaction (ACM SIGCHI). ACM, the Association for Computing Machinery, is a major force in advancing the skills and knowledge of Information Technology (IT) professionals and students throughout the world. ACM serves as an umbrella organization offering its 78,000 members a variety of forums in order to fulfill its members’ needs, the delivery of cutting-edge technical information, the transfer of ideas from theory to practice, and opportunities for information exchange. Providing high quality products and services, world-class journals and magazines; dynamic special interest groups; numerous “main event” conferences; tutorials; workshops; local special interest groups and chapters; and electronic forums, ACM is the resource for lifelong learning in the rapidly changing IT field. The scope of SIGCHI consists of the study of the human-computer interaction process and includes research, design, development, and evaluation efforts for interactive computer systems. The focus of SIGCHI is on how people communicate and interact with a broadly-defined range of computer systems. SIGCHI serves as a forum for the exchange of ideas among computer scientists, human factors scientists, psychologists, social scientists, system designers, and end users. Over 4,500 professionals work together toward common goals and objectives.

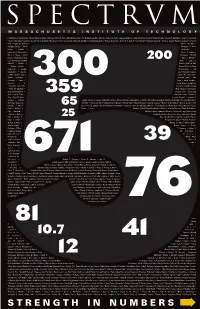

Download a PDF of This Issue

Hal Abelson • Edward Adams • Morris Adelman • Edward Adelson • Khurram Afridi • Anuradha Agarwal • Alfredo Alexander-Katz • Marilyne Andersen • Polina Olegovna Anikeeva • Anuradha M Annaswamy • Timothy Antaya • George E. Apostolakis • Robert C. Armstrong • Elaine V. Backman • Lawrence Bacow • Emilio Baglietto • Hari Balakrishnan • Marc Baldo • Ronald G. Ballinger • George Barbastathis • William A. Barletta • Steven R. H. Barrett • Paul I. Barton • Moungi G. Bawendi • Martin Z. Bazant • Geoffrey S. Beach • Janos M. Beer • Angela M. Belcher • Moshe Ben-Akiva • Hansjoerg Berger • Karl K. Berggren • A. Nihat Berker • Dimitri P. Bertsekas • Edmundo D. Blankschtein • Duane S. Boning • Mary C. Boyce • Kirkor Bozdogan • Richard D. Braatz • Rafael Luis Bras • John G. Brisson • Leslie Bromberg • Elizabeth Bruce • Joan E. Bubluski • Markus J. Buehler • Cullen R. Buie • Vladimir Bulovic • Mayank Bulsara • Tonio Buonassisi • Jacopo Buongiorno • Oral Buyukozturk • Jianshu Cao • Christopher Caplice • JoAnn Carmin • John S. Carroll • W. Craig Carter • Frederic Casalegno • Gerbrand Ceder • Ivan Celanovic • Sylvia T. Ceyer • Ismail Chabini • Anantha P. Chandrakasan • Gang Chen • Sow-Hsin Chen • Wai K. Cheng • Yet-Ming Chiang • Sallie W. Chisholm • Nazil Choucri • Chryssostomidis Chryssostomos • Shaoyan Chu • Jung-Hoon Chun • Joel P. Clark • Robert E. Cohen • Daniel R. Cohn • Clark K. Colton • Patricia Connell • Stephen R. Connors • Chathan Cooke • Charles L. Cooney • Edward F. Crawley • Mary Cummings • Christopher C. Cummins • Kenneth R. Czerwinski • Munther A. Dahleh • Rick Lane Danheiser • Ming Dao • Richard de Neufville • Olivier L de Weck • Edward Francis DeLong • Erik Demaine • Martin Demaine • Laurent Demanet • Michael J. Demkowicz • John M. Deutch • Srini Devadas • Mircea Dinca • Patrick S Doyle • Elisabeth M. Drake • Mark Drela • Catherine L. Drennan • Michael J. Driscoll • Steven Dubowsky • Esther Duflo • Fredo Durand • Thomas Eagar • Richard S.

Wearable Platform to Foster Learning of Natural Facial Expressions and Emotions in High-Functioning Autism and Asperger Syndrome

Wearable Platform to Foster Learning of Natural Facial Expressions and Emotions in High-Functioning Autism and Asperger Syndrome. PRIMARY INVESTIGATORS: Matthew Goodwin, The Groden Center; Rosalind Picard, Massachusetts Institute of Technology Media Laboratory; Rana el Kaliouby, Massachusetts Institute of Technology Media Laboratory; Alea Teeters, Massachusetts Institute of Technology Media Laboratory; June Groden, The Groden Center. DESCRIPTION: Evaluate the scientific and clinical significance of using a wearable camera system (Self-Cam) to improve recognition of emotions from real-world faces in young adults with Asperger syndrome (AS) and high-functioning autism (HFA). The study tests a group of 10 young adults diagnosed with AS/HFA who are asked to wear Self-Cam three times a week for 10 weeks, and use a computerized, interactive facial expression analysis system to review and analyze their videos on a weekly basis. A second group of 10 age-, sex-, and IQ-matched young adults with AS/HFA will use Mind Reading, a systematic DVD guide for teaching emotion recognition. These two groups will be tested pre- and post-intervention on recognition of emotion from faces at three levels of generalization: videos of themselves, videos of their interaction partner, and videos of other participants (i.e. people they are less familiar with). A control group of 10 age- and sex-matched typically developing adults will also be tested to establish performance differences in emotion recognition abilities across groups. This study will use a combination of behavioral reports, direct observation, and pre- and post-questionnaires to assess Self-Cam’s acceptance by persons with autism.
We hypothesize that the typically developing control group will perform better on emotion recognition than both groups with AS/HFA at baseline and that the Self-Cam group, whose intervention teaches emotion recognition using real-life videos, will perform better than the Mind Reading group who will learn emotion recognition from a standard stock of emotion videos.

Facial Expressions of Emotion Influence Interpersonal Trait Inferences

FACIAL EXPRESSIONS OF EMOTION INFLUENCE INTERPERSONAL TRAIT INFERENCES. Brian Knutson. ABSTRACT: Theorists have argued that facial expressions of emotion serve the interpersonal function of allowing one animal to predict another's behavior. Humans may extend these predictions into the indefinite future, as in the case of trait inference. The hypothesis that facial expressions of emotion (e.g., anger, disgust, fear, happiness, and sadness) affect subjects' interpersonal trait inferences (e.g., dominance and affiliation) was tested in two experiments. Subjects rated the dispositional affiliation and dominance of target faces with either static or apparently moving expressions. They inferred high dominance and affiliation from happy expressions, high dominance and low affiliation from angry and disgusted expressions, and low dominance from fearful and sad expressions. The findings suggest that facial expressions of emotion convey not only a target's internal state, but also differentially convey interpersonal information, which could potentially seed trait inference. What do people infer from facial expressions of emotion? Darwin (1872/1962) suggested that facial muscle movements which originally subserved individual survival problems (e.g., spitting out noxious food, shielding the eyes) eventually allowed animals to predict the behavior of their conspecifics. Current emotion theorists agree that emotional facial expressions can serve social predictive functions (e.g., Ekman, 1982; Izard, 1972; Plutchik, 1980). For instance, Frank (1988) hypothesizes that a person might signal a desire to cooperate by smiling at another. Although a viewer may predict a target's immediate behavior on the basis of his or her facial expressions, the viewer may also extrapolate to the more distant future, as in the case of inferring personality traits.