A Multi-Stakeholder Investigation into the Effect of the Web on Scholarly Communication in Chemistry, by Richard William Fyson

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

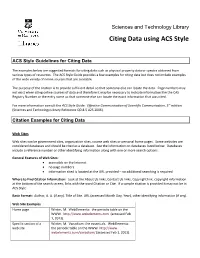

Citing Data Using ACS Style

Sciences and Technology Library: Citing Data Using ACS Style. ACS Style Guidelines for Citing Data: The examples below are suggested formats for citing data such as physical property data or spectra obtained from various types of resources. The ACS Style Guide provides a few examples for citing data but does not include examples of the wide variety of online sources that are available. The purpose of the citation is to provide sufficient detail so that someone else can locate the data. Page numbers may not exist when citing online sources of data, so it may be necessary to give information such as the CAS Registry Number or the entry name so that someone else can locate the exact information that was cited. For more information consult The ACS Style Guide: Effective Communication of Scientific Information, 3rd edition (Sciences and Technology Library Reference QD 8.5 A25 2006). Citation Examples for Citing Data. Web Sites: Web sites can be government sites, organization sites, course web sites or personal home pages. Some websites are considered databases and should be cited as a database; see the information on databases listed below. Databases include a reference number or other identifying information along with one or more search options. General Features of Web Sites: accessible on the Internet; no page numbers; information cited is located at the URL provided (no additional searching is required). Where to Find Citation Information: look at the About Us links, Contact Us links, Copyright link, copyright information at the bottom of the search screen, and links with the word Citation or Cite.

Understanding Semantic Aware Grid Middleware for E-Science

Computing and Informatics, Vol. 27, 2008, 93–118. UNDERSTANDING SEMANTIC AWARE GRID MIDDLEWARE FOR E-SCIENCE. Pinar Alper, Carole Goble, School of Computer Science, University of Manchester, Manchester, UK, e-mail: {penpecip, carole}@cs.man.ac.uk. Oscar Corcho, School of Computer Science, University of Manchester, Manchester, UK & Facultad de Informática, Universidad Politécnica de Madrid, Boadilla del Monte, ES, e-mail: [email protected]. Revised manuscript received 11 January 2007. Abstract. In this paper we analyze several semantic-aware Grid middleware services used in e-Science applications. We describe them according to a common analysis framework, so as to find their commonalities and their distinguishing features. As a result of this analysis we categorize these services into three groups: information services, data access services and decision support services. We make comparisons and provide additional conclusions that are useful to understand better how these services have been developed and deployed, and how similar services would be developed in the future, mainly in the context of e-Science applications. Keywords: Semantic grid, middleware, e-science. 1 INTRODUCTION. The Science 2020 report [40] stresses the importance of understanding and managing the semantics of data used in scientific applications as one of the key enablers of future e-Science. This involves aspects like understanding metadata, ensuring data quality and accuracy, dealing with data provenance (where and how it was produced), etc. The report also stresses the fact that metadata is not simply for human consumption, but primarily used by tools that perform data integration and exploit web services and workflows that transform the data, compute new derived data, etc.

Description Logics Emerge from Ivory Towers Deborah L

Description Logics Emerge from Ivory Towers. Deborah L. McGuinness, Stanford University, Stanford, CA, 94305, [email protected]. Abstract: Description logic (DL) has existed as a field for a few decades, yet only somewhat recently has it appeared to transform from an area of academic interest to an area of broad interest. This paper provides a brief historical perspective of description logic developments that have impacted their usability beyond just in universities and research labs and provides one perspective on the topic. Description logics (previously called terminological logics and KL-ONE-like systems) started with a motivation of providing a formal foundation for semantic networks. The first implemented DL system, KL-ONE, grew out of Brachman’s thesis [Brachman, 1977]. This work was influenced by the work on frame systems but was focused on providing a foundation for building term meanings in a semantically meaningful and unambiguous manner. It rejected the notion of maintaining an ever growing (seemingly ad hoc) vocabulary of link and node names seen in semantic networks and instead embraced the notion of a fixed set of domain-independent “epistemological primitives” that could be used to construct complex, structured object descriptions. It included constructs such as “defines-an-attribute-of” as a built-in construct and expected terms like “has-employee” to be higher-level terms built up from the epistemological primitives. Higher level terms such as “has-employee” and “has-part-time-employee” could be related automatically based on term definitions instead of requiring a user to place links between them. In its original incarnation, this led to maintaining the motivation of semantic networks of providing broad expressive capabilities (since people wanted to be able to represent natural language applications) coupled with the motivation of providing a foundation of building blocks that could be used in a principled and well-defined manner.

Open PHACTS: Semantic Interoperability for Drug Discovery

Reviews, Drug Discovery Today, Volume 17, Numbers 21/22, November 2012. Keynote review. Open PHACTS: semantic interoperability for drug discovery. Antony J. Williams (1), Lee Harland (2), Paul Groth (3), Stephen Pettifer (4), Christine Chichester (5,6), Egon L. Willighagen (7), Chris T. Evelo (6,7), Niklas Blomberg (8), Gerhard Ecker (9), Carole Goble (4) and Barend Mons (6). Affiliations: (1) Royal Society of Chemistry, ChemSpider, US Office, 904 Tamaras Circle, Wake Forest, NC 27587, USA; (2) Connected Discovery Ltd., 27 Old Gloucester Street, London, WC1N 3AX, UK; (3) VU University Amsterdam, Room T-365, De Boelelaan 1081a, 1081 HV Amsterdam, The Netherlands; (4) School of Computer Science, The University of Manchester, Oxford Road, Manchester M13 9PL, UK; (5) Swiss Institute of Bioinformatics, CMU, Rue Michel-Servet 1, 1211 Geneva 4, Switzerland; (6) Netherlands Bioinformatics Center, P. O. Box 9101, 6500 HB Nijmegen, and Leiden University Medical Center, The Netherlands; (7) Department of Bioinformatics – BiGCaT, Maastricht University, The Netherlands; (8) Respiratory & Inflammation iMed, AstraZeneca R&D Mölndal, S-431 83 Mölndal, Sweden; (9) University of Vienna, Department of Medicinal Chemistry, Althanstraße 14, 1090 Wien, Austria. Author notes: Antony J. Williams graduated with a PhD in chemistry as an NMR spectroscopist. Antony Williams is currently VP, Strategic development for ChemSpider at the Royal Society of Chemistry. He has written chapters for many books and authored >140 peer reviewed papers and book chapters on NMR, predictive ADME methods, internet-based tools, crowdsourcing and database curation. He is an active blogger and participant in the Internet chemistry network as @ChemConnector. Lee Harland is the Founder & Chief Technical Officer of ConnectedDiscovery, a company established to promote and manage precompetitive collaboration within the life science industry. Abstract: Open PHACTS is a public–private partnership between academia,

EXPLORING NEW ASYMMETRIC REACTIONS CATALYSED by DICATIONIC Pd(II) COMPLEXES

Exploring New Asymmetric Reactions Catalysed by Dicationic Pd(II) Complexes. A dissertation for the degree of Doctor of Philosophy from Imperial College London by Alexander Smith, May 2010. Department of Chemistry, Imperial College London. Declaration: I confirm that this report is my own work and where reference is made to other research this is referenced in text. Copyright Notice: Imperial College of Science, Technology and Medicine, Department of Chemistry. Exploring new asymmetric reactions catalysed by dicationic Pd(II) complexes © 2010 Alexander Smith, [email protected]. This publication may be distributed freely in its entirety and in its original form without the consent of the copyright owner. Use of this material in any other published works must be appropriately referenced, and, if necessary, permission sought from the copyright owner. Published by: Alexander Smith, Department of Chemistry, Imperial College London, South Kensington campus, London, SW7 2AZ, UK, www.imperial.ac.uk. Acknowledgements: I wish to thank my supervisor, Dr Mimi Hii, for her support throughout my PhD, without which this project could not have existed. Mimi’s passion for research and boundless optimism have been crucial, turning failures into learning curves, and ultimately leading this project to success. She has always been able to spare the time to advise me and provide fresh ideas, and I am grateful for this patience, generosity and support. In addition, Mimi’s open-minded and multi-disciplinary approach to chemistry has allowed the project to develop in new and unexpected directions, and has fundamentally changed my attitude to chemistry. I would like to express my gratitude to Dr Denis Billen from Pfizer, who also provided a much needed dose of optimism and enthusiasm in the early stages of the project, and managed to arrange my three month placement at Pfizer despite moving to the US as the department closed.

Cambridge Pre-U Developing Successful Students Cambridge Education Group What Is Cambridge Pre-U?

Cambridge Pre-U: Developing successful students. Cambridge Education Group.

What is Cambridge Pre-U? CATS Cambridge is pleased to offer this new and exciting two-year qualification designed for the most gifted and talented students who want to go to the best universities. Developed by University of Cambridge International Examinations (CIE), Cambridge Pre-U will prepare high-flying students with the knowledge and skills they need to make a success of their studies at university.

Why study Cambridge Pre-U?
• Is recognised by the best universities including all of the Russell Group, who welcome it as a way of selecting the most talented students
• Allows students to acquire independent learning skills and a deep understanding of their subjects
• Is structured to award the best students grade points higher than can be achieved at A level
• Can be combined with A levels
• Examinations take place at the end of the two years, allowing students to benefit from seeing the subject as a whole rather than studying isolated modules

What will students study? Cambridge Pre-U can be studied in a number of ways and will be taught by the outstanding academic staff at CATS Cambridge, 1/3 of whom are Oxbridge qualified. Students can study up to four Cambridge Pre-U Principal subjects or substitute one or two of these for A-level subjects. To ... one of these subjects must be the core element, Global Perspectives. Global Perspectives encourages students to think critically about issues of the modern world, giving them the tools to identify, evaluate and explore competing arguments and perspectives.

Financial Statements and Trustees' Report 2017

Royal Society of Chemistry: Financial Statements and Trustees’ Report 2017.

About us: We are the professional body for chemists in the UK with a global community of more than 50,000 members in 125 countries, and an internationally renowned publisher of high quality chemical science knowledge. As a not-for-profit organisation, we invest our surplus income to achieve our charitable objectives in support of the chemical science community and advancing chemistry. We are the largest non-governmental investor in chemistry education in the UK. We connect our community by holding scientific conferences, symposia, workshops and webinars. We partner globally for the benefit of the chemical sciences. We support people teaching and practising chemistry in schools, colleges, universities and industry. And we are an influential voice for the chemical sciences. Our global community spans hundreds of thousands of scientists, librarians, teachers, students, pupils and people who love chemistry.

Contents: Welcome from our president; Our strategy: shaping the future of the chemical sciences; Chemistry changes the world; Chemistry is changing; We can enable that change; We have a plan to enable that change; Champion the chemistry profession; Disseminate chemical knowledge; Use our voice for chemistry; We will change how we work; Delivering our core roles: successes in 2017; Champion for the chemistry profession; Set and maintain professional standards; Support and bring together practising chemists; Improve and enrich the teaching and learning of chemistry; Provider of high quality chemical science knowledge; Maintain high publishing standards; Promote and enable the exchange of ideas; Facilitate collaboration across disciplines, sectors and borders; Influential voice for the chemical sciences.

The Fourth Paradigm

ABOUT THE FOURTH PARADIGM. This book presents the first broad look at the rapidly emerging field of data-intensive science, with the goal of influencing the worldwide scientific and computing research communities and inspiring the next generation of scientists. Increasingly, scientific breakthroughs will be powered by advanced computing capabilities that help researchers manipulate and explore massive datasets. The speed at which any given scientific discipline advances will depend on how well its researchers collaborate with one another, and with technologists, in areas of eScience such as databases, workflow management, visualization, and cloud-computing technologies. This collection of essays expands on the vision of pioneering computer scientist Jim Gray for a new, fourth paradigm of discovery based on data-intensive science and offers insights into how it can be fully realized. “The impact of Jim Gray’s thinking is continuing to get people to think in a new way about how data and software are redefining what it means to do science.” (Bill Gates) “I often tell people working in eScience that they aren’t in this field because they are visionaries or super-intelligent; it’s because they care about science and they are alive now. It is about technology changing the world, and science taking advantage of it, to do more and do better.” (Rhys Francis, Australian eResearch Infrastructure Council) “One of the greatest challenges for 21st-century science is how we respond to this new era of data-intensive

UC Berkeley UC Berkeley Electronic Theses and Dissertations

UC Berkeley Electronic Theses and Dissertations. Title: Supercharging methods for improving analysis and detection of proteins by electrospray ionization mass spectrometry. Permalink: https://escholarship.org/uc/item/93j8p71p. Author: Going, Catherine Cassou. Publication Date: 2015. Peer reviewed thesis/dissertation. A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Chemistry in the Graduate Division of the University of California, Berkeley. Committee in charge: Professor Evan R. Williams, Chair; Professor Kristie A. Boering; Professor Robert Glaeser. Fall 2015. Copyright 2015 by Catherine Cassou Going. Abstract: The characterization of mechanisms, analytical benefits, and applications of two different methods for producing high charge state protein ions in electrospray ionization (ESI) mass spectrometry (MS), or "supercharging", are presented in this dissertation. High charge state protein ions are desirable in tandem MS due to their higher fragmentation efficiency and thus greater amount of sequence information that can be obtained from them. The first supercharging method, supercharging with reagents (typically non-volatile organic molecules), is shown in this work to be able to produce such highly charged protein ions from denaturing solutions that about one in every three residues carries a charge.

Synthesis and Applications of Derivatives of 1,7-Diazaspiro[5.5

Synthesis and Applications of Derivatives of 1,7-Diazaspiro[5.5]undecane. A thesis submitted by Joshua J. P. Almond-Thynne in partial fulfilment of the requirements for the degree of Doctor of Philosophy. Department of Chemistry, Imperial College London, South Kensington, London, SW7 2AZ. October 2017. Declaration of Originality: I, Joshua Almond-Thynne, certify that the research described in this manuscript was carried out under the supervision of Professor Anthony G. M. Barrett, Imperial College London and Doctor Anastasios Polyzos, CSIRO, Australia. Except where specific reference is made to the contrary, it is original work produced by the author and neither the whole nor any part had been submitted before for a degree in any other institution. Joshua Almond-Thynne, October 2017. Copyright Declaration: The copyright of this thesis rests with the author and is made available under a Creative Commons Attribution Non-Commercial No Derivatives licence. Researchers are free to copy, distribute or transmit the thesis on the condition that they attribute it, that they do not use it for commercial purposes and that they do not alter, transform or build upon it. For any reuse or redistribution, researchers must make clear to others the licence terms of this work. Abstract: Spiroaminals are an understudied class of heterocycle. Recently, the Barrett group reported a relatively mild approach to the most simple form of spiroaminal, 1,7-diazaspiro[5.5]undecane (I). This thesis consists of the development of novel synthetic methodologies towards the spiroaminal moiety. The first part of this thesis focuses on the synthesis of aliphatic derivatives of I through a variety of methods from the classic Barrett approach which utilises lactam II, through to de novo bidirectional approaches which utilise diphosphate V and a key Horner-Wadsworth-Emmons reaction with aldehyde VI.

Informed Choices

Informed choices: A Russell Group guide to making decisions about post-16 education, 2011.

Sections: Preface; The Russell Group; Introduction; How to use this guide; Acknowledgements; Index; Post-16 qualifications and how they are organised; Pre-16 qualifications and university entry; Making your post-16 subject choices; Subjects required for different degree courses; How subject choices can affect your future career options.

The Russell Group: The Russell Group represents 20 leading UK universities which are committed to maintaining the very best research, an outstanding teaching and learning experience for students of all backgrounds and unrivalled links with business and the public sector. Visit http://www.russellgroup.ac.uk to find out more.

Introduction: What you decide to study post-16 can have a major impact on what you can study at degree level. Whether or not you have an idea of the subject you want to study at university, having the right information now will give you more options when the time comes to make your mind up. This guide aims to help you make an informed decision when choosing your course for post-16 education. We hope it will also be of use to parents and advisors.

The Russell Group is very grateful to the Institute of Career Guidance (the world’s largest career guidance professional body), and particularly Andy Gardner, for their very valuable input.

How to use this guide: To make this document easier to use, the following design elements have been adopted. ATTENTION!! Text inside this large arrow is of particular importance. WARNING! Although there are common themes, entry requirements (even for very similar courses) can vary from one university to another so you should only use this information as a general guide.

Media Coverage of the Eliahou Dangoor Scholarships December

Media Coverage of the Eliahou Dangoor Scholarships, December 2009

Contents

National Press:
1. 07 Dec 2009: “Iraqi exile gives £3m for education of poor as debt of gratitude” The Times
2. 07 Dec 2009: “Top universities to offer new scholarships after donation” Press Association
3. 07 Dec 2009: “Top universities to offer new scholarships after donation” Independent
4. 07 Dec 2009: “Top universities to offer new scholarships” Evening Standard
5. 07 Dec 2009: “Property tycoon donates £3m to help poor university students” Guardian
6. 08 Dec 2009: “£4million scholarship scheme for budding scientists” Guardian
7. 07 Dec 2009: “Property tycoon donates £3m to help poor university students” UTV (Northern Ireland)
8. 08 Dec 2009: “Refugee pays UK student bursaries” BBC News online
9. 10 Dec 2009: “New Scholarships for Cardiff University” Western Mail

Online and local coverage:
10. 08 Dec 2009: “Russell Group and 1994 Group universities launch £4 million scholarships scheme” Bioscience technology
11. 08 Dec 2009: “Refugee pays UK student bursaries” Edplace.co.uk
12. 08 Dec 2009: “Exiled tycoon gives £3m to students” Sideways News
13. 08 Dec 2009: “UK universities receive bursary boost” SFS Group Ltd
14. 09 Dec 2009: “Dangoor Scholarships at Russell Group universities” YouTube
15. 08 Dec 2009: “Speech given at the Royal Society about Dangoor scholarships” Mario Creatura blog
16. 08 Dec 2009: “Iraqi exile gives £3m for UK university scholarships” ‘Point of no return’ blog
17. 07 Dec 2009: “Queen Mary alumnus invests millions in the future of UK science and engineering” Queen Mary University of London
18.