Investigating the Construct of Productive Vocabulary Knowledge with Lex30

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

-

Great Food, Great Stories from Korea

GREAT FOOD, GREAT STORIE FOOD, GREAT GREAT A Tableau of a Diamond Wedding Anniversary GOVERNMENT PUBLICATIONS This is a picture of an older couple from the 18th century repeating their wedding ceremony in celebration of their 60th anniversary. REGISTRATION NUMBER This painting vividly depicts a tableau in which their children offer up 11-1541000-001295-01 a cup of drink, wishing them health and longevity. The authorship of the painting is unknown, and the painting is currently housed in the National Museum of Korea. Designed to help foreigners understand Korean cuisine more easily and with greater accuracy, our <Korean Menu Guide> contains information on 154 Korean dishes in 10 languages. S <Korean Restaurant Guide 2011-Tokyo> introduces 34 excellent F Korean restaurants in the Greater Tokyo Area. ROM KOREA GREAT FOOD, GREAT STORIES FROM KOREA The Korean Food Foundation is a specialized GREAT FOOD, GREAT STORIES private organization that searches for new This book tells the many stories of Korean food, the rich flavors that have evolved generation dishes and conducts research on Korean cuisine after generation, meal after meal, for over several millennia on the Korean peninsula. in order to introduce Korean food and culinary A single dish usually leads to the creation of another through the expansion of time and space, FROM KOREA culture to the world, and support related making it impossible to count the exact number of dishes in the Korean cuisine. So, for this content development and marketing. <Korean Restaurant Guide 2011-Western Europe> (5 volumes in total) book, we have only included a selection of a hundred or so of the most representative. -

Stoves and Food for Dofe

Stoves and Food for DofE. Trangia stove with 2 pans, pan handle and gas burner as shown here. You can make the pan lid into a frying pan but as bacon and sausage cannot be stored safely then we don’t recommend frying. Cleaning also has to be born in mind. Please bring something to have a drink in while you are out as you will not pass shops and on your qualifying you cannot buy anything. Water can be in a drinks bottle, or you can bring 2 x 550ml bottles to have water in. We refill water bottles as you go from water containers in supervisors cars or sometimes we have to carry water to you. You can bring the small squirty concentrated juice if you don’t like water on its own although it is healthier for you. Food should be: Ready to heat and eat. Fast cooking time. Nothing that needs to go in a fridge. Light and easy to carry. Wide range of meals you could not otherwise have. Not fried. No jars as they break. You can cook as an individual or in your group. Remember you will be sharing a stove so if you cook individual it will need to be washed before the next person can use it. Bronze DofE Food suggestions. Saturday Breakfast – this will be at home so please have something, toast, cereal, jam butty, bacon butty or a full English! You will travel to the campsite so have a snack and drink ready for when you arrive there. You will be walking with all your kit from then on. -

Offers GOLD 3 SUNDAYMONDAY 21ST FEBRUARY 9H MAR - SATURDAY - SUNDAY 13 29THTH MARCH MAR 2020 2021

GREAT DEALS | GREAT SERVICE | EVERYDAY 2020 SYMBOL/ FRANCHISE GROUP OF THE YEAR SHORTLISTED Offers GOLD 3 SUNDAYMONDAY 21ST FEBRUARY 9H MAR - SATURDAY - SUNDAY 13 29THTH MARCH MAR 2020 2021 Kellogg’s Coco Pops Boost Energy Strawberry & White Choc 6 x 480g Original/ Original Sugar Free Paddy Irish Whiskey £11.05 12 x 1ltr 6 x 70cl 114645 £81.99 £6.15 544623 145429/145430 As Stocked Retail £22.49 POR 27.1% PM £2.99 PM £1.29 POR 38.4% POR 52.3% Buy a case & get a Bold Powder Andrex Toilet Tissue bottle of Lavender & Camomile Classic Clean 6 x 650g 12 x 2 Pack Southern £11.29 £9.65 Comfort 150854 270865 70cl PM £19.99 FREE!* MUST ADD FREE STOCK TO BASKET BEFORE COMPLETING ORDER PM £2.99 PM £1.29 * POR 24.5% POR 25.2% Guinness Foreign Guinness Draught Extra Stout 10x440ml 12 x 650ml Budweiser Carlsberg Export Pint 15x300ml 6 x 4x568ml £7.99 £23.59 988546 £8.19 £21.05 113186 970145 990121 Retail £3.39 Retail 12.49 Retail £12 PM £6.59 POR 30.4% POR 23.2% POR 18.1% POR 36.1% DELIVERING THE BEST POSSIBLE SERVICE SHEFFIELD 2 Great Deals50% Pluson Grocery POR Deals Fuel10K Granola Batchelors Pasta ‘n’ Sauce Superberry VAS 7 x 99g 6 x 400g £3.89 999677/268855/ £8.59 986916/998757/ 951797 271909 Retail £2.99 Retail £1.09 POR 52.1% POR 49.0% Cirio Passata Boost Energy 7up Swizzels Dip 12 x 500g Original/ Regular/ Love Heart/Double Original Sugar Free Free 36 x Pack £6.05 12 x 1ltr 24 x 330ml 499336 £4.65 £6.15 £5.95 32852/32818 145429/145430 113846/992121 PM 59p Retail 99p PM £1.29 or 2 for £1 Retail 30p POR 49.1% POR 52.3% POR 49.6% POR 48.3% Tetley -

THE WAY of the NOODLE Industry Sector: Food Producers Client Company: Kabuto Noodles Design Consultancy: B&B Studio July 2016

THE WAY OF THE NOODLE Industry sector: Food Producers Client company: Kabuto Noodles Design consultancy: B&B studio July 2016 For publication EXECUTIVE SUMMARY Q. WHY IS DESIGN EFFECTIVENESS LIKE A NOODLE? A. BECAUSE IT SHOULD BE BOTH INSTANT AND LENGTHY The success of independent start-up Kabuto demonstrates both. In its rst year of launch, sales targets were smashed and distribution objectives exceeded, despite the most minimal marketing support. And today, ve years later, brand growth shows no signs of stopping with total retail sales to date of more than £11m. And this is surely the true test of design success: to gain long-term loyalty and ongoing effectiveness, not a simple spike in sales thanks to a short-lived design trend. Here are some of our headlines: • TRANSFORMING A £20K INVESTMENT INTO A £11M BRAND IN 5 YEARS • SMASHING YEAR ONE SALES TARGETS BY 86% • YEAR FIVE RETAIL SALES VALUE 24 TIMES THAT OF YEAR ONE • BECOMING THE UK’S 5TH LARGEST INSTANT NOODLE BRAND ©B&B studio page 2 PROJECT OVERVIEW Project brief B&B was asked to create the name, identity and packaging for a new brand of premium instant pot-based noodles aimed at bringing the fresh sophistication of noodle restaurant chains like Wagamama to a sector still dominated by cheap, cheerful and tongue-in-cheek brands. Business objectives (for year one): Gain a listing in one major multiple retailer Meet sales targets of £100,000 Bring a wholly new consumer to the pot snack market Design objectives: Target young afuent consumers with a unique proposition Create a clearly British brand with authentic Asian recipes Bring natural health credentials to an articial category Description Kabuto Noodles was started by Crispin Busk – an independent entrepreneur with a dream to make the posh pot noodle. -

Nycfoodinspectionsimpleallen

NYCFoodInspectionSimpleAllenHazlett Based on DOHMH New York City Restaurant Inspection Results DBA BORO STREET ZIPCODE JJANG COOKS Queens ROOSEVELT AVE 11354 JUICY CUBE Manhattan LEXINGTON AVENUE 10022 DUNKIN Bronx BARTOW AVENUE 10469 BEVACCO RESTAURANT, Brooklyn HENRY STREET 11201 BINC LILI MEXICAN Bronx EAST 138 STREET 10454 RESTAURANT RUINAS DE COPAN Bronx BROOK AVENUE 10455 SHUN LI CHINESE Brooklyn PITKIN AVENUE 11212 RESTAURANT SUNFLOWER CAFE Manhattan 3 AVENUE 10010 Manhattan EAST 10 STREET 10003 CARIBBEAN & AMERICAN Brooklyn NOSTRAND AVENUE 11226 ENTERTAINMENT BAR LOUNGE & RESTAURANT NIZZA Manhattan 9 AVENUE 10036 OLIVE GARDEN Brooklyn GATEWAY DRIVE 11239 DAWA'S Queens SKILLMAN AVE 11377 FARIEDA'S DHAL PURI Queens 101ST AVE 11419 HUT GOOD TASTE 88 Brooklyn 52 STREET 11220 PIG AND KHAO Manhattan CLINTON STREET 10002 Page 1 of 556 09/27/2021 NYCFoodInspectionSimpleAllenHazlett Based on DOHMH New York City Restaurant Inspection Results CUISINE DESCRIPTION INSPECTION DATE Korean 11/20/2019 Juice, Smoothies, Fruit Salads 02/23/2018 Donuts 11/29/2019 Italian 12/19/2018 Mexican 08/14/2019 Spanish 06/11/2018 Chinese 11/23/2018 American 02/07/2020 01/01/1900 Caribbean 02/08/2020 Italian 11/30/2018 Italian 09/25/2017 Coffee/Tea 04/17/2018 Caribbean 07/09/2019 Chinese 02/06/2020 Thai 02/12/2019 Page 2 of 556 09/27/2021 NYCFoodInspectionSimpleAllenHazlett Based on DOHMH New York City Restaurant Inspection Results EMPANADA MAMA Manhattan ALLEN STREET 10002 FREDERICK SOUL HOLE Queens MERRICK BLVD 11422 GOLDEN STEAMER Manhattan MOTT STREET 10013 -

2608 Nicollet Ave. S. Minneapolis, MN 55408

Peninsula, named after the Malay Peninsula, is established by a group of Malaysians with great passion of bringing the most authentic Malaysian and Southeast Asian cuisine to the Twin Cities. We are honored to present you with one of the finest Chefs in Malaysian cuisine in the country to lead Peninsula for your unique dining experience. We sincerely thank you for being our guests. 2608 Nicollet Ave. S. Minneapolis, MN 55408 Tel: 6128718282 Fax: 6128712863 www.PeninsulaMalaysianCuisine.com We open seven days a week. Hours: Sunday – Thursday: 11:00am – 10:00pm Friday & Saturday: 11:00am – 11:00pm Parking: Restaurant Parking in the rear (all day) Across the street at Dai Nam Lot (6pm closed) Valet Parking (Friday & Saturday 6pm – closed) APPETIZERS 开胃小菜 001. ROTI CANAI 印度面包 3.95 All time Malaysian favorite. Crispy Indian style pancake. Served with spicy curry chicken & potato dipping sauce. Pancake only 2.75. 002. ROTI TELUR 面包加蛋 4.95 Crispy Indian style pancake filled with eggs, onions & bell peppers. Served with spicy curry chicken & potato dipping sauce. 003. PASEMBUR 印度罗呀 7.95 Shredded cucumber, jicama, & bean sprout salad topped with shrimp pancakes, sliced eggs, tofu & a sweet & spicy sauce. 004. PENINSULA SATAY CHICKEN 沙爹鸡 6.95 Marinated & perfectly grilled chicken on skewers with homemade spicy peanut dipping sauce. 005. PENINSULA SATAY BEEF 沙爹牛 6.95 Marinated & perfectly grilled beef on skewers with homemade spicy peanut dipping sauce. 006. SATAY TOFU 沙爹豆腐 5.95 Shells of crispy homemade tofu, filled with shredded cucumbers, bean sprouts. Topped with homemade peanut sauce. 007. POPIAH 薄饼 4.95 Malaysian style spring rolls stuffed with jicama, tofu, & bean sprouts in freshly steamed wrappers (2 rolls). -

CHINA | Sanya KOREA | Gwangju INDONESIA | Jakarta KOREA | Incheon

Volume.19 2011 ISSN 1739-5089 THE OFFICIAL MAGAZINE OF THE TOURISM PROMOTION ORGANIZATION FOR ASIA PACIFIC CITIES Volume.19 2011 2011 Volume.19 CHINA | Sanya KOREA | Gwangju INDONESIA | Jakarta KOREA | Incheon Nanshan Cultural Tourism Zone, Sanya Tourism Promotion Organization for Asia Pacific Cities TPO is a network of Asia Pacific cities and a growing international organization in the field of Tourism. It serves as a centre of marketing, information and communication for its member cities. Its membership includes 64 city governments and 31 non government members representing the private sector, educational institutions and other tourism authorities. TPO is committed to common prosperity of Asia Pacific cities geared toward sustainable tourism development. TREND & ANALYSIS 50 Research in the Significance and Potentials for ISSN 1739-5089 Development of LOHAS Tour $VLD3DFL¿F&LWLHV &+,1$_6KDQJKDL 54 7KH2I¿FLDO0DJD]LQH2I7KH7RXULVP3URPRWLRQ2UJDQL]DWLRQ)RU New Trend of the Exhibition Industry 2011 5866,$_9ODGLYRVWRN 9ROXPH .25($_6RNFKR and Tourism 19 0$/$<6,$_*HRUJHWRZQ &+,1$_6+$1*+$, TPO NEWS 58 ,QGXVWU\1HZV 62 2UJDQL]DWLRQ1HZV 7KH2IILFLDO0DJD]LQH 64 %HVW7RXULVP3URGXFW RIWKH7RXULVP 3URPRWLRQ DIRECTORY SCOPE 2UJDQL]DWLRQIRU$VLD 70 7322EMHFWLYHV 0HPEHUV 3DFLILF&LWLHV PUBLISHED BY 7326HFUHWDULDW 9ROXPH *HRMH'RQJ<HRQMH*X Contents %XVDQ.RUHD TEL :a)D[ WEBSITE :ZZZDSWSRRUJ E-MAIL :VHFUHWDULDW@DSWSRRUJ TPO FOCUS DESTINATION GUIDE PUBLISHER+ZDQ0\XQJ-RR 06 CULTURE 18 SPECIAL A CITY WHERE THE SKY MEETS LAND DIRECTOR OF PLANNING/DXQ\&KRL Urumqi, China -

2017 Annual Recipe Index Annual Recipe Index

2017 ANNUAL RECIPE INDEX ANNUAL RECIPE INDEX. Granny Smith & cinnamon crumble ...................Jul: 121 Afogato muffins in Pork belly with apples Baby spinach & garlic Acoffee sugar .......................... May: 108 and pears ................................. Apr: 67 Bchive dumplings .......................Nov: 56 All-day pan-cooked breakfast ...... Apr: 84 Rose caramel apples .................. Apr: 112 Bacon and pancetta see also ham and Almonds Salmon en papillote with prosciutto Almond kofta balls with heirloom kohlrabi & apple salad .............Nov: 20 Bacon, cheese & onion with pickled tomato salad ............................ Feb: 68 Vin santo apple & mustard seeds ..........................Jul: 112 Brussels sprouts with almonds sultana cake ...........................Dec: 170 Bacon & herb focaccia .................Mar: 90 and skordalia ............................ Apr: 20 Apricots Bacon & scallop skewers with Chocolate & almond Apricot & amaretti crisp ..............Jun: 122 peppercorn butter ...................Nov: 86 butter slice ............................. Sep: 126 Apricot & Breakfast pies .............................. Sep: 68 Chocolate almond cups ............. Feb: 110 amaretto cheesecake .............Nov: 116 Chicken a la Normande ................Jun: 48 Garden olive oil cakes .............. May: 101 Sfogliatelle with apricot & Creamy fish pie ........................... May: 96 Green beans with almonds rosemary filling.......................Aug: 127 Gorgonzola, pumpkin, maple-cured and mint ...................................Dec: -

Edition 4 Ingredient Recipe Index Measurements Explained Chia Energy Balls

BEYOND MI GOrENG Edition 4 Ingredient Recipe Index Measurements Explained Chia Energy Balls .......................8 Healthy Peanut Spoons: Butter Cookies ......................... 10 » teaspoon = tsp = 5 ml Kale Chips ................................11 » Tablespoon = Tbsp = 20 ml Popcorn ...................................11 Cups: Dips and Pita Chips .................. 12 » 1 cup = 250 ml = ¼ of a litre Beetroot and » ¾ cup = 190 ml Walnut Salad ........................... 14 » ²⁄³ cup = 170 ml Zucchini, Feta » ½ cup = 125 ml and Mint Salad ......................... 16 » ¹⁄³ cup = 80 ml Green Smoothies .....................20 » ¼ cup = 60 ml Cypriot Freekah Salad .............. 29 Tuna Nicoise .............................31 Pasta with Salmon and Caper Butter ...................... 32 Fish Cakes ............................... 33 With thanks to: Candice Worsteling, Alaina McMurray and Sally Christiansen for writing and recipes. Gabriel Brady for introduction. Shana Schultz for publication design and layout. © 2014 RMIT University Student Union 360 Swanston Street, Melbourne, Vic, 3000. www.su.rmit.edu.au Introduction ................................. 5 Brain Food ...................................6 Study Snacks ................................8 Super Salads ............................... 14 What the Heck is a Green Smoothie? .........................18 Five Easy Green Smoothie Recipes ....................... 20 Eating Real Food on a Tight Budget ........................ 22 Price Comparisons ...................... 23 $20 for the Week -

If in One of the Categories

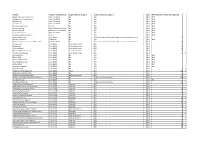

Product Type of Price promotion Sugar reduction category Calorie reduction category SDL NPM score (if in one of the categories) Apple and Grape snack pack Any 3 for £1.25 No No No N/A Mango and Pineapple fingers Any 3 for £1.50 No No No N/A Apple 6 pack Any 2 for £2.50 No No No N/A Cashew nuts Any 2 for £4.00 No No No N/A Air dried apple crisps 3 for 2 No No No N/A Grape snack pot £3 sandwhich meal deal No No No N/A Stir Fry Veg pack £2.50 Stir Fry meal deal No No No N/A Vegetable medley Any 3 for £4.00 No No No N/A Chopped Shallot and Onion 3 for 2 No No No N/A Organuc Houmous 2 for £2.00 No Prepared dips and composite salads as meal accompaniments 1 No -1 Sweet Crisp Salad Salad deal No No No N/A Butternut Squash Quinoa and Rice salad Any 2 for £4.00 No Prepared dips and composite salads as meal accompaniments 1 No -4 Orange juice 2 for £2.00 Juice based drinks 1 No No -3 Apple juice 2 for £3.00 Juice based drinks 1 No No -3 Coconut Water 2 for £5.00 Juice based drinks 1 No No 0 Apple and Elderflower juice 2 for £5.00 Juice based drinks 1 No No -3 Cranberry Lemondade 2 for £4.00 Juice based drinks 1 No No 1 1 Skimmed milk 2 for £2.50 No No No N/A Whole Milk 2 for £3.00 No No No N/A Organic Whole Milk 2 for £3.00 No No No N/A Flora Buttery Spread 2 for £2.50 No No No N/A Salted Butter 2 for £3.20 No No No N/A Lurpack spreadable 2 for £6.00 No No No N/A Dairy free milk 3 for £3.00 No No No 1 1 Cinnamon Swirl dough pack 2 for £3.00 Cakes 1 No No 21 1 Alpro Soya Chocolate Milk 3 for £3.00 No No No -1 Galaxy Chocolate thick shake 2 for £2.00 -

SUCCESS DOESN't COME on a PLATE How Pot Noodle Won Over A

SUCCESS DOESN’T COME ON A PLATE How Pot Noodle won over a new generation Marketing Society Effectiveness Awards, entered for Category C: Effective Marketing Communications Authors: Loz Horner, Monique Rossi Executive summary The challenge Pot Noodle’s association with 1990s ‘Slacker’ culture had always made it the choice of the lazy and the indolent, but today’s 16-24 year-olds no longer identified with that lifestyle. They were driven, ambitious and determined to succeed. Pot Noodle was becoming a cultural Pariah and as a result, its sales and market share were dropping. Something radical had to be done. Our response We sPent time with Pot Noodle’s young audience and realised they still valued its sPeed and ease, but not because they were lazy, because they were busy. So we fliPPed Pot Noodle’s convenience benefit on its head and humorously Positioned it as the choice of go-getters chasing their dreams. Our idea was: Pot Noodle. You Can Make It. And between SePtember and December 2015 we used it to comPletely re-launch the brand. What this idea delivered Young people comPletely embraced the You Can Make It idea. The hashtag generated 29M imPressions and Pot Noodle became a trending toPic as our insPirational message was re- tweeted and re-Posted by media brands and youth influencers. PercePtions of Pot Noodle changed for the better and this translated into business results. Volume and Value sales jumPed by over 3% as over 364,000 extra houses bought Pot Noodle, Pushing total brand value over the £100M mark for the first time. -

Against Expression: an Anthology of Conceptual Writing

Against Expression Against Expression An Anthology of Conceptual Writing E D I T E D B Y C R A I G D W O R K I N A N D KENNETH GOLDSMITH Northwestern University Press Evanston Illinois Northwestern University Press www .nupress.northwestern .edu Copyright © by Northwestern University Press. Published . All rights reserved. Printed in the United States of America + e editors and the publisher have made every reasonable eff ort to contact the copyright holders to obtain permission to use the material reprinted in this book. Acknowledgments are printed starting on page . Library of Congress Cataloging-in-Publication Data Against expression : an anthology of conceptual writing / edited by Craig Dworkin and Kenneth Goldsmith. p. cm. — (Avant- garde and modernism collection) Includes bibliographical references. ---- (pbk. : alk. paper) . Literature, Experimental. Literature, Modern—th century. Literature, Modern—st century. Experimental poetry. Conceptual art. I. Dworkin, Craig Douglas. II. Goldsmith, Kenneth. .A .—dc o + e paper used in this publication meets the minimum requirements of the American National Standard for Information Sciences—Permanence of Paper for Printed Library Materials, .-. To Marjorie Perlo! Contents Why Conceptual Writing? Why Now? xvii Kenneth Goldsmith + e Fate of Echo, xxiii Craig Dworkin Monica Aasprong, from Soldatmarkedet Walter Abish, from Skin Deep Vito Acconci, from Contacts/ Contexts (Frame of Reference): Ten Pages of Reading Roget’s ! esaurus from Removal, Move (Line of Evidence): + e Grid Locations of Streets, Alphabetized, Hagstrom’s Maps of the Five Boroughs: . Manhattan Kathy Acker, from Great Expectations Sally Alatalo, from Unforeseen Alliances Paal Bjelke Andersen, from + e Grefsen Address Anonymous, Eroticism David Antin, A List of the Delusions of the Insane: What + ey Are Afraid Of from Novel Poem from + e Separation Meditations Louis Aragon, Suicide Nathan Austin, from Survey Says! J.