The Effect of the First Italian Research Evaluation Exercise on Student Enrolment Choices



Recommended publications


Erasmus Mundus Masters Coordinated by or Involving a French Higher Education and Research Institution (EESR)

Joint "Erasmus Mundus" Masters coordinated by a French institution or involving at least one French institution (August 2016)

Full list of Erasmus Mundus joint Masters: http://eacea.ec.europa.eu/erasmus_mundus/results_compendia/selected_projects_action_1_master_courses_en.php

*Master not offering Erasmus Mundus scholarships

*ACES - Joint Masters Degree in Aquaculture, Environment and Society (2-year programme): UK-University of the Highlands and Islands LBG; FR-Université de Nantes; GR-University of Crete. http://www.sams.ac.uk/erasmus-master-aquaculture

ADVANCES - MA Advanced Development in Social Work (2-year programme): UK-UNIVERSITY OF LINCOLN, United Kingdom; DE-AALBORG UNIVERSITET - AALBORG UNIVERSITY; FR-UNIVERSITÉ PARIS OUEST NANTERRE LA DÉFENSE; PO-UNIWERSYTET WARSZAWSKI; PT-UNIVERSIDADE TECNICA DE LISBOA. www.socialworkadvances.org

AMASE - Joint European Master Programme in Advanced Materials Science and Engineering (2-year programme): DE-Saarland University; ES-Polytechnic University of Catalonia; FR-Institut National Polytechnique de Lorraine; SE-Lulea University of Technology. http://www.amase-master.net

ASC - Advanced Spectroscopy in Chemistry Master's Course: FR-Université des Sciences et Technologies de Lille – Lille 1; DE-University Leipzig; IT-Alma Mater Studiorum - University of Bologna; PL-Jagiellonian University; FI-University of Helsinki. http://www.master-asc.org

ATOSIM - Atomic Scale Modelling of Physical, Chemical and Bio-molecular Systems (programme -

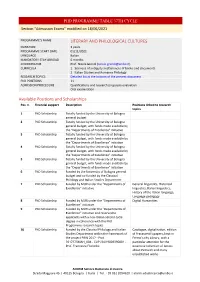

PHD PROGRAMME TABLE 37TH CYCLE Section “Admission Exams” Modified on 18/06/2021

Programme's name: Literary and Philological Cultures
Duration: 3 years
Programme start date: 01/11/2021
Language: Italian
Mandatory stay abroad: 6 months
Coordinator: Prof. Nicola Grandi ([email protected])
Curricula: 1. Sciences of antiquity and Sciences of books and documents; 2. Italian Studies and Romance Philology
Research topics: Detailed list at the bottom of the present document
PhD positions: 11
Admission procedure: Qualifications and research proposals evaluation; Oral examination

Available Positions and Scholarships

| Pos. n. | Financial support | Description | Positions linked to research topics |
|---|---|---|---|
| 1 | PhD Scholarship | Totally funded by the University of Bologna general budget | |
| 2 | PhD Scholarship | Totally funded by the University of Bologna general budget, with funds made available by the "Departments of Excellence" initiative | |
| 3 | PhD Scholarship | Totally funded by the University of Bologna general budget, with funds made available by the "Departments of Excellence" initiative | |
| 4 | PhD Scholarship | Totally funded by the University of Bologna general budget, with funds made available by the "Departments of Excellence" initiative | |
| 5 | PhD Scholarship | Totally funded by the University of Bologna general budget, with funds made available by the "Departments of Excellence" initiative | |
| 6 | PhD Scholarship | Funded by the University of Bologna general budget and co-funded by the Classical Philology and Italian Studies Department | |
| 7 | PhD Scholarship | Funded by MUR under the "Departments of General - | |

Scientific Programme

Fifth International Meeting
Food for Brain: promoting health and preventing diseases
20-22 November 2019, Università di Milano-Bicocca, Milan, Italy
www.theoffice.it/neuromi19

Presidents of the Symposium: Carlo Ferrarese, Massimo Labra, Paola Palestini, Eraldo Paulesu, Marina Saresella, Giuseppe Vallar

Scientific Board: Marco Antoniotti, Gabriella Bottini, Guido Cavaletti, Giuseppe Chirico, Massimo Clerici, Annamaria Colangelo, Giovanni Corrao, Angelo Maravita, Massimo Masserini, Rosa Maria Moresco, Massimo Musicco, Andrea Ongaro, Carlo Reverberi, Marina Saresella, Luca Stanca

Executive Board: Paola Di Rienzo, Gessica Sala

The venue: University of Milano-Bicocca, Piazza dell’Ateneo Nuovo, 1 - Milano, Italy

NeuroMI 2019 International Meeting Highlights

The Milan Center for Neuroscience (www.neuromi.it) was founded in 2014 by the University of Milano-Bicocca to promote high-level multidisciplinary research and education in the field of Neuroscience, fostering collaborations among clinical, molecular, cognitive, imaging, computational and biotechnological fields. It now gathers more than 300 neuroscientists from 8 Departments of Milano-Bicocca, other Universities and Scientific Institutions from the Milan area.

This is the fifth NeuroMI international meeting. Previous ones have been “Imaging of the brain” (2015), “Prediction and prevention of dementia: new hope” (2016), “Personalised medicine in multiple sclerosis” (2017), “Brain stimulation and brain plasticity: from basic research to clinical practice” (2018). “Food for brain: promoting health and preventing diseases” is the topic of this fifth international meeting, organized in collaboration with Best4Food, a multidisciplinary Center of the University of Milano-Bicocca dedicated to food research. -

BOLOGNA CAMPUS ACADEMIC YEAR 2019/2020 EXCHANGE Presentation

ALMA MATER STUDIORUM UNIVERSITÀ DI BOLOGNA
Exchange Students Guide, Bologna Campus, A.Y. 2019/2020

Welcome to the University of Bologna! This Guide is for international exchange students at the Bologna Campus. To make the most of your experience at the university and to benefit from all the cultural and leisure opportunities offered by the city of Bologna and its student community, it is important to learn about the rules and the procedures to be followed and to find out about the services offered by the university and its territory.

This Guide also aims to introduce you to the city and its beauty, helping you to discover the places, the art, events, and people that will make your experience unique.

www.unibo.it/exchangestudents

Produced by: Alma Mater Studiorum - Università di Bologna, DIRI - International Relations Division, Exchange Students Desk
Editing and graphic design: Alma Mater Studiorum - Università di Bologna, ARTEC - Industrial Relations, Third Mission and Communication Division, Institutional Communication Office
Layout: Alma Mater Studiorum - Università di Bologna, ARTEC - Industrial Relations, Third Mission and Communication Division, Institutional Communication Office
Printed by: ACM Spa

University of Bologna is a Multicampus university with 5 campuses: Bologna, Cesena, Forlì, Ravenna and Rimini, a school of excellence, the Collegio Superiore, and an Advanced Studies Centre in Buenos Aires.

The origins of the University of Bologna go way back, and it is considered to be the oldest university in the Western world. Its history began in 1088, when law was first taught freely in the city, and became tied -

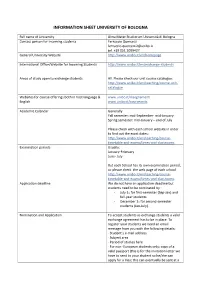

Information Sheet University of Bologna

Full name of University: Alma Mater Studiorum Università di Bologna

Contact person for incoming students: Ferruccio Quercetti, [email protected], tel. +39 051 2099427

General University Website: http://www.unibo.it/en/homepage

International Office/Website for Incoming Students: http://www.unibo.it/en/exchange-students

Areas of study open to exchange students: All. Please check our unit course catalogue: http://www.unibo.it/en/teaching/course-unit-catalogue

Websites for course offerings both in host language & English: www.unibo.it/insegnamenti, www.unibo.it/courseunits

Academic Calendar: Generally, Fall semester: mid-September to mid-January; Spring semester: mid-January to end of July. Please check with each school website in order to find out the exact dates: http://www.unibo.it/en/teaching/course-timetable-and-exams/times-and-classrooms

Examination periods: Usually January-February and June-July. But each School has its own examination period, so please check the web page of each school. http://www.unibo.it/en/teaching/course-timetable-and-exams/times-and-classrooms

Application deadline: We do not have an application deadline but students need to be nominated by:
- July 1st for first-semester (Sep-Jan) and full-year students.
- December 1st for second-semester students (Jan-July).

Nomination and Application: To accept students as exchange students a valid exchange agreement has to be in place. To register your students we need an email message from you with the following details: · Student’s e-mail -

Curriculum Vitae ‐ Autumn 2014

Via Sarfatti 25, 20136 Milano

Family name: Tagiuri
Given name: Giacomo
Gender: male
Date of birth: 16/11/1986
Citizenship: Italian
email address: [email protected]

Qualifications

Bocconi University, Ph.D. in Legal Studies (September 2014 – 2017 expected), International Law and Economics • Full Scholarship

Johns Hopkins University School of Advanced International Studies (Bologna and Washington, DC), Master of Arts in European Studies and International Economics (May 2013) • Fondazione del Monte Scholarship • Distinction in European Studies

Università di Bologna, Laurea Magistrale in Law (March 2011) • 110/110 magna cum laude • Erasmus Student at University of Copenhagen (January‐July 2008)

Awards and Scholarships

Fondazione del Monte Scholarship (Johns Hopkins University a.a. 2011/2012)
Stavros Niarchos Summer Internship Funds (Johns Hopkins University, Summer 2013)
University of Bologna scholarship for thesis preparation abroad (Sept.‐Dec. 2010)
Bobby Abernethy Scholarship for Summer School “EU and legal reforms” (Igalo, Montenegro, Summer 2010)

Research Interests

International Law, Comparative Public Law, European Integration, Culture and Identity, Cultural Heritage and the Law.

Publications

– La dottrina britannica in tema di diritti umani, Diritto Pubblico Comparato ed Europeo, 4/2014
– La dottrina britannica in tema di amministrazione, Diritto Pubblico Comparato ed Europeo, 1/2014.
– Forging Identity: The EU and European Culture, Survival: Global Politics and Strategy, 1/2014. Article shortlisted for the International Institute of Strategic Studies (IISS)'s Palliser Essay Prize.
– Letter from Italy, Survival: Global Politics and Strategy, 1/2013, pp. 197‐204.
– G. Tagiuri, Romney's Italian Job, in IISS Voices, 05 November 2012 (Blog post on www.iiss.org/whats‐new/iiss‐voices/)
– Il referendum del 1975: quando i britannici decisero di rimanere nella Comunità economica europea (co‐authored with J. -

Bologna Contents

Study Abroad in Bologna

Contents: Introduction; Welcome to Bologna; History of the University; The University Today; How to Apply; Tuition Fees and Living Costs; Living in Bologna; Exploring Emilia-Romagna

Introduction

The University of Bologna is one of the most highly regarded universities in Italy, Europe, and the world. The oldest continuously running university on the planet, founded in 1088, the university and the city alike have provided countless generations of students with incredible education in one of Italy’s most historically important regions, Emilia-Romagna.

It’s not all just about the history though. The University of Bologna (or UNIBO for short) consistently stands at the forefront of innovation with 230 projects funded by Horizon 2020, the largest ever EU Research and Innovation program, along with a further 145 funded national projects and 170 regional projects.

The university is committed to helping achieve the UN’s agenda of sustainable development goals (17 in total), teaching the best suitable practices to accomplish goals by 2030, with sustainability at the heart of this university that has sustained teaching for close to 1,000 years.

Read on to find out more about Bologna’s rich history, the history of its university, as well as UNIBO’s application process and tuition fees, what it’s like to live in the city, and discover the university multicampus across the Emilia-Romagna region. Si parte!

www.TopUniversities.com

Welcome to Bologna

Bologna is the pride and joy of northern Italy’s Emilia Romagna region, seamlessly combining the ancient and the modern. -

The Hebrew University Erasmus+ Exchange Partner Universities

Austria: Medical University of Innsbruck (Medicine); University of Salzburg (Humanities); University of Vienna (selected fields); Vienna School of International Studies (European Forum)

Belgium: Catholique de Louvain (Humanities); KU Leuven (Humanities)

Croatia: University of Dubrovnik (Geography) - staff only

Czech Republic: Masaryk University (all fields); Charles University (Chemistry); Palacky University (selected fields)

Denmark: Aarhus University (all fields)

Germany: Friedrich-Alexander-University, Erlangen-Nuremberg (Humanities, Social Sciences); Free University of Berlin (all fields); Friedrich Schiller University Jena (History, Chemistry, Politics); University of Göttingen (all fields); University of Heidelberg (all fields); Humboldt University of Berlin (Humanities); University of Konstanz (all fields); Leipzig University (Humanities, Social Sciences); Ludwig Maximilian University of Munich (LMU) (all fields); Phillips-University of Marburg (all fields); Saarland University (Humanities); Technical University of Munich (selected fields); University of Trier (Humanities)

Italy: University of Siena (Law) – staff only; University of Bologna (Italian Studies)

Lithuania: Vilnius University (all fields)

Luxembourg: University of Luxembourg (selected fields)

Malta: Malta University (Musicology – staff exchange only)

Netherlands: Leiden University (all fields); Radboud University (all fields); Wageningen University (Agriculture)

Greece: National and Kapodistrian University of Athens (Humanities - staff exchange -

Eleonora Pierucci: Curriculum Vitae

Eleonora Pierucci
Assistant Professor of Economics (tenured)
Department of Mathematics, Computer Science and Economics (DiMIE), University of Basilicata
Via dell’Ateneo Lucano 10, 85100, Potenza, Italy
Phone: (+39) 0971 206118
Email: [email protected]
Web page: https://sites.google.com/site/eleonorapierucci/

Personal

Born on July 1, 1979. Citizenship: Italian.

Research interests

University-Industry collaborations; Third mission and technology transfer; Risk sharing; Regional convergence; Informal channels of shocks absorption; Social capital.

Employment

2011 to present. Assistant Professor of Economics (Ricercatore confermato a tempo indeterminato, area 13/A1), University of Basilicata.
Since a.y. 2016-2017. Aggregate Professor of Economics of Public Utilities (in English, Master level, "Laurea Magistrale").
Since a.y. 2015-2016. Aggregate Professor of Industrial Economics (in English, Master level, "Laurea Magistrale").
a.y. 2011-2012; 2012-2013; 2013-2014; 2014-2015; 2015-2016. Aggregate Professor of Microeconomics (Undergraduate level, "Laurea Triennale").
2009-2015. Adjunct Professor, University of Roma Tre.
2010–2011. Post doc Research Fellow in Applied Economics, University of Roma Tre.
2010. Temporary research appointment, Sapienza University of Rome.
2009-2010. Post doc Research Fellow in Economics and Statistics, Sapienza University of Rome.

Education

Ph.D. in Economics, Sapienza University of Rome, 2009.
M.Sc. in Economics cum laude, Sapienza University of Rome, 2004.

Grants, Fellowships and Awards

Visiting professor at Aarhus Summer University (Aarhus University, Denmark). Course title: International risk sharing and financial integration, lasting two weeks (27 hours), "Master level", expected 3-13 July 2018.
Habilitation for Associate Professor in Economic Policy (13/A2), April 7, 2017. -

University of Bologna Placement Profile

University of Bologna

At a glance

Location: Bologna, Italy
Erasmus Code: I BOLOGNA01
Students: 84,700
Campuses: 5
Available places: 2
Placement duration: Full year
Specialisms: Economics, Finance, Management
Taught Language: English
Language Support: Italian language classes available
Term dates: September to May/June
Website: https://www.unibo.it/

Description

The University of Bologna, founded in 1088, is the oldest University in the Western world. It is also one of the largest in Europe with 5 campuses, 33 departments and 11 schools. Each year they accept approximately 2,500 incoming exchange students to study across the institution.

Surrounding Area

Bologna is the capital city of the Emilia-Romagna region of Italy and is neatly situated a short distance away from Milan, Florence, Venice and San Marino. Its Piazza Maggiore is a sprawling plaza lined with arched colonnades, cafes and medieval and Renaissance structures such as City Hall, the Fountain of Neptune and the Basilica di San Petronio.

Accommodation

Students attending the University of Bologna use SAIS (Student Accommodation and Information Service) to help them find accommodation in the city. It offers a mixture of student residences and private accommodation. More information: http://www.saisaccommodation.it/en-us/sais.aspx?idC=61642&LN=en-US

How to apply

When successfully selected by UWE Bristol and nominated to the University of Bologna you will need to provide:

1. Completed online application form
2. Photocopy of your identity card or passport
3. Photocopy of your EHIC Insurance card
4. Copy of your transcript of records
5. Learning agreement

Further information

http://www.unibo.it/en/international/exchange-opportunities-at-Unibo/exchange-students-guide . -

New Strategies for the Post-Marketing Monitoring of Medical Products

ISPE 2011 Mid-Year Meeting
Florence, Italy > April 9-11, 2011

Jointly sponsored by ISPE • International Society for Pharmacoepidemiology, SIF • Italian Society of Pharmacology, SIMG • Italian College of General Practitioners

Preliminary agenda

Meeting Venue: Grand Hotel Baglioni, Piazza Unità Italiana, 6, 50123 - Florence, Italy

Symposium, April 9, 2011: New Strategies for the Post-marketing Monitoring of Medical Products

Scientific Committee, Co-chair: Giampiero Mazzaglia

07:30 Registration

09:00 Introduction to program
Giampiero Mazzaglia, Program Co-Chair, Health Search, Italian College of General Practitioners, Italy
Gianluca Trifirò, Program Co-Chair, Erasmus University Medical Center, The Netherlands & University of Messina, Italy

Welcome addresses
Achille Caputi, Italian Society of Pharmacology Past-President, University of Messina, Italy
Claudio Cricelli, Italian College of General Practitioners President, Italy

Afternoon session 1: New approaches in drug safety signal detection
Co-chairs: Giovanni Corrao, University of Milan Bicocca, Italy; Marie Lindqvist, WHO Collaborating Centre for International Drug Monitoring, Sweden

16:35 Federation of databases to evaluate the arrhythmogenic potential of drugs: The ARITMO project
Fabrizio De Ponti, University of Bologna, Italy

17:00 A network of long-term care facilities: Experience from Europe and USA
Giovanni Gambassi, Sacro Cuore University of Rome, Italy

13:30 From individual case report assessment to quantitative signal detection using spontaneous ADR reporting: What -

Elsevier Statement Signatories

Statement against Elsevier's sharing policy

Individual Signatories

| # | Name of Organisation | Last name, first name | City | Country |
|---|---|---|---|---|
| 1 | IESC/UFRJ | Abelha Lucia | Rio de Janeiro | Brasil |
| 2 | | Aceves-Calderon, Patricia | Ensenada | Mexico |
| 3 | | Aguirre-Ligüera, Natalia | Montevideo | Uruguay |
| 4 | UQAC | Allaire, Stéphane | Chicoutimi | Canada |
| 5 | | Allred, Jeff | New York | USA |
| 6 | CUNY | Almeida, Nora | New York | USA |
| 7 | | Altarriba Vigatà, Núria | Barcelona | SPAIN |
| 8 | CNR-ISMAR | Alvisi, Francesca | Bologna | Italy |
| 9 | | Alzate Echeverri, Adriana | Bogota | Colombia |
| 10 | Faculty of Sciences and Thecnology | Ana Alves Pereira | Caparica | Portugal |
| 11 | University of Coimbra | Ana Miguéis | Coimbra | Portugal |
| 12 | Universidade Federal do Espírito Santo | Andrade, Morgana | Vitória | Brazil |
| 13 | CNRS | André Francis | Paris | France |
| 14 | | Angulo, Félix | Puerto Real | Spain |
| 15 | Università di Cagliari | Anna Deidda | Cagliari | Italia |
| 16 | ENEA | ANNA ROSA SPROCATI | Rome | Italy |
| 17 | | Antonella Tomasicchio | Cerignola | Italy |
| 18 | | Antonia | Padova | Italia |
| 19 | | Armigliato, Aldo | Bologna | Italy |
| 20 | | Arnold, Alan | Canberra | Australia |
| 21 | University College Cork | Arnold, Margaret | Cork | Ireland |
| 22 | | Astrella, Sandra | cagliari | Italy |
| 23 | | Audrit, Stéphanie | Liège | Belgique |
| 24 | | Austin, Roddy | New York | United States |
| 25 | Universidade Federal do Rio de Janeiro | Azeredo, Antonio | Rio de Janeiro | Brazil |
| 26 | Universitat Autònoma de Barcelona | Azorín, Cristina | Barcelona | Catalunya |
| 27 | Repositorios Digitales, MINCyT, Rep. Argentina | Azrilevich, Paola | Buenos Aires | Argentina |
| 28 | CLACSO | Babini, Dominique | Buenos Aires | Argentina |
| 29 | | Babu Vivek | Cennai | India |
| 30 | Indiana University | | | |