ProQuest Dissertations

Recommended publications

Sets and Pluralities

Gustavo Fernández Díez, “Sets and Pluralities”, Revista Colombiana de Filosofía de la Ciencia, vol. IX, no. 19, 2009, pp. 5-22, Universidad El Bosque, Colombia. ISSN (printed version): 0124-4620. Available at: http://www.redalyc.org/articulo.oa?id=41418349001 (Redalyc, Red de Revistas Científicas de América Latina, el Caribe, España y Portugal). Abstract: In this paper I study the philosophical background of the relatively recent (and in the last few years increasingly flourishing) system of logic called “plural logic”, or “logic of plural quantifiers”. In particular, I compare the notion of “set” arising from axiomatic set theory with the notion of “plurality” that lies behind this new system. My conclusion is that the two are completely different in their scope and limits, and that the difference stems from the different motivations that gave rise to each. Whereas set theory is a genuinely mathematical theory, with mathematical interest as its main ingredient, plural logic has emerged as a response to linguistic considerations related to the logical structure of plural statements in English and other natural languages. Keywords: set, set theory, plurality, plural quantification, plural logic.

Critical Review, Vol. X

Critical Review, Volume X, 2015. Neutrosophic Vague Set Theory. Shawkat Alkhazaleh, Department of Mathematics, Faculty of Science and Art, Shaqra University, Saudi Arabia. Abstract: In 1993, Gau and Buehrer proposed the theory of vague sets as an extension of fuzzy set theory. Vague sets are regarded as a special case of context-dependent fuzzy sets. In 1995, Smarandache introduced neutrosophy for the first time and defined neutrosophic set theory as a new mathematical tool for handling problems involving imprecise, indeterminate, and inconsistent data. In this paper, we define the concept of a neutrosophic vague set as a combination of a neutrosophic set and a vague set. We also define and study the operations and properties of neutrosophic vague sets and give some examples. Keywords: Vague set, Neutrosophy, Neutrosophic set, Neutrosophic vague set. Acknowledgement: We would like to acknowledge the financial support received from Shaqra University, and we offer our sincere thanks and appreciation to Professor Smarandache for his support and his comments. 1 Introduction. Many scientists wish to find appropriate solutions to mathematical problems that cannot be solved by traditional methods, because traditional methods cannot handle the uncertainty that arises in economics, engineering, medicine, decision-making, and other fields. There has been a great amount of research and applications in the literature concerning special tools such as probability theory, fuzzy set theory [13], rough set theory [19], vague set theory [18], intuitionistic fuzzy set theory [10, 12], and interval mathematics [11, 14]. Since Zadeh published his classical paper almost fifty years ago, fuzzy set theory has received more and more attention from researchers in a wide range of scientific areas, especially in the past few years.

Collapse, Plurals and Sets

Collapse, Plurals and Sets. Eduardo Alejandro Barrio. doi: 10.5007/1808-1711.2014v18n3p419. Abstract. This paper raises the question of under what circumstances a plurality forms a set. My main point is that not all pluralities form sets. A provocative way of presenting my position is that, as a result of my approach, there are more pluralities than sets. Another way of presenting the same thesis is that there are ways of talking about objects that do not always collapse into sets. My argument is related to the expressive power of formal languages. Assuming classical logic, I show that if every plurality forms a set and the quantifiers are absolutely general, then one gets a trivial theory. So, by reductio, one has to abandon one of the premises. I then argue against the collapse of pluralities into sets. What I am advocating is that the thesis of collapse limits important applications of plural logic in model theory when the quantifiers are assumed to be absolutely general. Keywords: Pluralities; absolute generality; sets; hierarchies. We often say that some things form a set. For instance, all the houses in Beacon Hill may form a set. So, too, do all antimatter particles in the universe, all even numbers, all odd numbers, and in general all natural numbers. Naturally, following this line of thought, one might think that the plurality of all things constitutes a set. And although natural language allows us, by means of its plural constructions, to talk about objects without grouping them into one entity, there are also nominalization devices that turn constructions involving higher-order expressive resources into others that make use only of first-order ones.

Plurals and Mereology

Journal of Philosophical Logic (2021) 50:415–445, https://doi.org/10.1007/s10992-020-09570-9. Plurals and Mereology. Salvatore Florio (Department of Philosophy, University of Birmingham, Birmingham, United Kingdom) and David Nicolas (Institut Jean Nicod, Département d’études cognitives, ENS, EHESS, CNRS, PSL University, Paris, France). Received: 2 August 2019 / Accepted: 5 August 2020 / Published online: 26 October 2020. © The Author(s) 2020. Abstract: In linguistics, the dominant approach to the semantics of plurals appeals to mereology. However, this approach has received strong criticism from philosophical logicians who subscribe to an alternative framework based on plural logic. In the first part of the article, we offer a precise characterization of the mereological approach and the semantic background in which the debate can be meaningfully reconstructed. In the second part, we deal with the criticisms and assess their logical, linguistic, and philosophical significance. We identify four main objections and show how each can be addressed. Finally, we compare the strengths and shortcomings of the mereological approach and plural logic. Our conclusion is that the former remains a viable and well-motivated framework for the analysis of plurals. Keywords: Mass nouns · Mereology · Model theory · Natural language semantics · Ontological commitment · Plural logic · Plurals · Russell’s paradox · Truth theory. 1 Introduction. A prominent tradition in linguistic semantics analyzes plurals by appealing to mereology (e.g. Link [40, 41], Landman [32, 34], Gillon [20], Moltmann [50], Krifka [30], Bale and Barner [2], Chierchia [12], Sutton and Filip [76], and Champollion [9]). (Footnote 1: The historical roots of this tradition include Leonard and Goodman [38], Goodman and Quine [22], Massey [46], and Sharvy [74].)

Beyond Plurals

Beyond Plurals. Agustín Rayo, philosophy.ucsd.edu/arayo, July 10, 2008. I have two main objectives. The first is to get a better understanding of what is at issue between friends and foes of higher-order quantification, and of what it would mean to extend a Boolos-style treatment of second-order quantification to third- and higher-order quantification. The second objective is to argue that in the presence of absolutely general quantification, proper semantic theorizing is essentially unstable: it is impossible to provide a suitably general semantics for a given language in a language of the same logical type. I claim that this leads to a trilemma: one must choose between giving up absolutely general quantification, settling for the view that adequate semantic theorizing about certain languages is essentially beyond our reach, and countenancing an open-ended hierarchy of languages of ever ascending logical type. I conclude by suggesting that the hierarchy may be the least unattractive of the options on the table. 1 Preliminaries. 1.1 Categorial Semantics. Throughout this paper I shall assume the following. Categorial Semantics: Every meaningful sentence has a semantic structure, which may be represented as a certain kind of tree. Each node in the tree falls under a particular semantic category (e.g. ‘sentence’, ‘quantifier’, ‘sentential connective’), and has an intension that is appropriate for that category. The semantic category and intension of each non-terminal node in the tree are determined by the semantic categories and intensions of the nodes below it. Although I won’t attempt to defend Categorial Semantics here, two points are worth emphasizing.

Plural Reference.Pdf

OUP UNCORRECTED PROOF – FIRST PROOF, 11/12/2015, SPi. Plurality and Unity: Logic, Philosophy, and Linguistics, edited by Massimiliano Carrara, Alexandra Arapinis, and Friederike Moltmann. Great Clarendon Street, Oxford, OX2 6DP, United Kingdom. Oxford University Press is a department of the University of Oxford. It furthers the University’s objective of excellence in research, scholarship, and education by publishing worldwide. Oxford is a registered trade mark of Oxford University Press in the UK and in certain other countries. © the several contributors 2016. The moral rights of the authors have been asserted. First Edition published in 2016. Impression: 1. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, without the prior permission in writing of Oxford University Press, or as expressly permitted by law, by licence or under terms agreed with the appropriate reprographics rights organization. Enquiries concerning reproduction outside the scope of the above should be sent to the Rights Department, Oxford University Press, at the address above. You must not circulate this work in any other form and you must impose this same condition on any acquirer. Published in the United States of America by Oxford University Press, 198 Madison Avenue, New York, NY 10016, United States of America. British Library Cataloguing in Publication Data: Data available. Library of Congress Control Number: ')*.,//0/.

Cotangent Similarity Measures of Vague Multi Sets

International Journal of Pure and Applied Mathematics, Volume 120, No. 7, 2018, pp. 155-163. ISSN: 1314-3395 (online version). URL: http://www.acadpubl.eu/hub/ (Special Issue). Cotangent Similarity Measures of Vague Multi Sets. S. Cicily Flora and I. Arockiarani, Department of Mathematics, Nirmala College for Women, Coimbatore-18. Abstract: This paper presents a simplified technique for the candidate selection procedure using vague multisets. The new approach is an enhanced form of cotangent similarity measures on vague multisets, which serves as a model of multi-criteria group decision making. AMS Subject Classification: 03B52, 90B50, 94D05. Key Words and Phrases: Vague multi sets, Cotangent similarity measures, Decision making. 1 Introduction. The theory of sets, one of the most powerful tools in modern mathematics, is usually considered to have begun with Georg Cantor (1845-1918). To account for uncertainty, Zadeh [7] introduced fuzzy sets in 1965. If repeated occurrences of an object are allowed in a set, the resulting mathematical structure is called a multiset [1]. As a generalization of multisets, Yager [6] introduced fuzzy multisets. An element of a fuzzy multiset can occur more than once, with possibly the same or different membership values. The concept of an intuitionistic fuzzy multiset was introduced in [3]. A similarity measure is an important tool for determining the degree of similarity between two objects. Using the cotangent function, Wang et al. [5] proposed a new similarity measure.
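
The distinction drawn above between a set, a multiset, and a fuzzy multiset can be illustrated with a minimal Python sketch. The ordinary multiset uses the standard-library `collections.Counter`; the `make_multiset` helper and the `FuzzyMultiset` class are hypothetical names chosen for illustration. The sketch only stores repeated occurrences with their membership values. It does not model the vague multisets or the cotangent similarity measure studied in the paper.

```python
from collections import Counter


# Ordinary multiset (bag): repeated occurrences are allowed, so each element
# carries a count. collections.Counter is a standard-library bag type.
def make_multiset(items):
    return Counter(items)


class FuzzyMultiset:
    """Hypothetical illustration of a fuzzy multiset: an element may occur
    several times, each occurrence with its own membership value in [0, 1]."""

    def __init__(self):
        self._occurrences = {}  # element -> list of membership values

    def add(self, element, membership):
        if not 0.0 <= membership <= 1.0:
            raise ValueError("membership value must lie in [0, 1]")
        self._occurrences.setdefault(element, []).append(membership)

    def count(self, element):
        # Number of occurrences, ignoring their membership values.
        return len(self._occurrences.get(element, []))

    def memberships(self, element):
        # Membership values of all occurrences, listed in decreasing order
        # (one common convention for writing fuzzy multisets).
        return sorted(self._occurrences.get(element, []), reverse=True)


if __name__ == "__main__":
    bag = make_multiset(["a", "b", "a", "c", "a"])
    print(bag["a"], bag["b"], bag["z"])  # 3 1 0

    fm = FuzzyMultiset()
    fm.add("x", 0.4)
    fm.add("x", 0.9)
    fm.add("x", 0.4)  # the same membership value may repeat
    print(fm.count("x"), fm.memberships("x"))  # 3 [0.9, 0.4, 0.4]
```

Keeping one list of membership values per element, rather than a single degree, is what lets the same element occur several times with equal or different memberships.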

Research Article Vague Filters of Residuated Lattices

Hindawi Publishing Corporation, Journal of Discrete Mathematics, Volume 2014, Article ID 120342, 9 pages, http://dx.doi.org/10.1155/2014/120342. Research Article: Vague Filters of Residuated Lattices. Shokoofeh Ghorbani, Department of Mathematics, 22 Bahman Boulevard, Kerman 76169-133, Iran. Correspondence should be addressed to Shokoofeh Ghorbani. Received 2 May 2014; Revised 7 August 2014; Accepted 13 August 2014; Published 10 September 2014. Academic Editor: Hong J. Lai. Copyright © 2014 Shokoofeh Ghorbani. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. Notions of vague filters, subpositive implicative vague filters, and Boolean vague filters of a residuated lattice are introduced, and some related properties are investigated. Characterizations of (subpositive implicative, Boolean) vague filters are obtained. We prove that the set of all vague filters of a residuated lattice forms a complete lattice, and we find its distributive sublattices. The relation between subpositive implicative vague filters and Boolean vague filters is obtained, and it is proved that subpositive implicative vague filters are equivalent to Boolean vague filters. 1. Introduction. In a classical set, there are only two possibilities for any element: it is either in the set or not in the set. Hence the membership value of an element is either 0 or 1. … of vague ideal in pseudo MV-algebras, and Broumand Saeid [4] introduced the notion of vague BCK/BCI-algebras. The concept of residuated lattices was introduced by Ward and Dilworth [5] as a generalization of the structure of the …

A Vague Relational Model and Algebra

http://www.paper.edu.cn. A Vague Relational Model and Algebra. Faxin Zhao, Z.M. Ma, and Li Yan, School of Information Science and Engineering, Northeastern University, Shenyang, P.R. China 110004. Abstract: Imprecision and uncertainty in data values are pervasive in real-world environments and have received much attention in the literature. Several methods have been proposed for incorporating uncertain data into relational databases. However, the current approaches have many shortcomings and have not established an acceptable extension of the relational model. In this paper, we propose a consistent extension of the relational model to represent and deal with fuzzy information by means of vague sets. We present a revised relational structure and extend the relational algebra. The extended algebra is shown to be closed and reducible to the fuzzy relational algebra and, further, to the conventional relational algebra. Keywords: Vague set, Fuzzy set, Relational data model, Algebra. 1 Introduction. Over the last 30 years, relational databases have gained widespread popularity and acceptance in information systems. Unfortunately, the relational model has no comprehensive way to handle imprecise and uncertain data, even though such data exist everywhere in the real world. Consequently, the relational model cannot represent the inherently imprecise and uncertain nature of the data. The need for an extension of the relational model that supports imprecise and uncertain data has been identified in the literature. Fuzzy set theory was introduced by Zadeh [1] to handle vaguely specified data values by generalizing the notion of membership in a set, and fuzzy information has been extensively investigated in the context of the relational model.

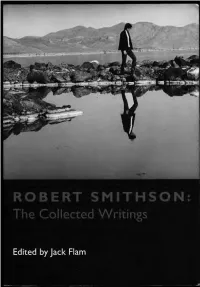

Collected Writings

THE DOCUMENTS OF TWENTIETH CENTURY ART. General Editor, Jack Flam. Founding Editor, Robert Motherwell. Other titles in the series available from University of California Press: Flight Out of Time: A Dada Diary by Hugo Ball (John Elderfield); Art as Art: The Selected Writings of Ad Reinhardt (Barbara Rose); Memoirs of a Dada Drummer by Richard Huelsenbeck (Hans J. Kleinschmidt); German Expressionism: Documents from the End of the Wilhelmine Empire to the Rise of National Socialism (Rose-Carol Washton Long); Matisse on Art, Revised Edition (Jack Flam); Pop Art: A Critical History (Steven Henry Madoff); Collected Writings of Robert Motherwell (Stephanie Terenzio); Conversations with Cezanne (Michael Doran). ROBERT SMITHSON: THE COLLECTED WRITINGS, EDITED BY JACK FLAM. UNIVERSITY OF CALIFORNIA PRESS, Berkeley, Los Angeles, London. University of California Press, Berkeley and Los Angeles, California. University of California Press, Ltd., London, England. © 1996 by the Estate of Robert Smithson. Introduction © 1996 by Jack Flam. Library of Congress Cataloging-in-Publication Data: Smithson, Robert. Robert Smithson, the collected writings / edited, with an Introduction by Jack Flam. p. cm. (The documents of twentieth century art). Originally published: The writings of Robert Smithson. New York: New York University Press, 1979. Includes bibliographical references and index. ISBN 0-520-20385-2 (pbk.: alk. paper). 1. Art. I. Title. II. Series. N7445.2.S62A35 1996 700-dc20 95-34773 CIP. Printed in the United States of America. 08 07 06 9 8 7 6. The paper used in this publication meets the minimum requirements of ANSI/NISO Z39.48-1992 (R 1997) (Permanence of Paper).

A New Approach of Interval Valued Vague Set in Plants

International Journal of Pure and Applied Mathematics, Volume 120, No. 7, 2018, pp. 325-333. ISSN: 1314-3395 (online version). URL: http://www.acadpubl.eu/hub/ (Special Issue). A NEW APPROACH OF INTERVAL VALUED VAGUE SET IN PLANTS. L. Mariapresenti and I. Arockiarani, Department of Mathematics, Nirmala College for Women, Coimbatore. Abstract: This paper proposes a new mathematical model based on interval-valued vague set theory. An interval-valued interview chart with interval-valued vague degrees is framed. Then interval-valued vague weighted arithmetic average (IVWAA) operators are defined to aggregate the vague information from the symptoms. A new distance measure is also formulated for the diagnosis. Keywords: Interview chart, aggregate operator, interval valued vague weighted arithmetic average, distance measure. 1. Introduction. Fuzzy set theory has been used in many approaches to modeling the diagnostic process [1, 2, 4, 8, 11, 12, 14]. Sanchez [9] developed a full theory for modeling the relationships between symptoms and diseases using fuzzy sets. In 1986, Atanassov [3] defined the notion of intuitionistic fuzzy sets, a generalization of Zadeh’s [13] fuzzy sets. In 1993, Gau and Buehrer [6] initiated vague set theory, a generalization of fuzzy sets with a membership function and a non-membership function. Vague set theory has been explored by many authors and applied in different disciplines. The task of an agricultural consultant is to diagnose the disease of a plant. In agriculture, a diagnosis can be regarded as a label given by the agricultural consultant to describe and synthesize the status of the diseased plant.
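
The vague-set idea described above, with separate membership and non-membership functions, can be made concrete with a short Python sketch. It assumes the standard formulation of Gau and Buehrer's vague sets: each element has a truth-membership t and a false-membership f with t + f ≤ 1, so its unknown degree of membership lies in the interval [t, 1 − f]. An ordinary fuzzy degree μ is the special case t = μ, f = 1 − μ, where the interval shrinks to a point. The names `VagueValue`, `from_fuzzy`, and `width` are hypothetical. The interval-valued extension and the IVWAA operators of the paper are not modeled here.

```python
from dataclasses import dataclass

EPS = 1e-9  # tolerance for floating-point rounding in the t + f <= 1 check


@dataclass(frozen=True)
class VagueValue:
    """Vague membership of a single element (hypothetical helper).

    t: truth-membership, a lower bound on the evidence for membership
    f: false-membership, a lower bound on the evidence against membership
    """
    t: float
    f: float

    def __post_init__(self):
        if not (0.0 <= self.t <= 1.0 and 0.0 <= self.f <= 1.0):
            raise ValueError("t and f must lie in [0, 1]")
        if self.t + self.f > 1.0 + EPS:
            raise ValueError("a vague value requires t + f <= 1")

    def interval(self):
        """Bounds [t, 1 - f] on the element's unknown membership degree."""
        return (self.t, 1.0 - self.f)

    def width(self):
        """Length of the interval, 1 - t - f; 0 means fully determined."""
        return 1.0 - self.t - self.f


def from_fuzzy(mu):
    """An ordinary fuzzy degree mu corresponds to t = mu, f = 1 - mu."""
    return VagueValue(mu, 1.0 - mu)


if __name__ == "__main__":
    v = VagueValue(t=0.6, f=0.3)
    print(v.interval())  # approximately (0.6, 0.7)
    print(v.width())     # approximately 0.1
    print(from_fuzzy(0.4).width())  # 0.0 up to rounding
```

Representing a vague value by the pair (t, f) rather than by the interval itself keeps the constraint t + f ≤ 1 explicit and makes the reduction to ordinary fuzzy sets a one-line special case.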

Semantics and the Plural Conception of Reality

Philosophers’ Imprint, volume 14, no. 22, July 2014. SEMANTICS AND THE PLURAL CONCEPTION OF REALITY. According to a traditional view, reality is singular. Socrates and Plato are philosophers; each of them has the property of being a philosopher. Being a philosopher is a singular property in that it is instantiated separately by Socrates and by Plato. The property of being a philosopher, like the property of being human, has the higher-order property of being instantiated. The property of being instantiated is singular too. It is instantiated separately by the property of being a philosopher and by the property of being human. If we generalize these ideas, we obtain what may be called the singular conception of reality. This is the view that reality encompasses entities belonging to two main categories: objects and singular properties of various orders. The singular conception of reality offers a simple picture of what there is, and it offers a simple picture of the semantics of singular predication. A basic predication of the form S(t), composed of a singular term t and a singular predicate S, is true in a given interpretation of the language if and only if, relative to that interpretation, the object denoted by t instantiates the property denoted by S. A broader conception of reality, however, has been advocated. The Romans conquered Gaul. This is not something that any Roman did separately. They conquered Gaul jointly. According to advocates of the broader conception of reality, conquering Gaul is a plural property, one that is instantiated jointly by the Romans.