Complexity Classes

Recommended publications

Time Complexity

Chapter 3: Time complexity

Use of time complexity makes it easy to estimate the running time of a program. Performing an accurate calculation of a program's running time is very labour-intensive (it depends on the compiler and on the type of computer or speed of the processor). Therefore, we will not make an accurate measurement, just a measurement of a certain order of magnitude.

Complexity can be viewed as the maximum number of primitive operations that a program may execute. Primitive operations are single additions, multiplications, assignments, etc. We may leave some operations uncounted and concentrate on those that are performed the largest number of times. Such operations are referred to as dominant.

The number of dominant operations depends on the specific input data. We usually want to know how the running time depends on a particular aspect of the data. This is most frequently the data size, but it can also be the size of a square matrix or the value of some input variable.

3.1: Which is the dominant operation?

    1 def dominant(n):
    2     result = 0
    3     for i in xrange(n):
    4         result += 1
    5     return result

The operation in line 4 is dominant and will be executed $n$ times. The complexity is described in Big-O notation: in this case $O(n)$, linear complexity.

The complexity specifies the order of magnitude within which the program will perform its operations. More precisely, in the case of $O(n)$, the program may perform $c \cdot n$ operations, where $c$ is a constant; however, it may not perform $n^2$ operations, since this involves a different order of magnitude.
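
To make the idea of counting dominant operations concrete, here is a minimal Python 3 sketch. The function names `count_linear` and `count_quadratic` are illustrative and do not come from the excerpt; each simply returns how many times its innermost statement runs.

```python
def count_linear(n):
    """Count executions of the dominant operation in a single loop."""
    ops = 0
    for _ in range(n):
        ops += 1  # dominant operation: runs n times
    return ops


def count_quadratic(n):
    """Count executions of the dominant operation in two nested loops."""
    ops = 0
    for _ in range(n):
        for _ in range(n):
            ops += 1  # dominant operation: runs n * n times
    return ops


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, count_linear(n), count_quadratic(n))
```

Multiplying `n` by 10 multiplies the first count by 10 and the second by 100, which is the difference in order of magnitude that Big-O notation is meant to capture.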

Quick Sort Algorithm

Quick Sort Algorithm
Song Qin
Dept. of Computer Sciences, Florida Institute of Technology, Melbourne, FL 32901

ABSTRACT

Given an array with n elements, we want to rearrange them in ascending order. In this paper, we introduce Quick Sort, a divide-and-conquer algorithm to sort an N-element array. We evaluate the $O(N \log N)$ time complexity in the best case and $O(N^2)$ in the worst case theoretically. We also introduce a way to approach the best case.

1. INTRODUCTION

Search engines rely heavily on sorting algorithms. When you search for a keyword online, the results are brought to you sorted by the importance of the web page. Bubble, Selection and Insertion Sort all have an $O(N^2)$ time complexity, which limits their usefulness to small inputs of no more than a few thousand data points.

[...] each iteration. Repeat this on the rest of the unsorted region without the first element.

Bubble sort works as follows: keep passing through the list, exchanging adjacent elements if they are out of order; when no exchanges are required on some pass, the list is sorted.

Merge sort [4] has an $O(N \log N)$ time complexity. It divides the array into two subarrays, each with N/2 items. Conquer each subarray by sorting it. Unless the array is sufficiently small (one element left), use recursion to do this. Combine the solutions to the subarrays by merging them into a single sorted array.

In Bubble sort, Selection sort and Insertion sort, the $O(N^2)$ time complexity limits the performance when N gets very big.
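
The descriptions of bubble sort and merge sort above translate fairly directly into code. The following Python sketch is an illustration only, not the paper's implementation; the function names and the choice to return a sorted copy rather than sort in place are assumptions.

```python
def bubble_sort(items):
    """Return a sorted copy; stop as soon as a full pass makes no exchange."""
    a = list(items)
    swapped = True
    while swapped:
        swapped = False
        for i in range(len(a) - 1):
            if a[i] > a[i + 1]:
                # Adjacent pair is out of order: exchange it.
                a[i], a[i + 1] = a[i + 1], a[i]
                swapped = True
    return a


def merge_sort(items):
    """Return a sorted copy using divide, recursive conquer, and merge."""
    a = list(items)
    if len(a) <= 1:  # sufficiently small: already sorted
        return a
    mid = len(a) // 2
    left = merge_sort(a[:mid])
    right = merge_sort(a[mid:])
    # Merge the two sorted halves into a single sorted list.
    merged = []
    i = j = 0
    while i < len(left) and j < len(right):
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    merged.extend(left[i:])
    merged.extend(right[j:])
    return merged
```

Bubble sort may need up to N passes of up to N comparisons each, which is where the quadratic bound comes from; merge sort halves the problem at each level and does linear work per level.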

NP-Completeness: Reductions Tue, Nov 21, 2017

CMSC 451, Dave Mount
CMSC 451: Lecture 19, NP-Completeness: Reductions
Tue, Nov 21, 2017

Reading: Chapt. 8 in KT and Chapt. 8 in DPV. Some of the reductions discussed here are not in either text.

Recap: We have introduced a number of concepts on the way to defining NP-completeness:

Decision Problems/Language recognition: are problems for which the answer is either yes or no. These can also be thought of as language recognition problems, assuming that the input has been encoded as a string. For example:

$\mathrm{HC} = \{G \mid G \text{ has a Hamiltonian cycle}\}$
$\mathrm{MST} = \{(G, c) \mid G \text{ has a MST of cost at most } c\}$

P: is the class of all decision problems which can be solved in polynomial time. While $\mathrm{MST} \in \mathrm{P}$, we do not know whether $\mathrm{HC} \in \mathrm{P}$ (but we suspect not).

Certificate: is a piece of evidence that allows us to verify in polynomial time that a string is in a given language. For example, for the language HC above, a certificate could be a sequence of vertices along the cycle. (If the string is not in the language, the certificate can be anything.)

NP: is defined to be the class of all languages that can be verified in polynomial time. (Formally, it stands for Nondeterministic Polynomial time.) Clearly, $\mathrm{P} \subseteq \mathrm{NP}$. It is widely believed that $\mathrm{P} \neq \mathrm{NP}$.

To define NP-completeness, we need to introduce the concept of a reduction.

Reductions: The class of NP-complete problems consists of a set of decision problems (languages) (a subset of the class NP) that no one knows how to solve efficiently, but if there were a polynomial time solution for even a single NP-complete problem, then every problem in NP would be solvable in polynomial time.
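
As an illustration of certificate verification for HC, the sketch below checks a claimed Hamiltonian cycle in time polynomial in the size of the graph. The representation is an assumption made for this example: vertices are numbered `0..n-1`, the graph is simple and undirected, `edges` is a list of pairs, and the certificate `cycle` is the sequence of vertices along the cycle. The function name is hypothetical.

```python
def verify_hamiltonian_cycle(n, edges, cycle):
    """Return True if `cycle` visits every vertex 0..n-1 exactly once and
    each consecutive pair (wrapping around) is an edge of the graph."""
    if n < 3:
        return False  # a simple graph needs at least 3 vertices for a cycle
    if len(cycle) != n or set(cycle) != set(range(n)):
        return False  # not a permutation of the vertex set
    edge_set = {frozenset(e) for e in edges}  # undirected edges
    return all(
        frozenset((cycle[i], cycle[(i + 1) % n])) in edge_set
        for i in range(n)
    )
```

The verifier only checks a given certificate; it says nothing about how to find one, which is the part no one knows how to do in polynomial time.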

Computational Complexity: a Modern Approach

Computational Complexity: A Modern Approach
Draft of a book: Dated January 2007. Comments welcome!
Sanjeev Arora and Boaz Barak, Princeton University
[email protected]

Not to be reproduced or distributed without the authors' permission.

This is an Internet draft. Some chapters are more finished than others. References and attributions are very preliminary and we apologize in advance for any omissions (but hope you will nevertheless point them out to us). Please send us bugs, typos, missing references or general comments to [email protected]. Thank You!!

Chapter 9: Complexity of counting

"It is an empirical fact that for many combinatorial problems the detection of the existence of a solution is easy, yet no computationally efficient method is known for counting their number.... for a variety of problems this phenomenon can be explained." (L. Valiant, 1979)

The class NP captures the difficulty of finding certificates. However, in many contexts, one is interested not just in a single certificate, but in actually counting the number of certificates. This chapter studies #P (pronounced "sharp p"), a complexity class that captures this notion.

Counting problems arise in diverse fields, often in situations having to do with estimations of probability. Examples include statistical estimation, statistical physics, network design, and more. Counting problems are also studied in a field of mathematics called enumerative combinatorics, which tries to obtain closed-form mathematical expressions for counting problems. To give an example, in the 19th century Kirchhoff showed how to count the number of spanning trees in a graph using a simple determinant computation. Results in this chapter will show that for many natural counting problems, such efficiently computable expressions are unlikely to exist.
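
The determinant computation mentioned above is usually stated as the matrix-tree theorem: the number of spanning trees equals the determinant of the graph Laplacian with one row and the matching column deleted. The Python sketch below is one way to carry that out and is not taken from the book; the function name, the edge-list input, and the use of exact `Fraction` arithmetic with Gaussian elimination are choices made for this illustration. It assumes an undirected graph on vertices `0..n-1` with `n >= 1`.

```python
from fractions import Fraction


def count_spanning_trees(n, edges):
    """Count spanning trees via the determinant of a reduced Laplacian."""
    # Build the Laplacian: degrees on the diagonal, -1 per edge off it.
    lap = [[Fraction(0)] * n for _ in range(n)]
    for u, v in edges:
        lap[u][u] += 1
        lap[v][v] += 1
        lap[u][v] -= 1
        lap[v][u] -= 1
    # Delete the last row and column.
    m = [row[:-1] for row in lap[:-1]]
    size = n - 1
    det = Fraction(1)
    # Exact Gaussian elimination; the determinant is the signed pivot product.
    for col in range(size):
        pivot = next((r for r in range(col, size) if m[r][col] != 0), None)
        if pivot is None:
            return 0  # singular matrix: the graph is disconnected
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det  # a row swap flips the sign
        det *= m[col][col]
        for r in range(col + 1, size):
            factor = m[r][col] / m[col][col]
            for c in range(col, size):
                m[r][c] -= factor * m[col][c]
    return int(det)
```

For the complete graph on four vertices this gives 16, in agreement with Cayley's formula $n^{n-2}$. The elimination takes a polynomial number of arithmetic operations, which is what makes this counting problem unusually easy.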

Notes on Space Complexity of Integration of Computable Real

Notes on space complexity of integration of computable real functions in Ko–Friedman model
Sergey V. Yakhontov

Abstract

In the present paper it is shown that the real function $g(x) = \int_0^x f(t)\,dt$ is a linear-space computable real function on the interval $[0, 1]$ if $f$ is a linear-space computable $C^2[0, 1]$ real function on the interval $[0, 1]$, and this result does not depend on any open question in computational complexity theory. The time complexity of computable real functions and integration of computable real functions is considered in the context of the Ko–Friedman model, which is based on the notion of Cauchy functions computable by Turing machines. In addition, a real computable function $f$ is given such that $\int_0^1 f \in \mathrm{FDSPACE}(n^2)_{C[a,b]}$ but $\int_0^1 f \notin \mathrm{FP}_{C[a,b]}$ if $\mathrm{FP} \neq \#\mathrm{P}$.

Keywords: Computable real functions, Cauchy function representation, polynomial-time computable real functions, linear-space computable real functions, $C^2[0, 1]$ real functions, integration of computable real functions.

Contents

1 Introduction
1.1 CF computable real numbers and functions
1.2 Integration of FP computable real functions
2 Upper bound of the time complexity of integration
3 Function from $\mathrm{FDSPACE}(n^2)_{C[a,b]}$ that is not in $\mathrm{FP}_{C[a,b]}$ if $\mathrm{FP} \neq \#\mathrm{P}$
4 Conclusion

arXiv:1408.2364v3 [cs.CC] 17 Nov 2014

1 Introduction

In the present paper, we consider computable real numbers and functions that are represented by Cauchy functions computable by Turing machines [1]. Main results regarding computable real numbers and functions can be found in [1–4]; main results regarding computational complexity of computations on Turing machines can be found in [5].

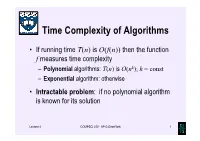

Time Complexity of Algorithms

Time Complexity of Algorithms
Lecture 4, COMPSCI 220 - AP G Gimel'farb

• If running time $T(n)$ is $O(f(n))$ then the function $f$ measures time complexity
  – Polynomial algorithms: $T(n)$ is $O(n^k)$; $k$ = const
  – Exponential algorithm: otherwise
• Intractable problem: if no polynomial algorithm is known for its solution

Time complexity growth

Number of data items processed per:

| $f(n)$ | 1 minute | 1 day | 1 year | 1 century |
|---|---|---|---|---|
| $n$ | 10 | 14,400 | $5.26 \cdot 10^6$ | $5.26 \cdot 10^8$ |
| $n \log_{10} n$ | 10 | 3,997 | 883,895 | $6.72 \cdot 10^7$ |
| $n^{1.5}$ | 10 | 1,275 | 65,128 | $1.40 \cdot 10^6$ |
| $n^2$ | 10 | 379 | 7,252 | 72,522 |
| $n^3$ | 10 | 112 | 807 | 3,746 |
| $2^n$ | 10 | 20 | 29 | 35 |

Beware exponential complexity

• If a linear $O(n)$ algorithm processes 10 items per minute, then it can process 14,400 items per day, 5,260,000 items per year, and 526,000,000 items per century
• If an exponential $O(2^n)$ algorithm processes 10 items per minute, then it can process only 20 items per day and 35 items per century...

Big-Oh vs. Actual Running Time

• Example 1: Let algorithms A and B have running times $T_A(n) = 20n$ ms and $T_B(n) = 0.1\,n \log_2 n$ ms
• In the "Big-Oh" sense, A is better than B…
• But: on which data volume can A outperform B? $T_A(n) < T_B(n)$ if $20n < 0.1\,n \log_2 n$, or $\log_2 n > 200$, that is, when $n > 2^{200} \approx 10^{60}$!
• Thus, in all practical cases B is better than A…
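
The growth figures and the A-versus-B comparison can be checked numerically. The Python sketch below is an illustration under stated assumptions, not part of the slides: `max_items` is a hypothetical helper that assumes running time is exactly proportional to $f(n)$ and that 10 items take one minute, and `t_a`, `t_b` encode the two running times from Example 1 in milliseconds.

```python
import math


def max_items(f, minutes):
    """Largest n with f(n) <= f(10) * minutes (requires minutes >= 1)."""
    budget = f(10) * minutes
    lo, hi = 10, 20
    while f(hi) <= budget:  # grow hi until it no longer fits the budget
        hi *= 2
    while hi - lo > 1:  # binary search; invariant: f(lo) <= budget < f(hi)
        mid = (lo + hi) // 2
        if f(mid) <= budget:
            lo = mid
        else:
            hi = mid
    return lo


GROWTH = {
    "n": lambda n: n,
    "n log10 n": lambda n: n * math.log10(n),
    "n^1.5": lambda n: n ** 1.5,
    "n^2": lambda n: n ** 2,
    "n^3": lambda n: n ** 3,
    "2^n": lambda n: 2 ** n,
}


def t_a(n):
    """Running time of algorithm A in ms."""
    return 20 * n


def t_b(n):
    """Running time of algorithm B in ms."""
    return 0.1 * n * math.log2(n)


if __name__ == "__main__":
    minutes_per_day = 24 * 60
    for name, f in GROWTH.items():
        print(name, max_items(f, minutes_per_day))
    print(t_b(10 ** 6) < t_a(10 ** 6))      # B is faster for a million items
    print(t_a(2 ** 201) < t_b(2 ** 201))    # A wins only beyond 2**200 items
```

With a one-day budget this reproduces the 1-day figures quoted in the slides (14,400; 3,997; 1,275; 379; 112; 20). Figures for longer periods depend on how a year is counted and how results are rounded, so they may differ from the slides in the last digit.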

Glossary of Complexity Classes

Appendix A: Glossary of Complexity Classes

Summary: This glossary includes self-contained definitions of most complexity classes mentioned in the book. Needless to say, the glossary offers a very minimal discussion of these classes, and the reader is referred to the main text for further discussion. The items are organized by topics rather than by alphabetic order. Specifically, the glossary is partitioned into two parts, dealing separately with complexity classes that are defined in terms of algorithms and their resources (i.e., time and space complexity of Turing machines) and complexity classes defined in terms of non-uniform circuits (and referring to their size and depth). The algorithmic classes include time-complexity based classes (such as P, NP, coNP, BPP, RP, coRP, PH, E, EXP and NEXP) and the space complexity classes L, NL, RL and PSPACE. The non-uniform classes include the circuit classes P/poly as well as $\mathrm{NC}^k$ and $\mathrm{AC}^k$.

Definitions and basic results regarding many other complexity classes are available at the constantly evolving Complexity Zoo.

A Preliminaries

Complexity classes are sets of computational problems, where each class contains problems that can be solved with specific computational resources. To define a complexity class one specifies a model of computation, a complexity measure (like time or space), which is always measured as a function of the input length, and a bound on the complexity of problems in the class. We follow the tradition of focusing on decision problems, but refer to these problems using the terminology of promise problems.

User's Guide for Complexity: a LATEX Package, Version 0.80

User's Guide for complexity: a LaTeX package, Version 0.80
Chris Bourke
April 12, 2007

Contents

1 Introduction
  1.1 What is complexity?
  1.2 Why a complexity package?
2 Installation
3 Package Options
  3.1 Mode Options
  3.2 Font Options
    3.2.1 The small Option
4 Using the Package
  4.1 Overridden Commands
  4.2 Special Commands
  4.3 Function Commands
  4.4 Language Commands
  4.5 Complete List of Class Commands
5 Customization
  5.1 Class Commands
  5.2 Language Commands
  5.3 Function Commands
6 Extended Example
7 Feedback
  7.1 Acknowledgements

1 Introduction

1.1 What is complexity?

complexity is a LaTeX package that typesets computational complexity classes such as P (deterministic polynomial time) and NP (nondeterministic polynomial time) as well as sets (languages) such as SAT (satisfiability). In all, over 350 commands are defined for helping you to typeset Computational Complexity constructs.

1.2 Why a complexity package?

A better question is why not? Complexity theory is a more recent, though mature area of Theoretical Computer Science. Each researcher seems to have his or her own preferences as to how to typeset Complexity Classes and has built up their own personal LaTeX commands file. This can be frustrating, to say the least, when it comes to collaborations or when one has to go through an entire series of files changing commands for compatibility or to get exactly the look they want (or what may be required).

A Short History of Computational Complexity

The Computational Complexity Column
by Lance FORTNOW
NEC Laboratories America, 4 Independence Way, Princeton, NJ 08540, USA
[email protected]
http://www.neci.nj.nec.com/homepages/fortnow/beatcs

Every third year the Conference on Computational Complexity is held in Europe and this summer the University of Aarhus (Denmark) will host the meeting July 7-10. More details at the conference web page http://www.computationalcomplexity.org

This month we present a historical view of computational complexity written by Steve Homer and myself. This is a preliminary version of a chapter to be included in an upcoming North-Holland Handbook of the History of Mathematical Logic edited by Dirk van Dalen, John Dawson and Aki Kanamori.

A Short History of Computational Complexity

Lance Fortnow, NEC Research Institute, 4 Independence Way, Princeton, NJ 08540
Steve Homer, Computer Science Department, Boston University, 111 Cummington Street, Boston, MA 02215

1 Introduction

It all started with a machine. In 1936, Turing developed his theoretical computational model. He based his model on how he perceived mathematicians think. As digital computers were developed in the 40's and 50's, the Turing machine proved itself as the right theoretical model for computation.

Quickly though we discovered that the basic Turing machine model fails to account for the amount of time or memory needed by a computer, a critical issue today but even more so in those early days of computing. The key idea to measure time and space as a function of the length of the input came in the early 1960's by Hartmanis and Stearns.

Sorting Algorithm

Sorting algorithm

In computer science, a sorting algorithm is an algorithm that puts elements of a list in a certain order. The most-used orders are numerical order and lexicographical order. Efficient sorting is important for optimizing the use of other algorithms (such as search and merge algorithms) that require sorted lists to work correctly; it is also often useful for canonicalizing data and for producing human-readable output. More formally, the output must satisfy two conditions:

1. The output is in nondecreasing order (each element is no smaller than the previous element according to the desired total order);
2. The output is a permutation, or reordering, of the input.

Since the dawn of computing, the sorting problem has attracted a great deal of research, perhaps due to the complexity of solving it efficiently despite its simple, familiar statement. For example, bubble sort was analyzed as early as 1956.[1] Although many consider it a solved problem, useful new sorting algorithms are still being invented (for example, library sort was first published in 2004). Sorting algorithms are prevalent in introductory computer science classes, where the abundance of algorithms for the problem provides a gentle introduction to a variety of core algorithm concepts, such as big O notation, divide and conquer algorithms, data structures, randomized algorithms, best, worst and average case analysis, time-space tradeoffs, and lower bounds.

Classification

Sorting algorithms used in computer science are often classified by:

• Computational complexity (worst, average and best behaviour) of element comparisons in terms of the size of the list ($n$). For typical sorting algorithms good behavior is $O(n \log n)$ and bad behavior is $O(n^2)$.
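
The two conditions on a sorting algorithm's output can be checked mechanically. The Python sketch below is an illustration; the function name `is_valid_sort` is hypothetical, and it assumes the elements are hashable and mutually comparable with `<=`.

```python
from collections import Counter


def is_valid_sort(original, output):
    """Check that `output` is nondecreasing and a permutation of `original`."""
    nondecreasing = all(
        output[i] <= output[i + 1] for i in range(len(output) - 1)
    )
    # Equal multisets: same elements with the same multiplicities.
    permutation = Counter(original) == Counter(output)
    return nondecreasing and permutation
```

Both conditions are needed: `[1, 1, 2]` is in nondecreasing order but is not a reordering of `[2, 1, 3]`, and `[2, 1, 3]` is a reordering of itself but is not in order.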

The Complexity Class NP

Quantum Many-Body Systems, Boulder School
Ashwin Nayak (U. Waterloo & Perimeter)
July 22, 2010

Quantum Complexity Theory II

In the last lecture, we saw what it means to compute a function efficiently, and saw subproblems of ISING GROUND STATE and k-SAT for which we know efficient algorithms. We also saw that the best known algorithms for these problems in their full generality are not efficient, and in fact are not qualitatively better than taking a brute-force search approach. Today, we'll develop a theory that explains to some extent the difficulty in designing efficient algorithms for these problems.

The complexity class NP

Let us revisit the language ISING GROUND STATE. If someone gave us a state σ claiming that it had energy H(σ) at most the required threshold k, we would be able to efficiently check whether that is true. In fact, whenever an instance (G,c,b,k) is in the language, there is such a state (the ground state would be one such). When the instance is not in the language, there is no such state, and any claimed state can efficiently be checked and rejected.

The problem k-SAT enjoys the same property. If we are presented with an assignment, we can check efficiently whether it satisfies the given formula or not. By definition, whenever the formula is satisfiable, there is evidence supporting this (e.g., a satisfying assignment), and when the formula is not satisfiable any claimed evidence (like a putative satisfying assignment) can be checked and rejected efficiently.

This property is common to a wide array of problems, including the travelling salesman problem, graph isomorphism, scheduling with constraints, etc., and is the defining characteristic of the complexity class NP.
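
The contrast between checking evidence and searching for it can be sketched for k-SAT in a few lines of Python. This is an illustration, not code from the lecture. The encoding is an assumption: a formula is a list of clauses, a clause is a list of nonzero integers, and the literal `v` or `-v` stands for variable `v` or its negation. Both function names are hypothetical.

```python
from itertools import product


def satisfies(clauses, assignment):
    """Check in linear time whether `assignment` (dict: variable -> bool)
    makes at least one literal true in every clause."""
    return all(
        any(assignment[abs(lit)] == (lit > 0) for lit in clause)
        for clause in clauses
    )


def brute_force_sat(clauses, num_vars):
    """Try all 2**num_vars assignments; return a satisfying one or None."""
    for values in product([False, True], repeat=num_vars):
        assignment = dict(enumerate(values, start=1))
        if satisfies(clauses, assignment):
            return assignment
    return None
```

`satisfies` runs in time proportional to the size of the formula, while `brute_force_sat` may call it exponentially many times. Membership in NP only requires the first of these to be efficient.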

6.045J Lecture 12: Complexity Theory

6.045: Automata, Computability, and Complexity Or, GITCS
Class 12
Nancy Lynch

Today: Complexity Theory

• First part of the course: Basic models of computation
  – Circuits, decision trees
  – DFAs, NFAs:
    • Restricted notion of computation: no auxiliary memory, just one pass over input.
    • Yields restricted class of languages: regular languages.
• Second part: Computability
  – Very general notion of computation.
  – Machine models like Turing machines, or programs in general (idealized) programming languages.
  – Unlimited storage, multiple passes over input, compute arbitrarily long, possibly never halt.
  – Yields large language classes: Turing-recognizable = enumerable, and Turing-decidable.
• Third part: Complexity theory
  – A middle ground.
  – Restrict the general TM model by limiting its use of resources:
    • Computing time (number of steps).
    • Space = storage (number of tape squares used).
  – Leads to interesting subclasses of the Turing-decidable languages, based on specific bounds on amounts of resources used.
  – Compare:
    • Computability theory answers the question "What languages are computable (at all)?"
    • Complexity theory answers "What languages are computable with particular restrictions on amount of resources?"

Complexity Theory

• Topics
  – Examples of time complexity analysis (informal).
  – Asymptotic function notation: O, o, Ω, Θ
  – Time complexity classes
  – P, polynomial time
  – Languages not in P
  – Hierarchy theorems
• Reading:
  – Sipser, Sections 7.1, 7.2, and a bit from 9.1.
• Next:
  – Midterm, then Section 7.3 (after the break).

Examples of time complexity analysis

• Consider a basic 1-tape Turing machine M that decides membership in the language $L = \{0^k 1^k \mid k \geq 0\}$:
  – M first checks that its input is in 0*1*, using one left-to-right pass.
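
The excerpt breaks off after the first step of M. The Python sketch below imitates one common way to finish the job on a single tape: repeatedly cross off one 0 and one 1 until a symbol runs out. The crossing-off phase is an assumption based on the standard textbook treatment, not something stated in the excerpt, and the function name is hypothetical. The list `tape` stands in for the machine's tape.

```python
def decides_0k1k(w):
    """Return True if w is in {0^k 1^k | k >= 0}, imitating a 1-tape TM."""
    tape = list(w)
    # Pass 1: one left-to-right scan checking that the input is in 0*1*.
    seen_one = False
    for symbol in tape:
        if symbol == "1":
            seen_one = True
        elif symbol != "0" or seen_one:
            return False  # foreign symbol, or a 0 after a 1
    # Assumed phase 2: each round crosses off one 0 and one 1.
    while "0" in tape:
        if "1" not in tape:
            return False  # more 0s than 1s
        tape[tape.index("0")] = "x"
        tape[tape.index("1")] = "x"
    return "1" not in tape  # leftover 1s mean more 1s than 0s
```

Each round corresponds to a sweep over the tape costing a number of steps linear in the input length n, and there are at most n/2 rounds, so this strategy uses on the order of $n^2$ steps on a 1-tape machine.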