Images on the Net!

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Release 9.6.1 James R. Barlow

ocrmypdf Documentation Release 9.6.1 James R. Barlow 2020-03-03 Contents 1 Introduction 3 2 Release notes 7 3 Installing OCRmyPDF 37 4 PDF optimization 49 5 Installing additional language packs 51 6 Installing the JBIG2 encoder 53 7 Cookbook 55 8 OCRmyPDF Docker image 61 9 Advanced features 65 10 Batch processing 71 11 PDF security issues 79 12 Common error messages 83 13 Using the OCRmyPDF API 85 14 Contributing guidelines 89 15 Indices and tables 91 Index 93 OCRmyPDF adds an optical character recognition (OCR) text layer to scanned PDF files, allowing them to be searched. PDF is the best format for storing and exchanging scanned documents. Unfortunately, PDFs can be difficult to modify. OCRmyPDF makes it easy to apply image processing and OCR to existing PDFs. CHAPTER 1 Introduction OCRmyPDF is a Python 3 application and library that adds OCR layers to PDFs. 1.1 About OCR Optical character recognition is technology that converts images of typed or handwritten text, such as in a scanned document, to computer text that can be selected, searched and copied. OCRmyPDF uses Tesseract, the best available open source OCR engine, to perform OCR. 1.2 About PDFs PDFs are page description files that attempt to preserve a layout exactly. They contain vector graphics that can contain raster objects such as scanned images. Because PDFs can contain multiple pages (unlike many image formats) and can contain fonts and text, they are a good format for exchanging scanned documents. -

XMP SPECIFICATION PART 3 STORAGE IN FILES Copyright © 2016 Adobe Systems Incorporated

XMP SPECIFICATION PART 3 STORAGE IN FILES Copyright © 2016 Adobe Systems Incorporated. All rights reserved. Adobe XMP Specification Part 3: Storage in Files NOTICE: All information contained herein is the property of Adobe Systems Incorporated. No part of this publication (whether in hardcopy or electronic form) may be reproduced or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written consent of Adobe Systems Incorporated. Adobe, the Adobe logo, Acrobat, Acrobat Distiller, Flash, FrameMaker, InDesign, Illustrator, Photoshop, PostScript, and the XMP logo are either registered trademarks or trademarks of Adobe Systems Incorporated in the United States and/or other countries. MS-DOS, Windows, and Windows NT are either registered trademarks or trademarks of Microsoft Corporation in the United States and/or other countries. Apple, Macintosh, Mac OS and QuickTime are trademarks of Apple Computer, Inc., registered in the United States and other countries. UNIX is a trademark in the United States and other countries, licensed exclusively through X/Open Company, Ltd. All other trademarks are the property of their respective owners. This publication and the information herein is furnished AS IS, is subject to change without notice, and should not be construed as a commitment by Adobe Systems Incorporated. Adobe Systems Incorporated assumes no responsibility or liability for any errors or inaccuracies, makes no warranty of any kind (express, implied, or statutory) with respect to this publication, and expressly disclaims any and all warranties of merchantability, fitness for particular purposes, and noninfringement of third party rights. Contents 1 Embedding XMP metadata in application files . -

MetaDefender Core v4.13.1

MetaDefender Core v4.13.1 © 2018 OPSWAT, Inc. All rights reserved. OPSWAT®, Metadefender™ and the OPSWAT logo are trademarks of OPSWAT, Inc. All other trademarks, trade names, service marks, service names, and images mentioned and/or used herein belong to their respective owners. Table of Contents About This Guide 13 Key Features of Metadefender Core 14 1. Quick Start with Metadefender Core 15 1.1. Installation 15 Operating system invariant initial steps 15 Basic setup 16 1.1.1. Configuration wizard 16 1.2. License Activation 21 1.3. Scan Files with Metadefender Core 21 2. Installing or Upgrading Metadefender Core 22 2.1. Recommended System Requirements 22 System Requirements For Server 22 Browser Requirements for the Metadefender Core Management Console 24 2.2. Installing Metadefender 25 Installation 25 Installation notes 25 2.2.1. Installing Metadefender Core using command line 26 2.2.2. Installing Metadefender Core using the Install Wizard 27 2.3. Upgrading MetaDefender Core 27 Upgrading from MetaDefender Core 3.x 27 Upgrading from MetaDefender Core 4.x 28 2.4. Metadefender Core Licensing 28 2.4.1. Activating Metadefender Licenses 28 2.4.2. Checking Your Metadefender Core License 35 2.5. Performance and Load Estimation 36 What to know before reading the results: Some factors that affect performance 36 How test results are calculated 37 Test Reports 37 Performance Report - Multi-Scanning On Linux 37 Performance Report - Multi-Scanning On Windows 41 2.6. Special installation options 46 Use RAMDISK for the temp directory 46 3. Configuring Metadefender Core 50 3.1. Management Console 50 3.2. -

Flip64 CML Developer's Guide

Flip64 CML Developer Guide Vantage 8.0 ComponentPac 8.0.8 December 2020 302698 Copyrights and Trademark Notices Copyright © 12/16/20. Telestream, LLC and its Affiliates. All rights reserved. Telestream products are covered by U.S. and foreign patents, issued and pending. Information in this publication supersedes that in all previously published material. Specification and price change privileges reserved. No part of this publication may be reproduced, transmitted, transcribed, altered, or translated into any languages without the written permission of Telestream. Information and specifications in this document are subject to change without notice and do not represent a commitment on the part of Telestream. TELESTREAM is a registered trademark of Telestream, LLC. All other trade names referenced are the service marks, trademarks, or registered trademarks of their respective companies. Telestream. Telestream, CaptionMaker, Cerify, Episode, Flip4Mac, FlipFactory, Flip Player, Gameshow, GraphicsFactory, Lightspeed, MetaFlip, Post Producer, Prism, ScreenFlow, Split-and-Stitch, Switch, Tempo, TrafficManager, Vantage, VOD Producer, and Wirecast are registered trademarks and Aurora, Cricket, e-Captioning, Inspector, iQ, iVMS, iVMS ASM, MacCaption, Pipeline, Sentry, Surveyor, Vantage Cloud Port, CaptureVU, Cerify, FlexVU, Prism, Sentry, Stay Genlock, Aurora, and Vidchecker are trademarks of Telestream, LLC and its Affiliates. All other trademarks are the property of their respective owners. Adobe. Adobe® HTTP Dynamic Streaming Copyright © 2014 Adobe Systems. All rights reserved. Apple. QuickTime, MacOS X, and Safari are trademarks of Apple, Inc. Bonjour, the Bonjour logo, and the Bonjour symbol are trademarks of Apple, Inc. Avid. Portions of this product Copyright 2012 Avid Technology, Inc. -

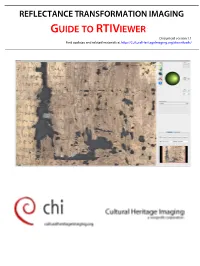

Guide to RTIViewer

REFLECTANCE TRANSFORMATION IMAGING GUIDE TO RTIVIEWER Document version 1.1 Find updates and related materials at http://CulturalHeritageImaging.org/downloads/ 2013 Cultural Heritage Imaging and Visual Computing Lab, ISTI - Italian National Research Council. All rights reserved. This work is licensed under the Creative Commons Attribution-Noncommercial-No Derivative Works 3.0 United States License. To view a copy of this license, visit http://creativecommons.org/licenses/by-nc-nd/3.0/us/ or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco California 94105 USA. Attribution should be made to Cultural Heritage Imaging, http://CulturalHeritageImaging.org. The RTIViewer software described in this guide is made available under the GNU General Public License version 3. Acknowledgments The RTIViewer tool, designed for cultural heritage and natural science applications, was primarily developed by the Italian National Research Council's (CNR) Institute for Information Science and Technology's (ISTI) Visual Computing Laboratory (http://vcg.isti.cnr.it). The work was financed by Cultural Heritage Imaging (http://CulturalHeritageImaging.org) with majority funding from the US Institute of Museum and Library Services' (IMLS) National Leadership Grant Program (Award Number LG-25-06-0107-06) in partnership with the University of Southern California's West Semitic Research Project. RTIViewer also contains significant software and design contributions from the University of California Santa Cruz, the Universidade do Minho in Portugal, Tom Malzbender of HP Labs, and Cultural Heritage Imaging. Core research under the IMLS grant to develop the multi-view RTI technique along with the RTI and MVIEW file formats, and hemispherical harmonics fitter was largely performed at UC Santa Cruz under Professor James Davis, in collaboration with Tom Malzbender of HP Labs and staff of Cultural Heritage Imaging. -

GraphicConverter 7.4.1

User’s Interim Manual GraphicConverter 7.4.1 Programmed by Thorsten Lemke Manual by Hagen Henke This manual is under construction as many details of GraphicConverter have been changed in version 7. For updated versions please visit : http://www.lemkesoft.com/manuals-books.html Sales: Lemke Software GmbH PF 6034 D-31215 Peine Tel: +49-5171-72200 Fax:+49-5171-72201 E-mail: [email protected] In the PDF version of this manual, you can click the page numbers in the contents and index to jump to that particular page. © 2001-2011 Elbsand Publishers, Hagen Henke. All rights reserved. www.elbsand.de Sales: Lemke Software GmbH, PF 6034, D-31215 Peine; www.lemkesoft.com This book including all parts is protected by copyright. It may not be reproduced in any form outside of copyright laws without permission from the author. This applies in particular to photocopying, translation, copying onto microfilm and storage and processing on electronic systems. All due care was taken during the compilation of this book. However, errors cannot be completely ruled out. The author and distributors therefore accept no responsibility for any program or documentation errors or their consequences. This manual was written on a Mac using Adobe FrameMaker 8. Almost all software, hardware and other products or company names mentioned in this manual are registered trademarks and should be respected as such. The following list is not necessarily complete. Apple, the Apple logo, and Macintosh are trademarks of Apple Computer, Inc., registered in the United States and other countries. Mac and the Mac OS logo are trademarks of Apple Computer, Inc. -

HoudahGeo 6 User Guide

HoudahGeo 6 User Guide houdah.com/houdahGeo Table of Contents 1. Introduction 4 2. What’s New in HoudahGeo 6? 5 3. Quick Start Guide 7 3.1. Step 1: Load Images & Track Logs 7 3.2. Step 2: Geocode Your Images 9 3.3. Step 3: Export Geotags & Publish 11 4. Step by Step - In-depth 13 4.1. Step 1: Load 13 4.1.1. Adding Images to Your HoudahGeo Project 13 4.1.2. Camera Setup 16 4.1.3. Add GPS Data 21 4.2. Step 2: Process 24 4.2.1. The Map 24 4.2.2. The Images List 25 4.2.3. The Inspector Pane 27 4.2.4. Lift & Stamp 30 4.2.5. Geocoding Options 31 4.2.6. Adding Additional Metadata Information 39 4.2.7. Places 41 4.3. Step 3: Output 43 4.3.1. EXIF / XMP Export & Notify Library 43 4.3.2. Google Earth Export 49 4.3.3. KML Export 51 4.3.4. Flickr Upload 54 4.3.5. CSV and GPX Export 55 5. HoudahGeo Preferences 56 5.1. General Preferences 56 5.2. Advanced Preferences 56 6. Frequently Asked Questions 57 6.1. Does HoudahGeo support RAW files? 57 6.2. Does HoudahGeo support XMP sidecar files? 57 6.3. I don't own a GPS. Can I still use HoudahGeo? 57 6.4. Does HoudahGeo work with DNG files? 57 6.5. Can you recommend a particular GPS device? 58 6.6. How do I ensure the camera's clock is accurate? 58 6.7. -

autoBWF Release 3.4.0

autoBWF Release 3.4.0 Feb 19, 2020 Contents 1 Contents 3 1.1 Installation 3 1.2 Usage 4 1.3 Configuration 6 1.4 Auxiliary tools 9 2 Known issues 11 autoBWF is a Python/PyQt5 GUI for embedding internal metadata in WAVE audio files using the Broadcast Wave standard, FADGI BWFMetaEdit, and XMP. Unlike the existing BWFMetaEdit GUI, autoBWF is extremely opinionated and will automatically generate metadata content based on file naming conventions, system metadata, and pre-configured repository defaults. In addition, it can copy metadata fields from a template file to avoid having to enter the same information multiple times for derivative files of the same physical instantiation. Also included are auxiliary command line scripts which simplify the creation of derivative files and provide for export of embedded metadata to PBCore and CSV files. CHAPTER 1 Contents 1.1 Installation 1.1.1 Prerequisites Python 3 This code requires Python 3.6 or later. If you are running on Linux or Mac OS, then it is almost certainly already installed. If you are running on Windows, you can install Python using instructions on any number of web sites (such as this one). You may wish to configure a virtual environment within which to install and run autoBWF. bwfmetaedit If needed, download and run the installer for the BWFMetaEdit CLI (Command Line Interface) appropriate to your operating system from mediaarea.net. Note that having the BWFMetaEdit GUI installed is not sufficient. -

Mbox® Media Server User Manual

SOFTWARE VERSION v4.4.2 WWW.PRG.COM MBOX® MEDIA SERVER USER MANUAL (rev. M) AutoPar®, Bad Boy®, Best Boy®, Followspot Controller™, Mbox®, Mini Node™, Nocturne®, Series 400®, ReNEW®, Super Node™, UV Bullet™, V476®, V676®, Virtuoso®, and White Light Bullet™ are trademarks of Production Resource Group, LLC, registered in the U.S. and other countries. Mac®, QuickTime® and FireWire® are registered trademarks of Apple Computer, Inc. Mbox uses NDI® technology. NDI® is a registered trademark of NewTek, Inc. Mbox uses the Hap family of codecs. Hap was created by Tom Butterworth and VidVox LLC. Mbox makes use of the Syphon Framework. Syphon was created by bangnoise (Tom Butterworth) & vade (Anton Marini). All other brand names which may be mentioned in this manual are trademarks or registered trademarks of their respective companies. This manual is for informational use only and is subject to change without notice. Please check www.prg.com for the latest version. PRG assumes no responsibility or liability for any claims resulting from errors or inaccuracies that may appear in this manual. Mbox® Media Server v4.4.2 User Manual Version as of: February 12, 2020 rev M PRG part number: 02.9800.0001.442 Production Resource Group Dallas Office 8617 Ambassador Row, Suite 120 Dallas, Texas 75247 www.prg.com Mbox® Media Server User Manual ©2020 Production Resource Group, LLC. All Rights Reserved. TABLE OF CONTENTS Chapter 1. Overview General Overview Features -

Digital Photography Meta Data Overview

Digital Photography Meta Data Overview Table of Contents 1 Introduction 2 2 Meta Data Defined 2 3 Meta Data Location 5 4 Viewing Meta Data 6 5 Meta Data Types and Purposes 7 6 The Meta Data Challenge 8 7 The Six Causes of Confusion 8 8 The Customer's Perspective 16 9 The Software Developer’s Perspective 17 10 Recommendations 17 11 Summary 18 12 Resources -

Using PitchBlue with Vantage

Vantage Application Note Using PitchBlue with Vantage This App Note applies to Vantage versions 6.3 and later Overview 2 Setting Registry Key 3 Workflow Example 5 Captioning Setup 12 Copyright and Trademark Notice 19 Limited Warranty and Disclaimers 19 139919 September 2014 Overview Telestream’s Vantage TrafficManager (license required) works with PitchBlue to automate the way TV stations ingest and process HD and SD syndicated programming sent from major media companies to their PitchBlue server. The Vantage workflow automation engine automates the entire process from ingest to delivery to the on-air server. Vantage can provide a single collection point for ingest, aggregation, and review of incoming HD syndicated programming. Powerful transcoding automates direct file transfer from the PitchBlue server to the on-air server at suitable bit rates and SD or HD resolution. This eliminates dubbing to tape and the need to manually process content. The PitchBlue server can be monitored for the arrival of new video content, the master control operator

SARIF V2.1.0

Static Analysis Results Interchange Format (SARIF) Version 2.1.0 OASIS Standard 27 March 2020 This stage: https://docs.oasis-open.org/sarif/sarif/v2.1.0/os/sarif-v2.1.0-os.docx (Authoritative) https://docs.oasis-open.org/sarif/sarif/v2.1.0/os/sarif-v2.1.0-os.html https://docs.oasis-open.org/sarif/sarif/v2.1.0/os/sarif-v2.1.0-os.pdf Previous stage: https://docs.oasis-open.org/sarif/sarif/v2.1.0/cos01/sarif-v2.1.0-cos01.docx (Authoritative) https://docs.oasis-open.org/sarif/sarif/v2.1.0/cos01/sarif-v2.1.0-cos01.html https://docs.oasis-open.org/sarif/sarif/v2.1.0/cos01/sarif-v2.1.0-cos01.pdf Latest stage: https://docs.oasis-open.org/sarif/sarif/v2.1.0/sarif-v2.1.0.docx (Authoritative) https://docs.oasis-open.org/sarif/sarif/v2.1.0/sarif-v2.1.0.html https://docs.oasis-open.org/sarif/sarif/v2.1.0/sarif-v2.1.0.pdf Technical Committee: OASIS Static Analysis Results Interchange Format (SARIF) TC Chairs: David Keaton ([email protected]), Individual Member Luke Cartey ([email protected]), Semmle Editors: Michael C. Fanning ([email protected]), Microsoft Laurence J. Golding ([email protected]), Microsoft Additional artifacts: This prose specification is one component of a Work Product that also includes: • JSON schemas: https://docs.oasis-open.org/sarif/sarif/v2.1.0/cos02/schemas/ Abstract: This document defines a standard format for the output of static analysis tools. The format is referred to as the “Static Analysis Results Interchange Format” and is abbreviated as SARIF.