Instance Optimal Join Size Estimation

Recommended publications

Links to the Past User Research Rage 2

ALL FORMATS. LIFTING THE LID ON VIDEO GAMES. Issue 9 £3 wfmag.cc

User Research: Game design’s best-kept secret?
Links to the past: The art of making great Zelda-likes
Rage 2: Playtesting the neon apocalypse
Sea Change: Rhianna Pratchett rewrites the adventure game in Lost Words

Subscribe today: 12 weeks for £12*. Visit wfmag.cc/12weeks to order. UK Price. 6 issue introductory offer.

The future of games: subscription-based?

How many subscription services are you shelling out for each month? Spotify and Apple Music provide the tunes while we work; perhaps a bit of TV drama on the lunch break via Now TV or ITV Player; then back home to watch a movie in the evening, courtesy of Netflix, Amazon Video, Hulu… The way we consume entertainment has shifted dramatically in the last several years, and it’s becoming increasingly the case that the average person doesn’t

upfront, would be devastating for video games. Triple-A titles still dominate the market in terms of raw sales and player numbers, so while the largest publishers may prosper in a Spotify world, all your favourite indie and mid-tier developers would no doubt flounder. Put it this way: if Spotify is currently paying artists 1 per 20,000 listens, what sort of terrible deal are game developers working from their bedroom going to get? And before you think to yourself, “This would never happen – it already is.

MIKE ROSE: Mike Rose is the founder of No More Robots, the publishing label behind titles -

1300 Games in 1 Games List

1300 Games in 1 Games List 1. 1942 – (Shooting) [609] 2. 1941 : COUNTER ATTACK – (Shooting) [608] 3. 1943 : THE BATTLE OF MIDWAY – (Shooting) [610] 4. 1943 KAI : MIDWAY KAISEN – (Shooting) [611] 5. 1944 : THE LOOP MASTER – (Shooting) [521] 6. 1945KIII – (Shooting) [604] 7. 19XX : THE WAR AGAINST DESTINY – (Shooting) [612] 8. 2020 SUPER BASEBALL – (Sport) [839] 9. 3 COUNT BOUT – (Fighting) [70] 10. 4 EN RAYA – (Puzzle) [1061] 11. 4 FUN IN 1 – (Shooting) [714] 12. 4-D WARRIORS – (Shooting) [575] 13. STREET : A DETECTIVE STORY – (Action) [303] 14. 88GAMES – (Sport) [881] 15. 9 BALL SHOOTOUT – (Sport) [850] 16. 99 : THE LAST WAR – (Shooting) [813] 17. D. 2083 – (Shooting) [768] 18. ACROBAT MISSION – (Shooting) [678] 19. ACROBATIC DOG-FIGHT – (Shooting) [735] 20. ACT-FANCER CYBERNETICK HYPER WEAPON – (Action) [320] 21. ACTION HOLLYWOOOD – (Action) [283] 22. AERO FIGHTERS – (Shooting) [673] 23. AERO FIGHTERS 2 – (Shooting) [556] 24. AERO FIGHTERS 3 – (Shooting) [557] 25. AGGRESSORS OF DARK KOMBAT – (Fighting) [64] 26. AGRESS – (Puzzle) [1054] 27. AIR ATTACK – (Shooting) [669] 28. AIR BUSTER : TROUBLE SPECIALTY RAID UNIT – (Shooting) [537] 29. AIR DUEL – (Shooting) [686] 30. AIR GALLET – (Shooting) [613] 31. AIRWOLF – (Shooting) [541] 32. AKKANBEDER – (Shooting) [814c] 33. ALEX KIDD : THE LOST STARS – (Puzzle) [1248] 34. ALIBABA AND 40 THIEVES – (Puzzle) [1149] 35. ALIEN CHALLENGE – (Fighting) [87] 36. ALIEN SECTOR – (Shooting) [718] 37. ALIEN STORM – (Action) [322] 38. ALIEN SYNDROME – (Action) [374] 39. ALIEN VS. PREDATOR – (Action) [251] 40. ALIENS – (Action) [373] 41. ALLIGATOR HUNT – (Action) [278] 42. ALPHA MISSION II – (Shooting) [563] 43. ALPINE SKI – (Sport) [918] 44. AMBUSH – (Shooting) [709] 45. -

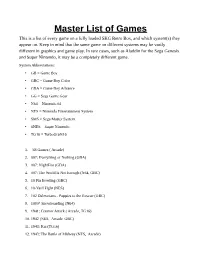

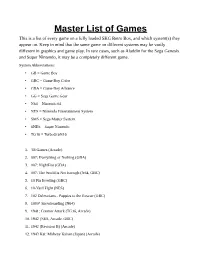

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (Arcade, TG16) 10. 1942 (NES, Arcade, GBC) 11. 1943: Kai (TG16) 12. 1943: The Battle of Midway (NES, Arcade) 13. 1944: The Loop Master (Arcade) 14. 1999: Hore, Mitakotoka! Seikimatsu (NES) 15. 19XX: The War Against Destiny (Arcade) 16. 2 on 2 Open Ice Challenge (Arcade) 17. 2010: The Graphic Action Game (Colecovision) 18. 2020 Super Baseball (Arcade, SNES) 19. 21-Emon (TG16) 20. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 21. 3 Count Bout (Arcade) 22. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 23. 3-D Tic-Tac-Toe (Atari 2600) 24. 3-D Ultra Pinball: Thrillride (GBC) 25. 3-D WorldRunner (NES) 26. 3D Asteroids (Atari 7800) 27. -

[Japan] SALA GIOCHI ARCADE 1000 Miglia

NEW PLATINUM PI4 EDITION BOARD

The following list covers most of the titles emulated by the NEW PLATINUM Pi4 board (20,000).

- Computer games (Amiga, Commodore, PC, etc.) require a computer keyboard and sometimes a USB mouse connected to the console, since those systems worked with mouse and keyboard.
- Games that require spinners (e.g. Arkanoid), steering wheels (racing games), or light guns (e.g. Duck Hunt) may not be controllable with a joystick; they need dedicated peripherals, which cannot currently be configured.
- Games that require analog controllers (PlayStation, Nintendo 64, etc.) may not be controllable with single-stick control panels; they need a joypad with analog sticks (sold separately).
- This list applies to the NEW PLATINUM EDITION board based on Raspberry Pi4.
- The emulators for 3D systems (PlayStation, Nintendo64, Dreamcast) and computers (Amiga, Commodore) are present ONLY on the NEW PLATINUM Pi4, not on the Pi3 Plus and Gold versions.
- The Atomiswave and Sega Naomi emulators (Virtua Tennis, Virtua Striker, etc.) are present ONLY on the Pi4 boards.
- The PLUS Pi3B+ version emulates only 550 ARCADE titles, randomly selected at the time of purchase and not modifiable.

Last updated 2 September 2020

| GAME NAME | EMULATOR |
| --- | --- |
| 005 | SALA GIOCHI ARCADE |
| 1 On 1 Government [Japan] | SALA GIOCHI ARCADE |
| 1000 Miglia: Great 1000 Miles Rally | SALA GIOCHI ARCADE |
| 10-Yard Fight | SALA GIOCHI ARCADE |
| 18 Holes Pro Golf | SALA GIOCHI ARCADE |
| 1941: Counter Attack | SALA GIOCHI ARCADE |
| 1942 | SALA GIOCHI ARCADE |
| 1943 Kai: Midway Kaisen | SALA GIOCHI ARCADE |
| 1943: The Battle of Midway [Europe] | SALA GIOCHI ARCADE |
| 1944 : The Loop Master [USA] | SALA GIOCHI ARCADE |
| 1945k III | SALA GIOCHI ARCADE |
| 19XX : The War Against Destiny [USA] | SALA GIOCHI ARCADE |
| 2 On 2 Open Ice Challenge | SALA GIOCHI ARCADE |
| 4-D Warriors | SALA GIOCHI ARCADE |

64th. -

Master List of Games This Is a List of Every Game on a Fully Loaded SKG Retro Box, and Which System(S) They Appear On

Master List of Games This is a list of every game on a fully loaded SKG Retro Box, and which system(s) they appear on. Keep in mind that the same game on different systems may be vastly different in graphics and game play. In rare cases, such as Aladdin for the Sega Genesis and Super Nintendo, it may be a completely different game. System Abbreviations: • GB = Game Boy • GBC = Game Boy Color • GBA = Game Boy Advance • GG = Sega Game Gear • N64 = Nintendo 64 • NES = Nintendo Entertainment System • SMS = Sega Master System • SNES = Super Nintendo • TG16 = TurboGrafx16 1. '88 Games (Arcade) 2. 007: Everything or Nothing (GBA) 3. 007: NightFire (GBA) 4. 007: The World Is Not Enough (N64, GBC) 5. 10 Pin Bowling (GBC) 6. 10-Yard Fight (NES) 7. 102 Dalmatians - Puppies to the Rescue (GBC) 8. 1080° Snowboarding (N64) 9. 1941: Counter Attack (TG16, Arcade) 10. 1942 (NES, Arcade, GBC) 11. 1942 (Revision B) (Arcade) 12. 1943 Kai: Midway Kaisen (Japan) (Arcade) 13. 1943: Kai (TG16) 14. 1943: The Battle of Midway (NES, Arcade) 15. 1944: The Loop Master (Arcade) 16. 1999: Hore, Mitakotoka! Seikimatsu (NES) 17. 19XX: The War Against Destiny (Arcade) 18. 2 on 2 Open Ice Challenge (Arcade) 19. 2010: The Graphic Action Game (Colecovision) 20. 2020 Super Baseball (SNES, Arcade) 21. 21-Emon (TG16) 22. 3 Choume no Tama: Tama and Friends: 3 Choume Obake Panic!! (GB) 23. 3 Count Bout (Arcade) 24. 3 Ninjas Kick Back (SNES, Genesis, Sega CD) 25. 3-D Tic-Tac-Toe (Atari 2600) 26. 3-D Ultra Pinball: Thrillride (GBC) 27. -

Newagearcade.Com 5000 in One Arcade Game List!

Newagearcade.com 5,000 In One arcade game list! 1. AAE|Armor Attack 2. AAE|Asteroids Deluxe 3. AAE|Asteroids 4. AAE|Barrier 5. AAE|Boxing Bugs 6. AAE|Black Widow 7. AAE|Battle Zone 8. AAE|Demon 9. AAE|Eliminator 10. AAE|Gravitar 11. AAE|Lunar Lander 12. AAE|Lunar Battle 13. AAE|Meteorites 14. AAE|Major Havoc 15. AAE|Omega Race 16. AAE|Quantum 17. AAE|Red Baron 18. AAE|Ripoff 19. AAE|Solar Quest 20. AAE|Space Duel 21. AAE|Space Wars 22. AAE|Space Fury 23. AAE|Speed Freak 24. AAE|Star Castle 25. AAE|Star Hawk 26. AAE|Star Trek 27. AAE|Star Wars 28. AAE|Sundance 29. AAE|Tac/Scan 30. AAE|Tailgunner 31. AAE|Tempest 32. AAE|Warrior 33. AAE|Vector Breakout 34. AAE|Vortex 35. AAE|War of the Worlds 36. AAE|Zektor 37. Classic Arcades|'88 Games 38. Classic Arcades|1 on 1 Government (Japan) 39. Classic Arcades|10-Yard Fight (World, set 1) 40. Classic Arcades|1000 Miglia: Great 1000 Miles Rally (94/07/18) 41. Classic Arcades|18 Holes Pro Golf (set 1) 42. Classic Arcades|1941: Counter Attack (World 900227) 43. Classic Arcades|1942 (Revision B) 44. Classic Arcades|1943 Kai: Midway Kaisen (Japan) 45. Classic Arcades|1943: The Battle of Midway (Euro) 46. Classic Arcades|1944: The Loop Master (USA 000620) 47. Classic Arcades|1945k III 48. Classic Arcades|19XX: The War Against Destiny (USA 951207) 49. Classic Arcades|2 On 2 Open Ice Challenge (rev 1.21) 50. Classic Arcades|2020 Super Baseball (set 1) 51. -

Arcade Rewind 3500 Games List 190818.Xlsx

ArcadeRewind.com.au [email protected] Facebook.com/ArcadeRewind Tel: 1300 272233 3500 Games List No. 3/4 Player Games 1 2 On 2 Open Ice Challenge (rev 1.21) 114 Metamorphic Force (ver EAA) 2 Alien Storm (US,3 Players,FD1094 317-0147) 115 Mexico 86 3 Alien vs. Predator 116 Michael Jackson's Moonwalker (US,FD1094) 4 All American Football (rev E) 117 Michael Jackson's Moonwalker (World) 5 Arabian Fight (World) 118 Minesweeper (4-Player) 6 Arabian Magic <World> 119 Muscle Bomber Duo - Ultimate Team Battle <World> 7 Armored Warriors 120 Mystic Warriors (ver EAA) 8 Armored Warriors (Euro Phoenix) 121 NBA Hangtime (rev L1.1 04/16/96) 9 Asylum <prototype> 122 NBA Jam (rev 3.01 04/07/93) 10 Atomic Punk (US) 123 NBA Jam T.E. Nani Edition 11 B.Rap Boys 124 NBA Jam TE (rev 4.0 03/23/94) 12 Back Street Soccer 125 Neck-n-Neck (4 Players) 13 Barricade 126 Night Slashers (Korea Rev 1.3) 14 Battle Circuit <Japan 970319> 127 Ninja Baseball Batman <US> 15 Battle Toads 128 Ninja Kids <World> 16 Beast Busters (World) 129 Nitro Ball 17 Blazing Tornado 130 Numan Athletics (World) 18 Bomber Lord (bootleg) 131 Off the Wall (2/3-player upright) 19 Bomber Man World <World> 132 Oriental Legend <ver.112, Chinese Board> 20 Brute Force 133 Oriental Legend <ver.112> 21 Bucky O'Hare <World version> 134 Oriental Legend <ver.126> 22 Bullet (FD1094 317-0041) 135 Oriental Legend Special 23 Cadillacs and Dinosaurs <World> 136 Oriental Legend Special Plus 24 Cadillacs Kyouryuu-Shinseiki <Japan> 137 Paddle Mania 25 Captain America 138 Pasha Pasha 2 26 Captain America -

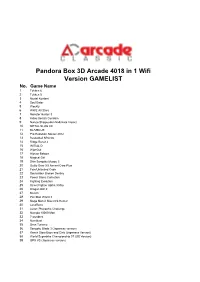

Pandora Box 3D Arcade 4018 in 1 Wifi Version GAMELIST No

Pandora Box 3D Arcade 4018 in 1 Wifi Version GAMELIST No. Game Name 1 Tekken 6 2 Tekken 5 3 Mortal Kombat 4 Soul Eater 5 Weekly 6 WWE All Stars 7 Monster Hunter 3 8 Kidou Senshi Gundam 9 Naruto Shippuuden Naltimate Impact 10 METAL SLUG XX 11 BLAZBLUE 12 Pro Evolution Soccer 2012 13 Basketball NBA 06 14 Ridge Racer 2 15 INITIAL D 16 WipeOut 17 Hitman Reborn 18 Magical Girl 19 Shin Sangoku Musou 5 20 Guilty Gear XX Accent Core Plus 21 Fate/Unlimited Code 22 Soulcalibur Broken Destiny 23 Power Stone Collection 24 Fighting Evolution 25 Street Fighter Alpha 3 Max 26 Dragon Ball Z 27 Bleach 28 Pac Man World 3 29 Mega Man X Maverick Hunter 30 LocoRoco 31 Luxor: Pharaoh's Challenge 32 Numpla 10000-Mon 33 7 wonders 34 Numblast 35 Gran Turismo 36 Sengoku Blade 3 (Japanese version) 37 Ranch Story Boys and Girls (Japanese Version) 38 World Superbike Championship 07 (US Version) 39 GPX VS (Japanese version) 40 Super Bubble Dragon (European Version) 41 Strike 1945 PLUS (US version) 42 Element Monster TD (Chinese Version) 43 Ranch Story Honey Village (Chinese Version) 44 Tianxiang Tieqiao (Chinese version) 45 Energy gemstone (European version) 46 Turtledove (Chinese version) 47 Cartoon hero VS Capcom 2 (American version) 48 Death or Life 2 (American Version) 49 VR Soldier Group 3 (European version) 50 Street Fighter Alpha 3 51 Street Fighter EX 52 Bloody Roar 2 53 Tekken 3 54 Tekken 2 55 Tekken 56 Mortal Kombat 4 57 Mortal Kombat 3 58 Mortal Kombat 2 59 The overlord continent 60 Oda Nobunaga 61 Super kitten 62 The battle of steel benevolence 63 Mech -

3390 in 1 Games List

3390 in 1 Games List www.arcademultigamesales.co.uk Game Name Tekken 3 Tekken 2 Tekken Street Fighter Alpha 3 Street Fighter EX Bloody Roar 2 Mortal Kombat 4 Mortal Kombat 3 Mortal Kombat 2 Gear Fighter Dendoh Shiritsu Justice gakuen Turtledove (Chinese version) Super Capability War 2012 (US Edition) Tigger's Honey Hunt(U) Batman Beyond - Return of the Joker Bio F.R.E.A.K.S. Dual Heroes Killer Instinct Gold Mace - The Dark Age War Gods WWF Attitude Tom and Jerry in Fists of Furry 1080 Snowboarding Big Mountain 2000 Air Boarder 64 Tony Hawk's Pro Skater Aero Gauge Carmageddon 64 Choro Q 64 Automobili Lamborghini Extreme-G Jeremy McGrath Supercross 2000 Mario Kart 64 San Francisco Rush - Extreme Racing V-Rally Edition 99 Wave Race 64 Wipeout 64 All Star Tennis '99 Centre Court Tennis Virtual Pool 64 NBA Live 99 Castlevania Castlevania: Legacy of 1 Darkness Army Men - Sarge's Heroes Blues Brothers 2000 Bomberman Hero Buck Bumble Bug's Life,A Bust A Move '99 Chameleon Twist Chameleon Twist 2 Doraemon 2 - Nobita to Hikari no Shinden Gex 3 - Deep Cover Gecko Ms. Pac-Man Maze Madness O.D.T (Or Die Trying) Paperboy Rampage 2 - Universal Tour Super Mario 64 Toy Story 2 Aidyn Chronicles Charlie Blast's Territory Perfect Dark Star Fox 64 Star Soldier - Vanishing Earth Tsumi to Batsu - Hoshi no Keishousha Asteroids Hyper 64 Donkey Kong 64 Banjo-Kazooie The Legend of Zelda: Majora's Mask The Legend of Zelda: Ocarina of Time The King of Fighters '97 Practice The King of Fighters '98 Practice The King of Fighters '98 Combo Practice The King of Fighters -

4539-Arcadegamelist.Pdf

1 005 80 3D_Tekken (WORLD) Ver. B 157 Alien Storm (Japan, 2 Players) 2 1 on 1 Government (Japan) 81 3D_Tekken <World ver.c> 158 Alien Storm (US,3 Players) 3 10 Yard Fight <Japan> 82 3D_Tekken 2 (JP) Ver. B 159 Alien Storm (World, 2 Players) 4 1000 Miglia:Great 1000 Miles Rally (94/07/18) 83 3D_Tekken 2 (World) Ver. A 160 Alien Syndrome 5 10-Yard Fight ‘85 (US, Taito license) 84 3D_Tekken 2 <World ver.b> 161 Alien Syndrome (set 6, Japan, new) 6 18 Holes Pro Golf (set 1) 85 3D_Tekken 3 (JP) Ver. A 162 Alien vs. Predator (Euro 940520 Phoenix Edition) 7 1941: Counter Attack (USA 900227) 86 3D_Tetris The Grand Master 163 Alien vs. Predator (Euro 940520) 8 1941:Counter Attack (World 900227) 87 3D_Tondemo Crisis 164 Alien vs. Predator (Hispanic 940520) 9 1942 (Revision B) 88 3D_Toshinden 2 165 Alien vs. Predator (Japan 940520) 10 1942 (Tecfri PCB, bootleg?) 89 3D_Xevious 3D/G (JP) Ver. A 166 Alien vs. Predator (USA 940520) 11 1943 Kai:Midway Kaisen (Japan) 90 3X3 Maze (Enterprise) 167 Alien3: The Gun (World) 12 1943: Midway Kaisen (Japan) 91 3X3 Maze (Normal) 168 Aliens <Japan> 13 1943:The Battle of Midway (Euro) 92 4 En Raya 169 Aliens <US> 14 1944: The Loop Master (USA Phoenix Edition) 93 4 Fun in 1 170 Aliens <World set 1> 15 1944:The Loop Master (USA 000620) 94 4-D Warriors 171 Aliens <World set 2> 16 1945 Part-2 (Chinese hack of Battle Garegga) 95 600 172 All American Football (rev D, 2 Players) 17 1945k III (newer, OPCX2 PCB) 96 64th. -

Stephen M. Cabrinety Collection in the History of Microcomputing, Ca

http://oac.cdlib.org/findaid/ark:/13030/kt529018f2 No online items Guide to the Stephen M. Cabrinety Collection in the History of Microcomputing, ca. 1975-1995 Processed by Stephan Potchatek; machine-readable finding aid created by Steven Mandeville-Gamble Department of Special Collections Green Library Stanford University Libraries Stanford, CA 94305-6004 Phone: (650) 725-1022 Email: [email protected] URL: http://library.stanford.edu/spc © 2001 The Board of Trustees of Stanford University. All rights reserved. Special Collections M0997 1 Guide to the Stephen M. Cabrinety Collection in the History of Microcomputing, ca. 1975-1995 Collection number: M0997 Department of Special Collections and University Archives Stanford University Libraries Stanford, California Contact Information Department of Special Collections Green Library Stanford University Libraries Stanford, CA 94305-6004 Phone: (650) 725-1022 Email: [email protected] URL: http://library.stanford.edu/spc Processed by: Stephan Potchatek Date Completed: 2000 Encoded by: Steven Mandeville-Gamble © 2001 The Board of Trustees of Stanford University. All rights reserved. Descriptive Summary Title: Stephen M. Cabrinety Collection in the History of Microcomputing, Date (inclusive): ca. 1975-1995 Collection number: Special Collections M0997 Creator: Cabrinety, Stephen M. Extent: 815.5 linear ft. Repository: Stanford University. Libraries. Dept. of Special Collections and University Archives. Language: English. Access Access restricted; this collection is stored off-site in commercial storage from which material is not routinely paged. Access to the collection will remain restricted until such time as the collection can be moved to Stanford-owned facilities. Any exemption from this rule requires the written permission of the Head of Special Collections. -

Welltris__FRENCH-GERMAN

Welltris

English Page 2, Français Page 13, Deutsch Seite 22, Italiano Pagina 31

© DOKA 1989. All rights reserved. Licensed to Bullet-Proof Software. Adaptation made by INFOGRAMES with permission of B.P.S.

Welltris ENGLISH

I. INTRODUCTION

WELLTRIS: another challenge to the western world from the Russian game master Alexey Pajitnov, the author of TETRIS. Alexey Pajitnov, the famous mathematician and member of the Soviet Academy of Sciences, has the privilege to program and design his games on his own personal computer, which is still rare in the Soviet Union. Pajitnov hopes to travel some day, including trips to Japan, the United States, western Europe... The international success of his games can only help his hopes come true.

II. LOADING AND START UP

NOTE: You may connect a joystick.

COMMODORE 64

Disk version:
- If you use a C128, type GO 64 and confirm by pressing Y;
- Then, on C64 as well, insert the disk in the drive;
- Type LOAD "WELLTRIS",8
- Press the RETURN key;
- When the program has loaded, the message "READY" will display;
- Type RUN and press RETURN.

Cassette version:
- If you use a C128, type GO 64 and confirm by pressing Y;
- Then, on C64 as well, insert the cassette in the recorder;
- Press the keys SHIFT and RUN/STOP simultaneously;
- Press the PLAY button on the recorder;
- The program will load and start automatically.