The Effect of Contract Year Performance on Free Agent Salary in Major League Baseball

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Conference & Region Recognition Voting Process

Conference & Region Recognition Voting and Viewing Process Varsity Rosters and Stats: All varsity rosters and stats need to be entered into MaxPreps.com (with the exception of beach volleyball, which needs to have rosters entered in the AIA Admin). The coach and AD have logins that allow them to do just that, accessible at admin.aiaonline.org. Rosters and stats for the following sports must be entered into MaxPreps.com in order to power the Conference and Region recognition process: • Baseball • Basketball • Football • Soccer • Softball • Volleyball • Beach Volleyball The following rosters are required to be entered into the AIA System for Section Player of the Year, Section Coach of the Year, and Division Coach of the Year voting: • Badminton • Tennis Region Voting (excluding Badminton and Tennis): The region reps from the conference committees will be responsible for entering the results of the region voting for display on www.azpreps365.com. The region reps can designate this to another individual; however, the region rep will need to notify the AIA of who that rep would be. In some sports, where regions differ from the master regions, the conference chair will need to name the region reps for each region in that sport. Those sports include: 2A 11-man football, 1A 8-man football, 2A fall soccer boys, 2A fall soccer girls, 3A winter soccer boys, 3A winter soccer girls, 6A and 5A volleyball boys. Each region may meet as a whole to determine the region teams. If the region is unable to meet as a whole, or a member of that region is unable to meet, the region representative shall take those votes via email and bring them to the region meeting for consideration.

Youth Baseball Player Pitch Rules 4th-6th Grade NATIONAL LEAGUE

MJCCA Youth Baseball Player Pitch Rules 4th-6th Grade NATIONAL LEAGUE: PLAYER PITCH BASEBALL (Revised 8/08) The baseball used in this league will be a regular baseball. Time Limit: No new inning after 1 hour 30 minutes. If the Home Team is ahead when time expires, it is up to the Visiting Team to decide if they would like to finish the inning. THE FIELD Standard Little League Field The distance between bases will be seventy feet (70'). The distance from the pitching rubber to home plate will be approximately forty-seven feet (47'). INDIVIDUAL PLAYING TIME 1. All players will bat continuously through the batting order for the entire game. 2. No player will remain out of the game for two (2) consecutive innings during a game. Ex.: If player #5 bats second in the batting order but does not start in the field in the first inning, player #5 must play in the field in the second inning. PITCHING RULES 1. Any team member may pitch, subject to the other restrictions of the pitching rules. 2. A pitcher shall not pitch any more than THREE (3) continuous innings per game. 3. Delivering one (1) pitch to a batter shall be considered as pitching one (1) inning. 4. A coach shall be entitled to request time, on defense, to talk to his pitcher once per inning. On the second visit in the same inning, he shall be required to remove the pitcher from the mound, but the pitcher can be placed at any other position in the field.

The Business of Minor League Baseball: Amateur Eligibility Rules, 56 Case W

Case Western Reserve Law Review, Volume 56, Issue 3 (2006). The Business of Minor League Baseball: Amateur Eligibility Rules. Peter A. Carfagna, John Farrell, and Mike Hazen. Recommended Citation: Peter A. Carfagna, John Farrell, and Mike Hazen, The Business of Minor League Baseball: Amateur Eligibility Rules, 56 Case W. Res. L. Rev. 695 (2006). Available at: https://scholarlycommons.law.case.edu/caselrev/vol56/iss3/15 I. A BRIEF OVERVIEW OF KEY RULES In this presentation, we will explore the eligibility rules of professional baseball. Generally, we will look into when and why a young man should choose to turn professional. I will begin by throwing out a few provocative rules, and then we will see how the rules line up against the reality of an individual player's ability. The draft is covered in the official rules of Major League Baseball (MLB) under Rule 4. The draft is held every June by conference call among the thirty major league clubs, and the draft lasts fifty rounds.

TOURNAMENT RULES Major League Rules with MANY Modifications

COOPERSTOWN BASEBALL WORLD - OFFICIAL TOURNAMENT RULES Major League Rules with MANY Modifications Cut-off date for each age group: May 1. Lead offs are allowed for all ages. Designated Hitter is allowed (10-man line-up may be used) for any player. The DH is “locked” with the positional player he is DH’ing. Extra Hitter(s) may be used; however, they must always bat in the tenth (eleventh, twelfth, etc.) spot. EH’s may also enter the field defensively after the first inning for any position player. Re-Entry: allowed for all age groups. Speed Up Rule: run for catcher & pitcher at any time. We will use the last batted out. If there is no batted out for that inning, it must be a sub. If batting all and there is no out for that inning, you will use the last batter up in that specific inning. Balk rules: 12U - 1 warning per pitcher during pool play and tournament; 13U - one warning per pitcher during pool play, then straight baseball during tournament; 14U/15U/16U - straight baseball the whole time (no warnings). The 3rd to 1st pick-off move is not allowed. No pitching limitations (coach’s discretion). BE SMART! All games will be six (6) innings in length or 2-hour time limit. No new inning will be started 5 minutes before 2-hour time limit is up. There is no time limit in the first round of the tournament (Wednesday) or in the winner’s bracket. Time limits are put back on for all consolation bracket games. The “Mercy Rule” will be used (if one team leads another by 10 runs or more after only 4 innings of play, the game is over).

Roy Hobbs World Series Eligibility Guidelines Eligibility for RHWS, Age Limits & Playoffs

Roy Hobbs World Series Eligibility Guidelines Eligibility for RHWS, age limits & playoffs Here are Roy Hobbs Baseball’s eligibility rules for the Roy Hobbs World Series (updates are shaded): Eligibility Requirements Players: Players are eligible to compete at the RHWS, provided they: • Satisfy the age and professional player eligibility requirements explained below. • Complete the registration process and execute a player “Registration Contract & Waiver” as required on-line or otherwise, certified by the team’s manager/coach, which affirms that both the player and the player’s manager/coach will be bound by the rules, regulations and governance of Roy Hobbs Baseball. • Complete in-person check-in, including presentation of appropriate government-issued photo identification before participating in any games. • Have his/her RHWS I.D. card received at check-in registration in his/her possession at all games. Players without their cards are ineligible to participate. • Have not violated any RHWS rules or been engaged in unsportsmanlike or inappropriate behavior in a prior RHWS. Managers: All managers must sign a statement provided by Roy Hobbs Baseball at team check-in, certifying that (i) they are not aware of any intentional, unintentional or inadvertent violations of the RHWS rules by any player on the team or themselves, (ii) they are not aware that there are any ineligible players on their roster, and (iii) they will abide by all eligibility rules, interpretations or disciplinary decisions rendered by Roy Hobbs Baseball during the RHWS. Managers are subject to the same penalties as ineligible or disqualified players. Age Divisions: • All age minimums are determined on a calendar year basis; players must reach the division minimum by Dec.

Use of FLEX/DP

Use of FLEX/DP: The DP can be listed in any of the nine spots in the batting order. The player listed tenth in the lineup, who plays defense for the DP but can also play offense for the DP, is called the FLEX. A. A designated player (DP) may be used for any defensive player provided it is made known prior to the start of the game. The DP must be indicated on the line-up as one of the nine hitters in the batting order. B. The name of the player for whom the DP is batting (FLEX) will be placed in the 10th position in the line-up. C. The DP and any substitutes for the DP must remain in the same position in the batting order for the entire game. The FLEX, and any substitutes for the FLEX, must enter the game in the 10th position in the line-up. D. The DP may be substituted for at any time either by a pinch hitter or pinch runner. This player becomes the DP and has all the options of the DP position. The starting DP and a substitute for the DP can never be in the game at the same time. E. The FLEX may be substituted for at any time. This player becomes the FLEX and has all the options of the FLEX position. The starting FLEX and a substitute for the FLEX can never be in the game at the same time. F. The starting DP, or her substitute, may be replaced by the FLEX as a hitter or as a runner.

Peanut Rules 2020

PEANUT RULES 2020 2/26/20 GENERAL RULES FOR ALL DIVISIONS GENERAL PURPOSE To inspire the youth of Daviess Co., regardless of race, creed or color, to practice the highest ideals of sportsmanship and physical fitness. To bring our youth closer together through a common interest in fellowship and athletic competition. To encourage adults to behave in an adult-like manner when supervising the youth. To keep the welfare of the youth foremost and free from any adult compulsion for power and glory. To instill in the youth of Daviess Co. morality and fellowship with their fellow teammates. GENERAL RULES Rule #1 Once a player has been drafted or picked as a coach’s option, that player MUST remain on that team until the player moves up to a higher division. An exception to this rule: a returning player who signs up after the draft will go back into the pool to be placed on the team with the fewest players. NOTE: Only the head coach has a pick as a coach option; there are no coach options for the assistant coaches. Rule #2 Team roster size may not exceed 12 players or fall below 8 players for league play (except for special consideration and permission by the WDC Cal Ripken board, which may allow a maximum roster of 14 players). A team with fewer than 8 players shall forfeit that game. A team can play with 8 players. The ninth batter is an out unless it is the third out. If additional players arrive, they will go to the bottom of the batting line-up.

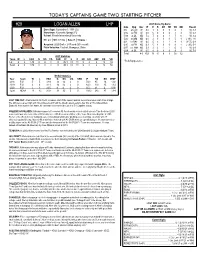

Today's Captains Game Two Starting Pitcher

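TODAY’S CAPTAINS GAME TWO STARTING PITCHER: #29 LOGAN ALLEN, LHP

Born (Age): September 5, 1998 (22)
Hometown: Altamonte Springs, FL
School: Florida International University
HT: 6’0” │ WT: 170 lbs │ Bats: R │ Throws: L
Acquired: 2020 Draft – 2nd Round (56th overall)
Pitch Selection: Fastball, Changeup, Slider

2021 Game-By-Game

| Date | Opp | Dec | IP | H | R | ER | HR | SO | BB | Result |
|---|---|---|---|---|---|---|---|---|---|---|
| 5/5 | at LAN | W | 5.0 | 1 | 0 | 0 | 0 | 8 | 1 | W, 3-1 |
| 5/12 | vs FW | W | 5.0 | 5 | 0 | 0 | 0 | 7 | 2 | W, 5-3 |
| 5/19 | at GL | ND | 5.2 | 7 | 2 | 2 | 1 | 7 | 0 | W, 3-2 |
| 5/26 | at WM | ND | 6.0 | 2 | 0 | 0 | 0 | 5 | 1 | L (10), 1-0 |
| 6/3 | vs DAY | W | 6.0 | 5 | 3 | 3 | 1 | 8 | 1 | W (7), 6-3 |
| 6/10 | at FW | ND | 6.1 | 5 | 4 | 3 | 0 | 8 | 4 | L (10), 5-4 |
| 6/17 | vs WM | ND | 5.1 | 2 | 2 | 1 | 1 | 6 | 2 | W, 6-3 |
| 6/24 | at GL | W | 6.0 | 4 | 0 | 0 | 0 | 9 | 1 | W, 7-1 |
| Total | | 4-0 | 45.1 | 31 | 11 | 9 | 3 | 58 | 12 | |

2021 Statistics (* Relief Appearance)

| Team | W | L | ERA | G | GS | CG | SHO | IP | H | R | ER | HR | HBP | BB | SO |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| LC | 4 | 0 | 1.79 | 8 | 8 | 0 | 0 | 45.1 | 31 | 11 | 9 | 3 | 2 | 12 | 58 |
| Total | 4 | 0 | 1.79 | 8 | 8 | 0 | 0 | 45.1 | 31 | 11 | 9 | 3 | 2 | 12 | 58 |

NCAA Statistics

| Year | Team | W | L | ERA | G | GS | CG | SHO | IP | SO | BB | WHIP |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 2018 | FIU | 5 | 5 | 3.89 | 13 | 12 | 0 | 0 | 74.0 | 85 | 16 | 1.22 |
| 2019 | FIU | 4 | 6 | 3.11 | 14 | 14 | 0 | 0 | 84.0 | 120 | 25 | 1.14 |
| 2020 | FIU | 2 | 1 | 2.45 | 4 | 4 | 0 | 0 | 25.2 | 41 | 6 | 0.90 |
| Total | NCAA | 11 | 12 | 3.33 | 31 | 30 | 0 | 0 | 183.2 | 246 | 47 | 1.14 |

LAST TIME OUT: Allen turned in his fourth scoreless start of the season and his second scoreless start of 6+ innings.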

Baseball Operating Code Updated: January 11, 2021 Recent: 1.1.2 + 2.1.4

Pennsylvania State Athletic Conference Baseball Operating Code Updated: January 11, 2021 Recent: 1.1.2 + 2.1.4 1. ORGANIZATION STRUCTURE 1.1 CONFERENCE STRUCTURE 1.1.1 The Conference consists of an Eastern Division and a Western Division. 1.1.1.1 East: Bloomsburg, East Stroudsburg, Kutztown, Lock Haven, Mansfield, Millersville, Shepherd, Shippensburg, West Chester. 1.1.1.2 West: California, Clarion, Gannon, IUP, Mercyhurst, Pitt-Johnstown, Seton Hill, Slippery Rock. 1.1.2 Conference play shall be conducted with each Western Division team playing four games against each opponent in their respective Division for a total of 28 games. The Eastern Division teams will play three games against each opponent in their respective division for a total of 24 games. (In 2021 Eastern Division teams will be scheduled for FOUR games against each divisional opponent for a total of 32 games). All mandated conference contests shall count in divisional standings. 1.2 CONFERENCE COMMITTEES 1.2.1 Sports Chairperson: An athletic administrator from a member school shall be selected chairperson to the baseball coaches. Duties: a. Annual Meeting/Special Meetings - Prepare and distribute an agenda, oversee recording and distribution of minutes. b. Present approved recommendations to the Athletics Administrators for action. c. Attend the championship and administrate any conference issues that arise at the site. d. Assist conference office with editing of the sport operating code. 1.3 MEETINGS: The annual meeting of baseball coaches shall be at the discretion of the conference office. 1.4 PERSONNEL 1.4.1 Staff Limitations a. 1 head coach b. 1 full-time assistant (coaching contract with benefits) or part-time assistant or volunteer c.

Questions & Answers Regarding Offensive and Defensive Charged Conferences in Baseball

Offensive and Defensive Charged Conferences 1. Is a defensive timeout charged when the catcher goes out to the mound after the last pitch thrown between innings? Updated timing rules require the pitcher to be ready to throw the first pitch of the inning prior to the expiration of 120 seconds between innings. The catcher could visit the pitcher briefly within that time frame without a charged conference as long as the timing rule is not violated, or could ask the umpire for extra “time” to visit the mound after the last pitch and be charged one of the team’s 6 conferences. 2. Is a defensive timeout charged if the catcher goes out to the mound after an umpire or hitter is hit with a pitch or foul ball? No, during a time out for an injury to a player or umpire, another player visiting the pitcher is not a charged conference, provided that the visit does not otherwise delay the return to game action. This has typically been a courtesy visit to give the umpire/catcher/batter time to collect themselves. 3. Can the offensive base runners, batter and coaches confer during a pitching change without being charged a timeout? Yes, the offense can get together during a pitching change as that is not a charged conference. However, the base runners must be back at their bases prior to the last warm-up pitch so the game can be started without waiting for them to return. If they are slow returning and the game is delayed waiting for them to return to their bases, it would be a charged offensive conference.

Minor League Baseball Announces Pace of Play Regulations for 2018

For Immediate Release March 14, 2018 Minor League Baseball Announces Pace of Play Regulations for 2018 Minor League Baseball to begin extra innings with runners on base, mound visits to be limited, and Triple-A and Double-A to use 15-second pitch timers with no runners on base ST. PETERSBURG, Fla. — Minor League Baseball today announced rule and procedure changes aimed at reducing the length of extra innings games and the number of mound visits during a game throughout Minor League Baseball. In addition, the Triple-A and Double-A levels will use a 15-second pitch clock with no runners on base. The procedures, created in partnership with Major League Baseball, aim to reduce the number of pitchers used in extra innings and the issues created by extra innings games, including, but not limited to, shortages of pitchers in the days to follow, the use of position players as pitchers and the transferring of players between affiliates due to pitching shortages caused by extra innings games. “We believe these changes to extra innings will enhance the fans’ enjoyment of the game and will become something that the fans will look forward to on nights where the game is tied late in the contest,” said Minor League Baseball President Pat O’Conner. “Player safety has been an area of growing concern for our partners at the Major League Baseball level, and the impact that lengthy extra innings games has on pitchers, position players and an entire organization was something that needed to be addressed.” EXTRA INNINGS: At all levels of Minor League Baseball, extra innings will begin with a runner on second base.

World Series & Super Regional Championship Rules

2020 World Series & Super Regional Championship Rules Updated 6/18/2020 Continental Amateur Baseball Association 3211 Heathland Way, Mt. Pleasant, SC 29466 (843) 860-1568 www.cababaseball.com (INDEX ON PAGE 21) SECTION 2 Team Eligibility Requirements You may purchase a copy of The National High School Federation Rules by contacting the Customer Service Department, PO Box 361246, Indianapolis, Indiana 46236-5324. 1-800-776-3462 Order Online: http://www.nfhs.com/c-192-baseball.aspx 1.1 Team Registration Fee Any team participating in any CABA sanctioned league, tournament, CABA World Series or Super Regional Championship, as well as leagues and programs choosing to have affiliation with CABA, will pay a registration fee of $25. Their registration will be verified by receipt of a registration number, which they will be required to provide as proof of registration when entering any CABA sanctioned event at any level. The form may be downloaded from the CABA website, www.cababaseball.com, under Forms, or the fee can be paid online at the same website. 2.1 Team Composition Independent teams, league teams, all star teams, travel teams, select teams or any other combination of players are eligible to participate in CABA events subject to the guidelines listed in 2.9 below. World Series or Super Regional Championship Host teams are permitted to participate in other levels of CABA league or tournament play. 2.2 Team Roster Due Before First Tournament Game The Team roster submitted at the managers meeting is final and official. Teams not attending the managers meeting must submit their roster to the World Series/Super Regional Championship Director prior to the managers meeting.