IRIS Special 2020-2: Artificial Intelligence in the Audiovisual Sector

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

German Jews in the United States: a Guide to Archival Collections

GERMAN HISTORICAL INSTITUTE, WASHINGTON, DC REFERENCE GUIDE 24 GERMAN JEWS IN THE UNITED STATES: A GUIDE TO ARCHIVAL COLLECTIONS Contents: INTRODUCTION & ACKNOWLEDGMENTS; ABOUT THE EDITOR; ARCHIVAL COLLECTIONS (arranged alphabetically by state and then city). ALABAMA, Montgomery: 1. Alabama Department of Archives and History. ARIZONA, Phoenix: 2. Arizona Jewish Historical Society. ARKANSAS, Little Rock: 3. Arkansas History Commission and State Archives. CALIFORNIA, Berkeley: 4. University of California, Berkeley: Bancroft Library, Archives; 5. Judah L. Magnes Museum: Western Jewish History Center. Beverly Hills: 6. Acad. of Motion Picture Arts and Sciences: Margaret Herrick Library, Special Coll. Davis: 7. University of California at Davis: Shields Library, Special Collections and Archives. Long Beach: 8. California State Library, Long Beach: Special Collections. Los Angeles: 9. John F. Kennedy Memorial Library: Special Collections; 10. UCLA Film and Television Archive; 11. USC: Doheny Memorial Library, Lion Feuchtwanger Archive

Privacy & Confidence

ESSAYS ON 21ST-CENTURY PR ISSUES PRIVACY AND CONFIDENCE photo: “Anonymous Hollywood Scientology protest” by Jason Scragz http://www.flickr.com/photos/scragz/2340505105/ PAUL SEAMAN Privacy and Confidence Paul Seaman Part I Google’s Eric Schmidt says we should be able to reinvent our identity at will. That’s daft. But he’s got a point. Part II What are we PRs to do with the troublesome issue of privacy? We certainly have an interest in leading this debate. So what kind of resolution should we be advising our clients to seek in this brave new world? Well, perhaps we should be telling them to win public confidence. Part III Blowing the whistle on WikiLeaks - the case against transparency in defence of trust. This essay appeared as three posts on paulseaman.eu between February and August 2010. Musing on PR, privacy and confidence – Part I (screengrab of: http://en.wikipedia.org/wiki/Streisand_effect) Google’s Eric Schmidt says we should be able to reinvent our identity at will. That’s daft. But he’s got a point. Most personalities possess more than one side. PRs are well aware of the “Streisand Effect”, coined by Techdirt’s Mike Masnick1, as the exposure in public of everything you try hardest to keep private, particularly pictures. […] recently to the WSJ: “I actually think most people don’t want Google to answer their questions,” he elaborates. “They want Google to tell them what they should be doing next. “Let’s say you’re walking down the street.

DEEP SEA LEBANON RESULTS of the 2016 EXPEDITION EXPLORING SUBMARINE CANYONS Towards Deep-Sea Conservation in Lebanon Project

DEEP SEA LEBANON RESULTS OF THE 2016 EXPEDITION EXPLORING SUBMARINE CANYONS Towards Deep-Sea Conservation in Lebanon Project March 2018 Citation: Aguilar, R., García, S., Perry, A.L., Alvarez, H., Blanco, J., Bitar, G. 2018. 2016 Deep-sea Lebanon Expedition: Exploring Submarine Canyons. Oceana, Madrid. 94 p. DOI: 10.31230/osf.io/34cb9 Based on an official request from Lebanon’s Ministry of Environment back in 2013, Oceana has planned and carried out an expedition to survey Lebanese deep-sea canyons and escarpments. Cover: Cerianthus membranaceus © OCEANA All photos are © OCEANA Index: Introduction; Methods (ROV surveys; Infaunal surveys; Oceanographic measurements; Data analyses; Collaborations); Results (Habitat types: Coralligenous habitat and rhodolith/maërl beds, Sandy bottoms, Sandy-muddy bottoms, Rocky bottoms, Canyon heads, Bathyal muds; Species: Fishes, Crustaceans, Echinoderms, Cnidarians, Sponges, Molluscs, Bryozoans, Brachiopods, Tunicates, Annelids, Foraminifera, Algae); Areas (Tarablus/Batroun; Jounieh; St. George; Beirut; Sayniq); Human impacts (Marine litter; Fisheries; Other observations); Discussion and conclusions (Main expedition findings; Key community types and their ecological importance); Annex 1 (Table A1. List of species identified; Table A2. List of threatened species identified; Figure A1.); Annex 2 (Methodology for assessing relative conservation interest of survey areas)

Human detection of machine manipulated media (arXiv:1907.05276v1 [cs.CV], 6 Jul 2019)

Human detection of machine manipulated media Matthew Groh1, Ziv Epstein1, Nick Obradovich1,2, Manuel Cebrian∗1,2, and Iyad Rahwan∗1,2 1Media Laboratory, Massachusetts Institute of Technology, Cambridge, Massachusetts, USA 2Center for Humans & Machines, Max Planck Institute for Human Development, Berlin, Germany Abstract Recent advances in neural networks for content generation enable artificial intelligence (AI) models to generate high-quality media manipulations. Here we report on a randomized experiment designed to study the effect of exposure to media manipulations on over 15,000 individuals’ ability to discern machine-manipulated media. We engineer a neural network to plausibly and automatically remove objects from images, and we deploy this neural network online with a randomized experiment where participants can guess which image out of a pair of images has been manipulated. The system provides participants feedback on the accuracy of each guess. In the experiment, we randomize the order in which images are presented, allowing causal identification of the learning curve surrounding participants’ ability to detect fake content. We find sizable and robust evidence that individuals learn to detect fake content through exposure to manipulated media when provided iterative feedback on their detection attempts. Over a succession of only ten images, participants increase their rating accuracy by over ten percentage points. Our study provides initial evidence that human ability to detect fake, machine-generated content may increase alongside the prevalence of such media online. Introduction The recent emergence of artificial intelligence (AI) powered media manipulations has widespread societal implications for journalism and democracy [1], national security [2], and art [3]. AI models have the potential to scale misinformation to unprecedented levels by creating various forms of synthetic media [4]. -

GLOBAL CENSORSHIP Shifting Modes, Persisting Paradigms

ACCESS TO KNOWLEDGE RESEARCH SERIES GLOBAL CENSORSHIP Shifting Modes, Persisting Paradigms edited by Pranesh Prakash, Nagla Rizk, Carlos Affonso Souza COPYRIGHT PAGE © 2015 Information Society Project, Yale Law School; Access to Knowledge for Development Centre, American University, Cairo; and Instituto de Technologia & Sociedade do Rio. This work is published subject to a Creative Commons Attribution-NonCommercial (CC-BY-NC) 3.0 International Public Licence. Copyright in each chapter of this book belongs to its respective author(s). You are encouraged to reproduce, share, and adapt this work, in whole or in part, including in the form of creating translations, as long as you attribute the work and the appropriate author(s), or, if for the whole book, the editors. Text of the licence is available at <https://creativecommons.org/licenses/by-nc/3.0/legalcode>. For permission to publish commercial versions of such chapter on a stand-alone basis, please contact the author, or the Information Society Project at Yale Law School for assistance in contacting the author. Front cover image: "Documents seized from the U.S. Embassy in Tehran", a public domain work created by employees of the Central Intelligence Agency / embassy of the United States of America in Tehran, depict-

Editorial Algorithms

March 26, 2021 Editorial Algorithms The next AI frontier in News and Information National Press Club Journalism Institute Housekeeping - You will receive slides after the presentation - Interactive polls throughout the session - Use QA tool on Zoom to ask questions - If you still have questions, reach out to Francesco directly [email protected] or connect on twitter @fpmarconi What will you learn during today’s session 1. How AI is being used and will be used by journalists and communicators, and what that means for how we work 2. The ethics of AI, particularly related to diversity, equity and representation 3. The role of AI in the spread of misinformation and ‘fake news’ 4. How journalists and communications professionals can avoid pitfalls while taking advantage of the possibilities AI provides to develop new ways of telling stories and connecting with audiences Poll time! What’s your overall feeling about AI and news? A. It’s the future of news and communications! B. I’m cautiously optimistic C. I’m cautiously pessimistic D. It’s bad news for journalism Journalism was designed to solve information scarcity Newspapers were established to provide increasingly literate audiences with information. Journalism was originally designed to solve the problem that information was not widely available. The news industry went through a process of standardization so it could reliably source and produce information more efficiently. This led to the development of very structured ways of writing such as the “inverted pyramid” method, to organizing workflows around beats, and to planning coverage based on news cycles. A new reality: information abundance The flood of information that humanity is now exposed to presents a new challenge that cannot be solved exclusively with the traditional methods of journalism.

Guadamuz2013.Pdf (5.419Mb)

This thesis has been submitted in fulfilment of the requirements for a postgraduate degree (e.g. PhD, MPhil, DClinPsychol) at the University of Edinburgh. Please note the following terms and conditions of use: • This work is protected by copyright and other intellectual property rights, which are retained by the thesis author, unless otherwise stated. • A copy can be downloaded for personal non-commercial research or study, without prior permission or charge. • This thesis cannot be reproduced or quoted extensively from without first obtaining permission in writing from the author. • The content must not be changed in any way or sold commercially in any format or medium without the formal permission of the author. • When referring to this work, full bibliographic details including the author, title, awarding institution and date of the thesis must be given. Networks, Complexity and Internet Regulation: Scale-Free Law Andres Guadamuz Submitted in accordance with the requirements for the degree of Doctor of Philosophy by Publication (PhD) The University of Edinburgh February 2013 The candidate confirms that the work submitted is his/her own and that appropriate credit has been given where reference has been made to the work of others. © Andrés Guadamuz 2013 Some rights reserved. This work is Licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 3.0 Unported License. Contents: Figures and Tables (Figures; Tables); Abbreviations; Cases; Acknowledgments; License (Attribution-NonCommercial-NoDerivs 3.0 Unported); 1. Introduction (A short history of psychohistory; Objectives; Some notes on methodology); 2. The Science of Complex Networks

My Name Is Julian Sanchez, and I

September 24, 2020 The Honorable Jan Schakowsky, Chairwoman, Subcommittee on Consumer Protection & Commerce, Committee on Energy & Commerce, U.S. House of Representatives, Washington, DC 20515 The Honorable Cathy McMorris Rodgers, Ranking Member, Subcommittee on Consumer Protection & Commerce, Committee on Energy & Commerce, U.S. House of Representatives, Washington, DC 20515 Dear Chairwoman Schakowsky, Ranking Member McMorris Rodgers, and Members of the Subcommittee: My name is Julian Sanchez, and I’m a senior fellow at the Cato Institute who focuses on issues at the intersection of technology and civil liberties, above all privacy and freedom of expression. I’m grateful to the committee for the opportunity to share my views on this important topic. New communications technologies, especially when they enable horizontal connections between individuals, are inherently disruptive. In 16th century Europe, the advent of movable type printing fragmented a once-unified Christendom into a dizzying array of varied, and often violently opposed, sects. In the 1980s, one popular revolution against authoritarian rule in the Philippines was spurred on by broadcast radio, and decades later, another was enabled by mobile phones and text messaging. Whenever a technology reduces the friction of transmitting ideas or connecting people to each other, the predictable result is that some previously marginal ideas, identities, and groups will be empowered. While this is typically a good thing on net, the effect works just as well for ideas and groups that had previously been marginal for excellent reasons. Periods of transition from lower to higher connectivity are particularly fraught. Urbanization and trade in Europe’s early modern period brought with them, among their myriad benefits, cyclical outbreaks of plague, as pathogens that might once have burned out harmlessly found conditions amenable to rapid spread and mutation.

PLAYNOTES Season: 43 Issue: 05

PLAYNOTES SEASON: 43 ISSUE: 05 BACKGROUND INFORMATION PORTLAND STAGE The Theater of Maine INTERVIEWS & COMMENTARY AUTHOR BIOGRAPHY Discussion Series The Artistic Perspective, hosted by Artistic Director Anita Stewart, is an opportunity for audience members to delve deeper into the themes of the show through conversation with special guests. A different scholar, visiting artist, playwright, or other expert will join the discussion each time. The Artistic Perspective discussions are held after the first Sunday matinee performance. Page to Stage discussions are presented in partnership with the Portland Public Library. In these discussions, Portland Stage artistic staff, actors, directors, and designers answer questions, share stories, and explore the challenges of bringing a particular play to the stage. Page to Stage occurs at noon on the Tuesday after a show opens at the Portland Public Library’s Main Branch. Feel free to bring your lunch! Curtain Call discussions offer a rare opportunity for audience members to talk about the production with the performers. Through this forum, the audience and cast explore topics that range from the process of rehearsing and producing the text to character development to issues raised by the work. Curtain Call discussions are held after the second Sunday matinee performance. All discussions are free and open to the public. Show attendance is not required. To subscribe to a discussion series performance, please call the Box Office at 207.774.0465. By Johnathan Tollins Portland Stage Company Educational Programs are generously supported through the annual donations of hundreds of individuals and businesses, as well as special funding from: The Davis Family Foundation Funded in part by a grant from our Educational Partner, the Maine Arts Commission, an independent state agency supported by the National Endowment for the Arts.

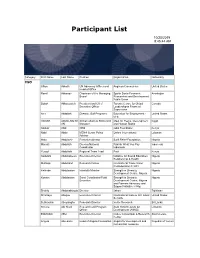

Participant List

Participant List 10/20/2019 8:45:44 AM

Category: CSO

| First Name | Last Name | Position | Organization | Nationality |
|---|---|---|---|---|
| Jillian | Abballe | UN Advocacy Officer and Head of Office | Anglican Communion | United States |
| Ramil | Abbasov | Chairman of the Managing Board | Spektr Socio-Economic Researches and Development Public Union | Azerbaijan |
| Babak | Abbaszadeh | President and Chief Executive Officer | Toronto Centre for Global Leadership in Financial Supervision | Canada |
| Amr | Abdallah | Director, Gulf Programs | Education for Employment - EFE | United States |
| Hagar | Abdelrahman | African affairs & SDGs Unit Manager | Maat for Peace, Development and Human Rights | Egypt |
| Abukar | Abdi | CEO | Juba Foundation | Kenya |
| Nabil | Abdo | MENA Senior Policy Advisor | Oxfam International | Lebanon |
| Mala | Abdulaziz | Executive director | Swift Relief Foundation | Nigeria |
| Maryati | Abdullah | Director/National Coordinator | Publish What You Pay Indonesia | Indonesia |
| Yussuf | Abdullahi | Regional Team Lead | Pact | Kenya |
| Abdulahi | Abdulraheem | Executive Director | Initiative for Sound Education Relationship & Health | Nigeria |
| Muttaqa | Abdulra'uf | Research Fellow | International Trade Union Confederation (ITUC) | Nigeria |
| Kehinde | Abdulsalam | Interfaith Minister | Strength in Diversity Development Centre, Nigeria | Nigeria |
| Kassim | Abdulsalam | Zonal Coordinator/Field Executive | Strength in Diversity Development Centre, Nigeria and Farmers Advocacy and Support Initiative in Nig | Nigeria |
| Shahlo | Abdunabizoda | Director | Jahon | Tajikistan |
| Shontaye | Abegaz | Executive Director | International Institute for Human Security | United States |
| Subhashini | Abeysinghe | Research Director | Verite | |

Deepfaked Online Content Is Highly Effective in Manipulating People's

Deepfaked online content is highly effective in manipulating people’s attitudes and intentions Sean Hughes, Ohad Fried, Melissa Ferguson, Ciaran Hughes, Rian Hughes, Xinwei Yao, & Ian Hussey In recent times, disinformation has spread rapidly through social media and news sites, biasing our (moral) judgements of other people and groups. “Deepfakes”, a new type of AI-generated media, represent a powerful new tool for spreading disinformation online. Although Deepfaked images, videos, and audio may appear genuine, they are actually hyper-realistic fabrications that enable one to digitally control what another person says or does. Given the recent emergence of this technology, we set out to examine the psychological impact of Deepfaked online content on viewers. Across seven preregistered studies (N = 2558) we exposed participants to either genuine or Deepfaked content, and then measured its impact on their explicit (self-reported) and implicit (unintentional) attitudes as well as behavioral intentions. Results indicated that Deepfaked videos and audio have a strong psychological impact on the viewer, and are just as effective in biasing their attitudes and intentions as genuine content. Many people are unaware that Deepfaking is possible; find it difficult to detect when they are being exposed to it; and most importantly, neither awareness nor detection serves to protect people from its influence. All preregistrations, data and code available at osf.io/f6ajb. The proliferation of social media, dating apps, news and gossip sites, has brought with it the ability to learn about a person’s moral character without ever having to interact with them in real life. […] copy of a person that can be manipulated into doing or saying anything (2). Deepfaking has quickly become a tool of

Subject Listing of Numbered Documents in M1934, OSS WASHINGTON SECRET INTELLIGENCE/SPECIAL FUNDS RECORDS, 1942-46

| Roll # | Doc # | Subject | Date | To | From |
|---|---|---|---|---|---|
| 1 | 0000001 | German Cable Company, D.A.T. | 4/12/1945 | State Dept.; London, American Embassy, OSS; Washington, OSS | American Maritime Delegation, Horta (Azores), (McNiece) |
| 1 | 0000002 | Walter Husman & Fabrica de Produtos Alimonticios, "Cabega Branca of Sao Paolo | 5/29/1945 | State Dept.; OSS | Rio de Janeiro, American Embassy |
| 1 | 0000003 | Contraband Currency & Smuggling of Wrist Watches at Tangier | 5/17/1945 | Washington, OSS | Tangier, American Mission |
| 1 | 0000004 | Shipment & Movement of order for watches & Chronographs from Switzerland to Argentine & collateral sales extended to other venues/regions | 3/5/1945 | Pierce S.A., Switzerland (Manufactures) & OSS (Washington) | Buenos Aires, American Embassy (Vogt) |
| 1 | 0000005 | Brueghel artwork painting in Stockholm | 5/12/1945 | Stockholm, British Legation; London, American Embassy & OSS | London, American Embassy |
| 1 | 0000006 | Investigation of Matisse painting in possession of Andre Martin of Zurich | 5/17/1945 | State Dept.; Paris, British Embassy, London, OSS, Washington, Treasury | London, American Embassy |
| 1 | 0000007 | Rubens painting, "St. Rochus," located in Stockholm | 5/16/1945 | State Dept.; Stockholm, British Legation; London, Roberts Commission | London, American Embassy |
| 1 | 0000007a | Matisse painting held in Zurich by Andre Martin | 5/3/1945 | State Dept.; Paris, British Embassy | London, American Embassy |
| 1 | 0000007b | Interview with Andre Martiro on Matisse painting obtained by Max Stocklin in Paris (vice Germans allegedly) | 5/3/1945 | Paris, British Embassy | London, American Embassy |
| 1 | 0000008 | Account at Banco Lisboa & Acores in name of Max & Marguerite | 4/5/1945 | State Dept.; Treasury; Lisbon, British Embassy | London, American Embassy (Peterson) |
| 1 | 0000008a | Funds transfer to Regerts in Oporto | 3/21/1945 | Neutral Trade Dept. | |