Proceedings ESCIM 2016

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Hard and Soft Computing for Artificial Intelligence, Multimedia and Security Advances in Intelligent Systems and Computing

Advances in Intelligent Systems and Computing 534 Shin-ya Kobayashi Andrzej Piegat Jerzy Pejaś Imed El Fray Janusz Kacprzyk Editors Hard and Soft Computing for Artificial Intelligence, Multimedia and Security Advances in Intelligent Systems and Computing Volume 534 Series editor Janusz Kacprzyk, Polish Academy of Sciences, Warsaw, Poland e-mail: [email protected] About this Series The series “Advances in Intelligent Systems and Computing” contains publications on theory, applications, and design methods of Intelligent Systems and Intelligent Computing. Virtually all disciplines such as engineering, natural sciences, computer and information science, ICT, economics, business, e-commerce, environment, healthcare, life science are covered. The list of topics spans all the areas of modern intelligent systems and computing. The publications within “Advances in Intelligent Systems and Computing” are primarily textbooks and proceedings of important conferences, symposia and congresses. They cover significant recent developments in the field, both of a foundational and applicable character. An important characteristic feature of the series is the short publication time and world-wide distribution. This permits a rapid and broad dissemination of research results. Advisory Board Chairman Nikhil R. Pal, Indian Statistical Institute, Kolkata, India e-mail: [email protected] Members Rafael Bello, Universidad Central “Marta Abreu” de Las Villas, Santa Clara, Cuba e-mail: [email protected] Emilio S. Corchado, University of Salamanca, Salamanca, -

ADVANCED TOPICS on SIGNAL PROCESSING, ROBOTICS and AUTOMATION

ADVANCED TOPICS on SIGNAL PROCESSING, ROBOTICS and AUTOMATION Proceedings of the 7th WSEAS International Conference on SIGNAL PROCESSING, ROBOTICS and AUTOMATION (ISPRA '08) Cambridge, UK, February 20-22, 2008 Mathematics and Computers in Science and Engineering A Series of Reference Books and Textbooks Published by WSEAS Press www.wseas.org ISBN: 978-960-6766-44-2 ISSN: 1790-5117 Copyright © 2008, by WSEAS Press All the copyright of the present book belongs to the World Scientific and Engineering Academy and Society Press. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of the Editor of World Scientific and Engineering Academy and Society Press. All papers of the present volume were peer reviewed by two independent reviewers. Acceptance was granted when both reviewers' recommendations were positive. See also: http://www.worldses.org/review/index.html World Scientific and Engineering Academy and Society Honorary Editors: Prof. Lotfi A. Zadeh University of Berkeley, USA Prof. -

Data Analytics and Management ICDAM-2020

International Conference on Data Analytics and Management An Indo-European Conference ICDAM-2020 18th June 2020 Organized by Jan Wyzykowski University, Polkowice, Poland and B.M. Institute of Engineering and Technology, Haryana, India Steering Committee Members Patron(s): Dr. Tadeusz Kierzyk, Prof UJW, Rector of Jan Wyzykowski University, Polkowice, Poland Lukasz Puzniecki, Mayor of Polkowice, Poland Mr. Rajeev Jain, Chairman, BM Institute of Engineering & Technology, India Mr. Rakesh Kuchhal, Manager BOG, BM Institute of Engineering & Technology, India General Chair(s): Prof. Dr. Janusz Kacprzyk, Polish Academy of Sciences, Systems Research Institute, Poland Prof. Dr. Cesare Alippi, Polytechnic University of Milan, Italy Prof. Dr. Siddhartha Bhattacharyya, CHRIST (Deemed to be University), Bangalore, India Honorary Chairs: Prof. Dr. Aboul Ella Hassanien, Cairo University, Egypt Prof. Dr. Vaclav Snasel, Rector, VSB-Technical University of Ostrava, Czech Republic Conference Chair: Dr. Zdzislaw Polkowski, Prof UJW, Jan Wyzykowski University, Polkowice, Poland Prof. Dr. Harish Mittal, Principal, BM Institute of Engineering & Technology, India Prof. Dr. Joel J P C Rodrigues, Universidade Estadual do Piau Teresina, Brazil Prof. Dr. Abhishek Swaroop, Bhagwan Parshuram Institute of Technology, Delhi, India Prof. Dr. Anil K Ahlawat, KIET Group of Institutes, Ghaziabad, India Technical Program Chair: Dr. Stanislaw Piesiak, Prof UJW, Jan Wyzykowski University, Polkowice, Poland Dr. Jan Walczak, Jan Wyzykowski University, Polkowice, Poland Dr. Anna Wojciechowicz, Jan Wyzykowski University, Lubin, Poland Dr. Abhinav Juneja, Associate Director, BM Institute of Engineering & Technology, India Dr. Vishal Jain, Dean Academics, BM Institute of Engineering & Technology, India Technical Program Co-Chair: Prof. Dr. Victor Hugo C. -

Proceedings of the First International Conference on Information Technology and Knowledge Management December 22–23, 2017

Annals of Computer Science and Information Systems Volume 14 Proceedings of the First International Conference on Information Technology and Knowledge Management December 22–23, 2017. New Delhi, India Ajay Jaiswal, Vijender Kumar Solanki, Zhongyu (Joan) Lu, Nikhil Rajput (eds.) Annals of Computer Science and Information Systems, Volume 14 Series editors: Maria Ganzha, Systems Research Institute Polish Academy of Sciences and Warsaw University of Technology, Poland Leszek Maciaszek, Wrocław University of Economy, Poland and Macquarie University, Australia Marcin Paprzycki, Systems Research Institute Polish Academy of Sciences and Management Academy, Poland Senior Editorial Board: Wil van der Aalst, Department of Mathematics & Computer Science, Technische Universiteit Eindhoven (TU/e), Eindhoven, Netherlands Frederik Ahlemann, University of Duisburg-Essen, Germany Marco Aiello, Faculty of Mathematics and Natural Sciences, Distributed Systems, University of Groningen, Groningen, Netherlands Mohammed Atiquzzaman, School of Computer Science, University of Oklahoma, Norman, USA Barrett Bryant, Department of Computer Science and Engineering, University of North Texas, Denton, USA Ana Fred, Department of Electrical and Computer Engineering, Instituto Superior Técnico (IST—Technical University of Lisbon), Lisbon, Portugal Janusz Górski, Department of Software Engineering, Gdansk University of Technology, Gdansk, Poland Mike Hinchey, Lero—the Irish Software Engineering Research Centre, University of Limerick, Ireland Janusz Kacprzyk, Systems Research -

Consensus Reaching in Multi-Agent Decision Making: the Role of Fuzzy Logic, Human Judgments, Biases and Fairness Analyses

Consensus reaching in multi-agent decision making: the role of fuzzy logic, human judgments, biases and fairness analyses Janusz Kacprzyk Fellow of IEEE, IFSA, EurAI, SMIA Full Member, Polish Academy of Sciences Foreign Member, Bulgarian Academy of Sciences Member, Academia Europaea Member, European Academy of Sciences and Arts Foreign Member, Spanish Royal Academy of Economic and Financial Sciences Systems Research Institute, Polish Academy of Sciences ul. Newelska 6, 01-447 Warsaw, Poland Email: [email protected] Abstract Decision making processes involving multiple agents (individuals, actors, decision makers, etc.) are considered, and small groups of agents are assumed. The agents provide their testimonies, here their (fuzzy or graded) preferences. As a point of departure, the reaching of consensus is discussed, which may be claimed to lead to “better” decisions. A “soft” concept of consensus is assumed, in the sense of Kacprzyk and Fedrizzi (1987 - …), meant as a degree to which, e.g., “most of relevant agents agree as to their preferences (or other testimonies as, e.g., ordering of options) as to almost all crucial options”. The fuzzy majority, equated with linguistic quantifiers, is dealt with using the classic Zadeh’s calculus, OWA operators, etc. As usual, we mean consensus as a state of agreement in a group, as a way to reach consensus in the sense of time evolution of changes of the agents’ testimonies, and also as a way decision making should proceed in multiagent settings. Basically, consensus decision making aims at attaining the consent, not necessarily the agreement, of the agents involved, accounting for the views of all of them to attain a decision that will be beneficial to the group as a whole, not necessarily to particular agents, who may give consent even if the decision is not their first choice but merely acceptable, thereby offering their willingness to cooperate. -

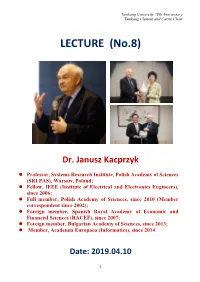

Lecture Notes

Tamkang University 70th Anniversary Tamkang Clement and Carrie Chair LECTURE (No.8) Dr. Janusz Kacprzyk Professor, Systems Research Institute, Polish Academy of Sciences (SRI PAS), Warsaw, Poland; Fellow, IEEE (Institute of Electrical and Electronics Engineers), since 2006; Full member, Polish Academy of Sciences, since 2010 (Member correspondent since 2002); Foreign member, Spanish Royal Academy of Economic and Financial Sciences (RACEF), since 2007; Foreign member, Bulgarian Academy of Sciences, since 2013; Member, Academia Europaea (Informatics), since 2014 Date: 2019.04.10 INTRODUCTION ● Janusz Kacprzyk is a Polish engineer and mathematician, notable for his multiple contributions to the field of computational and artificial intelligence tools like fuzzy sets, mathematical optimization, decision making under uncertainty, computational intelligence, intuitionistic fuzzy sets, data analysis and data mining, with applications in databases, ICT, mobile robotics and others. ● Dr. Kacprzyk is a professor of computer science at the Systems Research Institute and an academician (full member) of the Polish Academy of Sciences. In 1965-1970, he studied at the Department of Electronics, Warsaw University of Technology in Warsaw, Poland, and his major field is computer science and automatic control. Later, he obtained M.S. in computer science and automatic control received in 1970 from the Warsaw University of Technology. He acquired Ph.D. with distinction in systems analysis received in 1977 from the Systems Research Institute, Polish Academy of Sciences in Warsaw, Poland. Afterwards, he obtained D.Sc. (habilitation) in computer science in 1990 from the Systems Research Institute, Polish Academy of Sciences. -

Artificial Intelligence Research Community and Associations in Poland

Foundations of Computing and Decision Sciences Vol. 45 (2020) No. 3 ISSN 0867-6356 DOI: 10.2478/fcds-2020-0009 e-ISSN 2300-3405 Artificial Intelligence Research Community and Associations in Poland Grzegorz J. Nalepa, Jerzy Stefanowski Abstract. In last years Artificial Intelligence presented a tremendous progress by offering a variety of novel methods, tools and their spectacular applications. Besides showing scientific breakthroughs it attracted interest both of the general public and industry. It also opened heated debates on the impact of Artificial Intelligence on changing the economy and society. Having in mind this international landscape, in this short paper we discuss the Polish AI research community, some of its main achievements, opportunities and limitations. We put this discussion in the context of the current developments in the international AI community. Moreover, we refer to activities of Polish scientific associations and their initiative of founding Polish Alliance for the Development of Artificial Intelligence (PP-RAI). Finally two last editions of PP-RAI joint conferences are summarized. 1. Introductory remarks Artificial Intelligence (AI) began as an academic discipline nearly 70 years ago, while during the Dartmouth conference in 1956 the expression Artificial Intelligence was coined as the label for it. Since that time it has been evolving a lot and developing in the cycles of optimism and pessimism [27]. In the first period research in several main subfields were started but the expectations the founders put were not fully realized. -

ILMO. SR. DR. D. JANUSZ KACPRZYK Current Employment

ILMO. SR. DR. D. JANUSZ KACPRZYK Current employment: Professor, Systems Research Institute, Polish Academy of Sciences, Warsaw, Poland; 1990 to present. Professor, WIT – Warsaw School of Applied Information Technology and Management, Warsaw, Poland, 1999 to present. Consultant, Industrial Institute of Automation and Measurements (PIAP), Warsaw, Poland, since 2007. Honorary professorship: Honorary External Professor, Department of Mathematics, Yli Normal University, Yining City, Xinjiang City, Xinjiang, P.R. of China, since 2006. Visiting professorships: Visiting Professor, Department of Computer Science, Tijuana Institute of Technology, Tijuana, BC, Mexico, since 2006. Visiting Professor, School of Science and Technology, Nottingham Trent University, Nottingham, UK, 2003 – 2006. Visiting Professor, Department of Management and Computer Sciences, University of Trento, 38100 Trento, Italy, Spring semester, 1994. Visiting Professor, Department of Computer Science, University of North Carolina, Charlotte, NC 29223, USA, Spring semester 1988. Visiting Professor, Department of Computer Science, The University of Tennessee, Knoxville, TN 37916, USA, Spring semester, 1986. Visiting Professor, Hagan School of Business, Iona College, New Rochelle, NY 10801, USA, 1981 – 1983. Current functions in organizations: President, International Fuzzy Systems Association (IFSA), since 2007. President, Polish Society for Operational and Systems Research (PTBOiS), since 2007. Fellowships: Fellow, IEEE (Institute of Electrical and Electronics Engineers). Fellow, IFSA (International Fuzzy Systems Association) Current research interests: Uncertainty and imprecision in systems modeling; Decision making and control under uncertainty and imprecision (fuzziness); Intelligent decision support systems; Fuzzy logic; Fuzziness in database management systems; Fuzzy and possibilistic approaches to knowledge representation and processing; Management of uncertainty in knowledge-based systems; Evolutionary programming, and its applications; Neural networks, and their applications. -

CURRICULUM VITAE and LIST of PUBLICATIONS Academician

CURRICULUM VITAE AND LIST OF PUBLICATIONS Academician Janusz Kacprzyk Professor, Ph.D, D.Sc Fellow of IEEE, IFSA Systems Research Institute, Polish Academy of Sciences, ul. Newelska 6, 01-447 Warsaw, Poland Birth date: July 12, 1947 Born, raised and lived in Warsaw, Poland Married, one child Phone: +(48)(22) 3810275 - office Fax: +(48)(22) 3810103 - office Languages: (fluent): English, Russian, German, Italian, (good): French, Spanish E-mail: [email protected] WWW: http://www.ibspan.waw.pl/~kacprzyk Google: kacprzyk Current employment: Professor (full time), Systems Research Institute, Polish Academy of Sciences, Warsaw, Poland; 1970 to present. Professor (part time), WIT – Warsaw School of Applied Information Technology and Management, Warsaw, Poland, 1998 - to present. Professor (part time), Industrial Institute of Automation and Measurements (PIAP), Warsaw, Poland, 2007 – to present. Professor (part time), Department of Electrical and Computer Engineering, Cracow University of Technology, Cracow, Poland, 2010 – to present. Current functions: Chairman of the Provosts’ Council, Department IV of Engineering Sciences, Polish Academy of Sciences: Supervising and evaluating 13 research institutes and 21 national scientific committees in the area of engineering sciences Member, Presidium of the Polish Academy of Sciences Member, Central Testimonial Commission of the Republic of Poland Head of Laboratory of Intelligent Systems, Systems Research Institute, Polish Academy of Sciences, Warsaw, Poland Memberships of Academies: Full member, Polish Academy of Sciences, since 2010 (Member correspondent since 2002). Foreign member, Spanish Royal Academy of Economic and Financial Sciences (RACEF), since 2007. Foreign member, Bulgarian Academy of Sciences, since 2013. Honorary professorship and functions: Honorary External Professor, Department of Mathematics, Yli Normal University, Yining City, Xinjiang City, Xinjiang, P.R. -

Burhan Turksen and Masoud Nikravesh

A GLIMPSE INTO THE FUTURE Moderators: Burhan Turksen and Masoud Nikravesh Panelists: Janusz Kacprzyk, K. Hirota, Vesa Niskenan, Masoud Nikravesh, Henri Prade, Enric Trillas, Burhan Turksen, Ron Yager, Lotfi A. Zadeh Predicting the future of fuzzy logic is difficult if fuzzy logic is interpreted in its wide sense, that is, a theory in which everything is or is allowed to be a matter of degree. But what is certain is that as we move further into the age of machine intelligence and mechanized decision-making, both theoretical and applied aspects of fuzzy logic will gain in visibility and importance. What will stand out is the unique capability of fuzzy logic to serve as a basis for reasoning and computation with information described in natural language. No other theory has this capability. Moderator: Burhan Turksen: I.B. Türksen received the B.S. and M.S. degrees in Industrial Engineering and the Ph.D. degree in Systems Management and Operations Research, all from the University of Pittsburgh, PA. He joined the Faculty of Applied Science and Engineering at the University of Toronto and became Full Professor in 1983. In the 1984-1985 academic year, he was a Visiting Professor at the Middle East Technical University and Osaka Prefecture University. Since 1987, he has been Director of the Knowledge / Intelligence Systems Laboratory. During the 1991-1992 academic year, he was a Visiting Research Professor at LIFE Laboratory for International Fuzzy Engineering, and the Chair of Fuzzy Theory at Tokyo Institute of Technology. During the 1996 academic year, he was Visiting Research Professor at the University of South Florida and Bilkent University. -

The 5th International Fuzzy Systems Symposium 2017

THE 5TH INTERNATIONAL FUZZY SYSTEMS SYMPOSIUM 2017 PROGRAMME and ABSTRACTS October 14 - 15, 2017 Ankara, Turkey http://fuzzyss2017.etu.edu.tr/ Organized by Fuzzy Systems Association TOBB University of Economics & Technology PREFACE The Fifth International Fuzzy System Symposium (FUZZYSS’17), dedicated to Professor I. Burhan TURKSEN, is organized by the Fuzzy System Association at TOBB University of Economics and Technology. During FUZZYSS’17 a total of 115 oral presentations and four invited speeches are given. Prof. Dr. Ibrahim Ozkan, Prof. Dr. Adil Baykasoglu, Prof. Dr. Omer Akin and Assoc. Prof. Dr. Cagdas Hakan Aladag discuss uncertainty, uncertainty management, and the historical development of fuzzy systems. Oral presentations from different application areas are given during FUZZYSS'17. This programme and abstract book includes the abstracts of all accepted and presented studies. After FUZZYSS'17, some selected full-text papers will be published in special issues of international journals. We believe that the FUZZYSS series will make important contributions to scientific developments in fuzzy systems in Turkey and its neighboring countries. C.H. Aladag Ph.D., D. Alptekin, B. Alptekin Editors HONORARY COMMITTEE Chair Lotfi A. Zadeh University of California, USA İ. Burhan Türkşen TOBB University of Economics and Technology, Turkey Abraham Kandel, University of South Florida, USA Barnard Grabot, ENIT-LPG, France Bernard De Baets, Ghent University, Belgium Bill Grover, Simon Fraser University, British Columbia, Canada Didier Dubois, Université Paul Sabatier, France Germano Resconi, Catholic University, Italy Hans J. Zimmerman, Institute of Technology, Aachen, Germany Henri Prade, Université Paul Sabatier, France Hideo Tanaka, Hiroshima International University, Japan J.