Clutter to Cluster

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Dockerdocker

X86 Exagear Emulation • Android Gaming • Meta Package Installation Year Two Issue #14 Feb 2015 ODROIDMagazine DockerDocker OS Spotlight: Deploying ready-to-use Ubuntu Studio containers for running complex system environments • Interfacing ODROID-C1 with 16 Channel Relay Play with the Weather Board • ODROID-C1 Minimal Install • Device Configuration for Android Development • Remote Desktop using Guacamole What we stand for. We strive to symbolize the edge of technology, future, youth, humanity, and engineering. Our philosophy is based on Developers. And our efforts to keep close relationships with developers around the world. For that, you can always count on having the quality and sophistication that is the hallmark of our products. Simple, modern and distinctive. So you can have the best to accomplish everything you can dream of. We are now shipping the ODROID U3 devices to EU countries! Come and visit our online store to shop! Address: Max-Pollin-Straße 1 85104 Pförring Germany Telephone & Fax phone : +49 (0) 8403 / 920-920 email : [email protected] Our ODROID products can be found at http://bit.ly/1tXPXwe EDITORIAL Now that ODROID Magazine is in its second year, we’ve expanded into several social networks in order to make it easier for you to ask questions, suggest topics, send article submissions, and be notified whenever the latest issue has been posted. Check out our Google+ page at http://bit.ly/1D7ds9u, our Reddit forum at http://bit.ly/1DyClsP, and our Hardkernel subforum at http://bit.ly/1E66Tm6. If you’ve been following the recent Docker trends, you’ll be excited to find out about some of the pre-built Docker images available for the ODROID, detailed in the second part of our Docker series that began last month.

UNIVERSITY of CALIFORNIA SANTA CRUZ UNDERSTANDING and SIMULATING SOFTWARE EVOLUTION a Dissertation Submitted in Partial Satisfac

UNIVERSITY OF CALIFORNIA SANTA CRUZ. UNDERSTANDING AND SIMULATING SOFTWARE EVOLUTION. A dissertation submitted in partial satisfaction of the requirements for the degree of DOCTOR OF PHILOSOPHY in COMPUTER SCIENCE by Zhongpeng Lin, December 2015. The Dissertation of Zhongpeng Lin is approved: Prof. E. James Whitehead, Jr., Chair; Asst. Prof. Seshadhri Comandur; Prof. Timothy J. Menzies; Tyrus Miller, Vice Provost and Dean of Graduate Studies. Copyright © by Zhongpeng Lin 2015. Table of Contents: List of Figures; List of Tables; Abstract; Dedication; Acknowledgments; 1 Introduction (1.1 Emergent Phenomena in Software; 1.2 Simulation of Software Evolution; 1.3 Research Outline); 2 Power Law and Complex Networks (2.1 Power Law; 2.2 Complex Networks; 2.3 Empirical Studies of Software Evolution; 2.4 Summary); 3 Data Set and AST Differences (3.1 Data Set; 3.2 ChangeDistiller; 3.3 Data Collection Work Flow); 4 Change Size in Four Open Source Software Projects (4.1 Methodology; 4.2 Commit Size; 4.3 Monthly Change Size; 4.4 Summary); 5 Generative Models for Power Law and Complex Networks (5.1 Generative Models for Power Law; 5.1.1 Preferential Attachment; 5.1.2 Self-organized Criticality; 5.2 Generative Models for Complex Networks); 6 Simulating SOC and Preferential Attachment in Software Evolution (6.1 Preferential Attachment; 6.2 Self-organized Criticality; 6.3 Simulation Model; 6.4 Experiment Setup; …)

Access to the Virtual Machines in Lab Vela

Access to the Virtual Machines in the Labs. In all of the campus labs you can use: 1. A Linux Light Ubuntu 20.04.03 virtual machine that uses the PC's local disk and exposes a single user: studente, with password studente. All students who log in to a given PC and use that virtual machine therefore share the same home directory and write to the same files, which remain only on that PC. The user CAN use system administrator rights through the sudo command. 2. A Linux Light Ubuntu 20.04.03 virtual machine customized for each student, whose image is saved on a remote storage server. When an authenticated user ([email protected]) starts this LUbuntu virtual machine, a disk image exclusively for that specific user is loaded from central storage. Files modified by the user are saved in that user's image on central storage. The image for that user is used even if the user works on a different PC. The user in the VM is studente with password studente and HAS system administrator rights through the sudo command. Both virtual machines currently use the VMware hypervisor. • At first we will use the LUbuntu virtual machine that saves files on the local disk, so that we can fall back on it should the personalized virtual machines fail. • From the next lesson on we will use the LUbuntu virtual machine that saves personalized images on a remote server. Starting the LUBUNTU VM Locally (1): If the physical machine is off, turn it on. Once Windows has booted, log in to the physical Windows machine with your institutional account [email protected]. On the Windows desktop, open File Explorer and go to the folder C:\VM\LUbuntu. In that directory you will see a file LUbuntu.vmx. The vmx extension is probably not visible, and there are many files with the same name LUbuntu.

Synthetic Data for English Lexical Normalization: How Close Can We Get to Manually Annotated Data?

Proceedings of the 12th Conference on Language Resources and Evaluation (LREC 2020), pages 6300–6309, Marseille, 11–16 May 2020. © European Language Resources Association (ELRA), licensed under CC-BY-NC. Synthetic Data for English Lexical Normalization: How Close Can We Get to Manually Annotated Data? Kelly Dekker, Rob van der Goot. University of Groningen, IT University of Copenhagen. [email protected], [email protected] Abstract: Social media is a valuable data resource for various natural language processing (NLP) tasks. However, standard NLP tools were often designed with standard texts in mind, and their performance decreases heavily when applied to social media data. One solution to this problem is to adapt the input text to a more standard form, a task also referred to as normalization. Automatic approaches to normalization have shown that they can be used to improve performance on a variety of NLP tasks. However, all of these systems are supervised, thereby being heavily dependent on the availability of training data for the correct language and domain. In this work, we attempt to overcome this dependence by automatically generating training data for lexical normalization. Starting with raw tweets, we attempt two directions, to insert non-standardness (noise) and to automatically normalize in an unsupervised setting. Our best results are achieved by automatically inserting noise. We evaluate our approaches by using an existing lexical normalization system; our best scores are achieved by custom error generation system, which makes use of some manually created datasets. With this system, we score 94.29 accuracy on the test data, compared to 95.22 when it is trained on human-annotated data.

Clutter to Cluster

COVER STORY: PelicanHPC. CLUTTER TO CLUSTER. Turn your desktop computer into a high-performance cluster with PelicanHPC. Crunch big numbers with your very own high-performance computing cluster. BY MAYANK SHARMA. (Photo: Andrea Danti, Fotolia)

If your users are clamoring for the power of a data center but your penurious employer tells you to make do with the hardware you already own, don’t give up hope. With some time, a little effort, and a few open source tools, you can transform your mild-mannered desktop systems into a number-crunching super computer. For the impatient, the PelicanHPC Live CD will cobble off-the-shelf hardware into a …

… puting in Fortran, C, Python, and Octave to provide some basic working examples for beginners. However, the process of maintaining the distribution was pretty time consuming, especially when it came to updating packages such as X and KDE. That’s when Creel discovered Debian Live, spent time wrapping his head around the live-helper package, and created a more systematic way to make a …

… cores to work. Both 32-bit and 64-bit versions are available, so grab the one that matches your hardware. The developer claims that with PelicanHPC you can get a cluster up and running in five minutes. However, this is a complete exaggeration – you can do it in under three. First, make sure you get all the ingredients right: You need a computer to act as a front-end node, and others that’ll act as slave computing nodes. The front-end and the slave nodes connect via the network, so they need to be part of a local LAN.

Introduction to FmxLinux Delphi's FireMonkey for

Introduction to FmxLinux Delphi’s FireMonkey for Linux Solution Jim McKeeth Embarcadero Technologies [email protected] Chief Developer Advocate & Engineer For quality purposes, all lines except the presenter are muted IT’S OK TO ASK QUESTIONS! Use the Q&A Panel on the Right This webinar is being recorded for future playback. Recordings will be available on Embarcadero’s YouTube channel Your Presenter: Jim McKeeth Embarcadero Technologies [email protected] | @JimMcKeeth Chief Developer Advocate & Engineer Agenda • Overview • Installation • Supported platforms • PAServer • SDK & Packages • Usage • UI Elements • Samples • Database Access FireDAC • Migrating from Windows VCL • midaconverter.com • 3rd Party Support • Broadway Web Why FMX on Linux? • Education - Save money on Windows licenses • Kiosk or Point of Sale - Single purpose computers with locked down user interfaces • Security - Linux offers more security options • IoT & Industrial Automation - Add user interfaces for integrated systems • Federal Government - Many govt systems require Linux support • Choice - Now you can, so might as well! Delphi for Linux History • 1999 Kylix: aka Delphi for Linux, introduced • It was a port of the IDE to Linux • Linux x86 32-bit compiler • Used the Trolltech QT widget library • 2002 Kylix 3 was the last update to Kylix • 2017 Delphi 10.2 “Tokyo” introduced Delphi for x86 64-bit Linux • IDE runs on Windows, cross compiles to Linux via the PAServer • Designed for server side development - no desktop widget GUI library • 2017 Eugene -

The GNOME Desktop Comes to HP-UX

GNOME on HP-UX Stormy Peters Hewlett-Packard Company 970-898-7277 [email protected] THE GNOME DESKTOP COMES TO HP-UX by Stormy Peters, Jim Leth, and Aaron Weber At the Linux World Expo in San Jose last August, a consortium of companies, including Hewlett-Packard, inaugurated the GNOME Foundation to further the goals of the GNOME project. An organization of open-source software developers, the GNOME project is the major force behind the GNOME desktop: a powerful, open-source desktop environment with an intuitive user interface, a component-based architecture, and an outstanding set of applications for both developers and users. The GNOME Foundation will provide resources to coordinate releases, determine future project directions, and promote GNOME through communication and press releases. At the same conference in San Jose, Hewlett-Packard also announced that GNOME would become the default HP-UX desktop environment. This will enhance the user experience on HP-UX, providing a full feature set and access to new applications, and also will allow commonality of desktops across different vendors' implementations of UNIX and Linux. HP will provide transition tools for migrating users from CDE to GNOME, and support for GNOME will be available from HP. Those users who wish to remain with CDE will continue to be supported. Hewlett-Packard, working with Ximian, Inc. (formerly known as Helix Code), will be providing the GNOME desktop on HP-UX. Ximian is an open-source desktop company that currently employs many of the original and current developers of GNOME, including Miguel de Icaza. They have developed and contributed applications such as Evolution and Red Carpet to GNOME. -

Debian \ Amber \ Arco-Debian \ Arc-Live \ ASLinux \ BeatriX

Debian \ Amber \ Arco-Debian \ Arc-Live \ ASLinux \ BeatriX \ BlackRhino \ BlankON \ Bluewall \ BOSS \ Canaima \ Clonezilla Live \ Conducit \ Corel \ Xandros \ DeadCD \ Olive \ DeMuDi \ \ 64Studio (64 Studio) \ DoudouLinux \ DRBL \ Elive \ Epidemic \ Estrella Roja \ Euronode \ GALPon MiniNo \ Gibraltar \ GNUGuitarINUX \ gnuLiNex \ \ Lihuen \ grml \ Guadalinex \ Impi \ Inquisitor \ Linux Mint Debian \ LliureX \ K-DEMar \ kademar \ Knoppix \ \ B2D \ \ Bioknoppix \ \ Damn Small Linux \ \ \ Hikarunix \ \ \ DSL-N \ \ \ Damn Vulnerable Linux \ \ Danix \ \ Feather \ \ INSERT \ \ Joatha \ \ Kaella \ \ Kanotix \ \ \ Auditor Security Linux \ \ \ Backtrack \ \ \ Parsix \ \ Kurumin \ \ \ Dizinha \ \ \ \ NeoDizinha \ \ \ \ Patinho Faminto \ \ \ Kalango \ \ \ Poseidon \ \ MAX \ \ Medialinux \ \ Mediainlinux \ \ ArtistX \ \ Morphix \ \ \ Aquamorph \ \ \ Dreamlinux \ \ \ Hiwix \ \ \ Hiweed \ \ \ \ Deepin \ \ \ ZoneCD \ \ Musix \ \ ParallelKnoppix \ \ Quantian \ \ Shabdix \ \ Symphony OS \ \ Whoppix \ \ WHAX \ LEAF \ Libranet \ Librassoc \ Lindows \ Linspire \ \ Freespire \ Liquid Lemur \ Matriux \ MEPIS \ SimplyMEPIS \ \ antiX \ \ \ Swift \ Metamorphose \ miniwoody \ Bonzai \ MoLinux \ \ Tirwal \ NepaLinux \ Nova \ Omoikane (Arma) \ OpenMediaVault \ OS2005 \ Maemo \ Meego Harmattan \ PelicanHPC \ Progeny \ Progress \ Proxmox \ PureOS \ Red Ribbon \ Resulinux \ Rxart \ SalineOS \ Semplice \ sidux \ aptosid \ \ siduction \ Skolelinux \ Snowlinux \ srvRX live \ Storm \ Tails \ ThinClientOS \ Trisquel \ Tuquito \ Ubuntu \ \ A/V \ \ AV \ \ Airinux \ \ Arabian -

Master Thesis Innovation Dynamics in Open Source Software

Master thesis: Innovation dynamics in open source software. Author: Name: Remco Bloemen Student number: 0109150 Email: [email protected] Telephone: +316 11 88 66 71 Supervisors and advisors: Name: prof. dr. Stefan Kuhlmann Email: [email protected] Telephone: +31 53 489 3353 Office: Ravelijn RA 4410 (STEPS) Name: dr. Chintan Amrit Email: [email protected] Telephone: +31 53 489 4064 Office: Ravelijn RA 3410 (IEBIS) Name: dr. Gonzalo Ordóñez-Matamoros Email: [email protected] Telephone: +31 53 489 3348 Office: Ravelijn RA 4333 (STEPS) Abstract: Open source software development is a major driver of software innovation, yet it has thus far received little attention from innovation research. One of the reasons is that conventional methods such as survey based studies or patent co-citation analysis do not work in the open source communities. In this thesis it will be shown that open source development is very accessible to study, due to its open nature, but it requires special tools. In particular, this thesis introduces the method of dependency graph analysis to study open source software development on the grandest scale. A proof of concept application of this method is done and has delivered many significant and interesting results. Contents: 1 Open source software (1.1 The open source licenses; 1.2 Commercial involvement in open source; 1.3 Open source development; 1.4 The intellectual property debates; 1.4.1 The software patent debate; 1.4.2 The open source blind spot; 1.5 Literature search on network analysis in software development; …)

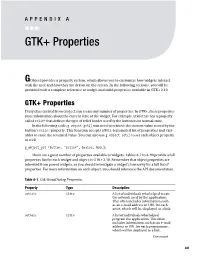

GTK+ Properties

APPENDIX A: GTK+ Properties. GObject provides a property system, which allows you to customize how widgets interact with the user and how they are drawn on the screen. In the following sections, you will be provided with a complete reference to widget and child properties available in GTK+ 2.10. GTK+ Properties: Every class derived from GObject can create any number of properties. In GTK+, these properties store information about the current state of the widget. For example, GtkButton has a property called relief that defines the type of relief border used by the button in its normal state. In the following code, g_object_get() was used to retrieve the current value stored by the button’s relief property. This function accepts a NULL-terminated list of properties and variables to store the returned value. You can also use g_object_set() to set each object property. g_object_get (button, "relief", &value, NULL); There are a great number of properties available to widgets; Tables A-1 to A-90 provide a full properties list for each widget and object in GTK+ 2.10. Remember that object properties are inherited from parent widgets, so you should investigate a widget’s hierarchy for a full list of properties. For more information on each object, you should reference the API documentation. Table A-1. GtkAboutDialog Properties. Property | Type | Description: artists | GStrv | A list of individuals who helped create the artwork used by the application. This often includes information such as an e-mail address or URL for each artist, which will be displayed as a link.

OSS Alphabetical List and Software Identification

Annex: OSS Alphabetical list and Software identification

| Software | Short description | Page |
| --- | --- | --- |
| A2ps | a2ps formats files for printing on a PostScript printer. | 149 |
| AbiWord | Open source word processor. | 122 |
| AIDE | Advanced Intrusion Detection Environment. Free replacement for Tripwire(tm). It does the same things as Tripwire(tm) and more. | 53 |
| Alliance | Complete set of CAD tools for the specification, design and validation of digital VLSI circuits. | 114 |
| Amanda | Backup utility. | 134 |
| Apache | Free HTTP (Web) server which is used by over 50% of all web servers worldwide. | 106 |
| Balsa | Balsa is the official GNOME mail client. | 96 |
| Bash | The Bourne Again Shell. It's compatible with the Unix `sh' and offers many extensions found in `csh' and `ksh'. | 147 |
| Bayonne | Multi-line voice telephony server. | 58 |
| Bind | BIND "Berkeley Internet Name Daemon", and is the Internet de-facto standard program for turning host names into IP addresses. | 95 |
| Bison | General-purpose parser generator. | 77 |
| BSD operating systems | FreeBSD is an advanced BSD UNIX operating system. | 144 |
| C Library | The GNU C library is used as the C library in the GNU system and most newer systems with the Linux kernel. | 68 |
| CAPA | Computer Aided Personal Approach. Network system for learning, teaching, assessment and administration. | 131 |
| CVS | A version control system keeps a history of the changes made to a set of files. | 78 |
| DDD | DDD is a graphical front-end for GDB and other command-line debuggers. | 79 |
| Diald | Diald is an intelligent link management tool originally named for its ability to control dial-on-demand network connections. | 50 |
| Dosemu | DOSEMU stands for DOS Emulation, and is a linux application that enables the Linux OS to run many DOS programs - including some … | 138 |
| Electric | Sophisticated electrical CAD system that can handle many forms of circuit design. | |

How to Run POSIX Apps in a Minimal Picoprocess Jon Howell, Bryan Parno, John R

How to Run POSIX Apps in a Minimal Picoprocess. Jon Howell, Bryan Parno, John R. Douceur. Microsoft Research, Redmond, WA.

Abstract: We envision a future where Web, mobile, and desktop applications are delivered as isolated, complete software stacks to a minimal, secure client host. This shift imbues app vendors with full autonomy to maintain their apps’ integrity. Achieving this goal requires shifting complex behavior out of the client platform and into the vendors’ isolated apps. We ported rich, interactive POSIX apps, such as Gimp and Inkscape, to a spartan host platform. We describe this effort in sufficient detail to support reproducibility.

| Application | Function | Libraries (#) | Libraries (examples) |
| --- | --- | --- | --- |
| Abiword | word processor | 63 | Pango, Freetype |
| Gimp | raster graphics | 55 | Gtk, Gdk |
| Gnucash | personal finances | 101 | Gnome, Enchant |
| Gnumeric | spreadsheet | 54 | Gtk, Gdk |
| Hyperoid | video game | 6 | svgalib |
| Inkscape | vector drawing | 96 | Magick, Gnome |
| Marble | 3D globe | 73 | KDE, Qt |
| Midori | HTML/JS renderer | 74 | webkit |

Table 1: A variety of rich, functional apps transplanted to run in a minimal native picoprocess. While these apps are nearly fully functional, plugins that depend on fork() are not yet supported (§3.9).

1 Introduction: Numerous academic systems [5, 11, 13, 15, 19, 22, 25–28, 31] and deployed systems [1–3, 23] have started pushing towards a world in which Web, mobile, and desktop applications are strongly isolated by the client kernel. A common theme in this work is that guaranteeing strong isolation requires simplifying the client, since complexity tends to breed vulnerability.

… multaneously [16]. It pushes the minimal client host interface to an extreme, proposing a client host without TCP, a file system or even storage, and with a UI constrained to simple pixel blitting (i.e., copying pixel arrays …