Mathematical Foundation of Photogrammetry (Part of EE5358)



Recommended publications


Naval Postgraduate School Thesis

NAVAL POSTGRADUATE SCHOOL, MONTEREY, CALIFORNIA. THESIS: LIDAR AND IMAGE POINT CLOUD COMPARISON, by Amanda R. Mueller, September 2014. Thesis Advisor: Richard C. Olsen. Second Reader: David M. Trask. Approved for public release; distribution is unlimited. Report documentation page: report date, September 2014; report type, Master’s Thesis; performing organization, Naval Postgraduate School, Monterey, CA 93943-5000; sponsoring/monitoring agency, N/A. Supplementary notes: The views expressed in this thesis are those of the author and do not reflect the official policy or position of the Department of Defense or the U.S. Government. IRB protocol number N/A. -

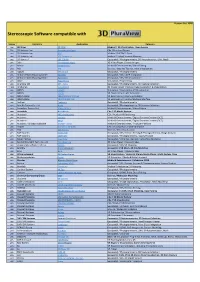

Stereoscopic Software Compatible with 3D Pluraview

Version: Nov. 2020 Stereoscopic Software compatible with

| Stereo | Company and application | Category |
|---|---|---|
| yes | The ImagingSource ic3d | 3D Image Viewer / Stereo Camera Calibration & Visualization |
| FULL | Anchorlab StereoBlend | 3D Image Viewer / Stereo Image Generation & Visualization |
| yes | Masuji Suto StereoPhoto Maker | 3D Image Viewer / Stereo Image Generation & Visualization, Freeware |
| yes | Presagis OpenFlight Creator | 3D Model Building Environment |
| yes | 3dtv Stereoscopic Player | 3D Video Player / Stereo Images |
| yes | Beijing Blue Sight Tech. Co., Ltd. ProvideService | 3D Video Player / Stereo Images |
| yes | Bino Bino | 3D Video Player / Stereo Images |
| yes | sView sView | 3D Video Player / Stereo Images |
| yes | Xeometric ELITECAD Architecture | BIM / Architecture, Construction, CAD Engine |
| FULL | Dassault Systems 3DVIA | BIM / Interior Modeling |
| yes | Xeometric ELITECAD Styler | BIM / Interior Modeling |
| FULL | SierraSoft Land | BIM / Land Survey Restitution and Analysis |
| FULL | SierraSoft Roads | BIM / Road & Highway Design |
| yes | Fraunhofer IAO Vrfx | BIM / VR for Revit |
| yes | Xeometric ELITECAD ViewerPRO | BIM / VR Viewer, Free Option |
| yes | ENSCAPE Enscape 2.8 | BIM / VR Visualization Plug-In for ArchiCAD, Revit, SketchUp, Rhino, Vectorworks |
| yes | Xeometric ELITECAD Lumion | BIM / VR Visualization, Architecture Models |
| FULL | Autodesk NavisWorks | CAx / 3D Model Review |
| FULL | Dassault Systems eDrawings for Solidworks | CAx / 3D Model Review |
| yes | OPEN CASCADE CAD CAD Assistant | CAx / 3D Model Review |
| FULL | PTC Creo View MCAD | CAx / 3D Model Review |
| yes | Gstarsoft Co., Ltd HaoChen 3D | CAx / CAD, Architecture, HVAC, Electric & Power |
| yes | - | |

Stereo Software List

Version: Oct. 2020 Stereoscopic Software compatible with

| Stereo | Company and application | Category |
|---|---|---|
| yes | 3D Slicer 3D Slicer | Medical / 3D Visualization, Open Source |
| yes | 3D Systems, Inc. Geomagic Freeform | CAx / Freeform Design |
| yes | 3D Systems, Inc. D2P | Medical / DICOM-2-Print |
| yes | 3D Systems, Inc. VSP Technology | Medical / Virtual Surgical Planning |
| yes | 3DFlow s.r.l. 3DF ZEPHYR | Geospatial / Photogrammetry, 3D Reconstruction, UAV, Mesh |
| yes | 3dtv Stereoscopic Player | 3D Video Player / Stereo Images |
| yes | Adobe Premiere Pro | Media & Entertainment / Video Editing |
| yes | AGI STK | Science / Satellite Tool Kit, Orbit Visualization |
| yes | Agisoft Metashape | Geospatial / Photogrammetry |
| yes | AirborneHydroMapping GmbH KomVISH | Geospatial / GIS, LiDAR Integration |
| yes | AirborneHydroMapping GmbH HydroVISH | Geospatial / GIS, SDK Visualization |
| yes | Altair HyperWorks | Simulation / Engineering |
| yes | Analytica Ltd DPS - Delta | Geospatial / Photogrammetry, 3D Feature Collection |
| FULL | Anchorlab StereoBlend | 3D Image Viewer / Stereo Image Generation & Visualization |
| yes | ANSYS EnSight | Simulation / Engineering, VR Visualization |
| yes | ANSYS SPEOS | VR Engineering / Light Simulation |
| yes | ANSYS Optis VREXPERIENCE SCANeR | VR Engineering / Driving Simulation |
| yes | ANSYS Optis VRXPERIENCE HMI | VR Engineering / Human Machine Interface |
| yes | Aplitop TcpStereo | Geospatial / Photogrammetry |
| yes | Asia Air Survey Co., Ltd. Azuka | Geospatial / Photogrammetry, 3D Feature Collection |
| yes | Assimilate Europe Ltd. SCRATCH Vs. 9 | Media & Entertainment / Video Editing |
| yes | Autodesk NavisWorks | CAx / 3D Model Review |
| yes | Autodesk VRED Professional - | |

3D Mapping by Photogrammetry and Lidar in Forest Studies

Available online at www.worldscientificnews.com. WSN 95 (2018) 224-234, EISSN 2392-2192. 3D Mapping by Photogrammetry and LiDAR in Forest Studies. Firoz Ahmad1,*, Md Meraj Uddin2, Laxmi Goparaju1. 1Vindhyan Ecology and Natural History Foundation, Mirzapur, Uttar Pradesh, India. 2Department of Mathematics, Ranchi University, Ranchi, Jharkhand, India. E-mail address: [email protected]. ABSTRACT: Aerial imagery has long been used in forest inventories to determine vertical canopy structure, an important inventory variable, because tree height correlates strongly with forest biophysical properties. Advances in photogrammetric software and the wide availability of aerial imagery have paved the way for 3D mapping and significantly accelerated the use of photogrammetry in forest inventory. The considerable potential of 3D mapping has been recognized in the research, development, and management of forest ecosystems. The aim of this article is to provide insights into 3D mapping (photogrammetry, including LiDAR) in forest-related disciplines. As a case study, we use a satellite stereo pair and a LiDAR point cloud to produce an anaglyph map and a Canopy Height Model (CHM), respectively. The study also presents a graph of area versus canopy height. A strength of the present study is that it demonstrates the use of advanced software modules of ARC/GIS and Erdas Imagine for 3D mapping with photogrammetry and LiDAR data sets, which is highly useful in forest management, planning, and decision making. Keywords: Stereo-Image, Photogrammetry, Lidar, Point cloud, Anaglyph, Canopy Height Model. 1. INTRODUCTION: The prime objective of forest mapping is to generate, manipulate and update various thematic datasets representing forestry attributes. -

Digital Photogrammetry

Component-I(A) - Personal Details. Principal Investigator: Prof. Masood Ahsan Siddiqui, Department of Geography, Jamia Millia Islamia, New Delhi. Paper Coordinator, if any: Dr. Mahaveer Punia, BISR, Jaipur. Content Writer/Author (CW): Ms. Kaushal Panwar, Senior Research Fellow, Birla Institute of Scientific Research, Jaipur. Content Reviewer (CR): Dr. Mahaveer Punia, BISR, Jaipur. Language Editor (LE). Component-I (B) - Description of Module. Subject Name: Geography. Paper Name: Remote Sensing, GIS, GPS. Module Name/Title: DIGITAL PHOTOGRAMMETRY. Module Id: RS/GIS 12. Pre-requisites. Objectives: 1. Keywords: DIGITAL PHOTOGRAMMETRY. Learning Outcome: Students will learn about digital photogrammetry, acquire the skills to work with DEMs, DTMs, and orthophotos, and be equipped to study further the needs, applications, and advances of digital photogrammetry in the remote sensing field. Introduction to Photogrammetry: Photogrammetry as a science is among the earliest techniques of remote sensing. The word photogrammetry combines three distinct Greek words: ‘Photos’ (light), ‘Gramma’ (to draw), and ‘Metron’ (to measure). The root words originally signify "measuring graphically by means of light." The fundamental goal of photogrammetry is to rigorously establish the geometric relationship between an object and an image and to derive information about the object from the image. For the layperson, photogrammetry is the technique of measuring an object by means of photography. Why Digital Photogrammetry? With the advent of computing and imaging technology, photogrammetry has evolved from analogue to analytical to digital (softcopy) photogrammetry. The main difference between digital photogrammetry and its predecessors (analogue and analytical) is that it deals with digital imagery directly rather than with (analogue) photographs. -

3D Pluraview Supported Stereoscopic Applications

Version: Jan. 2021 Stereoscopic Software compatible with

| Stereo | Company and application | Category |
|---|---|---|
| yes | 3D Slicer 3D Slicer | Medical / 3D Visualization, Open Source |
| yes | 3D Systems, Inc. Geomagic Freeform | CAx / Freeform Design |
| yes | 3D Systems, Inc. D2P | Medical / DICOM-2-Print |
| yes | 3D Systems, Inc. VSP Technology | Medical / Virtual Surgical Planning |
| FULL | 3DFlow s.r.l. 3DF ZEPHYR | Geospatial / Photogrammetry, 3D Reconstruction, UAV, Mesh |
| FULL | 3dtv Stereoscopic Player | 3D Video Player / Stereo Images |
| yes | Adobe Premiere Pro | Media & Entertainment / Video Editing |
| yes | AGI STK | Science / Satellite Tool Kit, Orbit Visualization |
| FULL | Agisoft Metashape | Geospatial / Photogrammetry |
| FULL | AirborneHydroMapping GmbH KomVISH | Geospatial / GIS, LiDAR Integration |
| FULL | AirborneHydroMapping GmbH HydroVISH | Geospatial / GIS, SDK Visualization |
| yes | Altair HyperWorks | Simulation / Engineering |
| FULL | Analytica Ltd DPS - Delta | Geospatial / Photogrammetry, 3D Feature Collection |
| FULL | Anchorlab StereoBlend | 3D Image Viewer / Stereo Image Generation & Visualization |
| FULL | ANSYS EnSight | Simulation / Engineering, VR Visualization |
| FULL | ANSYS SPEOS | VR Engineering / Light Simulation |
| FULL | ANSYS Optis VREXPERIENCE SCANeR | VR Engineering / Driving Simulation |
| FULL | ANSYS Optis VRXPERIENCE HMI | VR Engineering / Human Machine Interface |
| FULL | Aplitop TcpStereo | Geospatial / Photogrammetry |
| yes | Asia Air Survey Co., Ltd. Azuka | Geospatial / Photogrammetry, 3D Feature Collection |
| yes | Assimilate Europe Ltd. SCRATCH Vs. 9 | Media & Entertainment / Video Editing |
| FULL | Autodesk NavisWorks | CAx / 3D Model Review |
| FULL | Autodesk - | |

Accuracy Assessment of Photogrammetric Digital Elevation

ACCURACY ASSESSMENT OF PHOTOGRAMMETRIC DIGITAL ELEVATION MODELS GENERATED FOR THE SCHULTZ FIRE BURN AREA By Danna K. Muise A Thesis Submitted in Partial Fulfillment of the Requirements for the degree of Master of Science in Applied Geospatial Sciences Northern Arizona University August 2014 Approved: Erik Schiefer, Ph.D., Chair Ruihong Huang, Ph.D. Mark Manone, M.A. Abstract ACCURACY ASSESSMENT OF PHOTOGRAMMETRIC DIGITAL ELEVATION MODELS GENERATED FOR THE SCHULTZ FIRE BURN AREA Danna K. Muise This paper evaluates the accuracy of two digital photogrammetric software programs (ERDAS Imagine LPS and PCI Geomatica OrthoEngine) with respect to high-resolution terrain modeling in a complex topographic setting affected by fire and flooding. The site investigated is the 2010 Schultz Fire burn area, situated on the eastern edge of the San Francisco Peaks approximately 10 km northeast of Flagstaff, Arizona. Here, the fire coupled with monsoon rains typical of northern Arizona drastically altered the terrain of the steep mountainous slopes and residential areas below the burn area. To quantify these changes, high resolution (1 m and 3 m) digital elevation models (DEMs) were generated of the burn area using color stereoscopic aerial photographs taken at a scale of approximately 1:12000. Using a combination of pre-marked and post-marked ground control points (GCPs), I first used ERDAS Imagine LPS to generate a 3 m DEM covering 8365 ha of the affected area. This data was then compared to a reference DEM (USGS 10 m) to evaluate the accuracy of the resultant DEM. Findings were then divided into blunders (errors) and bias (slight differences) and further analyzed to determine if different factors (elevation, slope, aspect and burn severity) affected the accuracy of the DEM. -

The Development of a Geospatial Information Database Project in the Republic of Zimbabwe

The Republic of Zimbabwe, The Department of the Surveyor-General (DSG). THE DEVELOPMENT OF A GEOSPATIAL INFORMATION DATABASE PROJECT IN THE REPUBLIC OF ZIMBABWE, FINAL REPORT, June 2017. Japan International Cooperation Agency (JICA), Asia Air Survey Co., Ltd., PASCO Corporation. EI JR 17-065. USD 1.00=JPY 111.326 (as of June 2017). Project Location Map (Source: JICA Study Team). Photo Album: City Center in Harare City; Skyscrapers in Harare City; A major road (Milton street) in Harare city; Distant view of Chitungwiza Municipality; Inception seminar; Existing National Geodetic Point 387/T(GPS-35); Existing National Geodetic Point 3118/T(GPS-38); Existing National Benchmark BM21M 366; Aircraft for aerial photography; Digital aerial camera; Training on leveling; Training on GNSS observation; Training on an air mark installation; Lecture on analysis of observed data from GNSS survey