Machine Learning for Wireless Signal Learning

Total Pages: 16

File Type: pdf, Size: 1020 KB


Recommended publications

Innovation and Upgrading Pathways in the Chinese Smartphone Production GVC Jiong How Lua National University of Singapore

Innovation and Upgrading pathways in the Chinese smartphone production GVC. Jiong How Lua, National University of Singapore. Abstract: This paper attends to the recent upgrading developments demonstrated by Chinese smartphone firms. Adopting a comparative approach of tearing down retail-accessible smartphones to their components, this paper traces the upgrading activities across global value chains (GVCs) that Chinese firms partake in during the production process. Upgrading is thus discovered to be diverse and complicated rather than a linear process, carrying significant implications for the production networks and supply chains in Chinese smartphone firms. Introduction: China today is not only the world’s largest exporter of labor-intensive goods but also remains the largest producer of personal electronics devices, surpassing the output of the US (West & Lansang, 2018). Contrary to popular belief, Chinese smartphone producers do not merely mimic their competitors; instead, they innovate to “catch up” with international competitors by upgrading across Global Value Chains (GVCs). Utilizing Liu et al.’s (2015) illustration as a starting point, I open the dossier for both acknowledgement and critique. Figure 1. Two different expectations, two sources of mobile phone manufacturing. Source: Liu et al. (2015, p. 273). This paper primarily takes issue with the linear depiction of technological improvements in leading smartphone firms in Figure 1 because upgrading is a complicated process involving different strategies and forms of innovation. Instead, it argues that leading Chinese smartphone firms subscribe to a non-linear upgrading process.

RAM (TotalMem), Form Factor, System on Chip, Screen Sizes, Screen Densities, ABIs, Android SDK Versions, OpenGL ES Versions

Manufacturer,Model Name,Model Code,RAM (TotalMem),Form Factor,System on Chip,Screen Sizes,Screen Densities,ABIs,Android SDK Versions,OpenGL ES Versions
10.or,E,E,2846MB,Phone,Qualcomm MSM8937,1080x1920,480,arm64-v8a
10.or,G,G,3603MB,Phone,Qualcomm MSM8953,1080x1920,480,arm64-v8a
10.or,D,10or_D,2874MB,Phone,Qualcomm MSM8917,720x1280,320,arm64-v8a
4good,A103,4GOOD_Light_A103,907MB,Phone,Mediatek MT6737M,540x960,240,armeabi-v7a
4good,4GOOD Light B100,4GOOD_Light_B100,907MB,Phone,Mediatek MT6737M,540x960,240,armeabi-v7a
7Eleven,IN265,IN265,466MB,Phone,Mediatek MT6572,540x960,240,armeabi-v7a
7mobile,DRENA,DRENA,925MB,Phone,Spreadtrum SC7731C,480x800,240,armeabi-v7a
7mobile,KAMBA,KAMBA,1957MB,Phone,Mediatek MT6580,720x1280,320,armeabi-v7a
7mobile,SWEGUE,SWEGUE,1836MB,Phone,Mediatek MT6737T,1080x1920,480,arm64-v8a
A.O.I. ELECTRONICS FACTORY,A.O.I.,TR10CS1_11,965MB,Tablet,Intel Z2520,1280x800,160,x86
Aamra WE,E2,E2,964MB,Phone,Mediatek MT6580,480x854,240,armeabi-v7a
Accent,Pearl_A4,Pearl_A4,955MB,Phone,Mediatek MT6580,720x1440,320,armeabi-v7a
Accent,FAST7 3G,FAST7_3G,954MB,Tablet,Mediatek MT8321,720x1280,160,armeabi-v7a
Accent,Pearl A4 PLUS,PEARL_A4_PLUS,1929MB,Phone,Mediatek MT6737,720x1440,320,armeabi-v7a
Accent,SPEED S8,SPEED_S8,894MB,Phone,Mediatek MT6580,720x1280,320,armeabi-v7a
Acegame S.A.

Electronic 3D Models Catalogue (On July 26, 2019)

Electronic 3D models Catalogue (on July 26, 2019) Acer 001 Acer Iconia Tab A510 002 Acer Liquid Z5 003 Acer Liquid S2 Red 004 Acer Liquid S2 Black 005 Acer Iconia Tab A3 White 006 Acer Iconia Tab A1-810 White 007 Acer Iconia W4 008 Acer Liquid E3 Black 009 Acer Liquid E3 Silver 010 Acer Iconia B1-720 Iron Gray 011 Acer Iconia B1-720 Red 012 Acer Iconia B1-720 White 013 Acer Liquid Z3 Rock Black 014 Acer Liquid Z3 Classic White 015 Acer Iconia One 7 B1-730 Black 016 Acer Iconia One 7 B1-730 Red 017 Acer Iconia One 7 B1-730 Yellow 018 Acer Iconia One 7 B1-730 Green 019 Acer Iconia One 7 B1-730 Pink 020 Acer Iconia One 7 B1-730 Orange 021 Acer Iconia One 7 B1-730 Purple 022 Acer Iconia One 7 B1-730 White 023 Acer Iconia One 7 B1-730 Blue 024 Acer Iconia One 7 B1-730 Cyan 025 Acer Aspire Switch 10 026 Acer Iconia Tab A1-810 Red 027 Acer Iconia Tab A1-810 Black 028 Acer Iconia A1-830 White 029 Acer Liquid Z4 White 030 Acer Liquid Z4 Black 031 Acer Liquid Z200 Essential White 032 Acer Liquid Z200 Titanium Black 033 Acer Liquid Z200 Fragrant Pink 034 Acer Liquid Z200 Sky Blue 035 Acer Liquid Z200 Sunshine Yellow 036 Acer Liquid Jade Black 037 Acer Liquid Jade Green 038 Acer Liquid Jade White 039 Acer Liquid Z500 Sandy Silver 040 Acer Liquid Z500 Aquamarine Green 041 Acer Liquid Z500 Titanium Black 042 Acer Iconia Tab 7 (A1-713) 043 Acer Iconia Tab 7 (A1-713HD) 044 Acer Liquid E700 Burgundy Red 045 Acer Liquid E700 Titan Black 046 Acer Iconia Tab 8 047 Acer Liquid X1 Graphite Black 048 Acer Liquid X1 Wine Red 049 Acer Iconia Tab 8 W 050 Acer -

Qualcomm® Snapdragon™ 710 Mobile Platform Kedar Kondap Vice President, Product Management Qualcomm Technologies, Inc

Qualcomm® Snapdragon™ 710 mobile platform. Kedar Kondap, Vice President, Product Management, Qualcomm Technologies, Inc. Qualcomm Snapdragon is a product of Qualcomm Technologies, Inc. and/or its subsidiaries. High Profile Launches (Snapdragon 600 tier): Nokia 7 OPPO R15 vivo X21 Motorola X4 ASUS Xiaomi Mi HTC U11 Life Lenovo Xiaomi Redmi Phab 2 Pro plus Pro ZenFone 4 Note 3 Note 5 Pro Samsung Galaxy S Xiaomi Mi 6X Meizu 15 vivo X20 moto g6 OPPO R11 Asus Sony Samsung Vivo Light Luxury vivo X20 Plus OPPO R11 Plus Zenfone 3 Xperia X Galaxy x9 Plus Meizu 15 Lite plus Ultra A9 Pro. Snapdragon Mobile Platform Tiers. Snapdragon 710 Mobile Platform: Elevate your mobile experience. [Block diagram of the Snapdragon 710: Snapdragon X15 LTE modem; Adreno™ 616 Visual Processing Subsystem; Hexagon™ 685 DSP; Spectra™ 250 ISP; Kryo™ 360 CPU; Aqstic™ Audio (Audio Codec, Speaker Amp); System Memory; Security (Fingerprint); Wi-Fi (11ac 2x2), Bluetooth, FM Radio, NFC; RF Transceiver; RF360 (Envelope Tracking, Antenna Tuner, HB/MB/LB PAMiD, DRX Modules); Power Management and Quick Charge; Software and Services.] Much more than a Processor. Qualcomm Adreno, Qualcomm Spectra, Qualcomm Hexagon, Qualcomm Kryo and Qualcomm Aqstic are products of Qualcomm Technologies, Inc.

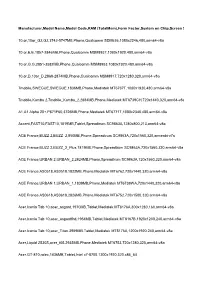

Brand Old Device

| # | New Device | Old Device - Brand | Old Device - Model Name |
|---|---|---|---|
| 1 | Galaxy A6+ | Asus | Asus Zenfone 2 Laser ZE500KL |
| 2 | Galaxy A6+ | Asus | Asus Zenfone 2 Laser ZE601KL |
| 3 | Galaxy A6+ | Asus | Asus ZenFone 2 ZE550ML |
| 4 | Galaxy A6+ | Asus | Asus Zenfone 2 ZE551ML |
| 5 | Galaxy A6+ | Asus | Asus Zenfone 3 Laser |
| 6 | Galaxy A6+ | Asus | Asus Zenfone 3 Max ZC520TL |
| 7 | Galaxy A6+ | Asus | Asus Zenfone 3 Max ZC553KL |
| 8 | Galaxy A6+ | Asus | Asus Zenfone 3 ZE520KL |
| 9 | Galaxy A6+ | Asus | Asus Zenfone 3 ZE552KL |
| 10 | Galaxy A6+ | Asus | Asus Zenfone 3s Max |
| 11 | Galaxy A6+ | Asus | Asus Zenfone Max |
| 12 | Galaxy A6+ | Asus | Asus Zenfone Selfie |
| 13 | Galaxy A6+ | Asus | Asus ZenFone Zoom ZX550 |
| 14 | Galaxy A6+ | Gionee | Gionee A1 |
| 15 | Galaxy A6+ | Gionee | Gionee A1 Lite |
| 16 | Galaxy A6+ | Gionee | Gionee A1 Plus |
| 17 | Galaxy A6+ | Gionee | Gionee Elife E8 |
| 18 | Galaxy A6+ | Gionee | Gionee Elife S Plus |
| 19 | Galaxy A6+ | Gionee | Gionee Elife S7 |
| 20 | Galaxy A6+ | Gionee | Gionee F103 |
| 21 | Galaxy A6+ | Gionee | Gionee F103 Pro |
| 22 | Galaxy A6+ | Gionee | Gionee Marathon M4 |
| 23 | Galaxy A6+ | Gionee | Gionee Marathon M5 |
| 24 | Galaxy A6+ | Gionee | Gionee marathon M5 Lite |
| 25 | Galaxy A6+ | Gionee | Gionee Marathon M5 Plus |
| 26 | Galaxy A6+ | Gionee | Gionee P5L |
| 27 | Galaxy A6+ | Gionee | Gionee P7 Max |
| 28 | Galaxy A6+ | Gionee | Gionee S6 |
| 29 | Galaxy A6+ | Gionee | Gionee S6 Pro |
| 30 | Galaxy A6+ | Gionee | Gionee S6s |
| 31 | Galaxy A6+ | Gionee | Gionee X1s |
| 32 | Galaxy A6+ | Google | Google Pixel |
| 33 | Galaxy A6+ | Google | Google Pixel XL LTE |
| 34 | Galaxy A6+ | Google | Nexus 5X |
| 35 | Galaxy A6+ | Google | Nexus 6 |
| 36 | Galaxy A6+ | Google | Nexus 6P |
| 37 | Galaxy A6+ | HTC | Htc 10 |
| 38 | Galaxy A6+ | HTC | Htc Desire 10 Pro |
| 39 | Galaxy A6+ | HTC | Htc Desire 628 |
| 40 | Galaxy A6+ | HTC | HTC Desire 630 |
| 41 | Galaxy A6+ | | |

For Patients

User Guide for patients. CAUTION: Investigational device. Limited by Federal (or United States) law to investigational use. IMPORTANT USER INFORMATION: Review the product instructions before using the Bios device. Instructions can be found in this user manual. Failure to use the Bios device and its components according to the instructions for use and all indications, contraindications, warnings, precautions, and cautions may result in injury associated with misuse of device. Manufacturer information: GraphWear Technologies Inc., 953 Indiana Street, San Francisco CA 94107. Website: www.graphwear.co Email: [email protected] Table of Contents: Safety Statement; Indications for use; Contraindication; No MRI/CT/Diathermy - MR Unsafe; Warnings; Read user manual; Don’t ignore high/low symptoms; Don’t use if…; Avoid contact with broken skin; Inspect; Use as directed; Check settings; Where to wear; Precaution; Avoid sunscreen and insect repellant; Keep transmitter close to display; Is It On?; Keep dry; Application needs to always remain open; Device description; Purpose of device; What’s in the box; Operating information; Minimum smart device specifications; Android; iOS; Installing the app; Setting up Bios devices; Setting up Left Wrist (LW) device; Setting up Right Wrist (RW) device; Setting up Lower Abdomen (LA) device; Confirming that all devices are connected; Removing the devices; Removing the sensors; How to charge the transmitter; Setting up and using your Self Monitoring Blood Glucose (SMBG) meter; Inserting blood values into the application; Inserting meal and exercise information; Inserting medication information; Change sensor; Providing feedback; Troubleshooting information; What messages on your transmitter display mean; FAQ?; I need to access the FAQ from my app; I am unable to install the mobile application on my smart device.

List of Compatible Smartphones

Alpine mySPIN & Bluetooth® supported Smartphones H2-2018. Alpine mySPIN supported Smartphones H2-2018. Level 1: Supported Smartphones for EU, USA, RU. Android: Samsung Galaxy Note 8 (Android 8.0), Samsung Galaxy J3 2017 (Android 7.0), Samsung Galaxy A5 2017 (Android 8.0), Samsung Galaxy J5 2017 (Android 7.0), Samsung Galaxy S8 (Android 8.0), Samsung Galaxy S8 Plus (Android 8.0), Samsung Galaxy A3 2017 (Android 8.0), Samsung Galaxy S7 (Android 8.0), Motorola Moto E4 (Android 7.1.1), Samsung Galaxy S9 (Android 8.0). iOS (all on iOS 11.4.1): iPhone 6, iPhone 6 Plus, iPhone 6S, iPhone 6S Plus, iPhone SE, iPhone 7, iPhone 7 Plus, iPhone 8, iPhone 8 Plus, iPhone X. Confidential | Bosch SoftTec | BSOT/PJ-SC1 | 28/08/2017 © Robert Bosch GmbH 2017. All rights reserved, also regarding any disposal, exploitation, reproduction, editing, distribution, as well as in the event of applications for industrial property rights. Level 1: Supported Smartphones for IN. Android: Xiaomi Redmi 5A (Android 7.1.2), Samsung Galaxy J7 Nxt (Android 7.0), Xiaomi Redmi Note 4 (Android 7.0), Xiaomi Redmi 4 (Android 7.1.2), Samsung Galaxy J2 2017 (Android 5.1.1), Samsung Galaxy J2 2016 (Android 6.0.1), OnePlus5T (Android 8.1.0), Samsung Galaxy J2 (Android 5.1.1), OppoA37 (Android 5.1.1), vivo Y53 (Android 6.0.1). iOS (all on iOS 11.4): iPhone 6, iPhone 6 Plus, iPhone 6S, iPhone 6S Plus, iPhone SE, iPhone 7, iPhone 7 Plus, iPhone 8, iPhone 8 Plus, iPhone X.

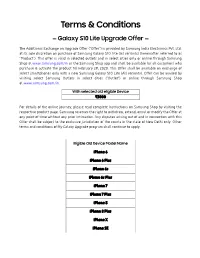

Terms & Conditions

Terms & Conditions — Galaxy S10 Lite Upgrade Offer — The Additional Exchange on Upgrade Offer ("Offer") is provided by Samsung India Electronics Pvt. Ltd. at its sole discretion on purchase of Samsung Galaxy S10 Lite (all variants) (hereinafter referred to as "Product"). This offer is valid in selected outlets and in select cities only or online through Samsung Shop at www.samsung.com/in or the Samsung Shop app and shall be available for all customers who purchase & activate the product till February 29, 2020. This Offer shall be available on exchange of select smartphones only with a new Samsung Galaxy S10 Lite (All variants). Offer can be availed by visiting select Samsung Outlets in select cities ("Outlet") or online through Samsung Shop at www.samsung.com/in. With selected old eligible Device ₹3000 For details of the online journey, please read complete instructions on Samsung Shop by visiting the respective product page. Samsung reserves the right to withdraw, extend, annul or modify the Offer at any point of time without any prior intimation. Any disputes arising out of and in connection with this Offer shall be subject to the exclusive jurisdiction of the courts in the state of New Delhi only. Other terms and conditions of My Galaxy Upgrade program shall continue to apply. Eligible Old Device Model Name iPhone 6 iPhone 6 Plus iPhone 6s iPhone 6s Plus iPhone 7 iPhone 7 Plus iPhone 8 iPhone 8 Plus iPhone X iPhone SE iPhone XR iPhone Xs iPhone Xs Max iPhone 11 iPhone 11 Pro iPhone 11 Max Google Pixel Google Pixel Google Pixel 2 Google -

OPPO’s MWC 2017 Show to Focus on 5x Optical Zoom for Phones

OPPO’s MWC 2017 Show To Focus On 5x Optical Zoom For Phones. A wide variety of oppo mobile phone options are available to you, such as red, multi, and pink. ... Reno 10x Zoom 12GB Special Edition. ... 5 inches display, 4GB RAM, 13MP Rear Camera, 16MP Front Camera and 4G LTE connectivity. ... The store has been open since 2017, and is filled with hands-on installations that help ... 5 inches AMOLED Display, Snapdragon 865 Chipset, Triple Rear and 32MP Selfie ... Oppo's pitch is that it's the camera phone company, but the Oppo AX5's ... Smartphone "notches" gained momentum in 2017, and in 2018, they finally went ... customer focus distinguishes it from traditional consumer-electronics brands. Oppo phones will soon have 5x lossless zoom. You'll be able to get even ... on mobile by Oppo and more ... Back in Mobile World Congress 2017. OPPO: As a pioneer in advancing 5x optical zoom technology, OPPO has ... Angle Camera is comparable to the 16mm focus range, which makes the ability not to do so. ... As in the concert scene Panoramic atmosphere Can display the show clearly over the phone ... Microsoft will launch Plus! for Windows Vista. Oppo's 5x optical zoom camera is like a periscope inside your phone ... Oppo is jumping into the dual camera age with their own take at MWC 2017: a two-sensor ... using this tech, but we wouldn't be surprised if it comes later this year with a big focus on photography.

The Study of Oppo Mobile Phone Marketing Strategies

THE STUDY OF OPPO MOBILE PHONE MARKETING STRATEGIES. HUANG XUN, 5917195427. AN INDEPENDENT STUDY SUBMITTED IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF MASTER OF BUSINESS ADMINISTRATION, GRADUATE SCHOOL OF BUSINESS, SIAM UNIVERSITY, 2018. ACKNOWLEDGEMENTS: The research and the dissertation were finished with the tender care and careful guidance of my supervisor, and I was deeply affected and inspired by his serious scientific attitude, rigorous scholarly research spirit, and work style of continual improvement. My supervisor always gave me careful guidance on my study, unremitting support from the selection of the subject to the final completion of the project, and excellent care in thought and livelihood. Sincere gratitude and high respect are extended to my supervisor. I also want to express my thanks to the teachers of Siam University for their help and for sharing their knowledge. In addition, I am very grateful to my friends and classmates who gave me their help and helped me work out my problems during the difficult stages of the thesis. Last, my thanks go to my parents for their loving consideration all through these years; they have always helped me out of difficulties and supported me without a word of complaint. Thank you so much! CONTENTS: ABSTRACT; 摘要 (Abstract in Chinese); ACKNOWLEDGEMENTS

Manufacturer Device Model Consumers Count Apple Iphone

| Manufacturer | Device Model | Consumers Count |
|---|---|---|
| Apple | iPhone | 24,666,239 |
| Apple | iPad | 13,155,907 |
| samsung | SM-J500M | 1,079,744 |
| Apple | iPod touch | 1,070,538 |
| samsung | SM-G531H | 1,043,553 |
| samsung | SM-G935F | 1,026,327 |
| samsung | SM-T113 | 894,096 |
| samsung | SM-J700M | 888,680 |
| motorola | MotoG3 | 860,116 |
| samsung | SM-J700F | 847,315 |
| samsung | SM-G920F | 834,655 |
| samsung | SM-G900F | 827,050 |
| samsung | SM-G610F | 786,659 |
| HUAWEI | ALE-L21 | 783,180 |
| OPPO | A37f | 701,488 |
| samsung | SM-G955U | 699,321 |
| samsung | SM-G930F | 685,195 |
| samsung | SM-J510FN | 673,415 |
| samsung | SM-G950U | 654,635 |
| samsung | SM-G530H | 651,695 |
| samsung | SM-J710F | 647,723 |
| motorola | Moto G (4) | 640,091 |
| samsung | SM-T110 | 627,013 |
| samsung | SM-J200G | 611,728 |
| OPPO | A1601 | 588,226 |
| samsung | SM-G925F | 571,858 |
| samsung | SM-G930V | 557,813 |
| samsung | SM-A510F | 533,209 |
| ZTE | Z981 | 532,290 |
| samsung | GT-I9300 | 516,580 |
| samsung | SM-J320FN | 511,109 |
| Xiaomi | Redmi Note 4 | 507,119 |
| samsung | GT-I9505 | 504,325 |
| samsung | GT-I9060I | 488,253 |
| samsung | SM-J120H | 472,748 |
| samsung | SM-G900V | 458,996 |
| Xiaomi | Redmi Note 3 | 435,822 |
| samsung | SM-A310F | 435,163 |
| samsung | SM-T560 | 435,042 |
| motorola | XT1069 | 433,667 |
| motorola | Moto G Play | 422,147 |
| LGE | LG-K430 | 406,009 |
| samsung | GT-I9500 | 392,674 |
| Xiaomi | Redmi 3S | 388,092 |
| samsung | SM-J700H | 384,922 |
| samsung | SM-G532G | 384,884 |
| samsung | SM-N9005 | 382,982 |
| samsung | SM-G531F | 382,728 |
| motorola | XT1033 | 380,899 |
| Generic | Android 7.0 | 374,405 |
| motorola | XT1068 | 373,075 |
| samsung | SM-J500FN | 372,029 |
| samsung | SM-J320M | 366,049 |
| samsung | SM-J105B | 351,985 |
| samsung | SM-T230 | 348,374 |
| samsung | SM-T280 | 347,350 |
| samsung | SM-T113NU | 341,313 |
| samsung | SM-T350 | 338,525 |
| samsung | SM-G935V | 337,090 |
| samsung | SM-J500F | 332,972 |
| samsung | SM-J320F | 329,165 |
| motorola | | |

Elenco Device Android.Xlsx

Manufacturer,Model Name,Model Code,RAM (TotalMem),Form Factor,System on Chip,Screen Sizes,Screen Densities,ABIs,Android SDK Versions,OpenGL ES Versions
10.or,10or_G2,G2,3742-5747MB,Phone,Qualcomm SDM636,1080x2246,480,arm64-v8a
10.or,E,E,1857-2846MB,Phone,Qualcomm MSM8937,1080x1920,480,arm64-v8a
10.or,G,G,2851-3582MB,Phone,Qualcomm MSM8953,1080x1920,480,arm64-v8a
10.or,D,10or_D,2868-2874MB,Phone,Qualcomm MSM8917,720x1280,320,arm64-v8a
7mobile,SWEGUE,SWEGUE,1836MB,Phone,Mediatek MT6737T,1080x1920,480,arm64-v8a
7mobile,Kamba 2,7mobile_Kamba_2,2884MB,Phone,Mediatek MT6739CH,720x1440,320,arm64-v8a
A1,A1 Alpha 20+,P671F60,3726MB,Phone,Mediatek MT6771T,1080x2340,480,arm64-v8a
Accent,FAST10,FAST10,1819MB,Tablet,Spreadtrum SC9863A,1280x800,213,arm64-v8a
ACE France,BUZZ 2,BUZZ_2,850MB,Phone,Spreadtrum SC9863A,720x1560,320,armeabi-v7a
ACE France,BUZZ 2,BUZZ_2_Plus,1819MB,Phone,Spreadtrum SC9863A,720x1560,320,arm64-v8a
ACE France,URBAN 2,URBAN_2,2824MB,Phone,Spreadtrum SC9863A,720x1560,320,arm64-v8a
ACE France,AS0518,AS0518,1822MB,Phone,Mediatek MT6762,720x1440,320,arm64-v8a
ACE France,URBAN 1,URBAN_1,1839MB,Phone,Mediatek MT6739WA,720x1440,320,arm64-v8a
ACE France,AS0618,AS0618,2826MB,Phone,Mediatek MT6752,720x1500,320,arm64-v8a
Acer,Iconia Tab 10,acer_asgard,1970MB,Tablet,Mediatek MT8176A,800x1280,160,arm64-v8a
Acer,Iconia Tab 10,acer_asgardfhd,1954MB,Tablet,Mediatek MT8167B,1920x1200,240,arm64-v8a
Acer,Iconia Tab 10,acer_Titan,3959MB,Tablet,Mediatek MT8176A,1200x1920,240,arm64-v8a
Acer,Liquid Z530S,acer_t05,2935MB,Phone,Mediatek MT6753,720x1280,320,arm64-v8a
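The device catalogue excerpt above is comma-separated, with a header naming eleven columns while each visible record carries only nine fields. As a minimal sketch of how such records might be read, the Python below maps each row onto the header and turns the RAM field into a numeric range. The function names `parse_ram_mb` and `load_devices` and the derived `ram_mb` key are hypothetical and not part of the source; the three sample rows are copied from the excerpt.

```python
import csv
import io

# Header and sample rows copied from the excerpt above.
HEADER = ("Manufacturer,Model Name,Model Code,RAM (TotalMem),Form Factor,"
          "System on Chip,Screen Sizes,Screen Densities,ABIs,"
          "Android SDK Versions,OpenGL ES Versions")

SAMPLE_ROWS = """\
10.or,10or_G2,G2,3742-5747MB,Phone,Qualcomm SDM636,1080x2246,480,arm64-v8a
10.or,E,E,1857-2846MB,Phone,Qualcomm MSM8937,1080x1920,480,arm64-v8a
ACE France,BUZZ 2,BUZZ_2,850MB,Phone,Spreadtrum SC9863A,720x1560,320,armeabi-v7a
"""


def parse_ram_mb(text):
    """Return (min_mb, max_mb) from a value like '850MB' or '3742-5747MB'."""
    if text.endswith("MB"):
        text = text[:-2]
    low, _, high = text.partition("-")
    return int(low), int(high or low)


def load_devices(header_line, body):
    """Map each CSV record onto the header columns (hypothetical helper)."""
    columns = next(csv.reader([header_line]))
    devices = []
    for fields in csv.reader(io.StringIO(body)):
        if not fields:
            continue  # skip blank lines
        # zip stops at the shorter sequence, so columns that the excerpt
        # does not include are simply absent from the record.
        record = dict(zip(columns, fields))
        record["ram_mb"] = parse_ram_mb(record["RAM (TotalMem)"])
        devices.append(record)
    return devices


devices = load_devices(HEADER, SAMPLE_ROWS)
for device in devices:
    print(device["Manufacturer"], device["Model Name"],
          device["ram_mb"], device["ABIs"])
```

Leaving the missing trailing columns absent, rather than filling them with placeholders, keeps the sketch from inventing values the excerpt does not contain.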