Parallel Sorting with Minimal Data

Recommended publications

Time Complexity

Chapter 3: Time complexity

Time complexity makes it easy to estimate the running time of a program. Accurately calculating a program's running time is very labour-intensive (it depends on the compiler and on the type of computer or the speed of the processor). Therefore, we do not measure it exactly; we only estimate its order of magnitude.

Complexity can be viewed as the maximum number of primitive operations that a program may execute. Primitive operations are single additions, multiplications, assignments, etc. We may leave some operations uncounted and concentrate on those that are performed the largest number of times. Such operations are referred to as dominant.

The number of dominant operations depends on the specific input data. We usually want to know how the running time depends on a particular aspect of the data. This is most frequently the data size, but it can also be the size of a square matrix or the value of some input variable.

3.1: Which is the dominant operation?

    1 def dominant(n):
    2     result = 0
    3     for i in xrange(n):
    4         result += 1
    5     return result

The operation in line 4 is dominant and will be executed n times. The complexity is described in Big-O notation: in this case O(n), linear complexity.

The complexity specifies the order of magnitude within which the program will perform its operations. More precisely, in the case of O(n), the program may perform c · n operations, where c is a constant; however, it may not perform n^2 operations, since this involves a different order of magnitude.
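To make the idea of a dominant operation concrete, here is a minimal Python 3 sketch that counts how often the dominant operation runs in a single loop and in two nested loops. The function names `count_linear` and `count_quadratic` are hypothetical and are not part of the excerpt.

```python
def count_linear(n):
    """Count executions of the dominant operation in a single loop."""
    operations = 0
    for _ in range(n):
        operations += 1  # dominant operation: runs n times
    return operations


def count_quadratic(n):
    """Count executions of the dominant operation in two nested loops."""
    operations = 0
    for _ in range(n):
        for _ in range(n):
            operations += 1  # dominant operation: runs n * n times
    return operations


if __name__ == "__main__":
    for n in (10, 100, 1000):
        print(n, count_linear(n), count_quadratic(n))
```

Multiplying n by 10 multiplies the first count by 10 and the second by 100, which is the difference in order of magnitude that O(n) and O(n^2) express.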

Quick Sort Algorithm

Song Qin, Dept. of Computer Sciences, Florida Institute of Technology, Melbourne, FL 32901

ABSTRACT
Given an array with n elements, we want to rearrange them in ascending order. In this paper, we introduce Quick Sort, a divide-and-conquer algorithm to sort an N-element array. We evaluate the O(N log N) time complexity in the best case and O(N^2) in the worst case theoretically. We also introduce a way to approach the best case.

1. INTRODUCTION
Search engines rely heavily on sorting algorithms. When you search for a keyword online, the results are brought to you sorted by the importance of the web page. Bubble, Selection and Insertion Sort all have an O(N^2) time complexity that limits their usefulness to small inputs of no more than a few thousand data points.

… each iteration. Repeat this on the rest of the unsorted region without the first element.

Bubble sort works as follows: keep passing through the list, exchanging adjacent elements that are out of order; when no exchanges are required on some pass, the list is sorted.

Merge sort [4] has an O(N log N) time complexity. It divides the array into two subarrays, each with N/2 items. Conquer each subarray by sorting it. Unless the array is sufficiently small (one element left), use recursion to do this. Combine the solutions to the subarrays by merging them into a single sorted array.

In Bubble sort, Selection sort and Insertion sort, the O(N^2) time complexity limits the performance when N gets very big.
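The bubble sort description above can be sketched as follows. This is an illustrative Python version, not code from the paper; the name `bubble_sort` and the choice to return a sorted copy are assumptions.

```python
def bubble_sort(items):
    """Return a sorted copy of items using bubble sort with early exit."""
    result = list(items)
    swapped = True
    while swapped:  # keep passing through the list
        swapped = False
        for i in range(len(result) - 1):
            if result[i] > result[i + 1]:
                # exchange adjacent elements that are out of order
                result[i], result[i + 1] = result[i + 1], result[i]
                swapped = True
    # a full pass with no exchanges means the list is sorted
    return result


if __name__ == "__main__":
    print(bubble_sort([5, 2, 4, 1, 3]))
```

In the worst case each of up to N passes compares N - 1 adjacent pairs, which is where the O(N^2) bound comes from.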

Mergesort and Quicksort

• mergesort
• mergesort analysis
• quicksort
• quicksort analysis
• animations

Reference: Algorithms in Java, Chapters 7 and 8. Copyright © 2007 by Robert Sedgewick and Kevin Wayne.

Mergesort and Quicksort

Two great sorting algorithms.
• Full scientific understanding of their properties has enabled us to hammer them into practical system sorts.
• Occupy a prominent place in world's computational infrastructure.
• Quicksort honored as one of top 10 algorithms of 20th century in science and engineering.

Mergesort.
• Java sort for objects.
• Perl, Python stable.

Quicksort.
• Java sort for primitive types.
• C qsort, Unix, g++, Visual C++, Python.

Mergesort

Basic plan:
• Divide array into two halves.
• Recursively sort each half.
• Merge two halves to make sorted whole.

Mergesort: Example

Merging

Merging. Combine two pre-sorted lists into a sorted whole.

How to merge efficiently? Use an auxiliary array.

[Figure: merging aux[] = A G L O R | H I M S T into a[] = A G H I L M …, with indices l, i, m, j, r on aux[] and k on a[]]

    private static void merge(Comparable[] a, Comparable[] aux, int l, int m, int r)
    {
        // copy
        for (int k = l; k < r; k++) aux[k] = a[k];

        // merge
        int i = l, j = m;
        for (int k = l; k < r; k++)
            if      (i >= m)                a[k] = aux[j++];
            else if (j >= r)                a[k] = aux[i++];
            else if (less(aux[j], aux[i]))  a[k] = aux[j++];
            else                            a[k] = aux[i++];
    }

Mergesort: Java implementation of recursive sort

    public class Merge { …

Mergesort analysis: Memory

Q. How much memory does mergesort require?
A. Too much!
• Original input array = N.
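As a rough illustration of how the three-step plan fits together with the merge step, here is a Python sketch. It mirrors the half-open index convention of the Java `merge` in the slides (left run `a[l:m]`, right run `a[m:r]`); the helper names `_sort` and `merge_sort` are assumptions, since the slides' recursive driver is cut off in this excerpt.

```python
def merge(a, aux, l, m, r):
    """Merge sorted runs a[l:m] and a[m:r] in place, using aux as scratch."""
    aux[l:r] = a[l:r]  # copy
    i, j = l, m
    for k in range(l, r):  # merge
        if i >= m:  # left run exhausted
            a[k] = aux[j]
            j += 1
        elif j >= r:  # right run exhausted
            a[k] = aux[i]
            i += 1
        elif aux[j] < aux[i]:  # take from the right only if strictly smaller
            a[k] = aux[j]
            j += 1
        else:
            a[k] = aux[i]
            i += 1


def _sort(a, aux, l, r):
    """Sort a[l:r]: divide, recursively sort each half, merge."""
    if r - l <= 1:
        return
    m = l + (r - l) // 2
    _sort(a, aux, l, m)
    _sort(a, aux, m, r)
    merge(a, aux, l, m, r)


def merge_sort(a):
    """Sort the list a in place with top-down mergesort."""
    aux = [None] * len(a)  # one auxiliary array of size N, shared by all merges
    _sort(a, aux, 0, len(a))


if __name__ == "__main__":
    letters = list("MERGESORTEXAMPLE")
    merge_sort(letters)
    print("".join(letters))
```

Allocating `aux` once in `merge_sort` keeps the extra memory at N entries, which is the cost the "Memory" slide refers to.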

2.3 Quicksort

‣ quicksort ‣ selection ‣ duplicate keys ‣ system sorts

Algorithms, 4th Edition · Robert Sedgewick and Kevin Wayne · Copyright © 2002–2010 · January 30, 2011 12:46:16 PM

Two classic sorting algorithms

Critical components in the world's computational infrastructure.
• Full scientific understanding of their properties has enabled us to develop them into practical system sorts.
• Quicksort honored as one of top 10 algorithms of 20th century in science and engineering.

Mergesort. (last lecture)
• Java sort for objects.
• Perl, C++ stable sort, Python stable sort, Firefox JavaScript, ...

Quicksort. (this lecture)
• Java sort for primitive types.
• C qsort, Unix, Visual C++, Python, Matlab, Chrome JavaScript, ...

Quicksort

Basic plan.
• Shuffle the array.
• Partition so that, for some j
  - element a[j] is in place
  - no larger element to the left of j
  - no smaller element to the right of j
• Sort each piece recursively.

[Photo: Sir Charles Antony Richard Hoare, 1980 Turing Award]

Quicksort overview:

    input       Q U I C K S O R T E X A M P L E
    shuffle     K R A T E L E P U I M Q C X O S
    partition   E C A I E K L P U T M Q R X O S   (partitioning element K: not greater to its left, not less to its right)
    sort left   A C E E I K L P U T M Q R X O S
    sort right  A C E E I K L M O P Q R S T U X
    result      A C E E I K L M O P Q R S T U X

Quicksort partitioning

Basic plan. …
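The basic plan above (shuffle, partition, sort each piece recursively) can be sketched in Python as follows. The partitioning loop here is one common two-pointer scheme with `a[lo]` as the partitioning element; the excerpt is truncated before the slides give their own partitioning code, so treat the details, and the names `partition`, `_sort` and `quicksort`, as assumptions.

```python
import random


def partition(a, lo, hi):
    """Partition a[lo..hi] around a[lo]; return the pivot's final index j."""
    pivot = a[lo]
    i, j = lo + 1, hi
    while True:
        while i <= hi and a[i] < pivot:  # scan right for an item not less than pivot
            i += 1
        while a[j] > pivot:  # scan left for an item not greater than pivot
            j -= 1
        if i >= j:  # pointers crossed: partitioning is done
            break
        a[i], a[j] = a[j], a[i]
        i += 1
        j -= 1
    a[lo], a[j] = a[j], a[lo]  # put the pivot in its final place
    return j


def _sort(a, lo, hi):
    """Sort a[lo..hi] by partitioning and recursing on both pieces."""
    if hi <= lo:
        return
    j = partition(a, lo, hi)
    _sort(a, lo, j - 1)
    _sort(a, j + 1, hi)


def quicksort(a):
    """Sort the list a in place: shuffle, then partition recursively."""
    random.shuffle(a)  # makes the quadratic worst case unlikely for any input
    _sort(a, 0, len(a) - 1)


if __name__ == "__main__":
    letters = list("QUICKSORTEXAMPLE")
    quicksort(letters)
    print("".join(letters))
```

After `partition` returns j, no element left of j is larger than `a[j]` and no element right of j is smaller, which is exactly the condition stated in the plan.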

An Evolutionary Approach for Sorting Algorithms

ORIENTAL JOURNAL OF COMPUTER SCIENCE & TECHNOLOGY
An International Open Free Access, Peer Reviewed Research Journal
ISSN: 0974-6471. December 2014, Vol. 7, No. (3): Pgs. 369-376
Published By: Oriental Scientific Publishing Co., India. www.computerscijournal.org

Root to Fruit (2): An Evolutionary Approach for Sorting Algorithms

PRAMOD KADAM and SACHIN KADAM
BVDU, IMED, Pune, India.
(Received: November 10, 2014; Accepted: December 20, 2014)

ABSTRACT
This paper continues the earlier evolutionary study of the sorting problem and sorting algorithms (Root to Fruit (1): An Evolutionary Study of Sorting Problem) [1] and concludes with a chronological list of the early pioneers of the sorting problem or algorithms. Later in the study, a graphical method is used to present the evolution of the sorting problem and sorting algorithms on a timeline.

Key words: Evolutionary study of sorting, History of sorting, Early sorting algorithms, List of inventors for sorting.

INTRODUCTION
In spite of plentiful literature and research in the sorting-algorithm domain, the documentation is a mess as far as credit is concerned [2]. Perhaps this problem arises from a lack of coordination and the unavailability of a common platform or knowledge base in the domain. An evolutionary study of sorting algorithms or the sorting problem is the foundation of a future knowledge base for the sorting problem domain [1].

… name and their contribution may have been skipped from the study. Therefore readers have every right to extend this study with valid proofs. Ultimately, our objective behind this research is very clear: to strengthen the evolutionary study of sorting algorithms and to move towards a good knowledge base that preserves the work of our forebears for the upcoming generation. Otherwise the coming generation could receive hardly any information about sorting problems, and syllabi may be restricted to some …

Edsger W. Dijkstra: a Commemoration

Krzysztof R. Apt (1) and Tony Hoare (2) (editors)
(1) CWI, Amsterdam, The Netherlands and MIMUW, University of Warsaw, Poland
(2) Department of Computer Science and Technology, University of Cambridge and Microsoft Research Ltd, Cambridge, UK

arXiv:2104.03392v1 [cs.GL] 7 Apr 2021

Abstract
This article is a multiauthored portrait of Edsger Wybe Dijkstra that consists of testimonials written by several friends, colleagues, and students of his. It provides unique insights into his personality, working style and habits, and his influence on other computer scientists, as a researcher, teacher, and mentor.

Contents
Preface, Tony Hoare, Donald Knuth, Christian Lengauer, K. Mani Chandy, Eric C.R. Hehner, Mark Scheevel, Krzysztof R. Apt, Niklaus Wirth, Lex Bijlsma, Manfred Broy, David Gries, Ted Herman, Alain J. Martin, J Strother Moore, Vladimir Lifschitz, Wim H. Hesselink, Hamilton Richards, Ken Calvert, David Naumann, David Turner, J.R. Rao, Jayadev Misra, Rajeev Joshi, Maarten van Emden, Two Tuesday Afternoon Clubs

Preface
Edsger Dijkstra was perhaps the best known, and certainly the most discussed, computer scientist of the seventies and eighties. We both knew Dijkstra (though each of us in different ways) and we both were aware that his influence on computer science was not limited to his pioneering software projects and research articles. He interacted with his colleagues by way of numerous discussions, extensive letter correspondence, and hundreds of so-called EWD reports that he used to send to a select group of researchers.

Sorting Algorithms in the C++ Programming Language (Algoritmi za sortiranje u programskom jeziku C++), Final Thesis

UNIVERSITY OF RIJEKA (SVEUČILIŠTE U RIJECI)
FACULTY OF HUMANITIES AND SOCIAL SCIENCES IN RIJEKA (FILOZOFSKI FAKULTET U RIJECI)
DEPARTMENT OF POLYTECHNICS (ODSJEK ZA POLITEHNIKU)

Sorting Algorithms in the C++ Programming Language
Final thesis

Thesis mentor: doc. dr. sc. Marko Maliković
Student: Alen Jakus
Rijeka, 2016

University of Rijeka, Faculty of Humanities and Social Sciences, Department of Polytechnics
Rijeka, Sveučilišna avenija 4
Committee for Final and Graduate Examinations
Rijeka, 7 April 2016

FINAL THESIS ASSIGNMENT (university undergraduate study programme in polytechnics)

Candidate: Alen Jakus
Assignment: Sorting algorithms in the C++ programming language

The solution to the assignment must cover the following:
1. Give an overview of sorting algorithms.
2. Describe the selected sorting algorithms.
3. Illustrate the operation of the selected sorting algorithms with diagrams.
4. Describe the basic properties of the C++ programming language.
5. Describe in detail the data types, derived data forms, statements and other elements of the C++ programming language used in the solutions to the selected problems.
6. Describe the solutions obtained from the written programs.
7. Include the complete code in the C++ programming language.

The final thesis must comply with the Regulations on the Graduate Thesis and the Instructions for Preparing the Final Thesis of the university undergraduate study programme.

Assignment handed to the candidate: 7 April 2016
Deadline for submitting the final thesis: ____________________
Date of submission of the final thesis: ____________________
Assignment set by: Doc. dr. sc. Marko Maliković

FACULTY OF HUMANITIES AND SOCIAL SCIENCES IN RIJEKA, Department of Polytechnics
Rijeka, 7 April 2016

ASSIGNMENT FOR THE FINAL THESIS (university undergraduate study programme in polytechnics)

Candidate: Alen Jakus
Title of the final thesis: Sorting algorithms in the C++ programming language
Brief description of the assignment: Give an overview of sorting algorithms. Describe the selected sorting algorithms.

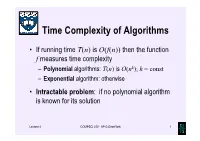

Time Complexity of Algorithms

Lecture 4, COMPSCI 220 - AP G Gimel'farb

• If running time T(n) is O(f(n)) then the function f measures time complexity
  – Polynomial algorithms: T(n) is O(n^k); k = const
  – Exponential algorithm: otherwise
• Intractable problem: if no polynomial algorithm is known for its solution

Time complexity growth

Number of data items processed per period:

| f(n)       | 1 minute | 1 day  | 1 year    | 1 century |
|------------|----------|--------|-----------|-----------|
| n          | 10       | 14,400 | 5.26⋅10^6 | 5.26⋅10^8 |
| n log10 n  | 10       | 3,997  | 883,895   | 6.72⋅10^7 |
| n^1.5      | 10       | 1,275  | 65,128    | 1.40⋅10^6 |
| n^2        | 10       | 379    | 7,252     | 72,522    |
| n^3        | 10       | 112    | 807       | 3,746     |
| 2^n        | 10       | 20     | 29        | 35        |

Beware exponential complexity

☺ If a linear O(n) algorithm processes 10 items per minute, then it can process 14,400 items per day, 5,260,000 items per year, and 526,000,000 items per century.
☻ If an exponential O(2^n) algorithm processes 10 items per minute, then it can process only 20 items per day and 35 items per century...

Big-Oh vs. Actual Running Time

• Example 1: Let algorithms A and B have running times T_A(n) = 20n ms and T_B(n) = 0.1 n log2 n ms
• In the "Big-Oh" sense, A is better than B…
• But: on which data volume can A outperform B? T_A(n) < T_B(n) if 20n < 0.1 n log2 n, or log2 n > 200, that is, when n > 2^200 ≈ 10^60!
• Thus, in all practical cases B is better than A…

Big-Oh vs. …
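The growth figures above can be checked with a short Python sketch. It assumes that "10 items per minute" fixes a time budget of f(10) work units per minute, and it searches for the largest n whose cost f(n) fits into the budget of a longer period. The name `max_items` and this reading of the slide are assumptions, not something the lecture states.

```python
import math


def max_items(f, minutes, rate_per_minute=10):
    """Largest integer n such that f(n) <= minutes * f(rate_per_minute)."""
    budget = minutes * f(rate_per_minute)
    hi = 1
    while f(hi) <= budget:  # grow an upper bound by doubling
        hi *= 2
    lo = hi // 2  # f(lo) fits in the budget (or lo == 0), f(hi) does not
    while hi - lo > 1:  # binary search between lo and hi
        mid = (lo + hi) // 2
        if f(mid) <= budget:
            lo = mid
        else:
            hi = mid
    return lo


GROWTH = {
    "n": lambda n: n,
    "n log10 n": lambda n: n * math.log10(n),
    "n^1.5": lambda n: n ** 1.5,
    "n^2": lambda n: n ** 2,
    "n^3": lambda n: n ** 3,
    "2^n": lambda n: 2 ** n,
}

MINUTES_PER_DAY = 24 * 60

if __name__ == "__main__":
    for name, f in GROWTH.items():
        print(name, max_items(f, MINUTES_PER_DAY))
    # Crossover of T_A(n) = 20n and T_B(n) = 0.1 n log2 n: log2 n > 20 / 0.1 = 200
    print("2^200 has", len(str(2 ** 200)), "decimal digits")
```

Under this assumption the per-day values come out as 14,400, 3,997, 1,275, 379, 112 and 20, matching the "1 day" column. The year and century columns depend on the year length the lecture used, so small differences there are possible.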

Heapsort Vs. Quicksort

CSE 202 - Dynamic Programming

Most groups had sound data and observed:
– Random problem instances
  • Heapsort runs perhaps 2x slower on small instances
  • It's even slower on larger instances
– Nearly-sorted instances:
  • Quicksort is worse than Heapsort on large instances.

Some groups counted comparisons:
  • Heapsort uses more comparisons on random data

Most groups concluded:
– Experiments show that MH2 predictions are correct
  • At least for random data

Sorting Random Data

| N       | Quicksort time (us) | Heapsort time (us) |
|---------|---------------------|--------------------|
| 10      | 19                  | 21                 |
| 100     | 173                 | 293                |
| 1,000   | 2,238               | 5,289              |
| 10,000  | 28,736              | 78,064             |
| 100,000 | 355,949             | 1,184,493          |

"HeapSort is definitely growing faster (in running time) than is QuickSort. ... This lends support to the MH2 model."

Does it? What other explanations are there?

Sorting Random Data

| N       | Quicksort comparisons | Heapsort comparisons |
|---------|-----------------------|----------------------|
| 10      | 54                    | 56                   |
| 100     | 987                   | 1,206                |
| 1,000   | 13,116                | 18,708               |
| 10,000  | 166,926               | 249,856              |
| 100,000 | 2,050,479             | 3,136,104            |

But wait – the number of comparisons for Heapsort is also going up faster than for Quicksort. This has nothing to do with the MH2 analysis. How can we see if MH2 analysis is relevant?

Sorting Random Data

| N       | Time (us), Quicksort | Time (us), Heapsort | Compares, Quicksort | Compares, Heapsort | Time / compare (ns), Quicksort | Time / compare (ns), Heapsort |
|---------|----------------------|---------------------|---------------------|--------------------|--------------------------------|-------------------------------|
| 10      | 19                   | 21                  | 54                  | 56                 | 352                            | 375                           |
| 100     | 173                  | 293                 | 987                 | 1,206              | 175                            | 243                           |
| 1,000   | 2,238                | 5,289               | 13,116              | 18,708             | 171                            | 283                           |
| 10,000  | 28,736               | 78,064              | 166,926             | 249,856            | 172                            | 312                           |
| 100,000 | 355,949              | 1,184,493           | 2,050,479           | 3,136,104          | 174                            | 378                           |

Nice data!
– Why does N = 10 take so much longer per comparison?
– Why does Heapsort always take longer than Quicksort?
– Is Heapsort growth as predicted by MH2 model?
  • Is N large enough to be interesting??

(Machine is a Sun Ultra 10)
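One way to count comparisons, as some groups did, is to wrap each key in an object that increments a counter on every `<`. The Python sketch below is only an illustration of that idea: the `Counted` class, the quicksort variant, and the `heapq`-based heapsort are assumptions and are not the implementations measured in the slides, so the counts will not match the tables.

```python
import heapq
import random


class Counted:
    """Wrap a value and count every '<' comparison made between wrappers."""
    comparisons = 0

    def __init__(self, value):
        self.value = value

    def __lt__(self, other):
        Counted.comparisons += 1
        return self.value < other.value


def heapsort(items):
    """Heapsort via the standard library's binary heap (not in place)."""
    heap = list(items)
    heapq.heapify(heap)
    return [heapq.heappop(heap) for _ in range(len(heap))]


def _quicksort(a, lo, hi):
    if hi <= lo:
        return
    pivot = a[lo]
    i, j = lo + 1, hi
    while True:
        while i <= hi and a[i] < pivot:
            i += 1
        while pivot < a[j]:
            j -= 1
        if i >= j:
            break
        a[i], a[j] = a[j], a[i]
        i += 1
        j -= 1
    a[lo], a[j] = a[j], a[lo]
    _quicksort(a, lo, j - 1)
    _quicksort(a, j + 1, hi)


def quicksort(items):
    """Randomized quicksort on a copy of items, using only '<'."""
    a = list(items)
    random.shuffle(a)
    _quicksort(a, 0, len(a) - 1)
    return a


def count_comparisons(sort, values):
    """Return (number of comparisons, sorted plain values) for one run."""
    Counted.comparisons = 0
    result = sort([Counted(v) for v in values])
    return Counted.comparisons, [c.value for c in result]


if __name__ == "__main__":
    for n in (10, 100, 1000, 10000):
        values = [random.random() for _ in range(n)]
        q, _ = count_comparisons(quicksort, values)
        h, _ = count_comparisons(heapsort, values)
        print(n, q, h)
```

Dividing a measured running time by such a count gives the time-per-compare figure used in the last table, which separates "more comparisons" from "slower comparisons" as explanations.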

A Short History of Computational Complexity

The Computational Complexity Column
by Lance Fortnow, NEC Laboratories America, 4 Independence Way, Princeton, NJ 08540, USA
http://www.neci.nj.nec.com/homepages/fortnow/beatcs

Every third year the Conference on Computational Complexity is held in Europe, and this summer the University of Aarhus (Denmark) will host the meeting July 7-10. More details at the conference web page http://www.computationalcomplexity.org

This month we present a historical view of computational complexity written by Steve Homer and myself. This is a preliminary version of a chapter to be included in an upcoming North-Holland Handbook of the History of Mathematical Logic edited by Dirk van Dalen, John Dawson and Aki Kanamori.

A Short History of Computational Complexity

Lance Fortnow, NEC Research Institute, 4 Independence Way, Princeton, NJ 08540
Steve Homer, Computer Science Department, Boston University, 111 Cummington Street, Boston, MA 02215

1 Introduction

It all started with a machine. In 1936, Turing developed his theoretical computational model. He based his model on how he perceived mathematicians think. As digital computers were developed in the 40's and 50's, the Turing machine proved itself as the right theoretical model for computation.

Quickly though we discovered that the basic Turing machine model fails to account for the amount of time or memory needed by a computer, a critical issue today but even more so in those early days of computing. The key idea to measure time and space as a function of the length of the input came in the early 1960's by Hartmanis and Stearns.

Advanced Topics in Sorting

‣ complexity ‣ system sorts ‣ duplicate keys ‣ comparators

Complexity of sorting

Computational complexity. Framework to study efficiency of algorithms for solving a particular problem X.

Machine model. Focus on fundamental operations.
Upper bound. Cost guarantee provided by some algorithm for X.
Lower bound. Proven limit on cost guarantee of any algorithm for X.
Optimal algorithm. Algorithm with best cost guarantee for X (lower bound ~ upper bound).

Example: sorting.
• Machine model = # comparisons (access information only through compares)
• Upper bound = N lg N from mergesort.
• Lower bound ?

Decision Tree

[Figure: decision tree for sorting three items a, b, c. Internal nodes are compares (a < b, b < c, a < c) with yes/no branches; edges stand for the code between comparisons (e.g., sequence of exchanges); the leaves are the six orderings a b c, b a c, a c b, c a b, b c a, c b a.]

Comparison-based lower bound for sorting

Theorem. Any comparison based sorting algorithm must use more than N lg N - 1.44 N comparisons in the worst-case.

Pf.
• Assume input consists of N distinct values a_1 through a_N.
• Worst case dictated by tree height h.
• N! different orderings.
• (At least) one leaf corresponds to each ordering.
• Binary tree with N! leaves cannot have height less than lg (N!).

    h ≥ lg N!
      ≥ lg (N / e)^N          (Stirling's formula)
      = N lg N − N lg e
      ≈ N lg N − 1.44 N

Complexity of sorting

Upper bound. Cost guarantee provided by some algorithm for X.
Lower bound. Proven limit on cost guarantee of any algorithm for X.
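The chain of inequalities in the proof can be checked numerically. The following Python sketch computes lg(N!) directly and compares it with the Stirling-style bound N lg N − N lg e (lg e is about 1.44); the function names are hypothetical.

```python
import math


def lg_factorial(n):
    """lg(n!) computed as a sum of base-2 logarithms."""
    return sum(math.log2(k) for k in range(2, n + 1))


def stirling_bound(n):
    """N lg N - N lg e, the lower bound on lg(N!) from N! >= (N / e)^N."""
    return n * math.log2(n) - n * math.log2(math.e)


def min_worst_case_compares(n):
    """Smallest height of a binary tree with n! leaves: ceil(lg(n!)), exactly."""
    return (math.factorial(n) - 1).bit_length()


if __name__ == "__main__":
    for n in (3, 10, 100, 1000):
        print(n, min_worst_case_compares(n), lg_factorial(n), stirling_bound(n))
```

For N = 3 this gives a minimum height of 3, which agrees with the three-item decision tree: six leaves cannot fit in a binary tree of height 2.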