Elements of Photonics

Total pages: 16

File type: PDF, Size: 1020 KB


Recommended publications

-

888-9-LEVELS | Table of Contents MODEL NUMBER REFERENCE GUIDE 1-2

2016. 888-9-LEVELS | www.johnsonlevel.com

Take a closer look at what separates Johnson from the rest... With more than 65 years of developing solutions to help professional tradesmen improve their work, Johnson products are trusted by professionals worldwide to help them work more accurately, more quickly and more reliably. Over the years, we have built a comprehensive portfolio of leveling, marking and layout tools which includes construction grade lasers, levels and squares. In addition, Johnson is positioned to offer a broad spectrum of laser distance measures, measuring wheels, digital measuring tools, and digital levels and protractors. Like every product we supply, Johnson brand products are designed to offer a high quality tool that represents the highest value product available anywhere. Johnson has a reputation for exceptional service, education and everyday dependability to exceed expectations for quality and service.

Table of Contents: MODEL NUMBER REFERENCE GUIDE 1-2; WARRANTY 3. LASERS: Rotary Lasers 6-23; Pipe Laser 24; Dot Lasers 25-28; Combination Line & Dot Lasers 29-32; Line Lasers 33-43; Torpedo Lasers 41; Sheave Alignment & Industrial Lasers 44-47; MSHA Mining Lasers 48-49; Accessories 50-56. OPTICAL INSTRUMENTS: Theodolites 58-59; Automatic Levels 60; Level & Transit Levels 61-62. LASER DISTANCE MEASURING 64-66. ELECTRONIC DIGITAL: Levels 68-72; Angle Locators 73-76; Digital Measuring 77-79. LEVELS: Wood Levels 81; Box Levels 82-85; I-Beam Levels 86-88; Torpedo Levels 89-92; Line & Surface Levels 93; Specialty Levels 94. SQUARES: Framing & Carpenter Squares 96-98; Rafter Squares 99-100; Combination Squares 101-102; Special Purpose Squares 103-104; T-Squares 105-106. MEASURING: Straight Edges & Cutting Guide 108-109; Measuring Tapes 110-114; Measuring Wheels 115-116. MARKING & SPECIALTY TOOLS: Carpenter Pencils & Crayons 118; Barricade Tape 118; Plumb Bobs 119. MERCHANDISING 120.

MODEL NUMBER REFERENCE (MODEL NO, PAGE): 012 ........................119; 1737-2459 ............. -

Measuring Technology from Bosch

Your benchmark for precision: Measuring technology from Bosch. Measuring – PLR 25, PLR 50 and PMB 300 L. Levelling – PCL 10, PCL 20, PLT 2 and PLL 5. Detecting – PDO Multi and PDO 6. Subject to technical alterations. No liability is accepted for printing errors. 1619GU10 17 – GB – Printed in Federal Republic of Germany. Robert Bosch Ltd, PO Box 98, Uxbridge, Middlesex UB9 5HN, www.bosch-do-it.co.uk

As precise as can be: the Laser Rangefinders from Bosch. The Laser Rangefinders PLR 25 and PLR 50 from Bosch are equipped with state-of-the-art laser technology. They provide measurements with ultimate precision and reliability because one thing is certain: nothing is more precise than measuring with a laser.

Laser measurement with PLR 25 and 50: Precise measurement using a laser. Measuring accuracy of ± 2 mm (regardless of distance). By comparison, ultrasonic measurement: Tapered measurement using ultrasonic technology. Typical measuring accuracy of ± 50 mm over 10 m.

Precise measurement of distances, areas and volumes. Aim at the target, press the measurement button, and read the precise measurement result. That’s how quick and easy it is to measure distances, areas or volumes with the Bosch Laser Rangefinders PLR 25 and PLR 50. A particularly handy feature is that you can measure from the front or back edge of the instruments. Using the laser point and targeting aid, you can accurately measure a distance of up to 25 m (PLR 25) or even up to 50 m (PLR 50) and the result will be instantly and reliably shown on the large display. -

I-Beam Levels

PRODUCT CATALOG WHY JOHNSON Founded in 1947, Johnson is a leading manufacturer of professional quality tools designed to help our customers get their work done more quickly and accurately. We believe our success is founded in a strong working relationship with our distributor customers and the professional tool user. Over the years we have built a comprehensive portfolio of leveling, measuring, marking and layout tools which has expanded into construction grade lasers, laser distance measurers and industrial grade machine mountable lasers and levels. Every product we produce is designed to offer our targeted end user a high quality tool that represents the highest value finished product available anywhere. We spend countless hours listening to the voice of the end user where we learn about their work habits, expectations and needs. They ask us to design products that are easy to understand, easy to use, durable, reliable and accurate. They ask for innovation because product innovation creates end user excitement. As a result, we are committed to tenaciously expanding our product offering and driving the highest value for our customers. As the marketplace continues to change, we strive to provide an exceptional overall customer experience through expanding product lines, exceptional fill rates and service levels, well trained and competent Team Members, and the flexibility to meet your specific needs and expectations. Every Team Member at Johnson is committed to exceeding every expectation you may have of a supplier-partner. We work hard every day to earn your business and hope you take the time to see what separates Johnson from the rest. -

Measuring - Calipers, Barometers and Thermometers

MEASURING - CALIPERS, BAROMETERS AND THERMOMETERS

DIGITAL BREAST BRIDGE The Digital Breast Bridge aids in setting up tangential breast treatment fields. After the borders of the tangential fields are determined, place the digital breast bridge at these skin marks. The digital readout will give the angles needed for the gantry. The field separation is determined by the scale on the rails. The acrylic plates are 20 cm wide x 15 cm high and have 1 cm inscribed markings. Separations can be measured from 13 cm to 31 cm. Weight: 3.2 lbs. Item # 270-001, Description: Digital Breast Bridge.

BREAST BRIDGE The Breast Bridge aids in setting up portals for tangential breast treatment. After the area to be treated has been marked, the bridge is placed on the patient’s chest and adjusted to the skin markings, thus determining the separation of the fields. The angulation of the portals is determined from the ball protractor. The acrylic plates are 20 cm wide x 15 cm high and have 1 cm inscribed markings. Separations can be measured from 15 cm to 35 cm. The ball protractor provides angulation readings from 15° to 80°. Item # 270-000, Description: Breast Bridge.

BREAST BRIDGE COMPRESSOR The Breast Bridge Compressor is used to compress the breast, particularly when it is desired to increase the dose to the residual mass at the end of a course of treatment. The aluminum mesh and frame are coated with a smooth blue vinyl plastic for minimum skin dose. Specifications: Treatment Area Size: 10 cm H x 15 cm W; Compression Distance: 1 cm to 13 cm. Item # 272-100, Description: Breast Bridge Compressor.

MAMMO CALIPER WITH DIGITAL LEVEL The Mammo Caliper is a rugged and accurate caliper designed to provide measurements for breast simulation or as general purpose caliper. -

Laser Tool Range

LASER TOOL RANGE stanleylasers.com

THE STANLEY® LASER RANGE Accurate measurement is essential for trouble-free construction sites, speeding up the job and minimising waste and reworked tasks. Our aim has been to create a range of complementary products, all built to our exacting standards, to enable professional users to work quickly and accurately. That’s why the STANLEY® Laser Tools range offers all the benefits of durable and well-engineered products with user-friendly features designed for the professional user.

LASER TOOLS AND MORE... Alongside the laser tools, we’ve included a range of Measuring Wheels and Optical Levels to provide everything needed from survey to finished build. Our comprehensive accessories include wall and ceiling mounts, laser detectors and glasses as well as tripod and pole options for mounting measuring and leveling tools. Remote controls, rechargeable battery packs, chargers and rods are all available to add even more functionality to the range.

CONTENTS 03 INTRODUCTION: 04 Applications & Tool Types; 06 The STANLEY® Standard; 07 Choosing the Right Laser for the Job. 09 LEVELLING: 10 Introduction; 12 Rotary Laser Levels; 16 Point Laser Levels; 18 Cross & Multi Line Laser Levels; 24 Optical Laser Levels. 28 MEASURING: 29 Introduction; 31 True Laser Measure; 38 Measuring Wheels. 40 DETECTING: 41 Introduction; 42 Stud Sensors; 44 Moisture Detectors. 46 ACCESSORIES: 46 Laser Pole, Glasses & Tripod. 51 SERVICE & REPAIR: 51 Service & repair.

SITE LAYOUT STANLEY® offer a wide range of professional laser tools to make the perfect start to any construction project. FIRST FIX Set out walls and internal structures simply and accurately. -

Wharton Survey Equipment Catalog

SURVEY EQUIPMENT | MEASURING EQUIPMENT | ACCESSORIES Surveyors Equipment Catalog 2018

WHARTON HARDWARE & SUPPLY CO., INC. – NJ, 7724 N. Cresent Blvd., Pennsauken, NJ 08110, NJ (856) 662-6935. WHARTON SUPPLY INC. OF VIRGINIA, 7620 Backlick Rd., Springfield, VA 22150, METRO (703) 569-6660 • VA (703) 455-6700. WHARTON FORM RENTALS – CLINTON, MD, 8042 Old Alexandria Ferry Road, Clinton, MD 20735, MD (301) 877-8640. www.whartonhardware.com

SURVEYING EQUIPMENT GUIDE Index

A. Automatic Levels: Topcon AT B4..........6
E. Engineering Field Book: Pentax Field Book..........9
I. Inverted Tip Marking Paint: Seymour Brand Stripe Fluorescent Paint Spray............11
L. Laser Levels: Topcon Leo3..........5; Topcon RLH4C..........3; Topcon RLVH4DR-GC..........5. Laser Receivers: LS-80 Laser Receiver..........6. Laser Systems: Pacific PLS-3..........7; Pacific PLS-5..........8; Pacific PLS-5X..........8
M. Measuring Wheels: Keson MP401/MP301..........13; Keson RR30..........12; Keson RR182..........13
P. Pipe Laser: Topcon TP-L4B..........4
R. Rotating Lasers: Pacific Laser HVR-500..........9; Pacific Laser HVR-1000..........9; Topcon Taurus 2LS..........4
S. Stripe Mark Machine: Seymour Z-604..........11. Stripe Wand: Seymour Z-606..........11. Survey Marking Flag: Keson Survey Marking Flags..........10. Surveyors Tape: Keson Vinyl Surveyors Tape..........11. Survey Rods: Topcon Rods..........6. Survey Stakes: Oak..........10
T. Tri-pods: Topcon TP-110/TP-100..........3 -

STABILA Catalogue — the PRODUCTS

STABILA Messgeräte Gustav Ullrich GmbH, Landauer Str. 45, 76855 Annweiler, Germany. +49 6346 309-0, +49 6346 309-480, [email protected], www.stabila.com. EN 01/20 19584.

STABILA – THE PRODUCTS 2020/2021. See all products at www.stabila.com. For all those who take pride in the quality of their work. True pros measure with STABILA.

Contents: What we stand for 6; Spirit levels 8; Special spirit levels 38; Electronic measuring tools 46; Rotation, line and point lasers 54; Laser distance measurers 88; Laser accessories 100; Optical levels 108; Folding rules 110; Tape measures 116; Levelling boards, feather edges and h-profile feather edges 128.

What we stand for

You don't become good at what you do by chance. As a professional, you work hard and your expectations are high. There are always challenging situations on construction sites that have to be overcome. And to achieve the best results you need all your skills accompanied by tools that let you reach your full working potential.

Good tools – Good work. Taking precise measurements is one of the most important tasks on construction sites, which is why it is crucial that professionals have tools that they can rely on at all times. Tools that enable them to carry out a variety of measurement tasks on the construction site precisely, efficiently and easily – time after time.

Our way to innovative tools. We are in constant dialogue with tradespeople, and this allows us to tailor and optimise our products to their specific requirements. -

NLC01 Manual

INSTRUCTION MANUAL 360° LEVELER GREEN LASER. ENGLISH (ORIGINAL INSTRUCTION). IMPORTANT: Read before using. Model No. 3241PTL02

Safety Notes

All instructions must be read and observed in order to work safely with the measuring tool. Never make warning signs on the measuring tool unrecognisable. SAVE THESE INSTRUCTIONS FOR FUTURE REFERENCE AND INCLUDE THEM WITH THE MEASURING TOOL WHEN GIVING IT TO A THIRD PARTY.

• Caution – The use of other operating or adjusting equipment or the application of other processing methods than those mentioned here can lead to dangerous radiation exposure.
• The measuring tool is provided with a warning label.
• If the text of the warning label is not in your national language, stick the provided warning label in your national language over it before operating for the first time.

Do not direct the laser beam at persons or animals and do not stare into the direct or reflected laser beam yourself, not even from a distance.

Product Description and Specifications

Intended Use: The measuring tool is intended for determining and checking horizontal and vertical lines. The measuring tool is suitable exclusively for operation in enclosed working sites.

Product Features: The numbering of the product features shown refers to the illustration of the measuring tool on the graphic page. 1 Exit opening for laser beam; 2 Automatic levelling indicator; 3 Mode button; 4 On/Off switch; 5 Latch of battery lid; 6 Battery lid; 7 Contact surface; 8 Laser warning label; 9 Tripod mount 1/4"; 10 Tripod*. * The accessories illustrated or described are not included as standard delivery.

Technical Data: 360° Laser Line NL360-2EG. Power: 6V, 4-AA Battery. -

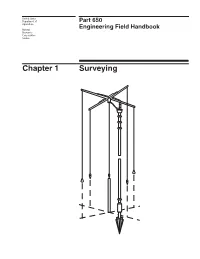

Chapter 1 Surveying, Part 650 Engineering Field Handbook

United States Department of Agriculture, Natural Resources Conservation Service. Part 650 Engineering Field Handbook, Chapter 1 Surveying. Issued October 2008.

Cover illustration: Roman groma. The early Roman surveyors used an instrument called the groma to lay out their cities and roads by right angles. The word “groma” is thought to have come from the root Greek word “gnome,” which is defined as the pointer of a sundial. The importance of turning right angles is shown in the layout of several cities in northern Italy, as well as North Africa. The land was subdivided into 2,400 Roman feet square. Each square was called a centuria. The centurias were crossed by road at right angles to each other. The roads that ran north-south were called cardines, and the roads that ran east-west were called decumani. The groma was also used to locate and place monumentation for property corners. Once the centurias were in place, maps were drawn on bronze tablets giving a physical representation of the centuria.

The U.S. Department of Agriculture (USDA) prohibits discrimination in all its programs and activities on the basis of race, color, national origin, age, disability, and where applicable, sex, marital status, familial status, parental status, religion, sexual orientation, genetic information, political beliefs, reprisal, or because all or a part of an individual’s income is derived from any public assistance program. (Not all prohibited bases apply to all programs.) Persons with disabilities who require alternative means for communication of program information (Braille, large print, audiotape, etc.) should contact USDA’s TARGET Center at (202) 720-2600 (voice and TDD). -

Diamond Pattern

page 1 Diamond Pattern. Choose the right tape for this technique and the surfaces you are going to mask! Details on page 2.

One of the secrets to a successful painting project is properly preparing the surface. That means repairing any imperfections on the wall like cracks or old nail holes and sanding the wall smooth with 3M™ abrasives. Then, clean the wall and any surfaces you plan to mask. It is easier to achieve sharp, professional paint lines when you tape on a clean, dry surface. Not sure what Scotch-Blue painter’s tape to choose? Use the chart on the next page to help you identify which tape to use on which surface. This is particularly important when trying different painting techniques. You will want a tape that can be applied to paint that is only 24-hours old.

Step-by-Step Guide for this Painting Technique

Create a classic checkerboard pattern on either a wall or a floor. By using various paint colors, the look can range from dramatic black and white to a country chic look using a rich gold and red color scheme.

Step 1: Planning: Start by drawing a diagram of the wall to scale so that you can plan your pattern. Plan the size of your diamonds (which are really squares

Step 5: Using a template that is the exact size and shape of the diamond (such as a tile, a stretched canvas or cut wood), lightly

Step 9: Press down all of the tape edges. Paint every other diamond with the darker color starting at the tape and working your way to the center. -

P R O D U C T C a T a L

PRODUCT CATALOG

CELEBRATING 60 YEARS IN BUSINESS, Johnson Level & Tool has been totally focused on serving the needs of our customers since 1947. We believe the success of our business is founded in strong relationships, understanding the needs of our customers and delivering effective results. Serving Professional tradesmen and Do-It-Yourselfers alike, Johnson Level represents the most comprehensive portfolio of measuring, marking, layout, and hand tools in the marketplace. Our product offerings have been expanded to include a complete line of Laser Levels, Industrial-quality Hand Tools, and the most comprehensive line of Wood, Aluminum I-beam, Box Beam, Torpedo Levels, and Squares in the industry. At Johnson, we pride ourselves on thoroughly understanding the needs of our customers and responding to deliver creative, effective solutions. Our entire team is committed to providing quality products at fair prices, delivered on time, for precision results. The rules continue to change in today’s increasingly competitive global marketplace. As the marketplace changes, we strive to provide the best overall customer experience through our extensive product offerings, exceptional people, and flexibility to meet and exceed our customers’ expectations in everything we do. Take a closer look at what separates Johnson from the rest.

LASERS: Rotary Lasers 2-4; Dot Lasers 5; Line Lasers 6-7; Torpedo Lasers 8; Optical Levels 9; Detectors 10; Tripods & Accessories 10-12. LEVELS: Electronic Levels & Inclinometers 13; Wood Levels 14; Box Levels 15; I-Beam Levels 16-19; Utility & Special Purpose Levels 20; Torpedo Levels 21-23. SQUARES: Framing Squares 24-25; Rafter Squares & Protractors 26-27; Combination Squares . -

To View Catalog (.PDF)

EQUIPMENT & SUPPLY CO., INC. MEASURING & MARKING CATALOG, VOLUME 7 • ISSUE 5. LASERS, LEVELS, TAPE MEASURES, MEASURING WHEELS, MEASURING PAINTS, CONES, FLAGS, & MORE. Serving the Construction Industry since 1968 • farrellequipment.com

MEASURING & MARKING PRODUCTS

ROTARY LASER KIT SL100-2: LL100N Laser Kit. A complete system is contained in a single hard-shelled, portable carrying case (the laser, receiver, clamp, tripod, and grade rod) for easy transportation, storage, and use. Automatic self-leveling ensures fast, accurate setups. Easy, one-button operation requires minimal training. Capable of handling a wide variety of elevation control applications.

ROTARY LASER KIT SLHV101GC2: HV101 Horizontal/Vertical Laser Package. The automatic, self-leveling Spectra Precision Laser HV101 Laser is a professional tool with an economical price tag. The HV101 is capable of handling a wide variety of horizontal, vertical, and plumb applications. It is rugged enough for the toughest jobsite and designed to survive a 3 foot drop onto concrete. Backed by the industry's only 3-year instant over the counter exchange. Includes HV101 laser, GR151 Grade Rod, HR320 Receiver, C59 Rod Clamp, carrying case, tripod, RC601 remote control, wall mount, ceiling target, and glasses.

ROTARY LASER SL300: LL300N Self-Leveling Laser with Detector & Bracket. Our most rugged laser level. Rugged enough to survive drops of up to 3 feet onto concrete and can be tipped on a tripod up to 5 feet and keep working. Includes HR350 detector and bracket.

ROTARY LASER SLGL422: Grade Lasers. Cost-effective, automatic self-leveling lasers that do three jobs: level, grade, and vertical alignment with plumb. Both lasers feature a 2-way, full-function remote control so you can make grade changes from anywhere on the jobsite for reduced setup time and faster operation.

ROTARY LASER KIT SL300-2: LL300N Laser Kit with HL450 Receiver, Tripod, & Grade Rod.