A Comparative Case Study on Tools for Internal Software Quality Measures


Recommended publications


The Use of Summation to Aggregate Software Metrics Hinders the Performance of Defect Prediction Models

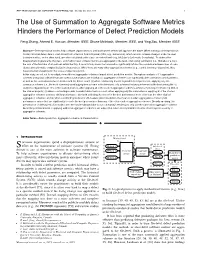

IEEE TRANSACTIONS ON SOFTWARE ENGINEERING, VOL. XX, NO. XX, XXX 2016

Feng Zhang, Ahmed E. Hassan, Member, IEEE, Shane McIntosh, Member, IEEE, and Ying Zou, Member, IEEE

Abstract: Defect prediction models help software organizations to anticipate where defects will appear in the future. When training a defect prediction model, historical defect data is often mined from a Version Control System (VCS, e.g., Subversion), which records software changes at the file-level. Software metrics, on the other hand, are often calculated at the class- or method-level (e.g., McCabe’s Cyclomatic Complexity). To address the disagreement in granularity, the class- and method-level software metrics are aggregated to file-level, often using summation (i.e., McCabe of a file is the sum of the McCabe of all methods within the file). A recent study shows that summation significantly inflates the correlation between lines of code (Sloc) and cyclomatic complexity (Cc) in Java projects. While there are many other aggregation schemes (e.g., central tendency, dispersion), they have remained unexplored in the scope of defect prediction. In this study, we set out to investigate how different aggregation schemes impact defect prediction models. Through an analysis of 11 aggregation schemes using data collected from 255 open source projects, we find that: (1) aggregation schemes can significantly alter correlations among metrics, as well as the correlations between metrics and …
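The difference between summation and other aggregation schemes can be sketched in a few lines of Python. This is an illustration only, not the authors' tooling: the function name `aggregate`, the scheme labels, the file names, and the per-method complexity values are all hypothetical, and only five schemes are shown rather than the 11 the study analyzes.

```python
import statistics


def aggregate(values, scheme):
    """Collapse method-level metric values into one file-level value."""
    schemes = {
        "sum": sum,                    # summation, the common default
        "mean": statistics.mean,       # central tendency
        "median": statistics.median,   # central tendency
        "max": max,                    # worst-case method
        "pstdev": statistics.pstdev,   # dispersion (population std. dev.)
    }
    return schemes[scheme](values)


# Hypothetical cyclomatic complexity of each method, grouped by file.
method_cc = {
    "Parser.java": [1, 1, 2, 1, 1, 2, 1, 1, 1, 1],  # many simple methods
    "Solver.java": [11],                             # one complex method
}

for path, values in method_cc.items():
    row = {s: aggregate(values, s) for s in ("sum", "mean", "max")}
    print(path, row)
```

Under summation the two hypothetical files look almost alike (12 versus 11), because the sum grows with the number of methods and therefore with file size. The mean and the maximum separate them clearly, which is the kind of effect the abstract attributes to the choice of aggregation scheme.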

Command Line Interface

Command Line Interface, Squore 21.0.2. Last updated 2021-08-19.

Table of Contents

Preface: Foreword; Licence; Warranty; Responsibilities; Contacting Vector Informatik GmbH Product Support; Getting the Latest Version of this Manual

1. Introduction

2. Installing Squore Agent: Prerequisites; Download; Upgrade; Uninstall

3. Using Squore Agent: Command Line Structure; Command Line Reference; Squore Agent Options; Project Build Parameters; Exit Codes

4. Managing Credentials: Saving Credentials; Encrypting Credentials; Migrating Old Credentials Format

5. Advanced Configuration: Defining Server Dependencies; Adding config.xml File; Using Java System Properties; Setting up HTTPS

Appendix A: Repository Connectors: ClearCase; CVS; Folder Path; Folder (use GNATHub); Git; Perforce; PTC Integrity; SVN; Synergy; TFS; Zip Upload; Using Multiple Nodes

Appendix B: Data Providers: AntiC; Automotive Coverage Import; Automotive Tag Import; Axivion; BullseyeCoverage Code Coverage Analyzer; CANoe; Cantata; CheckStyle; …

Squore Acceptance Provides a Fast and High Return on Investment by Efficiently

Squoring Technologies delivers an innovative decision-making dashboard dedicated to managing outsourced software development projects.

Acceptance represents a key phase of every software development project, whatever the process: Acquisition or Third Party Application Maintenance. Beyond functional suitability, Acceptance must consider all software product dimensions, from quality characteristics such as Reliability, Maintainability and Performance, to work products like source code, requirements and test cases.

As required by the CMMI®, Supplier Management implies an objective and impartial assessment of these components, based on quantified measurement criteria adapted to the context and objectives of each project.

Squore Acceptance provides a fast and high return on investment by efficiently: contractualizing non-functional, technical requirements; increasing confidence between customer and supplier; securing deployment and operation; defining common and shared acceptance criteria; demonstrating compliance of deliverables with quality requirements; reducing acceptance costs and efforts.

Visit www.squore-acceptance.com

Innovative features dedicated to the management of outsourced software projects. “Out-of-the-box” standardized control points, metrics and rules using best industry standards, and still customizable to fit in-house practices. Predefined software product quality models based on international standards: ISO SQuaRE 25010, ISO/IEC 9126, ECSS Quality Handbook, SQUALE. Standardized evaluation process in accordance with ISO/IEC 14598 and ISO/IEC 15939 standards. Squore covers all software product quality characteristics under a standard breakdown. Quantified acceptance criteria for every type of deliverable, from requirements to documentation, via source code and test cases. Comprehensive overview of software product compliance through Key Performance Indicators and trend analysis. Unrivaled in-depth analysis where at-risk components are immediately identified, down to the most elementary function or method.

Software Maintainability a Brief Overview & Application to the LFEV 2015

Adam I. Cornwell, Lafayette College, Department of Electrical & Computer Engineering, Easton, Pennsylvania

Abstract: This paper gives a brief introduction on what software maintainability is, the various methods which have been devised to quantify and measure software maintainability, its relevance to the ECE 492 Senior Design course, and some practical implementation guidelines.

Keywords: Software Maintainability; Halstead Metrics; McCabe’s Cyclomatic complexity; radon

I. INTRODUCTION

Software Maintainability is an important concept in the upkeep of long-term software systems. According to the IEEE, software maintainability is defined as “the ease with which a software system or component can be modified to correct faults, improve performance or other attributes, or adapt to a changed environment [1].” Software Maintainability can be measured using various devised methods, although none have been conclusively shown to work in a large variety of software systems [6].

For “lexical level” approaches which base complexity on program code, the following measurands are typical: individual and average lines of code; number of commented lines, executable statements, blank lines, tokens, and data declarations; source code readability, or the ratio of LOC to commented LOC; Halstead Metrics, including Halstead Length, Volume, and Effort; McCabe’s Cyclomatic Complexity; the control structure nesting level; and the number of knots, or the number of times the control flow crosses. The final measurand is not as useful with object oriented programming but can still be of some use.

“Psychological complexity” approaches measure difficulty and are based on understandability and the user [3]. The nature of the file and the difficulty experienced by those working with the file are what contribute to these kinds of …
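The Halstead Length, Volume, and Effort named among the lexical measurands can be computed from four counts. The sketch below uses the commonly cited Halstead definitions, which the excerpt itself does not spell out; the function name `halstead` and the example counts are hypothetical, and tools such as radon (listed in the keywords) derive the counts from source code rather than taking them as arguments.

```python
import math


def halstead(n1, n2, N1, N2):
    """Halstead measures from operator and operand counts.

    n1, n2: number of distinct operators and distinct operands
    N1, N2: total occurrences of operators and of operands
    """
    vocabulary = n1 + n2
    length = N1 + N2
    volume = length * math.log2(vocabulary)
    difficulty = (n1 / 2) * (N2 / n2)
    effort = difficulty * volume
    return {
        "vocabulary": vocabulary,
        "length": length,
        "volume": volume,
        "difficulty": difficulty,
        "effort": effort,
    }


# Hypothetical counts for a small function.
print(halstead(n1=4, n2=4, N1=10, N2=6))
```

For these counts the vocabulary is 8 and the length is 16, so the volume is 16 × log2(8) = 48, the difficulty is 3, and the effort is 144.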

A Metrics-Based Software Maintenance Effort Model

Jane Huffman Hayes, Sandip C. Patel, Liming Zhao

Computer Science Department, Lab for Advanced Networking, University of Kentucky; Computer Science Department, University of Louisville

Abstract

We derive a model for estimating adaptive software maintenance effort in person hours, the Adaptive Maintenance Effort Model (AMEffMo). A number of metrics such as lines of code changed and number of operators changed were found to be strongly correlated to maintenance effort. The regression models performed well in predicting adaptive maintenance effort as well as provide useful information for managers and maintainers.

1. Introduction

Software maintenance typically accounts for at least 50 percent of the total lifetime cost of a software system [16]. Schach et al. …

… planning a new software development project. Albrecht introduced the notion of function points (FP) to estimate effort [1]. Mukhopadhyay [19] proposes early software cost estimation based on requirements alone. Software Life Cycle Management (SLIM) [23] is based on the Norden/Rayleigh function and is suitable for large projects. Shepperd et al. [27] argued that algorithmic cost models such as COCOMO and those based on function points suggested an approach based on using analogous projects to estimate the effort for a new project. In addition to the traditional off-the-shelf models such as COCOMO, machine-learning methods have surfaced recently. In [17], Mair et al. compared machine-learning methods in building software effort prediction systems. There has also been some work toward applying fuzzy …

The Correlation Among Software Complexity Metrics with Case Study

International Journal of Advanced Computer Research (ISSN (print): 2249-7277, ISSN (online): 2277-7970), Volume-4 Number-2 Issue-15 June-2014

Yahya Tashtoush, Mohammed Al-Maolegi, Bassam Arkok

Abstract

People's demand for software quality is growing increasingly, thus different scales for the software are growing fast to handle the quality of software. The software complexity metric is one of the measurements that use some of the internal attributes or characteristics of software to know how they affect the software quality. In this paper, we cover some of the more efficient software complexity metrics such as Cyclomatic complexity, lines of code and the Halstead complexity metric. This paper presents their impacts on the software quality. It also discusses and analyzes the correlation between them. It finally reveals their relation with the number of errors using a real dataset as a case study.

… software product attributes such as functionality, quality, complexity, efficiency, reliability or maintainability. For example, a higher number of code lines will lead to greater software complexity and so on.

The complexity of software affects maintenance activities like software testability, reusability, understandability and modifiability. Software complexity is defined as “the degree to which a system or component has a design or implementation that is difficult to understand and verify” [1]. All the factors that make a program difficult to understand are responsible for complexity. So it is necessary to find measurements for software to reduce the impacts of the complexity and guarantee the quality at the same time as much as possible.
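A correlation analysis between two complexity metrics can be sketched as follows. The excerpt does not say which correlation coefficient the paper uses, so Pearson's r is an assumption here, and the function name `pearson` and the per-module values are hypothetical.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of co-deviations and the two root sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    dev_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    dev_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (dev_x * dev_y)


# Hypothetical per-module measurements.
lines_of_code = [120, 45, 300, 80, 210]
cyclomatic = [14, 5, 33, 9, 20]

print(round(pearson(lines_of_code, cyclomatic), 3))
```

A value close to 1 would indicate that, in this sample, larger modules also tend to have higher cyclomatic complexity.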


arXiv:2106.03271v1 [cs.SE] 6 Jun 2021

Fixing Vulnerabilities Potentially Hinders Maintainability

Sofia Reis · Rui Abreu · Luis Cruz

Abstract: Security is a requirement of utmost importance to produce high-quality software. However, there is still a considerable amount of vulnerabilities being discovered and fixed almost weekly. We hypothesize that developers affect the maintainability of their codebases when patching vulnerabilities. This paper evaluates the impact of patches to improve security on the maintainability of open-source software. Maintainability is measured based on the Better Code Hub’s model of 10 guidelines on a dataset, including 1300 security-related commits. Results show evidence of a trade-off between security and maintainability for 41.90% of the cases, i.e., developers may hinder software maintainability. Our analysis shows that 38.29% of patches increased software complexity and 37.87% of patches increased the percentage of LOCs per unit. The implications of our study are that changes to codebases while patching vulnerabilities need to be performed with extra care; tools for patch risk assessment should be integrated into the CI/CD pipeline; computer science curricula need to be updated; and, more secure programming languages are necessary.

Keywords: Software Security · Software Maintenance · Open-Source Software

Sofia Reis: INESC ID and IST, University of Lisbon, Lisbon, Portugal. Rui Abreu: INESC ID and FEUP, University of Porto, Porto, Portugal. Luis Cruz: Delft University of Technology, Delft, The Netherlands.

arXiv:2106.03271v2 [cs.SE] 12 Sep 2021

A Machine Learning Approach Towards Automatic Software Design Pattern Recognition Across Multiple Programming Languages

ICSEA 2020: The Fifteenth International Conference on Software Engineering Advances

Roy Oberhauser (ORCID 0000-0002-7606-8226), Computer Science Dept., Aalen University, Aalen, Germany

Abstract: As the amount of software source code increases, manual approaches for documentation or detection of software design patterns in source code become inefficient relative to the value. Furthermore, typical automatic pattern detection tools are limited to a single programming language. To address this, our Design Pattern Detection using Machine Learning (DPDML) offers a generalized and programming language agnostic approach for automated design pattern detection based on Machine Learning (ML). The focus of our evaluation was on ensuring DPDML can reasonably detect one design pattern in the structural, creational, and behavioral category for two popular programming languages (Java and C#). 60 unique Java and C# code projects were used to train the artificial neural network (ANN) and 15 projects were then …

… languages of programmers that affect naming, tribal community effects, the programmer's (lack of) knowledge of these patterns and use of (proper) naming and notation or markers, make it difficult to identify pattern usage by experts or tooling. While many code repositories are accessible to the public on the web, many more repositories are hidden within companies or other organizations and are not necessarily accessible for analysis. While determining actual pattern usage is beneficial for identifying which patterns are used where and can help avoid unintended pattern degradation and associated technical debt and quality issues, the investment necessary for manual pattern extraction, recovery, and archeology is not economically viable.

Debian Reference (Referência Debian)

Debian Reference, by Osamu Aoki. Copyright © 2013-2021 Osamu Aoki.

This Debian Reference (version 2.85) (2021-09-17 09:11:56 UTC) is intended to provide an overview of the Debian system as a post-installation user's guide. It covers many aspects of system administration through shell-command examples for non-programmers.

Written by Osamu Aoki, 17 September 2021.

Contents

1 GNU/Linux tutorials

1.1 Console basics

1.1.1 The shell prompt; 1.1.2 The shell prompt under GUI; 1.1.3 The root account; 1.1.4 The root shell prompt; 1.1.5 GUI system administration tools; 1.1.6 Virtual consoles; 1.1.7 How to leave the command prompt; 1.1.8 How to shut down the system; 1.1.9 Recovering a sane console; 1.1.10 Additional package suggestions for the newbie; 1.1.11 An extra user account; 1.1.12 Configuration …

EBOOK-Injection-Guide.Pdf

INDEX

What is Injection: Tainted Data Flow; Injection Prevention Rules

Injection Types: SQL Injection (CWE-89); LDAP Injection (CWE-90); XML Injection (CWE-91); XPath Injection (CWE-643); XML External Entity Reference (XXE) (CWE-611); Expression Language (EL) Injection (CWE-917); OS Command Injection (CWE-78)

According to the OWASP Top 10 Application Security Risks (2017) … (https://www.owasp.org/index.php/Top_10-2017_Top_10), …

Pipenightdreams Osgcal-Doc Mumudvb Mpg123-Alsa Tbb

pipenightdreams osgcal-doc mumudvb mpg123-alsa tbb-examples libgammu4-dbg gcc-4.1-doc snort-rules-default davical cutmp3 libevolution5.0-cil aspell-am python-gobject-doc openoffice.org-l10n-mn libc6-xen xserver-xorg trophy-data t38modem pioneers-console libnb-platform10-java libgtkglext1-ruby libboost-wave1.39-dev drgenius bfbtester libchromexvmcpro1 isdnutils-xtools ubuntuone-client openoffice.org2-math openoffice.org-l10n-lt lsb-cxx-ia32 kdeartwork-emoticons-kde4 wmpuzzle trafshow python-plplot lx-gdb link-monitor-applet libscm-dev liblog-agent-logger-perl libccrtp-doc libclass-throwable-perl kde-i18n-csb jack-jconv hamradio-menus coinor-libvol-doc msx-emulator bitbake nabi language-pack-gnome-zh libpaperg popularity-contest xracer-tools xfont-nexus opendrim-lmp-baseserver libvorbisfile-ruby liblinebreak-doc libgfcui-2.0-0c2a-dbg libblacs-mpi-dev dict-freedict-spa-eng blender-ogrexml aspell-da x11-apps openoffice.org-l10n-lv openoffice.org-l10n-nl pnmtopng libodbcinstq1 libhsqldb-java-doc libmono-addins-gui0.2-cil sg3-utils linux-backports-modules-alsa-2.6.31-19-generic yorick-yeti-gsl python-pymssql plasma-widget-cpuload mcpp gpsim-lcd cl-csv libhtml-clean-perl asterisk-dbg apt-dater-dbg libgnome-mag1-dev language-pack-gnome-yo python-crypto svn-autoreleasedeb sugar-terminal-activity mii-diag maria-doc libplexus-component-api-java-doc libhugs-hgl-bundled libchipcard-libgwenhywfar47-plugins libghc6-random-dev freefem3d ezmlm cakephp-scripts aspell-ar ara-byte not+sparc openoffice.org-l10n-nn linux-backports-modules-karmic-generic-pae -

Comparison of Static Analysis Tools for Quality Measurement of RPG Programs

Zoltán Tóth (1), László Vidács (2), and Rudolf Ferenc (1)

(1) Department of Software Engineering, University of Szeged, Hungary. (2) MTA-SZTE Research Group on Artificial Intelligence, Hungary.

Abstract. The RPG programming language is a popular language employed widely in IBM i mainframes nowadays. Legacy mainframe systems that evolved and survived the past decades are usually data intensive and even business critical applications. Recent, state of the art quality assurance tools are mostly focused on popular languages like Java, C++ or Python. In this work we compare two source code based quality management tools for the RPG language. The study is focused on the data obtained using static analysis, which is then aggregated to higher level quality attributes. SourceMeter is a command line tool-chain capable of measuring various source attributes like metrics and coding rule violations. SonarQube is a quality management platform with RPG language support. To facilitate the objective comparison, we used the SourceMeter for RPG plugin for SonarQube, which seamlessly integrates into the framework extending its capabilities. The evaluation is built on analysis success and depth, source code metrics, coding rules and code duplications. We found that SourceMeter is more advanced in analysis depth, product metrics and finding duplications, while their performance of coding rules and analysis success is rather balanced.