Numerical Methods for High-Dimensional Probability Density Function Equations

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Modelling for Aquifer Parameters Determination

Journal of Engineering Science, Vol. 14, 71–99, 2018 Mathematical Implications of Hydrogeological Conditions: Modelling for Aquifer Parameters Determination Sheriffat Taiwo Okusaga1, Mustapha Adewale Usman1* and Niyi Olaonipekun Adebisi2 1Department of Mathematical Sciences, Olabisi Onabanjo University, Ago-Iwoye, Nigeria 2Department of Earth Sciences, Olabisi Onabanjo University, Ago-Iwoye, Nigeria *Corresponding author: [email protected] Published online: 15 April 2018 To cite this article: Sheriffat Taiwo Okusaga, Mustapha Adewale Usman and Niyi Olaonipekun Adebisi. (2018). Mathematical implications of hydrogeological conditions: Modelling for aquifer parameters determination. Journal of Engineering Science, 14: 71–99, https://doi.org/10.21315/jes2018.14.6. To link to this article: https://doi.org/10.21315/jes2018.14.6 Abstract: Formulations of natural hydrogeological phenomena have been derived, from experimentation and observation of Darcy fundamental law. Mathematical methods are also been applied to expand on these formulations, but the management of complexities which relate to subsurface heterogeneity is yet to be developed into better models. This study employs a thorough approach to modelling flow in an aquifer (a porous medium) with phenomenological parameters such as Transmissibility and Storage Coefficient on the basis of mathematical arguments. An overview of the essential components of mathematical background and a basic working knowledge of groundwater flow is presented. Equations obtained through the modelling process -

The Cauchy-Kovalevskaya Theorem

CHAPTER 2 The Cauchy-Kovalevskaya Theorem This chapter deals with the only “general theorem” which can be extended from the theory of ODEs, the Cauchy-Kovalevskaya Theorem. This also will allow us to introduce the notion of non-characteristic data, principal symbol and the basic clas- sification of PDEs. It will also allow us to explore why analyticity is not the proper regularity for studying PDEs most of the time. Acknowledgements. Some parts of this chapter – in particular the four proofs of the Cauchy-Kovalevskaya theorem for ODEs – are strongly inspired from the nice lecture notes of Bruce Diver available on his webpage, and some other parts – in particular the organisation of the proof in the PDE case and the discussion of the counter-examples – are strongly inspired from the nice lectures notes of Tsogtgerel Gantumur available on his webpage. 1. Recalls on analyticity Let us first do some recalls on the notion of real analyticity. Definition 1.1. A function f defined on some open subset U of the real line is said to be real analytic at a point x if it is an infinitely di↵erentiable function 0 2U such that the Taylor series at the point x0 1 f (n)(x ) (1.1) T (x)= 0 (x x )n n! − 0 n=0 X converges to f(x) for x in a neighborhood of x0. A function f is real analytic on an open set of the real line if it is real analytic U at any point x0 . The set of all real analytic functions on a given open set is often denoted by2C!U( ). -

Review of the Methods of Transition from Partial to Ordinary Differential

Archives of Computational Methods in Engineering https://doi.org/10.1007/s11831-021-09550-5 REVIEW ARTICLE Review of the Methods of Transition from Partial to Ordinary Diferential Equations: From Macro‑ to Nano‑structural Dynamics J. Awrejcewicz1 · V. A. Krysko‑Jr.1 · L. A. Kalutsky2 · M. V. Zhigalov2 · V. A. Krysko2 Received: 23 October 2020 / Accepted: 10 January 2021 © The Author(s) 2021 Abstract This review/research paper deals with the reduction of nonlinear partial diferential equations governing the dynamic behavior of structural mechanical members with emphasis put on theoretical aspects of the applied methods and signal processing. Owing to the rapid development of technology, materials science and in particular micro/nano mechanical systems, there is a need not only to revise approaches to mathematical modeling of structural nonlinear vibrations, but also to choose/pro- pose novel (extended) theoretically based methods and hence, motivating development of numerical algorithms, to get the authentic, reliable, validated and accurate solutions to complex mathematical models derived (nonlinear PDEs). The review introduces the reader to traditional approaches with a broad spectrum of the Fourier-type methods, Galerkin-type methods, Kantorovich–Vlasov methods, variational methods, variational iteration methods, as well as the methods of Vaindiner and Agranovskii–Baglai–Smirnov. While some of them are well known and applied by computational and engineering-oriented community, attention is paid to important (from our point of view) but not widely known and used classical approaches. In addition, the considerations are supported by the most popular and frequently employed algorithms and direct numerical schemes based on the fnite element method (FEM) and fnite diference method (FDM) to validate results obtained. -

Method of Separation of Variables

2.2. Linearity 33 2.2 Linearity As in the study of ordinary differential equations, the concept of linearity will be very important for liS. A linear operator L lJy definition satisfies Chapter 2 (2.2.1) for any two functions '/1,1 and 112, where Cl and ('2 are arhitrary constants. a/at and a 2 /ax' are examples of linear operators since they satisfy (2.2.1): Method of Separation iJ at (el'/11 + C2'/1,) of Variables a' 2 (C1'/11 +C2U 2) ax It can be shown (see Exercise 2.2.1) that any linear combination of linear operators is a linear operator. Thus, the heat operator 2.1 Introduction a iJl In Chapter I we developed from physical principles an lmderstanding of the heat at - k a2·2 eqnation and its corresponding initial and boundary conditions. We are ready is also a linear operator. to pursue the mathematical solution of some typical problems involving partial A linear equation fora it is of the form differential equations. \Ve \-vilt use a technique called the method of separation of variables. You will have to become an expert in this method, and so we will discuss L(u) = J, (2.2.2) quite a fev.; examples. v~,fe will emphasize problem solving techniques, but \ve must also understand how not to misuse the technique. where L is a linear operator and f is known. Examples of linear partial dijjerentinl A relatively simple but typical, problem for the equation of heat conduction equations are occurs for a one - dimensional rod (0 ::; x .::; L) when all the thermal coefficients are constant. -

Hydrogeology and Groundwater Flow

Hydrogeology and Groundwater Flow Hydrogeology (hydro- meaning water, and -geology meaning the study of rocks) is the part of hydrology that deals with the distribution and movement of groundwater in the soil and rocks of the Earth's crust, (commonly in aquifers). The term geohydrology is often used interchangeably. Some make the minor distinction between a hydrologist or engineer applying themselves to geology (geohydrology), and a geologist applying themselves to hydrology (hydrogeology). Hydrogeology (like most earth sciences) is an interdisciplinary subject; it can be difficult to account fully for the chemical, physical, biological and even legal interactions between soil, water, nature and society. Although the basic principles of hydrogeology are very intuitive (e.g., water flows "downhill"), the study of their interaction can be quite complex. Taking into account the interplay of the different facets of a multi-component system often requires knowledge in several diverse fields at both the experimental and theoretical levels. This being said, the following is a more traditional (reductionist viewpoint) introduction to the methods and nomenclature of saturated subsurface hydrology, or simply hydrogeology. © 2014 All Star Training, Inc. 1 Hydrogeology in Relation to Other Fields Hydrogeology, as stated above, is a branch of the earth sciences dealing with the flow of water through aquifers and other shallow porous media (typically less than 450 m or 1,500 ft below the land surface.) The very shallow flow of water in the subsurface (the upper 3 m or 10 ft) is pertinent to the fields of soil science, agriculture and civil engineering, as well as to hydrogeology. -

Separation of Variables 1.1 Separation for the Laplace Problem

Physics 342 Lecture 1 Separation of Variables Lecture 1 Physics 342 Quantum Mechanics I Monday, January 28th, 2008 There are three basic mathematical tools we need, and then we can begin working on the physical implications of Schr¨odinger'sequation, which will take up the rest of the semester. So we start with a review of: Linear Algebra, Separation of Variables (SOV), and Probability. There will be no particular completeness to our discussions { at this stage, I want to emphasize those aspects of each of these that will prove useful. Pitfalls and caveats will be addressed as we encounter them in actual problems (where they won't show up, so won't be addressed). Try as one might, it is difficult to relate these three areas, so we will take each one in term, starting from the most familiar, and working to the least (that's a matter of taste, so apologies in advance for the wrong ordering { in anticipation of our section on probability: How many different orderings of these three subjects are there? On average, then, how many people disagree with the current one?). We will begin with separation of variables, a simple technique that you have used recently { we will review the basic electrostatic interest in these solu- tions, and do a few examples of SOV applied to PDE's other than Laplace's equation (r2 V = 0). 1.1 Separation for the Laplace Problem Separation of variables refers to a class of techniques for probing solutions to partial differential equations (PDEs) by turning them into ordinary differen- tial equations (ODEs). -

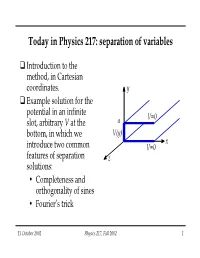

Today in Physics 217: Separation of Variables

Today in Physics 217: separation of variables Introduction to the method, in Cartesian coordinates. y Example solution for the potential in an infinite V=0 slot, arbitrary V at the a bottom, in which we V(y) x introduce two common V=0 features of separation z solutions: • Completeness and orthogonality of sines • Fourier’s trick 11 October 2002 Physics 217, Fall 2002 1 Introduction to separation of variables If the method of images can’t be used on a problem you must solve that involves conductors, the next thing to try is direct solution of the Laplace equation, subject to boundary conditions on V and/or ∂∂Vn. Separation of variables is the easiest direct solution technique. It works best with conductors for which the surfaces are well behaved (planes, spheres, cylinders, etc.). Here’s how it works, in Cartesian coordinates, in which the Laplace equation is ∂∂∂222VVV ∇=2V + + =0 ∂∂∂xyz222 which can’t be integrated directly like the 1-D case. 11 October 2002 Physics 217, Fall 2002 2 Introduction to separation of variables (continued) Consider solutions of the form Vxyz(),, = XxYyZz() () () Then, the Laplace equation is dX222 dY dZ YZ++= XZ XY 0 dx222 dy dz or, dividing through by XYZ, 111dX222 dY dZ ++=0 XYZdx222 dy dz i.e. fx()++= gy() hz() 0 11 October 2002 Physics 217, Fall 2002 3 Introduction to separation of variables (continued) The only way for this to be true for all x,y,z is for each term to be a constant, and for the three constants to add up to zero: 111dX222 dY dZ ++=+−+=AB() AB 0 XYZdx222 dy dz So this is a way to trade one partial differential equation for three ordinary differential equations, which can often be solved much more easily: dX222 dY dZ −=AX00 −= BY ++=() A B Z 0 dx222 dy dz 11 October 2002 Physics 217, Fall 2002 4 Introduction to separation of variables (continued) Nothing guarantees that V will always factor into functions of x, y, and z alone. -

I THERMAL CONDUCTION EQUATIONS for a MEDIUM WITH

THERMAL CONDUCTION EQUATIONS FOR A MEDIUM WITH AN INCLUSION USING GALERKIN METHOD by THIAGARAJAN SIVALINGAM Presented to the Faculty of the Honors College of The University of Texas at Arlington in Partial Fulfillment of the Requirements for the Degree of MASTER OF SCIENCE IN MECHANICAL ENGINEERING THE UNIVERSITY OF TEXAS AT ARLINGTON December 2009 i Copyright © by Thiagarajan Sivalingam 2009 All Rights Reserved ii This thesis is dedicated to my parents and friends for their continuous support and encouragement iii ACKNOWLEDGMENTS To begin with I would like to extend my gratitude to my advisor Prof. Seiichi Nomura for giving me an opportunity to work in this interesting topic. His strong source of motivation, guidance and belief kept me moving on with the projects. I would also like to thank my committee members Professor Bo Ping Wang and Professor Haji-Sheikh for their valuable time. Finally I thank my parents, all my friends and roommates for their support and encouragement throughout my Masters program. October 16, 2009 iv ABSTRACT THERMAL CONDUCTION EQUATIONS FOR A MEDIUM WITH AN INCLUSION USING GALERKIN METHOD Thiagarajan Sivalingam, MS The University of Texas at Arlington, 2009 Supervising Professor: Seiichi Nomura The Galerkin method is used to semi-analytically solve the heat conduction equation in non-homogeneous materials. The problem under deliberation is a square plate with a circular inclusion having different thermal conductivities. A generalized procedure that involves the Galerkin method and formulation of the final solution in terms of the procured base functions is adopted. The Galerkin method basically involves expressing the given boundary value problem in terms of a standard mathematical relation, generating a set of continuous base functions, formulating the matrix equation, and determining the solution. -

C.2 Separation of Variables Use Separation of Variables to Solve Differential Equations

APPENDIX C Differential Equations A27 C.2 Separation of Variables Use separation of variables to solve differential equations. • Use differential equations to model and solve real-life problems. Separation of Variables The simplest type of differential equation is one of the form yЈ ϭ f ͑x͒. You know that this type of equation can be solved by integration to obtain y ϭ ͵ f ͑x͒ dx. In this section, you will learn how to use integration to solve another important family of differential equations—those in which the variables can be separated. TECHNOLOGY This technique is called separation of variables. You can use a symbolic integration utility to solve a separable variables differential Separation of Variables equation. Use a symbolic integra- If f and g are continuous functions, then the differential equation tion utility to solve the differential equation dy ϭ f ͑x͒g͑y͒ x dx yЈ ϭ . y2 ϩ 1 has a general solution of 1 ͵ dy ϭ ͵ f ͑x͒ dx ϩ C. g͑y͒ Essentially, the technique of separation of variables is just what its name implies. For a differential equation involving x and y, you separate the x variables to one side and the y variables to the other. After separating variables, integrate each side to obtain the general solution. Here is an example. EXAMPLE 1 Solving a Differential Equation dy x Find the general solution of ϭ . dx y2 ϩ 1 Solution Begin by separating variables, then integrate each side. dy x ϭ Differential equation dx y2 ϩ 1 ( y2 ϩ 1͒ dy ϭ x dx Separate variables. ͵͑y2 ϩ 1͒ dy ϭ ͵x dx Integrate each side. -

Green's Function for the Heat Equation

cs: O ani pe ch n e A c M c Hassan, Fluid Mech Open Acc 2017, 4:2 d e i s u s l F Fluid Mechanics: Open Access DOI: 10.4172/2476-2296.1000152 ISSN: 2476-2296 Perspective Open Access Green’s Function for the Heat Equation Abdelgabar Adam Hassan* Department of Mathematics, College of Science and Arts at Tabrjal, AlJouf University, Kingdom of Saudi Arabia Abstract The solution of problem of non-homogeneous partial differential equations was discussed using the joined Fourier- Laplace transform methods in finding the Green’s function of heat equation in different situations. Keywords: Heat equation; Green’s function; Sturm-Liouville So problem; Electrical engineering; Quantum mechanics dy d22 y dp() x dy d y dy d () =+=+() () ()() px px 22px pxbx dx dx dx dx dx dx dx Introduction Thus eqn (3) can be written as: The Green's function is a powerful tool of mathematics method dy is used in solving some linear non-homogenous PDEs, ODEs. So d px() ++ qxy()()λ rxy =0 (4) Green’s functions are derived by the specially development method of dx dx separation of variables, which uses the properties of Dirac’s function. Where q(x)=p(x)c(x) and r(x)=p(x)d(x). This method was considerable more efficient than the others well known classical methods. Equation in form (4) is known as Sturm-Liouville equation. Satisfy the boundary conditions [1-5]. The series solution of differential equation yields an infinite series which often converges slowly. Thus it is difficult to obtain an insight Regular Sturm-Liouville problem: In case p(a)≠0 and p(b)≠0, into over-all behavior of the solution. -

An Introduction to the Finite Element Method (FEM) for Differential Equations

An Introduction to the Finite Element Method (FEM) for Differential Equations Mohammad Asadzadeh January 20, 2010 Contents 0 Introduction 5 0.1 Preliminaries ........................... 5 0.2 Trinities .............................. 6 1 Polynomial approximation in 1d 15 1.1 Overture.............................. 15 1.2 Exercises.............................. 37 2 Polynomial Interpolation in 1d 41 2.1 Preliminaries ........................... 41 2.2 Lagrangeinterpolation . 48 2.3 Numerical integration, Quadrature rule . 52 2.3.1 Gaussquadraturerule . 58 2.4 Exercises.............................. 62 3 Linear System of Equations 65 3.1 Directmethods .......................... 65 3.2 Iterativemethod ......................... 72 3.3 Exercises.............................. 81 4 Two-points BVPs 83 4.1 ADirichletproblem ....................... 83 4.2 Minimizationproblem . 86 4.3 A mixed Boundary Value Problem . 87 4.4 The finite element method. (FEM) . 90 4.5 Errorestimatesintheenergynorm . 91 4.6 Exercises.............................. 97 3 4 CONTENTS 5 Scalar Initial Value Problem 103 5.1 Fundamental solution and stability . 103 5.2 Galerkin finite element methods (FEM) for IVP . 105 5.3 AnaposteriorierrorestimateforcG(1) . 108 5.4 AdG(0)aposteriorierrorestimate . 114 5.5 Apriorierroranalysis . .116 5.6 The parabolic case (a(t) 0) ..................120 ≥ 5.6.1 Shortsummaryoferrorestimates . 124 5.7 Exercises..............................127 6 The heat equation in 1d 129 6.1 Stabilityestimates . .130 6.2 FEMfortheheatequation. .135 6.3 Erroranalysis ...........................141 6.4 Exercises..............................146 7 The wave equation in 1d 149 7.1 FEMforthewaveequation . .150 7.2 Exercises..............................154 8 Piecewise polynomials in several dimensions 157 8.1 Introduction............................157 8.2 Piecewise linear approximation in 2 D . 160 8.2.1 Basis functions for the piecewise linears in 2 D . 160 8.2.2 Error estimates for piecewise linear interpolation . -

Solution of Exact Equations Contents

Solution of Exact Equations Contents • First order ordinary differential equation • Differential of a function of two variables • Short Notes on Partial Derivatives • Exact Equations • Criterion for Exactness • Examples • Method of Solution • Worked Example • Practice Problems • Solutions to practice problems First Order Ordinary differential equations • A differential equation having a first derivative as the highest derivative is a first order differential equation. • If the derivative is a simple derivative, as opposed to a partial derivative, then the equation is referred to as ordinary. Differential of a function of two variables If given a function 푧 = 푓(푥, 푦) Then its differential is defined as the following: 휕푓 휕푓 푑푧 = 푑푥 + 푑푦 휕푥 휕푦 The symbol 휕 represents the partial derivative of the function. This tells us that if we know the differential of a function, we can get back the original function under certain conditions. In a special case when 푓 푥, 푦 = 푐 then we have: 휕푓 휕푓 푑푥 + 푑푦 = 0 휕푥 휕푦 Short note on Partial derivatives For a function of two variables, a partial derivative with respect to a particular variable means differentiating the function with that variable while assuming the other variable to be fixed. Ex: if 푓 = 푥 + 푦 sin 푥 + 푦3푙푛푥 휕푓 1 then = 1 + 푦 cos 푥 + 푦3 휕푥 푥 휕푓 and = sin 푥 + 3푦2푙푛푥 휕푦 Exact Equation If given a differential equation of the form 푀 푥, 푦 푑푥 + 푁 푥, 푦 푑푦 = 0 Where M(x,y) and N(x,y) are functions of x and y, it is possible to solve the equation by separation of variables.