Haptic-Only Lead and Follow Dancing


Recommended publications


Introduction to Latin Dance

OFFICE OF CURRICULUM, INSTRUCTION & PROFESSIONAL DEVELOPMENT HIGH SCHOOL COURSE OUTLINE Course Code 3722 Course Title Introduction to Latin Dance Department Physical Education Short Title Intro Latin Dance Course Length 2 Semesters Grade 11-12 Credits/Semester 5 Required for Graduation No Meets H.S. Grad Requirement Elective Credit Yes Meets UC “a-g” No Meets NCAA Requirement No Requirement Prerequisites 2 years physical education COURSE DESCRIPTION: This course is designed to teach students the basic elements of Latin Dance. Students will analyze dance’s role in improving and maintaining one’s health related fitness and then incorporate dance activities into their personal fitness program/plan. Students will learn basic steps as well as complex combinations in Merengue, Salsa, Bachata, and the Cha Cha. For each dance, the students will learn the historical and geographical roots, the music and the instruments associated with each one. This course will help students learn the skills of dance while improving their technique, poise, self-confidence and creative ability as well as deepening their understanding of and appreciation for the rich and colorful heritage that each dance represents. As a course involving couples’ dances, it will allow students to develop an understanding of the social etiquette involved in couples dancing. GOALS: Students need to: • Demonstrate knowledge and skills related to performance of the following dances: Merengue, Salsa, Bachata and Cha Cha. • Assess and maintain a level of physical fitness to improve health and performance. • Demonstrate knowledge of physical fitness concepts, principles, and strategies to improve health and performance in dance. • Demonstrate and utilize knowledge of psychological and sociological concepts, principles, and strategies as applied to learning and performance of Latin dance. -

Teaching English Through Body Movement a Pa

AMERICAN UNIVERSITY OF ARMENIA College of Humanities and Social Sciences Dancing – Teaching English through Body Movement A paper submitted in partial fulfillment of the requirements for the degree Master of Arts in Teaching English as a Foreign Language By Ninel Gasparyan Adviser: Raichle Farrelly Reader: Rubina Gasparyan Yerevan, Armenia May 7, 2014

We hereby approve that this design project By Ninel Gasparyan Entitled Dancing – Teaching English through Body Movement Be accepted in partial fulfillment for the requirements of the degree Master of Arts in Teaching English as a Foreign Language Committee on the MA Design Project: Raichle Farrelly; Rubina Gasparyan; Dr. Irshat Madyarov, MA TEFL Program Chair Yerevan, Armenia May 7, 2014

TABLE OF CONTENTS Abstract. Chapter One: Introduction. Chapter Two: Literature Review. 2.1. Content-Based Instruction Models. 2.1.1. The use of Dance in an EFL Classroom. Chapter Three: Proposed Plan and Deliverables. 3.1. Course Description. 3.1.1. Needs and Environment Analysis. 3.1.2. Goals and Objectives. 3.1.3. Assessment Plan. 3.1.4. Learning Plan. 3.1.5. Deliverables. Chapter Four: Reflection and Recommendations. 4.1. Reflection

Tango Fundamentals (Year-1) - Course Syllabus

Tango Fundamentals (Year-1) - Course Syllabus The course aims to provide a solid foundation in the key elements of Argentine Tango in an enjoyable, sociable environment. The first ten weeks concentrate on how to lead and follow the fundamental steps of Tango so that you can quickly get dancing. Once you have completed them, you will be able to move on to the full year-1 syllabus. During the full course you will learn • how to lead or follow this improvised dance (there are no long set sequences) • how to execute turns, pivots, ochos and cross steps • some fun, easy ‘foot and leg play’ – paradas (blocks) and barridas (sweeps) • to develop musicality in the dance, and how to adjust the elements of Tango to suit the different musical styles found on a typical Buenos Aires dance floor. We will focus on developing skills so that you can enjoy dancing at a Tango social event (called a milonga) and be able to improvise the dance with any partner – wherein lies the pleasure of dancing Tango. There will be time to practice what you have learnt both in class and at optional prácticas (practice sessions) on Sunday evenings at Martlesham Leisure. The first 10 week term What is Argentine Tango? – the nature of the dance, the music, and the improvisation which makes it so different from other dances. The Connection: how to ‘connect’ with your partner and its importance for leading and following. Walking: Walking in ‘two-track’ with your partner in an open embrace (‘parallel system’) Leading and following side-steps Dancing the pauses in the music More

International Dance Conservatory – Ballroom Program

INTERNATIONAL DANCE CONSERVATORY – BALLROOM PROGRAM

Year 1. Fall: Latin Ballroom School Figures (Bronze level); Standard Ballroom School Figures (Bronze level). Spring: Latin Ballroom School Figures (Silver & Gold levels); Standard Ballroom School Figures (Silver & Gold levels).
Year 2. Fall: Latin Technique 1; Latin Ballroom 1; Standard Technique 1; Standard Choreography 1. Spring: Latin Technique 2; Latin Ballroom 2; Standard Technique 2; Standard Choreography 2.
Year 3. Fall: American Smooth 1; Student Choreography 1; Advanced Ballroom Technique 1. Spring: American Smooth 2; Student Choreography 2; Advanced Ballroom Technique 2.
Year 4. Fall: Business of Ballroom; Advanced Choreography 1. Spring: Intro to Ballroom Instruction; Advanced Choreography 2.

Advanced Ballroom Technique 1 & 2 This is an advanced class that focuses on the body mechanics, timing, footwork, partnering, style, expression, and emotion of many Ballroom & Latin dances. Students will continue to develop a deeper understanding of the techniques and stylings of each dance. Students will apply this training in the demonstration of their Latin, Ballroom, and Smooth competition routines.

Advanced Choreography 1 & 2 This is an advanced class that focuses on learning open choreography in many Ballroom and Latin Dances. Students will experience this creative process first hand and apply their technique to this choreography. Students will perform these open routines with attention to technical proficiency and embodying the character of each dance.

American Smooth 1 & 2 This is an advanced class that focuses on learning open choreography in all four American Smooth Ballroom Dances - Waltz, Tango, Foxtrot, & Viennese Waltz. Students will be prepared to compete in all four dances at the Open Amateur Level.

28Th November – 1St December 2018 Dance Course in Dresden - Ballroom Dances of the 19Th Century

update 20.01.2018 28th November – 1st December 2018 Dance Course in Dresden - Ballroom dances of the 19th century This year’s focus will be on couple dances in quadrilles, choreographed dances, lead and follow in free dances Dances to be taught Quadrille „Sleigh Bell Polka“ (Philadelphia, 1866) This polka-quadrille suits the winter season well. It consists of 5 figures with beautiful quadrille figures in polka tempo and the polka waltz. It was published by Professor C. Brooks in Philadelphia in 1866 and became one of the favorite dances at balls in North America and Europe. Quadrille „Strauss Quadrille“ Quadrille with polka, varsovienne, schottisch, mazurka, galop, waltz The favourite dances of the 19th century have been incorporated in this quadrille, which contains 6 figures. Its enjoyment is further enhanced by the melodies composed by the members of the Strauss family. The figures are: La Bohémienne (dance: polka, music: „Fashion Polka“ by Josef Strauss), La Varsovienne (dance: varsovienne, music: „La Varsoviana“ by Johann Strauss the father), La Rhénane (dance: schottisch /Rheinländer polka, music: „Frühlingsluft Rheinländer“ by Josef Strauss), La Parisienne (dance: mazurka waltz and redowa, music: „Frauenherz“ by Josef Strauss), La Britannique (dance: galop, music: „Wien über alles“ by Eduard Strauss) and La Viennoise (dance: waltz, music: „Viennese Blood“ by Johann Strauss the son). They will be taught in accordance with the research into the original sources on step technique and figure set-up. Valse Russe „La Czarine“ (Paris 1857/ New York 1860) La Czarine is a Russian waltz, choreographed by Société Académique des Professeurs de Danse in Paris in 1857. Originating from the French ballroom, the dance records show several variations of this Russian waltz between 1860 and 1883.

Group Class Descriptions

Group Class Descriptions Social Foundation – Mr. Daniel Skyler A great way to refocus on your lead and follow and brush up on a few patterns you may have forgotten or are just being introduced to! Merenguito - Mr. Sergio Sanchez Learn exciting approaches and techniques along with fun new material to implement into your Merengue. And get an awesome cardio workout while you do it!! Other dances featured include: Salsa and Bachata. Salsa Shine Patterns – Mr. Brandon Romero Salsa Shine patterns are designed to be danced by yourself as a presentation of individual dance skill outside of the partner dance interaction. The dancer focuses on themselves, using a combination of turns, syncopations, and body rhythms. Rhythm Techniques – Ms. Rachel Sayotovich Come and explore different techniques we use in dancing the American Rhythm style - including turns, rotations, Cuban motion, body action, footwork, and more! All levels of dancing are welcome! Latin – Mr. Oleksandr Kozhukhar Come and experience an introduction to the basic fundamentals and characteristics of the five Latin dances - Cha Cha, Samba, Rumba, Paso Doble, and Jive. We will cover basic characteristics of the dances, footwork, body actions, timing, and more! No Latin experience required. Line Dance Party Prep – Mr. Alexander Zarek This group class will go over some of the line dances that will appear at our Thursday night virtual parties so that you will have a head start on the material! The line dances and music selections will change from week to week. Social Club Dances for All Levels - Mr. Dana James You know those moves - the ones that teachers seem to lead at the parties but don’t always (or never) get to in lessons? That is because there is no box to check off once it has been taught.

Tango Argentino Faqs

Survival Tango FAQs What is the difference between Argentine tango and ballroom tango? - Argentine tango refers to the style of social dance that is done in the tango clubs of Buenos Aires and now around the world. Fundamental to Argentine social tango is the improvisational basis of the dance. The dance is intended to express the music, so a desire to hear and respond rhythmically is essential. Rather than memorizing a prescribed group of steps, as is done in ballroom, the students are encouraged to learn how to lead and follow with each step being an invitation to further exploration. Class rotation of partners and an emphasis upon learning the dance as an individual rather than a couple allow students to master the language of tango as a universal communication so when traveling, they can easily dance with strangers in Moscow or San Francisco. The connection between the two bodies is famously described by Juan Carlos Copes, “One heart and four legs”. http://tangonova.com/odfaq/index.php?cat=1 - Argentine Tango is THE TANGO danced at milongas around the world. American and International Tango (or together, Ballroom Tango) are competition sports that people study and practice for ballroom dance competitions. The movements are loosely based on Argentine Tango (some say parodies of), but are very stiff and uncomfortable, and you will rarely find people dancing these other Tangos socially.

Tango Argentino Vocabulary! abrazo: embrace, hug; adorno: adornment; amague: a feint; arrastre: drag; barrida: sweep; boleo: a rebounding leg action; cabeseo: nod; cadena: chain; calesita: carousel; caminada: walk; corrida: a run; cruzada, cruze: crossed position; enrosque: twisting movement

CDSS Spring 2018 News

CDSS NEWS, FALL 2018. COUNTRY DANCE AND SONG SOCIETY, founded in 1915. BALANCE AND SING Store Update ~ Fall 2018 Now back from a wonderful summer of dancing and singing at our camps, we’re excited to offer some new music and dance books for your reading and listening pleasure. Relax into the fall with some new favorites from great musicians!

Once Upon a Waltz by Ladies At Play 13 original waltzes and 3 hambos by the Oklahoma-based trio Ladies at Play (Miranda Arana, Kathy Dagg, Shanda McDonald). The trio has been playing for English and Contra dances since 2008 and most of the tunes are written for or inspired by the people they’ve met playing for dances in the Oklahoma area. A companion CD is available with all the tunes (except The Giddy Goat). Book - $10.00; CD - $15.00

More, Please! by Eloise & Co. The latest offering from two of the contra and folk scene’s brightest stars, Rachel Bell (Accordion) and Becky Tracy (Fiddle) who form Eloise & Co. with guitarists Andrew VanNorstrand and Owen Morrison. The debut offering by this band features wonderful tracks from French tunes to traditional jigs to songs composed by both musicians as well as by Keith Murphy and others. CD - $15.00

Chantons, dansons: Songs, Games and Dances for Learners of French w/CD by Marian Rose This is a collection of musical games, songs and dances that are valuable tools in achieving fluency in oral French.

Swimming Down the Stars — Tunebook by Jonathan Jensen, CD by Ladies At Play 16 Tunes by the inspired pianist Jonathan Jensen whose

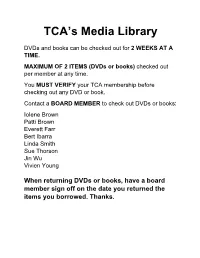

TCA's Media Library

TCA’s Media Library DVDs and books can be checked out for 2 WEEKS AT A TIME. MAXIMUM OF 2 ITEMS (DVDs or books) checked out per member at any time. You MUST VERIFY your TCA membership before checking out any DVD or book. Contact a BOARD MEMBER to check out DVDs or books: Iolene Brown, Patti Brown, Everett Farr, Bert Ibarra, Linda Smith, Sue Thorson, Jin Wu, Vivien Young. When returning DVDs or books, have a board member sign off on the date you returned the items you borrowed. Thanks. Tango Club of Albuquerque Video Rentals – Complete List V1 - El Tango Argentino - Vol 1 Eduardo and Gloria A famous dancing and teaching couple have developed a series of tapes designed to take a dancer from neophyte to accomplished intermediate in salon-style Tango. (This is not the club-style Tango Eduardo sometimes teaches in workshops.) The first tape covers the basics including the proper embrace and elementary steps. The video quality is high and so is the instruction. The voice-over is a bit dramatic and sometimes slightly out of sync with the steps. The tapes are somewhat expensive for the amount of material covered, but this is a good series for anyone just starting in Tango. V2 - El Tango Argentino - Vol 2 Eduardo and Gloria A famous dancing and teaching couple have developed a series of tapes designed to take a dancer from neophyte to accomplished intermediate in salon-style Tango. (This is not the club-style Tango Eduardo sometimes teaches in workshops.) The second and third videos cover additional steps including complex figures and embellishments.

Adjust to Your Partner—Men, You Must Lead and Read

Adjust To Your Partner—Men, You Must Lead and Read by Harold & Meredith Sears A big part of dancing is adjusting to your partner as you go. This idea will not be news to the women, who are regularly reminded that they are expected to “follow” their man. These two quotes come from dance etiquette rules from the 1800s, referring to behavior both on and off the floor: A lady should recollect that it is the gentleman’s part to lead her, and hers to follow his directions. In ascending stairs with ladies, gentlemen should go beside or before them. It is a gentleman’s province to lead, and the lady’s to follow. But a dance is not a one-way series of directives by the man to the woman; it is a two- way conversation between them. The "gentleman's province" really is to lead and to read his partner's movements and adjust. For instance, the man is in charge of dance position—closed, semi, banjo—so he needs to adjust his steps as he dances to stay in good position. He mustn't slavishly dance the textbook steps, but should continuously read the woman's following movements and then adjust his steps to stay together. She too will make every effort to stay up to him at the hips and to step in response to his lead. She will follow, but he "follows" too. Let's think about dancing a Reverse Turn in Foxtrot. We might be in closed position facing line of dance. The man knows what to do. -

Got Rhythm? Haptic-Only Lead and Follow Dancing

Got rhythm? Haptic-only lead and follow dancing Sommer Gentry1, Steven Wall2, Ian Oakley3, and Roderick Murray-Smith2,4 1 Massachusetts Institute of Technology, Cambridge, MA, USA ([email protected]) 2 Department of Computing Science, University of Glasgow, Glasgow G12 8QQ, Scotland, UK 3 Palpable Machines Group, Media Lab Europe, Sugar House Lane, Bellevue, Dublin 8, Ireland 4 Hamilton Institute, National University of Ireland, Maynooth, Co. Kildare, Ireland

Abstract. This paper describes the design and implementation of a lead and follow dance to be performed with a human follower and PHANToM leader, or executed by two humans via two PHANToMs, reciprocally linked. In some contexts, such as teaching calligraphic character writing, haptic-only communication of information has demonstrated limited effectiveness. This project was inspired by the observation that experienced partner dance followers can easily decipher haptic-only cues, perhaps because of the structure of dancing, wherein a set of possible moves is known and changes between moves happen at times signalled by the music. This work is a demonstration of dancing, meaning coordination of a randomly sequenced set of known movements to a musical soundtrack, with a PHANToM as a leader, and of a human leader and follower dancing via a dual PHANToM teleoperation system.

1 Background In numerous studies, human haptic and proprioceptive senses have been shown to lack the precision of visual perception. For example, just noticeable differences (JNDs) for force perception are larger than those for visual perception [1]. Previous work on haptic interfaces between humans found evidence that haptic-only cooperation was inferior to visual-only cooperation and found no evidence that haptic-plus-visual cooperation was superior to visual-only cooperation when the item to be communicated was an unknown Japanese calligraphic character [2].
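The abstract's working definition of dancing, a randomly sequenced set of known movements whose changes happen at times signalled by the music, can be illustrated with a minimal Python sketch. The move names, their lengths in beats, the tempo, and the function `schedule_moves` are all hypothetical choices made for illustration; none of them comes from the paper, and this is not the authors' implementation.

```python
import random

# Hypothetical move vocabulary: name -> length in beats (an assumption,
# not taken from the paper).
MOVES = {"basic": 4, "turn": 4, "side_step": 2}


def schedule_moves(moves, total_beats, bpm, seed=None):
    """Randomly sequence known moves so that each one starts on a beat.

    Returns a list of (start_beat, start_time_seconds, move_name) tuples.
    """
    rng = random.Random(seed)
    seconds_per_beat = 60.0 / bpm
    names = sorted(moves)  # fixed order so a given seed is reproducible
    schedule = []
    beat = 0
    while beat < total_beats:
        # Only consider moves that still fit in the remaining beats.
        fitting = [n for n in names if moves[n] <= total_beats - beat]
        if not fitting:
            break
        name = rng.choice(fitting)
        schedule.append((beat, beat * seconds_per_beat, name))
        # The next move can only begin once this one has finished,
        # which is always on a beat boundary.
        beat += moves[name]
    return schedule


if __name__ == "__main__":
    for start_beat, start_time, name in schedule_moves(MOVES, 16, 120, seed=1):
        print(f"beat {start_beat:2d} (t = {start_time:4.1f} s): {name}")
```

Because every move lasts a whole number of beats, each transition falls on a beat, so a follower who knows the vocabulary and hears the music knows when a change can occur, though not which move comes next.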

Group Class Descriptions

Group Class Descriptions Latin – Mr. Oleksandr Kozhukhar Come and experience an introduction to the basic fundamentals and characteristics of the five Latin dances – Cha Cha, Samba, Rumba, Paso Doble, and Jive. We will cover basic characteristics of the dances, footwork, body actions, timing, and more! No Latin experience required. Tiny Dancers – Mr. Slash Sharan In a tiny space, learn a unique combination and dance your hearts out! Dance Smart – Mr. Slash Sharan This class presents an academic and mental approach to Ballroom Dancing. Learn little tricks and tips to help you become a better dancer…quicker! Easy Conditioning Through Ballet – Ms. Rebecca Vincitore This is the class that kicks you into shape with easy body movements. No gym? No problem! Please have a mat for moments on the floor and enough space to march in place! This is going to awaken your entire body by the end of this practice and supplement the process to get you back on track with your dancing! Body Coordination – Mr. Brandon Romero Learn how to produce the same amazing grace, power, and precision of Professional Dancers through simple exercises. By exploring the use of your bones and joints, develop the relationship between different parts of your body to grasp fundamental dance principles. Ballet Barre – Ms. Rachel Sayotovich Learn the fundamentals of ballet barre work as it applies to our ballroom dancing with a partner! We will explore different ballet moves and exercises to isolate and control certain muscles groups to create aesthetically beautiful lines. No ballet experience required. Elements of Dance – Mr. Daniel Skyler In this class Mr.