Understanding the Perception of Road Segmentation and Traffic Light Detection Using Machine Learning Techniques

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Belgaum District Lists

Group "C" Societies having less than Rs.10 crores of working capital / turnover, Belgaum District lists. Sl No Society Name Mobile Number Email ID District Taluk Society Address 1 Abbihal Vyavasaya Seva - - Belgaum ATHANI - Sahakari Sangh Ltd., Abbihal 2 Abhinandan Mainariti Vividha - - Belgaum ATHANI - Uddeshagala S.S.Ltd., Kagawad 3 Abhinav Urban Co-Op Credit - - Belgaum ATHANI - Society Radderahatti 4 Acharya Kuntu Sagara Vividha - - Belgaum ATHANI - Uddeshagala S.S.Ltd., Ainapur 5 Adarsha Co-Op Credit Society - - Belgaum ATHANI - Ltd., Athani 6 Addahalli Vyavasaya Seva - - Belgaum ATHANI - Sahakari Sangh Ltd., Addahalli 7 Adishakti Co-Op Credit Society - - Belgaum ATHANI - Ltd., Athani 8 Adishati Renukadevi Vividha - - Belgaum ATHANI - Uddeshagala S.S.Ltd., Athani 9 Aigali Vividha Uddeshagala - - Belgaum ATHANI - S.S.Ltd., Aigali 10 Ainapur B.C. Tenenat Farming - - Belgaum ATHANI - Co-Op Society Ltd., Athani 11 Ainapur Cattele Breeding Co- - - Belgaum ATHANI - Op Society Ltd., Ainapur 12 Ainapur Co-Op Credit Society - - Belgaum ATHANI - Ltd., Ainapur 13 Ainapur Halu Utpadakari - - Belgaum ATHANI - S.S.Ltd., Ainapur 14 Ainapur K.R.E.S. Navakarar - - Belgaum ATHANI - Pattin Sahakar Sangh Ainapur 15 Ainapur Vividha Uddeshagal - - Belgaum ATHANI - Sahakar Sangha Ltd., Ainapur 16 Ajayachetan Vividha - - Belgaum ATHANI - Uddeshagala S.S.Ltd., Athani 17 Akkamahadevi Vividha - - Belgaum ATHANI - Uddeshagala S.S.Ltd., Halalli 18 Akkamahadevi WOMEN Co-Op - - Belgaum ATHANI - Credit Society Ltd., Athani 19 Akkamamhadevi Mahila Pattin - - Belgaum -

Prl. District and Session Judge, Belagavi. SRI. BASAVARAJ I ADDL

Prl. District and Session Judge, Belagavi. SRI. BASAVARAJ I ADDL. DISTRICT AND SESSIONS JUDGE BELAGAVI Cause List Date: 18-09-2020 Sr. No. Case Number Timing/Next Date Party Name Advocate 1 M.A. 8/2020 Moulasab Maktumsab Sangolli A.D. (HEARING) Age 70Yrs R/o Bailhongal Dist SHILLEDAR IA/1/2020 Belagavi. Vs The Chief officer Bailhongal Town Municipal Council Tq Bailhongal Dist Belagavi. 2 L.A.C. 607/2018 Laxman Dundappa Umarani age C B Padnad (EVIDENCE) 65 Yrs R/o Kesaral Tq Athani Dt Belagavi Vs The SLAO Hipparagi Project , Athani Dist Belagavi. 3 L.A.C. 608/2018 Babalal Muktumasab Biradar C B Padanad (EVIDENCE) Patil Age 55 yrs R/o Athani Tq Athani Dt Belagavi. Vs The SLAO Hipparagi Project , Athani, Tq Athani Dist Belagavi. 4 L.A.C. 609/2018 Gadigeppa Siddappa Chili age C B padanad (EVIDENCE) 65 Yrs R/o Athani Tq Athani Dt Belagavi Vs The SLAO Hipparagi Project , Athani Dist Belagavi. 5 L.A.C. 610/2018 Kedari Ningappa Gadyal age 45 C B Padanad (EVIDENCE) Yrs R/o Athani Tq Athani Dt Belagavi Vs The SLAO Hipparagi Project , Athani Dist Belagavi. 6 L.A.C. 611/2018 Smt Kallawwa alias Kedu Bhima C B padanad (EVIDENCE) Pujari Vs The SLAO Hipparagi Project , Athani Dist Belagavi. 7 L.A.C. 612/2018 Kadappa Bhimappa Shirahatti C B Padanad (EVIDENCE) age 55 Yrs R/o Athani Tq Athani Dt Belagavi Vs The SLAO Hipparagi Project , Athani. Dist Belagavi. 1/8 Prl. District and Session Judge, Belagavi. SRI. BASAVARAJ I ADDL. DISTRICT AND SESSIONS JUDGE BELAGAVI Cause List Date: 18-09-2020 Sr. -

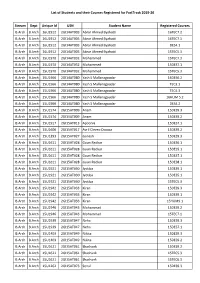

Stream Dept Unique Id USN Student Name Registered Courses B.Arch B

List of Students and their Courses Registered for FastTrack 2019-20 Stream Dept Unique Id USN Student Name Registered Courses B.Arch B.Arch 16U2912 2GI14AT003 Abrar Ahmed Byahatti 16TEC7.2 B.Arch B.Arch 16U2912 2GI14AT003 Abrar Ahmed Byahatti 16TEC7.1 B.Arch B.Arch 16U2912 2GI14AT003 Abrar Ahmed Byahatti DES4.1 B.Arch B.Arch 16U2912 2GI14AT003 Abrar Ahmed Byahatti 15TEC5.3 B.Arch B.Arch 15U1970 2GI14AT032 Mohammed 15TEC7.3 B.Arch B.Arch 15U1970 2GI14AT032 Mohammed 15DES7.1 B.Arch B.Arch 15U1970 2GI14AT032 Mohammed 15TEC5.3 B.Arch B.Arch 15U1966 2GI14AT080 Yash S Mallanagoudar 16DES6.2 B.Arch B.Arch 15U1966 2GI14AT080 Yash S Mallanagoudar TEC3.1 B.Arch B.Arch 15U1966 2GI14AT080 Yash S Mallanagoudar TEC4.3 B.Arch B.Arch 15U1966 2GI14AT080 Yash S Mallanagoudar 16HUM 5.2 B.Arch B.Arch 15U1966 2GI14AT080 Yash S Mallanagoudar DES4.2 B.Arch B.Arch 15U1574 2GI15AT009 Anam 15DES9.3 B.Arch B.Arch 15U1574 2GI15AT009 Anam 15DES9.2 B.Arch B.Arch 15U1917 2GI15AT013 Apoorva 15DES7.1 B.Arch B.Arch 15U1606 2GI15AT017 Avril Cleveo Dsouza 15DES9.2 B.Arch B.Arch 15U1393 2GI15AT027 Ganesh 15DES9.3 B.Arch B.Arch 15U1611 2GI15AT028 Gauri Redkar 15DES6.1 B.Arch B.Arch 15U1611 2GI15AT028 Gauri Redkar 15DES5.1 B.Arch B.Arch 15U1611 2GI15AT028 Gauri Redkar 15DES7.1 B.Arch B.Arch 15U1611 2GI15AT028 Gauri Redkar 15DES8.1 B.Arch B.Arch 15U1921 2GI15AT030 Jyotiba 15DES9.1 B.Arch B.Arch 15U1921 2GI15AT030 Jyotiba 15DES5.1 B.Arch B.Arch 15U1921 2GI15AT030 Jyotiba 15TEC5.3 B.Arch B.Arch 15U1942 2GI15AT033 Kiran 15DES9.3 B.Arch B.Arch 15U1942 2GI15AT033 Kiran 15DES9.1 -

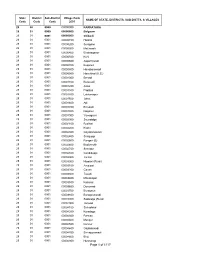

Village Code NAME of STATE, DISTRICTS, SUB-DISTTS

State District Sub-District Village Code NAME OF STATE, DISTRICTS, SUB-DISTTS. & VILLAGES Code Code Code 2001 29 00 0000 00000000 KARNATAKA 29 01 0000 00000000 Belgaum 29 01 0001 00000000 Chikodi 29 01 0001 00000100 Hadnal 29 01 0001 00000200 Sulagaon 29 01 0001 00000300 Mattiwade 29 01 0001 00000400 Bhatnaganur 29 01 0001 00000500 Kurli 29 01 0001 00000600 Appachiwadi 29 01 0001 00000700 Koganoli 29 01 0001 00000800 Hanabarawadi 29 01 0001 00000900 Hanchinal (K.S.) 29 01 0001 00001000 Benadi 29 01 0001 00001100 Bolewadi 29 01 0001 00001200 Akkol 29 01 0001 00001300 Padlihal 29 01 0001 00001400 Lakhanapur 29 01 0001 00001500 Jatrat 29 01 0001 00001600 Adi 29 01 0001 00001700 Bhivashi 29 01 0001 00001800 Naganur 29 01 0001 00001900 Yamagarni 29 01 0001 00002000 Soundalga 29 01 0001 00002100 Budihal 29 01 0001 00002200 Kodni 29 01 0001 00002300 Gayakanawadi 29 01 0001 00002400 Shirguppi 29 01 0001 00002500 Pangeri (B) 29 01 0001 00002600 Budulmukh 29 01 0001 00002700 Shendur 29 01 0001 00002800 Gondikuppi 29 01 0001 00002900 Yarnal 29 01 0001 00003000 Nippani (Rural) 29 01 0001 00003100 Amalzari 29 01 0001 00003200 Gavan 29 01 0001 00003300 Tavadi 29 01 0001 00003400 Manakapur 29 01 0001 00003500 Kasanal 29 01 0001 00003600 Donewadi 29 01 0001 00003700 Boragaon 29 01 0001 00003800 Boragaonwadi 29 01 0001 00003900 Sadalaga (Rural) 29 01 0001 00004000 Janwad 29 01 0001 00004100 Shiradwad 29 01 0001 00004200 Karadaga 29 01 0001 00004300 Barwad 29 01 0001 00004400 Mangur 29 01 0001 00004500 Kunnur 29 01 0001 00004600 Gajabarwadi 29 01 0001 00004700 Shivapurawadi 29 01 0001 00004800 Bhoj 29 01 0001 00004900 Hunnaragi Page 1 of 1117 State District Sub-District Village Code NAME OF STATE, DISTRICTS, SUB-DISTTS. -

Tank Information System Map of Bailhongala Taluk, Belagavi District

Tank Information System Map of Bailhongala Taluk, Belagavi District. Sunakumpi µ Mastamaradi 26719 1:110,300 Hoskoti 2178 2179 Vannur Gajaminhal 2301 2177 Legend 2176 Hanabar Hatti Drainage 19563 Mekalmaradi Mohare Ujjanatti Deshanur Mallapur Kariyat Nesargi District Road Koldur 19562 Nesargi Kalkuppi National Highway Somanatti 2452 State Highway Hogarti Koldur Madanbhavi Hanamana Hatti Taluk Boundary Mattikoppa Sutagatti Muraki Bhavi District Boundary Yaraguddi Lakkundi State Boundary Bairanatti Hannikeri 2441 Village Boundary Nagnur Hiremele Marikatti Jakkanaikanakoppa 18984 Bhavihal Pularkoppa Bailwad Bail Hongal (TMC) Chivatagundi Yaragoppa Ganikoppa Shigihalli Kariyat Sampagaon 18985 Navalgatti 31000 Bailhongal (Rural) 26762 2442 Tigadi Sanikoppa Giriyal Kariyat Bagewadi 19055 18988 2961 18986 Anigol Sampagaon (Bailhongal) 2130 Devalapur Benachinamardi 18987 Korvikoppa Yaradal Amatur Kallur Chick-Bagewadi2962 18970 19020 19054 Wakkund 2967 Mugabasav Mardinagalapur 2443 Pattihal Kariyat Sampagaon Bevinkoppa Chikkmulakur Hiremulakur Budihal Gaddikurvinkoppa 2969 Neginhal Araw alli 19021 19022 19023 Jalikoppa Lingadahalli TankInformation_OwnershipWise Kesarkoppa 2145 19024 19043 2970 2444 Garjur 19044 Kuragund Kenganoor Single Ownership 19047 Holihosur 19049 2971 Sangolli 19048 19053 19050 19052 Gudadur M.K.Hubli Belawadi KFD (4) 19051 Udikeri Holinagalapur 2933 18978 18966 2950 18960 Savatagi 2934 2938 2930 2931 MI (23) Amarapur Turamari 18956 18937 2935 Kulamanatti Pattihal K.B. 2125 2897 18967 2901 18980 2124 2952 2942 18940 Dodawad -

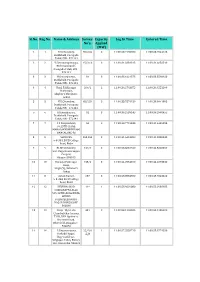

Sl.No. Reg.No. Name & Address Survey No's. Capacity Applied (MW

Sl.No. Reg.No. Name & Address Survey Capacity Log In Time Entered Time No's. Applied (MW) 1 1 H.V.Chowdary, 65/2,84 3 11:00:23.7195700 11:00:23.7544125 Doddahalli, Pavagada Taluk, PIN - 572141 2 2 Y.Satyanarayanappa, 15/2,16 3 11:00:31.3381315 11:00:31.6656510 Bheemunikunte, Pavagada Taluk, PIN - 572141 3 3 H.Ramanjaneya, 81 3 11:00:33.1021575 11:00:33.5590920 Doddahalli, Pavagada Taluk, PIN - 572141 4 4 Hanji Fakkirappa 209/2 2 11:00:36.2763875 11:00:36.4551190 Mariyappa, Shigli(V), Shirahatti, Gadag 5 5 H.V.Chowdary, 65/2,84 3 11:00:38.7876150 11:00:39.0641995 Doddahalli, Pavagada Taluk, PIN - 572141 6 6 H.Ramanjaneya, 81 3 11:00:39.2539145 11:00:39.2998455 Doddahalli, Pavagada Taluk, PIN - 572141 7 7 C S Nanjundaiah, 56 2 11:00:40.7716345 11:00:41.4406295 #6,15TH CROSS, MAHALAKHSMIPURAM, BANGALORE-86 8 8 SRINIVAS, 263,264 3 11:00:41.6413280 11:00:41.8300445 9-8-384, B.V.B College Road, Bidar 9 9 BLDE University, 139/1 3 11:00:23.8031920 11:00:42.5020350 Smt. Bagaramma Sajjan Campus, Bijapur-586103 10 10 Basappa Fakirappa 155/2 3 11:00:44.2554010 11:00:44.2873530 Hanji, Shigli (V), Shirahatti Gadag 11 11 Ashok Kumar, 287 3 11:00:48.8584860 11:00:48.9543420 9-8-384, B.V.B College Road, Bidar 12 12 DEVUBAI W/O 11* 1 11:00:53.9029080 11:00:55.2938185 SHARANAPPA ALLE, 549 12TH CROSS IDEAL HOMES RAJARAJESHWARI NAGAR BANGALORE 560098 13 13 Girija W/o Late 481 2 11:00:58.1295585 11:00:58.1285600 ChandraSekar kamma, T105, DNA Opulence, Borewell Road, Whitefield, Bangalore - 560066 14 14 P.Satyanarayana, 22/*/A 1 11:00:57.2558710 11:00:58.8774350 Seshadri Nagar, ¤ltĔ Bagewadi Post, Siriguppa Taluq, Bellary Dist, Karnataka-583121 Sl.No. -

A Study on Cascading Impact of Demonetization On

International Journal of Marketing & Financial Management, Volume 5, Issue 5, May-2017, pp 27-36 ISSN: 2348-3954 (Online) ISSN: 2349-2546 (Print), www.arseam.com Impact Factor: 3.43 Cite this paper as: SHIVASHANKAR K & BASAVARAJ TIGADI (2017), “A STUDY ON CASCADING IMPACT OF DEMONETIZATION ON LIVELIHOOD IN BELGAUM CITY OF KARNATAKA”, International Journal of Marketing & Financial Management, ISSN: 2348-3954 (online) ISSN: 2349-2546 (print), Volume 5 (Issue 5, May-2017), pp 27-36 A STUDY ON CASCADING IMPACT OF DEMONETIZATION ON LIVELIHOOD IN BELGAUM CITY OF KARNATAKA SHIVASHANKAR K & Mr. BASAVARAJ TIGADI Department of MBA, Post Graduate Studies Centre, Visvesvaraya Technological University, Belagavi, India ABSTRACT Demonetization has often been used as a tool to cut down hyperinflation, which is essential for keeping a country's inflation at a moderate level and in turn helps to have the fastest-growing economy in the world. The Prime Minister of India, Mr. Narendra Modi, came out with his master stroke against corruption, counterfeit currency, terrorism and black money by announcing demonetization and withdrawing Rs. 500 and Rs. 1000 notes as legal tender in India. Scholars, politicians, businessmen and every other section of society have expressed differing views about the effects of demonetization in India. Hence this study was taken up to learn the reality of the cascading impact of demonetization on livelihoods in Belgaum city of Karnataka. Emphasis was given to studying the various factors and problems or constraints faced by the people of Belgaum city because of demonetization. Primary data was collected from respondents of different classes in Belgaum city with the help of a structured questionnaire. -

ER Sheet Data Entry Form Name of Organization : CENTRAL

ER Sheet Data Entry Form Name of Organization : CENTRAL WATER AND POWER RESEARCH STATION, PUNE Employee No. : E0516 Service CCS Designation Stenographer Gr.I Sub Cadre Joining Date : 01-03-1982 Name Details Title First Name Middle Name SurName Mr Liyakatali Mahamulal Tigadi Initials LMT Identity Card No. : 246/2003 Sex Male Date Of Birth 20.7.1958 Date of Retirement 31.07.2018 Community Muslim Religion Islam Father’s Name Mahamulal Birth Details Birth Place Belgaum Birth State/ Karnataka Nationality Indian UT Birth District Belgaum Mother Tongue Urdu Domicile Maharashtra Physically Handicap Status NO Blood Group B +ve Identification Marks Marital Details Marital Status Married Spouse Name Mrs.Naseem Tigadi Spouse Nationality Indian Joining Details Source of Recruitment Staff Selection Joining 1-3-1982 Retirement 31.07.2018 Commission Date Date Departmental Examination Details (If applicable) Level Year Rank 1 Not Applicabe 2 3 Remarks (if any) Languages known Name of Language Read Write Speak Indian Languages 1 Urdu yes yes yes Known 2 Hindi yes yes yes 3 English yes yes yes 4 Marathi yes No yes 5 Kannada Yes Yes No Foreign Languages Arabic Yes No No Known 1 2 3 Details of deputation (if applicable) Name of the Office Post held at that Name of post Period of deputation time in parent office (selected for deputation Since From Not Applicable Details of Foreign Visit Sl. Place of Visit Date of Post held at Whether it Details of visit No. visit that time is a personal or official visit Not Applicable Transfer/Posting Detail (if applicable) Place Period of posting Since From Not Applicable Qualification (Use extra photocopy sheets for multi qualifications, experience, training, awards details) Qualification Discipline Specialization 1 Diploma in Commercial Non-Technical Stenography Practice Year Division CGPA/ % Marks Specialization 2 1982 II Institution University Place Country Govt. -

Government of Karnataka Performance Budget

GOVERNMENT OF KARNATAKA WATER RESOURCES DEPARTMENT (MAJOR AND MEDIUM) PERFORMANCE BUDGET 2008-09 JULY 2009 1 INDEX SL. PAGE PROJ ECT NO NO. A CADA 5 B PROJECTS UNDER KRISHNA BHAGYA J ALA NIGAM 14 1 UPPER KRISHNA PROJ ECT 15 1.1 DAM ZONE ALMATTI 17 1.2 CANAL, ZONE NO.1, BHEEMARAYANAGUDI 23 1.3 CANAL ZONE NO.2, KEMBHAVI 27 1.4 O & M ZONE, NARAYANAPUR 32 1.5 BAGALKOT TOWN DEVELOPMENT AUTHORITY, 35 BAGALKOT C KARNATAKA NEERAVARI NIGAM LIMITED 42 1 MALAPRABHA PROJ ECT 43 2 GHATAPRABHA PROJ ECT 46 3 HIPPARAGI IRRIGATION PROJ ECT 50 4 MARKANDEYA RESERVOIR PROJ ECT 53 5 HARINALA IRRIGATION PROJ ECT 56 6 UPPER TUNGA PROJ ECT 59 7 SINGTALUR LIFT IRRIGATION SCHEME 62 8 BHIMA LIFT IRRIGATION SCHEME 66 9 GANDORINALA PROJ ECT 72 10 TUNGA LIFT IRRIGATION SCHEME 75 11 KALASA – BHANDURNALA DIVERSION SCHEME S 77 12 DUDHGANGA IRRIGATION PROJ ECT 79 13 BASAPURA LIFT IRRIGATION SCHEME 82 14 ITAGI SASALWAD LIFT IRRIGATION SCHEME 84 15 SANYASIKOPPA LIS 87 16 KALLUVADDAHALLA NEW TANK 89 17 TILUVALLI LIS 90 18 NAMMURA BANDARAS 92 19 DANDAVATHY RESERVOIR PROJECT 93 20 BENNITHORA PROJ ECT 94 21 LOWER MULLAMARI PROJ ECT 97 22 VARAHI PROJ ECT 100 23 UBRANI – AMRUTHAPURA LIFT IRRIGATION SCHEME 102 24 GUDDADAMALLAPURA LIFT IRRIGATION SCHEME 104 25 AMARJ A PROJ ECT 106 2 SL. PAGE PROJ ECT NO NO. 26 SRI RAMESHWARA LIFT IRRIGATION SCHEME 109 27 BELLARYNALA LIFT IRRIGATION SCHEME 111 28 HIRANYAKESHI LIFT IRRIGATION SCHEME 114 29 KINIYE PROJECT 116 30 J AVALAHALLA LIFT IRRIGATION SCHEME 117 31 BENNIHALLA LIFT IRRIGATION SCHEME 118 32 KONNUR LIFT IRRIGATION SCHEME 119 33 -

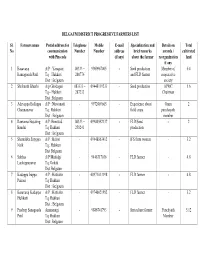

BELGAUM DISTRICT PROGRESSIVE FARMERS LIST Sl. No Farmers

BELGAUM DISTRICT PROGRESSIVE FARMERS LIST Sl. Farmers name Postal address for Telephone Mobile E-mail Specialization and Details on Total No communication Number Number address brief remarks awards / cultivated with Pin code (if any) about the farmer reorganization land if any 1 Basavaraj A/P : Yaragatii 08333 – 9900967405 - Seed production Member of 6.4 Ramagouda Patil Tq – Hukkeri 280776 and FLD farmer cooperative Dist : Belgaum society 2 Shrikanth Bhushi A/p Ghodageri 083333 – 09448119233 - Seed production APMC 5.6 Tq – Hukkeri 287233 Chairman Dist :Belgaum 3 Adeveppa Ballappa A/P : Nirwanatti - 9972469665 - Experience about Gram 2 Channanavar Tq : Hukkeri field crops. panchayath Dist : Belgaum member 4 Ramanna Bosaling A/P: Beniwad 08333 – 09980592137 - FLD/Seed - 2 Bandai Tq: Hukkeri 258241 production Dist : Belgaum 5 Shantakka Satyppa A/P : Belavi - 09448863412 - IFS farm women - 3.2 Naik Tq : Hukkeri Dist Belgaum 6 Subhas A/P Mudalgi - 9448317036 - FLD farmer - 4.8 Lankeppanavar Tq :Gokak Dist Belgaum 7 Kadappa Irappa A/P : Hattialur - 08971013198 - FLD farmer - 4.8 Pairasi Tq: Hukkeri Dist : Belgaum 8 Basavaraj Kadappa A/P : Hattialur - 09740021982 - FLD farmer - 3.2 Hulikatti Tq: Hukkeri Dist : Belgaum 9 Pradeep Sanagouda Ammanagi, - 9886741793 - Sericulture farmer Panchyath 5.12 Patil Tq: Hukkeri Member Dist: Belgaum 10 Dundappa Appanna Ammanagi, - 09740634049 - Sericulture farmer - 2.20 Chinchewadi Tq: Hukkeri Dist: Belgaum 11 Shankar Kadappa A/P: Ghodageri - 09448253679 - IFS farmer Award from 14 Mutagar Tq : Hukkeri UAS Dharwad Dist :Belgaum 12 Mallappa Belavi - 09980381412 - Organic farmer - 10.4 Bharamanna Naik Tq: Hukkeri Dist: Belgaum 13 Vittal Patil Rajapur - 09901780466 - Jaggary producer - 2 Tq: Gokak and Marketing – Dist: Belgaum Sugarcane farmer 14 Ramesh A/P : Hallur - 09986911500 Rdesai2010 Sugarcane grower - 4.8 Gurushiddappa Tq – Gokak @rediffmail. -

Prl. District and Session Judge, Belagavi. Sri. Chandrashekhar Mrutyunjaya Joshi PRL

Prl. District and Session Judge, Belagavi. Sri. Chandrashekhar Mrutyunjaya Joshi PRL. DISTRICT AND SESSIONS JUDGE BELAGAVI Cause List Date: 14-09-2020 Sr. No. Case Number Timing/Next Date Party Name Advocate 11.00 AM-02.00 PM 1 Misc 65/2020 Anitab Vidyadhar Nadagouda age 54 yrs V.V.PATIL (NOTICE) R/o Tirth tq Athani Dt Belagavi Vs Kalagouda Mahaveer Patil age 32 yrs R/o Tirth Tq Athani Dt Belagavi 2 COMM.O.S 7/2020 M/s Sairaj Builders and Developers R.B.Deshpande (A.D.R.) 356/1A MG road Tilakwadi Belagavi Vs Govind Mahadev Raut age 77 yrs R/o P.No.2 Swami Vivekanand Colony Tilakwadi Belagavi 3 R.A. 336/2019 Manisha Balaram Warang Age 49 yrs R/o Pawar Shrikant (ARGUMENTS) Plot No.6/5,Brahmadev Ng,Dhamane S. IA/1/2019 Road,Vadagaon,Belagavi590005 Vs K A Salavi Renuka Ningappa Patil Age 59yrs R/oAshwmegh Building,Parijat Colony,Anand Nagar,M.Vadagaon,Belagavi 4 R.A. 34/2020 Yallappa Balu More Age 67 yrs R/o A.L.Sandrimani (ARGUMENTS) H.No.5/B,Chavat Galli, IA/1/2020 Kawalewadi,A/P.Bijagarni,Ta.Dt.Belagavi. IA/2/2020 Vs Sushila Krishna Tashildar Age 48 yrs R/o 308, Mujawar Galli, Tal.Dt.Belagavi. 5 R.A. 348/2019 Appasaheb Bhikuji A/S Balaji Mane Age Jadhav Rupesh (Steps) 59yrs R/o Pattan Kadoli,PaireMala,Tq G. IA/1/2019 Hatakangala,Dt Kolhapur IA/2/2019 Vs Bapusaheb Bhikuji A/S Balaji Mane Age 66 yrs R/o Rukmini Nagar,Belagavi. -

Sub Centre List As Per HMIS SR

Sub Centre list as per HMIS SR. DISTRICT NAME SUB DISTRICT FACILITY NAME NO. 1 Bagalkote Badami ADAGAL 2 Bagalkote Badami AGASANAKOPPA 3 Bagalkote Badami ANAVALA 4 Bagalkote Badami BELUR 5 Bagalkote Badami CHOLACHAGUDDA 6 Bagalkote Badami GOVANAKOPPA 7 Bagalkote Badami HALADURA 8 Bagalkote Badami HALAKURKI 9 Bagalkote Badami HALIGERI 10 Bagalkote Badami HANAPUR SP 11 Bagalkote Badami HANGARAGI 12 Bagalkote Badami HANSANUR 13 Bagalkote Badami HEBBALLI 14 Bagalkote Badami HOOLAGERI 15 Bagalkote Badami HOSAKOTI 16 Bagalkote Badami HOSUR 17 Bagalkote Badami JALAGERI 18 Bagalkote Badami JALIHALA 19 Bagalkote Badami KAGALGOMBA 20 Bagalkote Badami KAKNUR 21 Bagalkote Badami KARADIGUDDA 22 Bagalkote Badami KATAGERI 23 Bagalkote Badami KATARAKI 24 Bagalkote Badami KELAVADI 25 Bagalkote Badami KERUR-A 26 Bagalkote Badami KERUR-B 27 Bagalkote Badami KOTIKAL 28 Bagalkote Badami KULAGERICROSS 29 Bagalkote Badami KUTAKANAKERI 30 Bagalkote Badami LAYADAGUNDI 31 Bagalkote Badami MAMATGERI 32 Bagalkote Badami MUSTIGERI 33 Bagalkote Badami MUTTALAGERI 34 Bagalkote Badami NANDIKESHWAR 35 Bagalkote Badami NARASAPURA 36 Bagalkote Badami NILAGUND 37 Bagalkote Badami NIRALAKERI 38 Bagalkote Badami PATTADKALL - A 39 Bagalkote Badami PATTADKALL - B 40 Bagalkote Badami SHIRABADAGI 41 Bagalkote Badami SULLA 42 Bagalkote Badami TOGUNSHI 43 Bagalkote Badami YANDIGERI 44 Bagalkote Badami YANKANCHI 45 Bagalkote Badami YARGOPPA SB 46 Bagalkote Bagalkot BENAKATTI 47 Bagalkote Bagalkot BENNUR Sub Centre list as per HMIS SR. DISTRICT NAME SUB DISTRICT FACILITY NAME NO.