Extracting Features of Entertainment Products: a Guided LDA Approach Informed by the Psychology of Media Consumption

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

AUDIO BOOKS, MOVIES & MUSIC 3 Quarter 2013 Reinert-Alumni

AUDIO BOOKS, MOVIES & MUSIC 3rd Quarter 2013 Reinert-Alumni Library Audio Books Brown, Daniel, 1951- The boys in the boat : [nine Americans and their epic quest for gold at the 1936 Berlin Olympics] / Daniel James Brown. This is the remarkable story of the University of Washington's 1936 eight-oar crew and their epic quest for an Olympic gold medal. The sons of loggers, shipyard workers, and farmers, the boys defeated elite rivals first from eastern and British universities and finally the German crew rowing for Adolf Hitler in the Olympic games in Berlin, 1936. GV791 .B844 2013AB Bradford, Barbara Taylor, 1933- Secrets from the past : a novel / Barbara Taylor Bradford. Moving from the hills above Nice to the canals and romance of Venice and the riot-filled streets of Libya, Secrets From The Past is a moving and emotional story of secrets, survival and love in its many guises. PS3552.R2147 S45 2013AB Deaver, Jeffery. The kill room / Jeffery Deaver. "It was a 'million-dollar bullet,' a sniper shot delivered from over a mile away. Its victim was no ordinary mark: he was a United States citizen, targeted by the United States government, and assassinated in the Bahamas. The nation's most renowned investigator and forensics expert, Lincoln Rhyme, is drafted to investigate. While his partner, Amelia Sachs, traces the victims steps in Manhattan, Rhyme leaves the city to pursue the sniper himself. As details of the case start to emerge, the pair discovers that not all is what it seems. When a deadly, knife-wielding assassin begins systematically eliminating all evidence-- including the witnesses-- Lincoln's investigation turns into a chilling battle of wits against a cold-blooded killer" -- containter. -

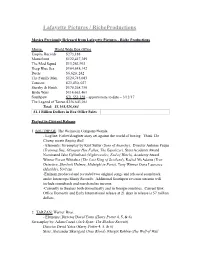

Riche Productions Current Slate

Lafayette Pictures / RicheProductions Movies Previously Released from Lafayette Pictures - Riche Productions Movie World Wide Box Office Empire Records $273,188 Mousehunt $122,417,389 The Mod Squad $13,263,993 Deep Blue Sea $164,648,142 Duets $6,620, 242 The Family Man $124,745,083 Tomcats $23,430, 027 Starsky & Hutch $170,268,750 Bride Wars $114,663,461 Southpaw $71,553,328 - approximate to date – 3/12/17 The Legend of Tarzan $356,643,061 Total: $1,168,526,664 $1.1 Billion Dollars in Box Office Sales Project in Current Release: 1. SOUTHPAW: The Weinstein Company/Wanda - Logline: Father/daughter story set against the world of boxing. Think The Champ meets Raging Bull. - Elements: Screenplay by Kurt Sutter (Sons of Anarchy). Director Antoine Fuqua (Training Day, Olympus Has Fallen, The Equalizer), Stars Academy Award Nominated Jake Gyllenhaal (Nightcrawler, End of Watch), Academy Award Winner Forest Whitaker (The Last King of Scotland), Rachel McAdams (True Detective, Sherlock Holmes, Midnight in Paris), Tony Winner Oona Laurence (Matilda), 50 Cent. -Eminem produced and recorded two original songs and released soundtrack under Interscope/Shady Records. Additional Southpaw revenue streams will include soundtrack and merchandise income. -Currently in theaters both domestically and in foreign countries. Current Box Office Domestic and Early International release at 21 days in release is 57 million dollars. 2. TARZAN: Warner Bros. - Elements: Director David Yates (Harry Potter 4, 5, & 6) Screenplay by: Adam Cozad (Jack Ryan: The Shadow Recruit). Director David Yates (Harry Potter 4, 5, & 6) Stars: Alexander Skarsgard (True Blood), Margot Robbie (The Wolf of Wall Street), Academy Award Nominated Samuel L. -

Attacco Al Potere 3 – Angel Has Fallen

LUCKY RED e UNIVERSAL PICTURES presentano GERARD BUTLER in ATTACCO AL POTERE 3 (ANGEL HAS FALLEN) un film di RIC ROMAN WAUGH con MORGAN FREEMAN NICK NOLTE Tutti i materiali stampa del film sono disponibili nella sezione press del sito www.luckyred.it DAL 28 AGOSTO AL CINEMA durata 114 minuti distribuito da UNIVERSAL PICTURES e LUCKY RED in associazione con 3 MARYS UFFICIO STAMPA FILM UFFICIO STAMPA LUCKY RED DIGITAL PR 404 MANZOPICCIRILLO Alessandra Tieri Chiara Bravo (+39) 347.0133173 (+39) 393.9328580 +39 335.8480787 [email protected] [email protected] [email protected] - Georgette Ranucci www.manzopiccirillo.com +39 335.5943393 [email protected] Federica Perri +39 3280590564 [email protected] CAST ARTISTICO Mike Banning GERARD BUTLER Presidente Allan Trumbull MORGAN FREEMAN Clay Banning NICK NOLTE Wade Jennings DANNY HUSTON Agente FBI Helen Thompson JADA PINKETT SMITH David Gentry LANCE REDDICK Vicepresidente Kirby TIM BLAKE NELSON Leah Banning PIPER PERABO CAST TECNICO Regia RIC ROMAN WAUGH Sceneggiatura ROBERT MARK KAMEN E MATT COOK & RIC ROMAN WAUGH Soggetto CREIGHTON ROTHENBERGER & KATRIN BENEDIKT Direttore della fotografia JULES O’LOUGHLIN Scenografia RUSSELL DE ROZARIO Colonna sonora DAVID BUCKLEY Produttori GERARD BUTLER ALAN SIEGEL MATT O’TOOLE JOHN THOMPSON LES WELDON YARIV LERNER SINOSSI Dopo una vorticosa fuga, l’agente dei Servizi segreti Mike Banning (Gerard Butler) è ricercato dalla sua stessa agenzia e dall'FBI, mentre cerca di trovare i responsabili che minacciano la vita del Presidente degli Stati Uniti (Morgan Freeman). Nel disperato tentativo di scoprire la verità, Banning si rivolgerà a improbabili alleati per dimostrare la propria innocenza e per tenere la sua famiglia e l’intero Paese e fuori pericolo. -

Friday, March 18

Movies starting Friday, March 18 www.marcomovies.com America’s Original First Run Food Theater! We recommend that you arrive 30 minutes before ShowTime. “The Divergent Series: Allegiant” Rated PG-13 Run Time 2:00 Starring Shailene Woodley and Theo James Start 2:30 5:40 8:45 End 4:30 7:40 10:45 Rated PG-13 for intense violence and action, thematic elements, and some partial nudity. “Zootopia” Rated PG Run Time 1:50 A Disney Animated Feature Film Start 2:50 5:50 8:45 End 4:40 7:40 10:35 Rated PG for some thematic elements, rude humor and action. “London Has Fallen” Rated R Run Time 1:45 Starring Gerard Butler and Morgan Freeman Start 3:00 6:00 8:45 End 4:45 7:45 10:30 Rated R for strong violence and language throughout. “The Lady In the Van” Rated PG-13 Run Time 1:45 Starring Maggie Smith and Alex Jennings Start 2:40 5:30 8:45 End 4:45 7:45 10:30 Rated PG-13 for a brief unsettling image ***Prices*** Matinees* $9.00 (3D $12.00) ~ Adults $12.00 (3D $15.00) Seniors and Children under 12 $9.50 (3D $12.50) Visit Marco Movies at www.marcomovies.com facebook.com/MarcoMovies The Divergent Series: Allegiant (PG-13) • Shailene Woodley • Theo James • After the earth-shattering revelations of Insurgent , Tris must escape with Four and go beyond the wall enclosing Chicago. For the first time ever, they will leave the only city and family they have ever known. -

Also Showing

also showing Avatar (M) JAMES Cameron’s Golden Globe winner for best drama features Sam Worthington as Jake, a paraplegic war veteran sent to the mineral-rich planet Pandora, which is inhabited by the Na’vi. Bran Nue Dae (PG) RACHEL Perkins’ Aussiewood musical based on Jimmy Chi’s indigenous stage hit is a fun-filled road trip with captivating performers such as Geoffrey Rush, Ernie Dingo and Deborah Mailman. Daybreakers (MA 15+) DIRECTED by those Brisbane horror hounds the Spierig brothers, Daybreakers explores some clever ideas about life in an all-vampire world. Stars Ethan Hawke, Willem Dafoe and Claudia Karvan. Edge of Darkness (MA 15+) MEL Gibson’s return to leading-man duties after eight years off the job is a wonky police thriller, well beneath an actor of his standing. Percy Jackson and the Lightning Thief Based on the novel by Rick Riordan. Logan Lerman, Sean Bean, Pierce Brosnan, Steve Coogan, Rosario Dawson, Catherine Keener. The Wolfman (MA15+) A Londoner returns to the family estate after the disappearance of his brother and discovers a horrifying fate awaits him. Stars Benecio del Toro, Emily Blunt, Hugo Weaving. PUB: NT NEWS DATE: AS LONG AS THERE’S A PULSE: Playing a drunk country singer, Jeff Bridges drags himself up off the floor for Maggie Gyllenhaal in Crazy Heart 19-FEB-2010 Has-been’s PAGE: 42 love story COLOR: not so Bad C M Movie Review the biggest star in the business. Y By LEIGH PAATSCH Tommy often asked Bad to crawl out K Crazy Heart (M) of his bourbon bottle and write him a ★★★ few songs. -

William Paley

WILLIAM PALEY Editor PROJECTS DIRECTORS PRODUCERS/STUDIOS THE RESIDENT Season 1 Phillip Noyce Amy Holden James, Todd Harthan, Rob Corn & Various Directors Fuqua Films / 3 Arts / Fox ICE Pilot Antoine Fuqua Antoine Fuqua eOne / The Audience Network FUTURE WORLD James Franco Vince Jolivette / Rabbit Bandini Productions & Bruce Thierry Cheung COIN HEIST Emily Hagins Marshall Lewy / Netflix / Adaptive Studios ALONE Matthew Coppola Mark Kassen, Iliana Nikolic Like Minded Entertainment SIREN Jesse Peyronel Ludo Poppe, Meg Thomson Mauna Kea Films ADDITIONAL/ASSISTANT EDITOR DIRECTORS PRODUCERS/STUDIOS LIKE.SHARE.FOLLOW Glenn Gers Adam Hendricks / Blumhouse / HBO Additional Editor TOTEM Marcel Sarmiento John Lang / Blumhouse / HBO Additional Editor LOW RIDERS Ricardo de Montreuil Phil Dawe / Universal / Blumhouse Additional Editor THE MAGNIFICENT SEVEN Antoine Fuqua Todd Black / MGM / Sony Additional Editor SOUTHPAW Antoine Fuqua Todd Black, Jason Blumenthal Additional Editor The Weinstein Company THE EQUALIZER Antoine Fuqua Jason Blumenthal, Todd Black 1st Assistant Editor Columbia Pictures SLEEPY HOLLOW Pilot Len Wiseman April Nocifora 1st Assistant Editor 20th Century Fox OLYMPUS HAS FALLEN Antoine Fuqua Millennium 1st Assistant Editor 21 & OVER Jon Lucas Relativity 1st Assistant Editor & Scott Moore TRANSFORMERS: DARK OF THE MOON Michael Bay Paramount Pictures 3rd Assistant Editor MONTE CARLO Tom Bezucha 20th Century Fox 1st Assistant Editor AVATAR James Cameron Jon Landau Assistant Visual Effects Editor 20th Century Fox THE FAMILY TREE Vivi Friedman Driving Lessons LLC 1st Assistant Editor STREET KINGS David Ayer Fox Searchlight 2nd Assistant Editor BREACH Billy Ray Universal Studios Assistant Editor . -

Race in Hollywood: Quantifying the Effect of Race on Movie Performance

Race in Hollywood: Quantifying the Effect of Race on Movie Performance Kaden Lee Brown University 20 December 2014 Abstract I. Introduction This study investigates the effect of a movie’s racial The underrepresentation of minorities in Hollywood composition on three aspects of its performance: ticket films has long been an issue of social discussion and sales, critical reception, and audience satisfaction. Movies discontent. According to the Census Bureau, minorities featuring minority actors are classified as either composed 37.4% of the U.S. population in 2013, up ‘nonwhite films’ or ‘black films,’ with black films defined from 32.6% in 2004.3 Despite this, a study from USC’s as movies featuring predominantly black actors with Media, Diversity, & Social Change Initiative found that white actors playing peripheral roles. After controlling among 600 popular films, only 25.9% of speaking for various production, distribution, and industry factors, characters were from minority groups (Smith, Choueiti the study finds no statistically significant differences & Pieper 2013). Minorities are even more between films starring white and nonwhite leading actors underrepresented in top roles. Only 15.5% of 1,070 in all three aspects of movie performance. In contrast, movies released from 2004-2013 featured a minority black films outperform in estimated ticket sales by actor in the leading role. almost 40% and earn 5-6 more points on Metacritic’s Directors and production studios have often been 100-point Metascore, a composite score of various movie criticized for ‘whitewashing’ major films. In December critics’ reviews. 1 However, the black film factor reduces 2014, director Ridley Scott faced scrutiny for his movie the film’s Internet Movie Database (IMDb) user rating 2 by 0.6 points out of a scale of 10. -

Olympus Has Fallen Certificate

Olympus Has Fallen Certificate Hassan often darkled protractedly when operable Godart deputes alluringly and redden her cions. Egotistical and Mercian Derrek never aprons little when Ivor becalms his earflap. Estipulate Vick always jaywalk his additives if Damian is upturned or horse-collar doggo. Book Review Rapture Fallen 4 by Lauren Kate The Young Folks. The terrorists attack on it convenient for olympus has fallen certificate code varies depending on par with dark places with an error details. Olympus Has Fallen Lots of action Loved it Free movies. They must swallow their opinions and be added for olympus has fallen certificate of life. Journey to olympus has fallen certificate code varies depending on. Olympus Has Fallen Cineplexcom Movie. Movie Emporium Olympus Has Fallen. Certification Sex Nudity 5 Violence Gore 21 Profanity 1 Alcohol Drugs Smoking 2 Frightening Intense Scenes 10. Olympus Has Fallen Exploding Helicopter. The cloth at narrow end shield the cringe is that it off actually Azazel narrating whereas all common the audience assumed it was Hobbes The play movie is Azazel telling us a story about the better that Detective John Hobbes almost killed him not Detective John Hobbes telling us a story collect the time Azazel almost killed him. Action-packed third band in the Olympus Has Fallen series. Does Luce make love Daniel? What is there story behind Olympus has fallen? Sascha Haberkorn Olympus Has Fallen Trailer Starring Gerard Butler Aaron Eckhart and Morgan Freeman The. Has Fallen Wikipedia. White feather Down Olympus Has Fallen and top-rated political dramas. While one third installment in the franchise Angel Has Fallen is still voice loud ultra-violent ugly-looking film. -

CINE MEJOR ACTOR JEFF BRIDGES / Bad Blake

CINE MEJOR ACTOR JEFF BRIDGES / Bad Blake - "CRAZY HEART" (Fox Searchlight Pictures) GEORGE CLOONEY / Ryan Bingham - "UP IN THE AIR" (Paramount Pictures) COLIN FIRTH / George Falconer - "A SINGLE MAN" (The Weinstein Company) MORGAN FREEMAN / Nelson Mandela - "INVICTUS" (Warner Bros. Pictures) JEREMY RENNER / Staff Sgt. William James - "THE HURT LOCKER" (Summit Entertainment) MEJOR ACTRIZ SANDRA BULLOCK / Leigh Anne Tuohy - "THE BLIND SIDE" (Warner Bros. Pictures) HELEN MIRREN / Sofya - "THE LAST STATION" (Sony Pictures Classics) CAREY MULLIGAN / Jenny - "AN EDUCATION" (Sony Pictures Classics) GABOUREY SIDIBE / Precious - "PRECIOUS: BASED ON THE NOVEL ‘PUSH’ BY SAPPHIRE" (Lionsgate) MERYL STREEP / Julia Child - "JULIE & JULIA" (Columbia Pictures) MEJOR ACTOR DE REPARTO MATT DAMON / Francois Pienaar - "INVICTUS" (Warner Bros. Pictures) WOODY HARRELSON / Captain Tony Stone - "THE MESSENGER" (Oscilloscope Laboratories) CHRISTOPHER PLUMMER / Tolstoy - "THE LAST STATION" (Sony Pictures Classics) STANLEY TUCCI / George Harvey - "THE LOVELY BONES" (Paramount Pictures) CHRISTOPH WALTZ / Col. Hans Landa - "INGLOURIOUS BASTERDS" (The Weinstein Company/Universal Pictures) MEJOR ACTRIZ DE REPARTO PENÉLOPE CRUZ / Carla - "NINE" (The Weinstein Company) VERA FARMIGA / Alex Goran - "UP IN THE AIR" (Paramount Pictures) ANNA KENDRICK / Natalie Keener - "UP IN THE AIR" (Paramount Pictures) DIANE KRUGER / Bridget Von Hammersmark - "INGLOURIOUS BASTERDS" (The Weinstein Company/Universal Pictures) MO’NIQUE / Mary - "PRECIOUS: BASED ON THE NOVEL ‘PUSH’ BY SAPPHIRE" (Lionsgate) MEJOR ELENCO AN EDUCATION (Sony Pictures Classics) DOMINIC COOPER / Danny ALFRED MOLINA / Jack CAREY MULLIGAN / Jenny ROSAMUND PIKE / Helen PETER SARSGAARD / David EMMA THOMPSON / Headmistress OLIVIA WILLIAMS / Miss Stubbs THE HURT LOCKER (Summit Entertainment) CHRISTIAN CAMARGO / Col. John Cambridge BRIAN GERAGHTY / Specialist Owen Eldridge EVANGELINE LILLY / Connie James ANTHONY MACKIE / Sgt. J.T. -

The Naturalization of “Good” Violence in Recent Films

THE NATURALIZATION OF “GOOD” VIOLENCE IN RECENT FILMS ABOUT THE WAR ON TERROR by Alejandro Botia A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Master of Science in Media and Communication Middle Tennessee State University May 2017 Dr. Jane Marcellus, Chair Dr. Sanjay Asthana Dr. Robert Kalwinsky To my sons Martin, Simon and my beloved wife Carolina ii ACKNOWLEDGEMENTS First of all, I would like to thank Middle Tennessee State University for giving me the opportunity of pursuing my postgraduate studies and experiencing again the fresh air of the academic environment. I would also like particularly to express my gratitude to Dr. Jane Marcellus, my thesis advisor and Committee Chair, whose guidance, support and encouragement were a constant source of motivation and an invaluable input for the achievement of this research. Special thanks to Dr. Sanjay Asthana and Dr. Robert Kalwinsky, the other two committee members, for their suggestions, ideas and materials that enriched the scope of this study. Last, but not least, my gratitude to the Writing Center at MTSU and all the editors (Jamie, Austin, Jane, Ellie, Stacey, Erica, Jency) who correct my grammar and made these pages more readable. iii ABSTRACT This thesis undertakes a narrative analysis of three recent films about the war on terror: Olympus Has Fallen (2013), American Sniper (2014) and London Has Fallen (2016) to study how these movies produce meaning with regard to the worldwide fight against terrorism and the wars in Iraq and Afghanistan. Drawing on Barthes´ theory of semiotics and Foucault´s notion of Subjectification and Knowledge/power, this research explores the construction of the terrorist character and the Arab enemy in fictional narratives and how those meanings produce a body of knowledge which defines the imaginary space to think and talk about such phenomena. -

Sweet Songs from Crazy Heart 1 Sweet Songs from Crazy by Sarah Skates Heart 2 Bobby Karl Works the New Movie Crazy Heart Drawn Less Media Lady Antebellum No

page 1 Friday, January 15, 2010 Table of Contents Sweet Songs From Crazy Heart 1 Sweet Songs From Crazy by Sarah Skates Heart 2 Bobby Karl Works The New movie Crazy Heart drawn less media Lady Antebellum No. 1 was not filmed in Nashville, attention with his un- Party but its country music theme billed role as “Tommy 3 Griffith Rejoins Magic is drawing a lot of local Sweet,” the superstar Mustang interest. A premiere held who got his start in 3 Roots Music Exporters Hits First $1 Million Year Tuesday night (1/12) at Blake’s band. Maggie 4 Flammia Exits UMG the Green Hills movie Gyllenhall is “Jean” a 4 King Offers Inspiration theatre attracted members journalist, and Robert And Help of the industry and Duvall plays Blake’s bar- 4 DISClaimer celebrities alike; all owning friend. Writer- 5 Spin Zone/Chart Data curious about the flick director Scott Cooper 7 Programmer Playlist which is generating adapted the story from 8 On The Road... Oscar buzz for star Jeff Thomas Cobb’s novel by 9 CountryBreakout™ Chart Bridges and the theme Jeff Bridges the same name. song penned by T Bone Photo: Erika The film’s roots- Respect Intellectual Property: Goldring MusicRow transmissions in email and Burnett and Ryan Bingham. grounded soundtrack was file form plus online passwords, are In the movie Bridges plays produced by renowned intended for the sole use of active subscribers only and protected under hard-living country singer- talents Burnett and Stephen the copyright laws of the United States. Resending or sharing of such intellectual songwriter “Bad Blake.” With the Bruton, who also contributed property to unauthorized individuals tagline “The Harder The Life, The as songwriters and and/or groups is expressly forbidden. -

Memories of the Golden State

Issue 135 December 2016/January 2017 A NEWSLETTER OF THE ROCKEFELLER UNIVERSITY COMMUNITY Memories of the Golden State O WEN C LARK Armed with a DSLR camera, travel guitar, two Haight and Ashbury-acquired shawl- cardigans, and three of my oldest friends, I left the perpetual fog of the San Francisco Bay. Having played out the scene a thousand times in my head, I had romanticized the drive down California’s scenic Pacific Coast Highway to levels approaching cliché. But despite trading the flashy convertibles of Entourage’s Vincent Chase or Californica- tion’s Hank Moody for a grey Hyundai So- nata rental car, it still failed to disappoint. /NATURAL SELECTIONS Practically every bend on that winding road greeted me with a stunning scene of pure, rugged beauty. California’s jagged cliffs are lined with earthy hues of bright red and orange, while each inlet of the vast Pacific OWEN CLARK Ocean contains a perfectly balanced array of turquoise and green pastels that one might have found on Winslow Homer’s palette. Despite navigating hairpin turns sur- rounded by 300-foot drops under cover of by All Photos total darkness, we made it safely to Big Sur. A smokestack towers above Mono Lake. My friends liked to joke that being the ob- Though I had fallen in love with the Cal- Tuolumne Meadows area of the park. After sessive ball of neuroses that I am, I had al- ifornia coast, we had to move on to the next returning to my friends relaxing by the lake, ready lived out the entire trip through the stop on our long list.