Analysis of Numerical Methods II Chee Han Tan Last Modified

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


7 Numerical Integration

7 Numerical Integration

7.1 Elementary Algorithms

Let us suppose we are confronted with a function $f(x)$ tabulated at the points $x_1, x_2, x_3, \ldots, x_n$, not necessarily equally spaced. We require the integral of $f(x)$ from $x_1$ to $x_n$. How do we proceed? The simplest algorithm we can use, valid both for equally and unequally spaced points, is the trapezoidal rule. The trapezoidal rule assumes that the function is linear between the tabulated points. With this assumption, it can be seen that the integral from $x_1$ to $x_2$ is given by

$$\int_{x_1}^{x_2} f(x)\,dx \approx \frac{1}{2} h (f_1 + f_2),$$

where $h = x_2 - x_1$.

[Figure: the straight line joining $f_1$ at $x_1$ and $f_2$ at $x_2$.]

This equation can be extended to $n$ points for equally spaced points,

$$\int_{x_1}^{x_n} f(x)\,dx \approx h\left(\tfrac{1}{2} f_1 + f_2 + f_3 + \cdots + \tfrac{1}{2} f_n\right) \qquad (1)$$

and

$$\int_{x_1}^{x_n} f(x)\,dx \approx \tfrac{1}{2}(x_2 - x_1)(f_1 + f_2) + \tfrac{1}{2}(x_3 - x_2)(f_2 + f_3) + \cdots + \tfrac{1}{2}(x_n - x_{n-1})(f_{n-1} + f_n)$$

for unevenly spaced points.

Exercise 7.1: Use the trapezoidal rule to evaluate the integral

$$\int_1^2 x \sin x\,dx$$

with 10 points. Double the number of points and re-evaluate the integral. The "exact" value of this integral is 1.440422. Rewrite your program to evaluate the integral at an arbitrary number of points input by the user. Are 1000 points better than 100 points?

For equally spaced points the trapezoidal rule has an error $O(h^3)$, which means that the error for each interval in the rule is proportional to the cube of the spacing.
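One possible way to approach Exercise 7.1 is sketched below in Python. The function names `trapezoid` and `integrate_x_sin_x` are illustrative choices, not taken from the source text. The helper uses the unequal-spacing form of the rule, so it also covers the equally spaced case.

```python
import math

def trapezoid(xs, fs):
    """Trapezoidal rule for tabulated points, equally spaced or not."""
    total = 0.0
    for i in range(len(xs) - 1):
        # Area of the trapezoid between x_i and x_{i+1}.
        total += 0.5 * (xs[i + 1] - xs[i]) * (fs[i] + fs[i + 1])
    return total

def integrate_x_sin_x(n_points, a=1.0, b=2.0):
    """Tabulate f(x) = x sin x at n_points equally spaced points and integrate."""
    h = (b - a) / (n_points - 1)
    xs = [a + i * h for i in range(n_points)]
    fs = [x * math.sin(x) for x in xs]
    return trapezoid(xs, fs)

if __name__ == "__main__":
    exact = 1.440422  # value quoted in the exercise, rounded to six decimals
    for n in (10, 20, 100, 1000):
        approx = integrate_x_sin_x(n)
        print(f"{n:5d} points: {approx:.6f}  (difference {abs(approx - exact):.2e})")
```

Because the quoted "exact" value is rounded to six decimals, differences below about $10^{-6}$ mostly reflect that rounding rather than the quadrature error.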

Generalizing the Trapezoidal Rule in the Complex Plane

Generalizing the trapezoidal rule in the complex plane. Bengt Fornberg∗, Department of Applied Mathematics, University of Colorado, Boulder, CO 80309, USA. May 22, 2020.

Abstract. In computational contexts, analytic functions are often best represented by grid-based function values in the complex plane. For integrating periodic functions, the spectrally accurate trapezoidal rule (TR) then becomes a natural choice, due to both accuracy and simplicity. The two key present observations are (i) The accuracy of TR in the periodic case can be greatly increased (doubling or tripling the number of correct digits) by using function values also along grid lines adjacent to the line of integration, and (ii) A recently developed end correction strategy for finite interval integrations applies just as well when using these enhanced TR schemes.

Keywords: Euler-Maclaurin, trapezoidal rule, contour integrals, equispaced grids, hexagonal grid. AMS classification codes: Primary: 65B15, 65E05; Secondary: 30-04.

1 Introduction. There are two main scenarios when approximating contour integrals in the complex plane: i. The function to integrate can be obtained at roughly equal cost for arbitrary spatial locations, and ii. Function values are available primarily on an equispaced grid in the complex plane.¹ In case (i), a commonly used option is Gaussian quadrature², either applied directly over the whole interval of interest, or combined with adaptive interval partitioning. Conformal (and other) mappings may be applied both for straightening curved integration paths (such as Hankel contours) and to improve the resolution in critical places.

∗Email: [email protected]

¹If an analytic function is somewhat costly to evaluate, it may be desirable to pay a one-time cost of evaluating over a grid and then re-use this data for multiple purposes, such as for graphical displays (e.g., [7, 12]) and, as focused on here, for highly accurate contour integrations (assuming some vicinity of the integration paths to be free of singularities).

A Trapezoidal Rule Error Bound Unifying

Downloaded from http://rspa.royalsocietypublishing.org/ on November 10, 2016.

A trapezoidal rule error bound unifying the Euler–Maclaurin formula and geometric convergence for periodic functions. Mohsin Javed and Lloyd N. Trefethen, Mathematical Institute, University of Oxford, Oxford, UK.

Cite this article: Javed M, Trefethen LN. 2014 A trapezoidal rule error bound unifying the Euler–Maclaurin formula and geometric convergence for periodic functions. Proc. R. Soc. A 470: 20130571. http://dx.doi.org/10.1098/rspa.2013.0571

Received: 27 August 2013. Accepted: 15 October 2013. Subject Areas: computational mathematics. Keywords: trapezoidal rule, midpoint rule, Euler–Maclaurin formula. Author for correspondence: Lloyd N. Trefethen, e-mail: [email protected]

The error in the trapezoidal rule quadrature formula can be attributed to discretization in the interior and non-periodicity at the boundary. Using a contour integral, we derive a unified bound for the combined error from both sources for analytic integrands. The bound gives the Euler–Maclaurin formula in one limit and the geometric convergence of the trapezoidal rule for periodic analytic functions in another. Similar results are also given for the midpoint rule.

1. Introduction. Let $f$ be continuous on $[0, 1]$ and let $n$ be a positive integer. The (composite) trapezoidal rule approximates the integral

$$I = \int_0^1 f(x)\,dx \qquad (1.1)$$

by the sum

$$I_n = n^{-1} \sum_{k=0}^{n}{}' f\!\left(\frac{k}{n}\right), \qquad (1.2)$$

where the prime indicates that the terms $k = 0$ and $k = n$ are multiplied by 1/2. Throughout this paper, $f$ may be real or complex, and 'periodic' means periodic with period 1.
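The primed sum (1.2) can be sketched in a few lines of Python. The function name `trapezoid_unit_interval` and the test integrand $e^x$ are assumptions chosen here for illustration; they do not come from the paper. For a smooth non-periodic integrand, the leading Euler–Maclaurin term is proportional to $n^{-2}$, so doubling $n$ should cut the error by roughly a factor of four.

```python
import math

def trapezoid_unit_interval(f, n):
    """I_n = (1/n) * sum' f(k/n) for k = 0..n, with the k = 0 and k = n terms halved."""
    total = 0.5 * (f(0.0) + f(1.0))
    for k in range(1, n):
        total += f(k / n)
    return total / n

if __name__ == "__main__":
    exact = math.e - 1.0  # integral of exp(x) over [0, 1]
    previous = None
    for n in (4, 8, 16, 32):
        err = abs(trapezoid_unit_interval(math.exp, n) - exact)
        ratio = "" if previous is None else f"  ratio to previous error {previous / err:.2f}"
        print(f"n = {n:3d}  error {err:.3e}{ratio}")
        previous = err
```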

Notes on the Convergence of Trapezoidal-Rule Quadrature

Notes on the convergence of trapezoidal-rule quadrature. Steven G. Johnson. Created Fall 2007; last updated March 10, 2010.

1 Introduction. Numerical quadrature is another name for numerical integration, which refers to the approximation of an integral $\int f(x)\,dx$ of some function $f(x)$ by a discrete summation $\sum w_i f(x_i)$ over points $x_i$ with some weights $w_i$. There are many methods of numerical quadrature corresponding to different choices of points $x_i$ and weights $w_i$, from Euler integration to sophisticated methods such as Gaussian quadrature, with varying degrees of accuracy for various types of functions $f(x)$. In this note, we examine the accuracy of one of the simplest methods: the trapezoidal rule with uniformly spaced points. In particular, we discuss how the convergence rate of this method is determined by the smoothness properties of $f(x)$ and, in practice, usually by the smoothness at the endpoints. (This behavior is the basis of a more sophisticated method, Clenshaw-Curtis quadrature, which is essentially trapezoidal integration plus a coordinate transformation to remove the endpoint problem.)

For simplicity, without loss of generality, we can take the integral to be for $x \in [0, 2\pi]$, i.e. the integral

$$I = \int_0^{2\pi} f(x)\,dx,$$

which is approximated in the trapezoidal rule¹ by the summation

$$I_N = \frac{f(0)\,\Delta x}{2} + \sum_{n=1}^{N-1} f(n\,\Delta x)\,\Delta x + \frac{f(2\pi)\,\Delta x}{2},$$

where $\Delta x = \frac{2\pi}{N}$. We now want to analyze how fast the error $E_N = |I - I_N|$ decreases with $N$. Many books estimate the error as being $O(\Delta x^2) = O(N^{-2})$, assuming $f(x)$ is twice differentiable on $(0, 2\pi)$. This estimate is correct, but only as an upper bound.
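To illustrate why $O(N^{-2})$ is only an upper bound, the following Python sketch evaluates $I_N$ for a smooth $2\pi$-periodic integrand. The integrand $1/(2 + \cos x)$, whose integral over one period is $2\pi/\sqrt{3}$, and the names `trapezoid_periodic` and `smooth_periodic` are assumptions chosen here for illustration; they are not taken from the notes.

```python
import math

def trapezoid_periodic(f, n):
    """I_N on [0, 2*pi] with dx = 2*pi/N and both endpoints weighted by 1/2."""
    dx = 2.0 * math.pi / n
    total = 0.5 * (f(0.0) + f(2.0 * math.pi))
    for k in range(1, n):
        total += f(k * dx)
    return total * dx

def smooth_periodic(x):
    # Assumed test integrand: analytic and 2*pi-periodic.
    return 1.0 / (2.0 + math.cos(x))

# Closed form of the integral of 1/(2 + cos x) over one period.
EXACT = 2.0 * math.pi / math.sqrt(3.0)

if __name__ == "__main__":
    for n in (4, 8, 16, 32):
        err = abs(trapezoid_periodic(smooth_periodic, n) - EXACT)
        print(f"N = {n:3d}  E_N = {err:.3e}")
```

For this integrand the error falls much faster than $N^{-2}$ as $N$ doubles, which is the behavior the notes attribute to smoothness at the endpoints.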

3.3 the Trapezoidal Rule

Analysis and Implementation of an Age Structured Model of the Cell Cycle. Anna Broms, [email protected]. Principal supervisor: Sara Maad Sasane. Assistant supervisor: Gustaf Söderlind. Master's thesis, Lund University, September 2017.

Abstract. In an age-structured model originating from cancer research, the cell cycle is divided into two phases: Phase 1 of variable length, consisting of the biologically so-called G1 phase, and Phase 2 of fixed length, consisting of the so-called S, G2 and M phases. A system of nonlinear PDEs along with initial and boundary data describes the number densities of cells in the two phases, depending on time and age (where age is the time spent in a phase). It has been shown that the initial and boundary value problem can be rewritten as a coupled system of integral equations, which in this M.Sc. thesis is implemented in MATLAB using the trapezoidal and Simpson rules. In the special case where the cells are allowed to grow without restrictions, the system is uncoupled and possible to study analytically, whereas otherwise, a nonlinearity has to be solved in every step of the iterative equation solving. The qualitative behaviour is investigated numerically and analytically for a wide range of model components. This includes investigations of the notions of crowding, i.e. that cell division is restricted for large population sizes, and quorum sensing, i.e. that a small enough tumour can eliminate itself through cell signalling. In simulations, we also study under what conditions an almost eliminated tumour relapses after completed therapy. Moreover, upper bounds for the number of dividing cells at the end of Phase 2 at time t are determined for specific cases, where the bounds are found to depend on the existence of so-called cancer stem cells.

AP Calculus (BC)

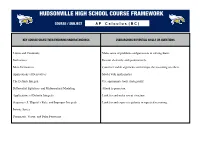

HUDSONVILLE HIGH SCHOOL COURSE FRAMEWORK

COURSE / SUBJECT: AP Calculus (BC)

KEY COURSE OBJECTIVES/ENDURING UNDERSTANDINGS: Limits and Continuity; Derivatives; More Derivatives; Applications of Derivatives; The Definite Integral; Differential Equations and Mathematical Modeling; Applications of Definite Integrals; Sequences, L'Hôpital's Rule, and Improper Integrals; Infinite Series; Parametric, Vector, and Polar Functions.

OVERARCHING/ESSENTIAL SKILLS OR QUESTIONS: Make sense of problems and persevere in solving them. Reason abstractly and quantitatively. Construct viable arguments and critique the reasoning of others. Model with mathematics. Use appropriate tools strategically. Attend to precision. Look for and make use of structure. Look for and express regularity in repeated reasoning.

PACING: Chapter 2, Limits and Continuity (9 days). STANDARD: College Board Calculus (BC) Standard I: Limits of functions (including one-sided limits); Asymptotic and unbounded behavior.

LESSON 2.1 Rates of Change and Limits. LEARNING TARGETS: Calculate average and instantaneous speeds. Define and calculate limits for function values and apply the properties of limits. Use the Sandwich Theorem to find certain limits indirectly. KEY CONCEPTS: Average and Instantaneous Speed • Definition of Limit • Properties of Limits • One-sided and Two-sided Limits • Sandwich Theorem.

LESSON 2.2 Limits Involving Infinity. LEARNING TARGETS: Find and verify end behavior models for various functions. Calculate limits as x → ± ∞ and identify vertical and horizontal asymptotes. KEY CONCEPTS: Finite Limits as x → ± ∞ and their Properties • Sandwich Theorem Revisited • Limits as x → a • End Behavior Models • "Seeing" Limits as x → ± ∞.

LESSON Continuity. LEARNING TARGETS: Identify the intervals upon which a given function is continuous and understand the meaning of continuous function with and without limits. KEY CONCEPTS: Continuity at a Point • Continuous Functions.

The Original Euler's Calculus-Of-Variations Method: Key

Submitted to EJP. Jozef Hanc, [email protected]

The original Euler's calculus-of-variations method: Key to Lagrangian mechanics for beginners. Jozef Hanc, Technical University, Vysokoskolska 4, 042 00 Kosice, Slovakia.

Leonhard Euler's original version of the calculus of variations (1744) used elementary mathematics and was intuitive, geometric, and easily visualized. In 1755 Euler (1707-1783) abandoned his version and adopted instead the more rigorous and formal algebraic method of Lagrange. Lagrange's elegant technique of variations not only bypassed the need for Euler's intuitive use of a limit-taking process leading to the Euler-Lagrange equation but also eliminated Euler's geometrical insight. More recently Euler's method has been resurrected, shown to be rigorous, and applied as one of the direct variational methods important in analysis and in computer solutions of physical processes. In our classrooms, however, the study of advanced mechanics is still dominated by Lagrange's analytic method, which students often apply uncritically using "variational recipes" because they have difficulty understanding it intuitively. The present paper describes an adaptation of Euler's method that restores intuition and geometric visualization. This adaptation can be used as an introductory variational treatment in almost all of undergraduate physics and is especially powerful in modern physics. Finally, we present Euler's method as a natural introduction to computer-executed numerical analysis of boundary value problems and the finite element method.

I. INTRODUCTION. In his pioneering 1744 work The method of finding plane curves that show some property of maximum and minimum, Leonhard Euler introduced a general mathematical procedure or method for the systematic investigation of variational problems.

Numerical Methods in Multiple Integration

NUMERICAL METHODS IN MULTIPLE INTEGRATION. Dissertation for the Degree of Ph. D., MICHIGAN STATE UNIVERSITY. WAYNE EUGENE HOOVER, 1977.

This is to certify that the thesis entitled NUMERICAL METHODS IN MULTIPLE INTEGRATION presented by Wayne Eugene Hoover has been accepted towards fulfillment of the requirements for Ph. D. degree in Mathematics. Major professor (J.S. Frame). Date October 29, 1976.

ABSTRACT. NUMERICAL METHODS IN MULTIPLE INTEGRATION. By Wayne Eugene Hoover.

To approximate the definite integral

$$I(f) = \int_{a_n}^{b_n} \cdots \int_{a_1}^{b_1} f(x_1, \ldots, x_n)\,dx_1 \cdots dx_n$$

over the n-rectangle

$$R = \prod_{i=1}^{n} [a_i, b_i],$$

conventional multidimensional quadrature formulas employ a weighted sum of function values

$$Q(f) = \sum_{j=1}^{m} w_j f(x_{j1}, \ldots, x_{jn}).$$

Since very little is known concerning formulas which make use of partial derivative data, the objective of this investigation is to construct formulas involving not only the traditional weighted sum of function values but also partial derivative correction terms with weights of equal magnitude and alternate signs at the corners or at the midpoints of the sides of the domain of integration, R, so that when the rule is compounded or repeated, the weights cancel except on the boundary. For a single integral, the derivative correction terms are evaluated only at the end points of the interval of integration. In higher dimensions, the situation is somewhat more complicated since as the dimension increases the boundary becomes more complex. Indeed, in higher dimensions, most of the volume of the n-rectangle lies near the boundary.
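The closing remark about volume near the boundary can be made concrete with a short calculation. The unit cube $[0,1]^n$ and the margin $\varepsilon$ below are illustrative assumptions, not quantities from the dissertation. The points farther than $\varepsilon$ from every face form a smaller cube of side $1 - 2\varepsilon$, so the fraction of the volume lying within $\varepsilon$ of the boundary is

$$1 - (1 - 2\varepsilon)^n, \qquad 0 \le \varepsilon \le \tfrac{1}{2}.$$

With $\varepsilon = 0.05$ this gives $1 - 0.9^{10} \approx 0.651$ for $n = 10$ and $1 - 0.9^{50} \approx 0.995$ for $n = 50$, so the interior share shrinks geometrically as the dimension grows.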

Computational Methods in Research

COMPUTATIONAL METHODS IN RESEARCH: ASSIGNMENT 3

Exercise 1 (5.1 from book). The file called velocities.txt contains two columns of numbers, the first representing time t in seconds and the second the x-velocity in meters per second of a particle, measured once every second from time t = 0 to t = 100. The first few lines look like this:

0 0
1 0.069478
2 0.137694
3 0.204332
4 0.269083
5 0.331656

Write a program to do the following:

1. Read in the data and, using the trapezoidal rule, calculate from them the approximate distance traveled by the particle in the x direction as a function of time.
2. Extend your program to make a graph that shows, on the same plot, both the original velocity curve and the distance traveled as a function of time.

Exercise 2 (5.3 from book). Consider the integral

$$E(x) = \int_0^x e^{-t^2}\,dt.$$

1. Write a program to calculate E(x) for values of x from 0 to 3 in steps of 0.1. Choose for yourself what method you will use for performing the integral and a suitable number of slices.
2. When you are convinced your program is working, extend it further to make a graph of E(x) as a function of x.

Note that there is no known way to perform this particular integral analytically, so numerical approaches are the only way forward.

Exercise 3 (part of 5.4 from book). The Bessel functions $J_m(x)$ are given by

$$J_m(x) = \frac{1}{\pi} \int_0^{\pi} \cos(m\theta - x \sin\theta)\,d\theta,$$

where m is a nonnegative integer and x ≥ 0.
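A minimal Python sketch of the numerical core of these three exercises follows. It makes several assumptions: only the six sample rows quoted above stand in for velocities.txt, Simpson's rule is one possible choice of method for Exercises 2 and 3, and the function names and slice counts are illustrative. File reading and plotting are left out.

```python
import math

def cumulative_trapezoid(ts, vs):
    """Distance travelled up to each sample time, by the trapezoidal rule."""
    distance = [0.0]
    for i in range(1, len(ts)):
        step = 0.5 * (ts[i] - ts[i - 1]) * (vs[i] + vs[i - 1])
        distance.append(distance[-1] + step)
    return distance

def simpson(f, a, b, slices=100):
    """Composite Simpson's rule on [a, b]; slices must be even."""
    if slices % 2:
        raise ValueError("slices must be even")
    h = (b - a) / slices
    total = f(a) + f(b)
    for k in range(1, slices):
        total += (4 if k % 2 else 2) * f(a + k * h)
    return total * h / 3.0

def E(x, slices=100):
    """E(x) = integral of exp(-t^2) from 0 to x."""
    return simpson(lambda t: math.exp(-t * t), 0.0, x, slices)

def bessel_j(m, x, slices=1000):
    """J_m(x) from the integral representation in Exercise 3."""
    integrand = lambda theta: math.cos(m * theta - x * math.sin(theta))
    return simpson(integrand, 0.0, math.pi, slices) / math.pi

if __name__ == "__main__":
    # Sample rows quoted in Exercise 1 (the full file is not reproduced here).
    ts = [0, 1, 2, 3, 4, 5]
    vs = [0, 0.069478, 0.137694, 0.204332, 0.269083, 0.331656]
    print("distance:", cumulative_trapezoid(ts, vs))
    for i in range(0, 31):
        x = 0.1 * i
        print(f"E({x:.1f}) = {E(x):.6f}")
    print("J_0(1) =", bessel_j(0, 1.0))
```

The cumulative form returns one distance per time sample, which is what the plot in part 2 of Exercise 1 needs.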

Euler and His Work on Infinite Series

BULLETIN (New Series) OF THE AMERICAN MATHEMATICAL SOCIETY, Volume 44, Number 4, October 2007, Pages 515–539. S 0273-0979(07)01175-5. Article electronically published on June 26, 2007.

EULER AND HIS WORK ON INFINITE SERIES. V. S. VARADARAJAN. For the 300th anniversary of Leonhard Euler's birth.

Table of contents: 1. Introduction; 2. Zeta values; 3. Divergent series; 4. Summation formula; 5. Concluding remarks.

1. Introduction. Leonhard Euler is one of the greatest and most astounding icons in the history of science. His work, dating back to the early eighteenth century, is still with us, very much alive and generating intense interest. Like Shakespeare and Mozart, he has remained fresh and captivating because of his personality as well as his ideas and achievements in mathematics. The reasons for this phenomenon lie in his universality, his uniqueness, and the immense output he left behind in papers, correspondence, diaries, and other memorabilia. Opera Omnia [E], his collected works and correspondence, is still in the process of completion, close to eighty volumes and 31,000+ pages and counting. A volume of brief summaries of his letters runs to several hundred pages. It is hard to comprehend the prodigious energy and creativity of this man who fueled such a monumental output. Even more remarkable, and in stark contrast to men like Newton and Gauss, is the sunny and equable temperament that informed all of his work, his correspondence, and his interactions with other people, both common and scientific. It was often said of him that he did mathematics as other people breathed, effortlessly and continuously.

Calculus Formulas and Theorems

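Formulas and Theorems for Reference

I. Trigonometric Formulas

1. $\sin^2\theta + \cos^2\theta = 1$
2. $1 + \tan^2\theta = \sec^2\theta$
3. $1 + \cot^2\theta = \csc^2\theta$
4. $\sin(-\theta) = -\sin\theta$
5. $\cos(-\theta) = \cos\theta$
6. $\tan(-\theta) = -\tan\theta$
7. $\sin(A + B) = \sin A \cos B + \sin B \cos A$
8. $\sin(A - B) = \sin A \cos B - \sin B \cos A$
9. $\cos(A + B) = \cos A \cos B - \sin A \sin B$
10. $\cos(A - B) = \cos A \cos B + \sin A \sin B$
11. $\sin 2\theta = 2 \sin\theta \cos\theta$
12. $\cos 2\theta = \cos^2\theta - \sin^2\theta = 2\cos^2\theta - 1 = 1 - 2\sin^2\theta$
13. $\tan\theta = \dfrac{\sin\theta}{\cos\theta} = \dfrac{1}{\cot\theta}$
14. $\cot\theta = \dfrac{\cos\theta}{\sin\theta} = \dfrac{1}{\tan\theta}$
15. $\sec\theta = \dfrac{1}{\cos\theta}$
16. $\csc\theta = \dfrac{1}{\sin\theta}$
17. $\cos\left(\dfrac{\pi}{2} - \theta\right) = \sin\theta$
18. $\sin\left(\dfrac{\pi}{2} - \theta\right) = \cos\theta$

II. Differentiation Formulas

- $\dfrac{d}{dx}(x^n) = n x^{n-1}$
- $\dfrac{d}{dx}(fg) = f g' + g f'$
- $\dfrac{d}{dx}\left(\dfrac{f}{g}\right) = \dfrac{g f' - f g'}{g^2}$
- $\dfrac{d}{dx} f(g(x)) = f'(g(x))\, g'(x)$
- $\dfrac{d}{dx}(\sin x) = \cos x$
- $\dfrac{d}{dx}(\cos x) = -\sin x$
- $\dfrac{d}{dx}(\tan x) = \sec^2 x$
- $\dfrac{d}{dx}(\cot x) = -\csc^2 x$
- $\dfrac{d}{dx}(\sec x) = \sec x \tan x$
- $\dfrac{d}{dx}(\csc x) = -\csc x \cot x$
- $\dfrac{d}{dx}(e^x) = e^x$
- $\dfrac{d}{dx}(a^x) = a^x \ln a$
- $\dfrac{d}{dx}(\ln x) = \dfrac{1}{x}$
- $\dfrac{d}{dx}(\operatorname{Arcsin} x) = \dfrac{1}{\sqrt{1 - x^2}}$
- $\dfrac{d}{dx}(\operatorname{Arctan} x) = \dfrac{1}{1 + x^2}$

III. Integration Formulas

1. $\int a\,dx = ax + C$
2. $\int \dfrac{1}{x}\,dx = \ln|x| + C$
3. $\int e^x\,dx = e^x + C$
4. $\int a^x\,dx = \dfrac{a^x}{\ln a} + C$
5. $\int \ln x\,dx = x \ln x - x + C$
6. $\int \sin x\,dx = -\cos x + C$
7. $\int \cos x\,dx = \sin x + C$
8. $\int \tan x\,dx = \ln|\sec x| + C$ or $-\ln|\cos x| + C$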

Evaluate Each Definite Integral

If velocity is positive, positive distance accumulates. Note that you never need to return to the trigonometric functions in the original integral to evaluate the definite integral. There are lots of things for which there is no formula. It is reasonable to think that other methods of approximating curves might be more applicable for some functions. You can also find many online tools that can do this; type "numerical integration" into any search engine to see a selection of these.