The Egal Manual

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Doctor Who: Destiny of the Daleks: (4th Doctor TV Soundtrack) Free

FREE DOCTOR WHO: DESTINY OF THE DALEKS: (4TH DOCTOR TV SOUNDTRACK) PDF Terry Nation, Full Cast, Lalla Ward, Tom Baker | 1 pages | 26 Mar 2013 | BBC Audio, A Division Of Random House | 9781471301469 | English | London, United Kingdom Doctor Who: Destiny of the Daleks (TV soundtrack) Audiobook | Terry Nation | When the Doctor realises what the Daleks are up to, he is compelled to intervene. Terry Nation was born in Llandaff, near Cardiff. He realised that the creatures had to truly look alien, and 'In order to make it non-human what you have to do is take the legs off.' Product Information: On Skaro, the home world of the Daleks, the Doctor encounters the militaristic Movellans - who have come to Skaro on a secret mission - whilst his companion Romana falls into the hands of the Daleks themselves.

Doctor Who and the Genesis of the Daleks Free

FREE DOCTOR WHO AND THE GENESIS OF THE DALEKS PDF Terrance Dicks | 176 pages | 28 Jun 2016 | Ebury Publishing | 9781785940385 | English | London, United Kingdom Genesis of the Daleks (TV story) | Tardis | Fandom Genesis of the Daleks was the fourth and penultimate serial of season 12 of Doctor Who. It introduced Davros, creator of the Daleks, and showed the story of their origins. Davros later played a prominent role in every following Dalek serial up to Remembrance of the Daleks. Genesis of the Daleks is also notable for being the only time in the classic television series that the TARDIS does not appear whatsoever for two consecutive serials. Also notable is the fact that this is one of only two Dalek serials in all seven years of Baker's run on the show, the other being Destiny of the Daleks. This became the start of a practice throughout the classic series where most recurring enemies would see a significant reduction in the number of encounters between them and the Doctor; the Daleks, to name an example, only starred in one televised serial during each succeeding Doctor's tenure for the remainder of the run. In a poll conducted by Doctor Who Magazine, Genesis of the Daleks was praised by fans as their favourite Doctor Who story of all time (DWM). Intercepted while travelling between Earth and the Ark, the Fourth Doctor and his companions are transported to the planet Skaro, thousands of years in the past, on a mission for the Time Lords: to prevent the creation of the Daleks.

The Top 10 Doctor Who Episodes BBC America Poll Taken in 2013

The Top 10 Doctor Who Episodes: BBC America poll taken in 2013. At the time of the 50th anniversary, there were an incredible 738 individual episodes of Doctor Who, making up a total of 239 different stories. Unsurprisingly, then, over those five decades the fandom as a whole has tended to find it difficult to pin down exactly which is the undisputed “best episode.” The show means so many different things to so many different people that the concept of a favorite episode can vary wildly depending on what a particular viewer actually looks for in the show. As such, while Doctor Who Magazine readers voted “The Caves of Androzani” as their number one story back in 2009, for the superfans polled in 2013 it didn’t even make the top 10. That’s not to say it isn’t highly regarded, just that the range of choices was so varied that the tiniest margins could bump an episode up or down the list. Indeed, as many as 87 different episodes were nominated somewhere in the top fives of our jury. 10: Inferno (classic Who) This eight-part serial is the one time the classic series dabbled with the concept of alternative timelines. A 1970 Third Doctor story by Don Houghton, it was such a definitive take that it proved difficult to return to this theme. 9: The Empty Child/The Doctor Dances (“Everybody Lives!”) This two-part episode holds a special place in the hearts of fans since it allows the Ninth Doctor, so damaged by his experiences in the Time War, to actually glow with delight.

DR DOCTOR WHO Dalek 70s DAVROS PROP Replica Item # 2162847268

eBay item 2162847268 (ends Mar-09-03 13:58:56 PST): DR DOCTOR WHO dalek 70s DAVROS PROP replica. Categories: Collectables: Science Fiction: Dr. Who: Other; DVD, Film & TV: Memorabilia: Other: Props and Wardrobe: Props. Bidding is closed for this item. smilv_uk (392) is the winner. Item price: GBP 510.00, not including any shipping charges. Payment instructions: none given. Current bid: GBP 510.00 (approx. US $817.68). Starting bid: GBP 1.00 (approx. US $1.60). Quantity: 1. Number of bids: 24. Time left: auction has ended. Location: Trowbridge. Country/Region: United Kingdom / Bristol. Started: Mar-02-03 13:58:56 PST. Ends: Mar-09-03 13:58:56 PST. Seller: hammondagogo (553). High bidder: smilv_uk (392). Payment: PayPal, money order/cashiers check, personal check, other online payment services, or see item description for payment methods accepted. Escrow is accepted if buyer pays fee.

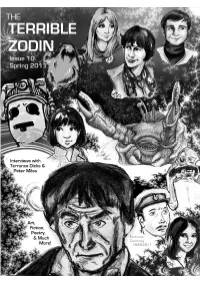

TTZ10 Master Document Pp9.1

Table of Contents
Editor’s Note
A Christmas Carol Review, Jamie Beckwith
SFX Weekender, Will Forbes & A. Vandenbussche
Out of Time, Nick Mellish
A Scribbled Note, Jamie Beckwith
Donna Noble vs Amy Pond, Aya Vandenbussche
The Real Pancho Villa, Leslie McMurtry
Tea with Nyder: TTZ Interviews Peter Miles
The War Games’ Civil War, Leslie McMurtry & Evan L. Keraminas
Season 6(b), Matthew Kresal
No! Not The Mind Probe - The Web Planet, Tony Cross & Steve Sautter
The Blue Peter Guide to The Web Planet, Robert Beckwith

EDITOR’S NOTE Though I only knew Patrick Troughton from “The Five Doctors” by the time I rediscovered Doctor Who when I was in my teens, I knew I loved the Second Doctor. He always seemed like the only Doctor one could reasonably go to for a hug. My admiration continued to grow; my first story from the Second Doctor’s era, “Seeds of Death”, may have left a rather lukewarm impression, but the Missing Adventures novel The Dark Path made up for it. In this issue, we have gone monochrome, in honor of Troughton, his last story “The War Games,” and of the significant part the Second Doctor’s era had to play in influencing all subsequent incarnations of Doctor Who, a ...

Celestial Toyroom 508/9 (Landscape)

EDITORIAL by Alan Stevens. This issue commences with a previously unpublished and quite extraordinary interview with Peter Miles. I say that because, having known the man for over thirty years, I feel that Dylan Rees has captured him precisely. The warmth, the humour, the eccentricity; this is Peter relaxed and in full flow, clearly demonstrating that there was much more to him than ...

DOWNTIME WITH PETER MILES "Thank you. That's what I wanted to know." By Dylan Rees. Peter Miles is a name familiar to most fans of genre television. He appeared in episodes of Moonbase 3, Survivors, Blake’s 7 and had three major roles in classic Doctor Who; most notably as Davros’ right hand man, Nyder, in the 1975 story Genesis of the Daleks. Peter passed away in February of 2018, but his legacy as the perennial ‘Bad Guy’ was assured in the annals of Cult TV history long before then.

... screeching sound coming from the engine. It also had a poster promoting a local gig (where Peter himself was to sing in a jazz band) blu-tacked to the passenger window. “I answer frankly, none of this smarmy stuff, and I say what I believe,” was his opening remark, setting down the ground rules for our talk in his second floor flat. The rooms were filled with trophies from his long career. Flyers and posters from various theatre performances lined the hallway. The sitting room had shelves stacked with DVDs, CDs and VHS recordings of his many roles, and the desk in the corner was piled high ...

Doctor Who, Saturday BBC1; Doctor Who Confidential, Saturday BBC3

Even the Doctor froze with fear when Davros, lord and creator of the Daleks, made his chilling comeback last week. Now RT goes behind the scenes on the Doctor Who season finale to meet the man behind the mask...

It's a Friday lunchtime in March 2008 and Davros is waving cheerily at us from across the car park. In one hand, he has a plate of chips. In the other, an orange. He's wearing tracksuit bottoms. (He has legs!) This isn't how it's supposed to be. From the top deck of the lunch bus, Rose Tyler, defender of the Earth, waves back at him.

RE-CREATING AN EVIL GENIUS "Davros is a fantastic character," says Julian Bleach, the actor beneath the silicone mask. "A cross between Hitler and Stephen Hawking. A powerful intellect. It's what he wants that makes him extraordinary - the fact that he has such nihilistic desires. Such a need for power." Though Julian Bleach has joined the Doctor Who world before, playing the looming, sinister Ghostmaker in an episode of this year's Torchwood, you definitely won't recognise him playing the demented megalomaniac geneticist - first seen creating the Daleks in the classic 1975 Who tale Genesis of the Daleks, starring Tom Baker. The 45-year-old actor remembers watching Genesis of the Daleks as a child - the same story that prosthetics designer ...

A 3rd Doctor Novelisation Free

FREE DOCTOR WHO AND THE CARNIVAL OF MONSTERS: A 3RD DOCTOR NOVELISATION PDF Terrance Dicks, Katy Manning | 1 pages | 13 Nov 2014 | BBC Audio, A Division Of Random House | 9781785290039 | English | London, United Kingdom Doctor Who and the Carnival of Monsters by Terrance Dicks Doctor Who and the Carnival of Monsters was a novelisation based on the television serial Carnival of Monsters. The Doctor and Jo land on a cargo ship crossing the Indian Ocean. Far away on a planet called Inter Minor, a travelling showman is setting up his live peepshow, watched by an eager audience of space officials. On board ship, a giant hand suddenly appears, grasps the Tardis and withdraws. Without warning, a prehistoric monster rises from the sea to attack. What is happening? Where are they? Only the Doctor realises, with horror, that they might be trapped... Or so they think. In fact, they are trapped in a time-loop on the faraway planet Inter Minor, where all the life-forms of the Galaxy are miniaturised in the Scope, a peepshow for arch-showman Vorg. These lifeforms include the terrifying Drashigs, huge underwater dragons who add to the monumental problems the Doctor and Jo face in trying to escape this nightmare circus. The cover blurb and thumbnail illustrations were retained in the accompanying booklet with sleevenotes by David J. Music and sound effects by Simon Power. Cover by Alister Pearson.

Storm on the Island Was the Second Story Filmed by the Co-Producers, Though It Was Shown Last in the Season

Trenchcoat, SUNDAY TV, 15 January. Serial 8L. Storm on the Island was the second story filmed by the co-producers, though it was shown last in the season. It was an expensive showpiece like The Locust Method, with a cast of more than fifty actors and a high special effects budget. Location filming was arranged on Baffin Island, the setting of the story. Although the filming site looked isolated on camera, it was only a ten-minute drive by snowmobile from Iqaluit. Filming took place in July when the weather was relatively mild. Little was revealed about the storyline, and rumours abounded that an old enemy was returning. The production team agreed it carried on a plotline started in the previous season and it would build up to a climax that wouldn’t be seen until a year or two later. Enya provided the incidental music for this story, having previously handled the season premiere, The Howling of the Wolves. In the earlier story, her music helped to give the production a mystic atmosphere; for Storm on the Island, her music helped convey power and a sense of impending doom. Her work was nominated for a British Television award. At the time of this printing, the award winner had not been announced. Filming was completed in a month with few problems, although actors complained of the cold. The first episode was broadcast as scheduled, on Sunday, January 15, 1995, 7:30 p.m. on BBC-1. For the three previous weeks, the BBC showed a repeat of Season 29’s The Abbey by the Sea.

Breaking the ice… The Doctor and Fayette find themselves caught up in their own little Cold War.