Affective Computing and Autism

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Neural Correlates of Personality Dimensions and Affective Measures During the Anticipation of Emotional Stimuli

Brain Imaging and Behavior (2011) 5:86–96. DOI 10.1007/s11682-011-9114-7. ORIGINAL RESEARCH. Neural correlates of personality dimensions and affective measures during the anticipation of emotional stimuli. Annette Beatrix Brühl, Marie-Caroline Viebke, Thomas Baumgartner, Tina Kaffenberger, Uwe Herwig. Published online: 25 January 2011. © Springer Science+Business Media, LLC 2011.

Abstract: Neuroticism and extraversion are proposed personality dimensions for individual emotion processing. Neuroticism is correlated with depression and anxiety disorders, implicating a common neurobiological basis. Extraversion is rather inversely correlated with anxiety and depression. We examined neural correlates of personality in relation to depressiveness and anxiety in healthy adult subjects with functional magnetic resonance imaging during the cued anticipation of emotional stimuli. Distributed particularly prefrontal but also other cortical regions and the thalamus were associated with extraversion. Parieto-occipital and temporal regions and subcortically the caudate were correlated with neuroticism and affective measures. Neuroticism-related regions were partially cross-correlated with anxiety and depression and vice versa. Extraversion-related activity was not correlated with the other measures. The neural correlates of extraversion compared with those of neuroticism and affective measures fit with concepts of different neurobiological bases of the personality dimensions and point at predispositions for affective disorders.

Keywords: Extraversion, Neuroticism, Emotion processing, fMRI, Affective disorders

Electronic supplementary material: The online version of this article (doi:10.1007/s11682-011-9114-7) contains supplementary material, which is available to authorized users.

Introduction: The relation between personality dimensions and affective

CITY MOOD How Does Your City Feel?

CITY MOOD: how does your city feel? Luisa Fabrizi. Interaction Design, One-year Master, 15 credits. Master Thesis, August 2014. Supervisor: Jörn Messeter. Thesis Project I, Interaction Design Master 2014, Malmö University.

01. INDEX; 02. ABSTRACT; 03. INTRODUCTION; 04. METHODOLOGY; 05. THEORETICAL FRAMEWORK; 06. DESIGN PROCESS; 07. GENERAL CONCLUSIONS; 08. FURTHER IMPLEMENTATIONS; 09. APPENDIX

Tor Wager Diana L

Tor Wager, Diana L. Taylor Distinguished Professor of Psychological and Brain Sciences, Dartmouth College. https://wagerlab.colorado.edu Last Updated: July, 2019. Executive summary:
● Appointments: Faculty since 2004, starting as Assistant Professor at Columbia University. Associate Professor in 2009, moved to University of Colorado, Boulder in 2010; Professor since 2014. 2019-Present: Diana L. Taylor Distinguished Professor of Psychological and Brain Sciences at Dartmouth College.
● Publications: 240 publications with >50,000 total citations (Google Scholar), 11 papers cited over 1000 times. H-index = 79. Journals include Science, Nature, New England Journal of Medicine, Nature Neuroscience, Neuron, Nature Methods, PNAS, Psychological Science, PLoS Biology, Trends in Cognitive Sciences, Nature Reviews Neuroscience, Nature Reviews Neurology, Nature Medicine, Journal of Neuroscience.
● Funding: Currently principal investigator on 3 NIH R01s, and co-investigator on other collaborative grants. Past funding sources include NIH, NSF, Army Research Institute, Templeton Foundation, DoD. P.I. on 4 R01s, 1 R21, 1 RC1, 1 NSF.
● Awards: Awards include NSF Graduate Fellowship, MacLean Award from American Psychosomatic Society, Colorado Faculty Research Award, “Rising Star” from American Psychological Society, Cognitive Neuroscience Society Young Investigator Award, Web of Science “Highly Cited Researcher”, Fellow of American Psychological Society. Two patents on research products.
● Outreach: >300 invited talks at universities/international conferences since 2005. Invited talks in Psychology, Neuroscience, Cognitive Science, Psychiatry, Neurology, Anesthesiology, Radiology, Medical Anthropology, Marketing, and others. Media outreach: Featured in New York Times, The Economist, NPR (Science Friday and Radiolab), CBS Evening News, PBS special on healing, BBC, BBC Horizons, Fox News, 60 Minutes, others.

Cognitive & Affective Neuroscience

Cognitive & Affective Neuroscience: From Circuitry to Network and Behavior. Monday, Jun 18: 8:00 AM - 9:15 AM. 1835 Symposium. Monday - Symposia AM. Using a multi-disciplinary approach integrating cognitive, EEG/ERP and fMRI techniques and advanced analytic methods, the four speakers in this symposium investigate neurocognitive processes underlying nuanced cognitive and affective functions in humans. the neural basis of changing social norms through persuasion using carefully designed behavioral paradigms and functional MRI technique; Yuejia Luo will describe how high temporal resolution EEG/ERPs predict dynamical profiles of distinct neurocognitive stages involved in emotional negativity bias and its reciprocal interactions with executive functions such as working memory; Yongjun Yu conducts innovative behavioral experiments in conjunction with fMRI and computational modeling approaches to dissociate interactive neural signals involved in affective decision making; and Shaozheng Qin applies fMRI with simultaneous recording skin conductance and advanced analytic approaches (i.e., MVPA, network dynamics) to determine neural representational patterns and subjects can modulate resting state networks, and also uses graph theory network activity levels to delineate dynamic changes in large-scale brain network interactions involved in complex interplay of attention, emotion, memory and executive systems. These talks will provide perspectives on new ways to study brain circuitry and networks underlying interactions between affective and cognitive functions and how to best link the insights from behavioral experiments and neuroimaging studies. Objective: Having accomplished this symposium or workshop, participants will be able to: 1. Learn about the latest progress of innovative research in the field of cognitive and affective neuroscience; 2. Learn applications of multimodal brain imaging techniques (i.e., EEG/ERP, fMRI) into understanding human cognitive and affective functions in different populations.

How Should Neuroscience Study Emotions? by Distinguishing Emotion States, Concepts, and Experiences Ralph Adolphs

Social Cognitive and Affective Neuroscience, 2017, 24–31. doi: 10.1093/scan/nsw153. Advance Access Publication Date: 19 October 2016. Original article. How should neuroscience study emotions? By distinguishing emotion states, concepts, and experiences. Ralph Adolphs, Division of Humanities and Social Sciences, California Institute of Technology, HSS 228-77, Caltech, Pasadena, CA 91125, USA. Abstract: In this debate with Lisa Feldman Barrett, I defend a view of emotions as biological functional states. Affective neuroscience studies emotions in this sense, but it also studies the conscious experience of emotion (‘feelings’), our ability to attribute emotions to others and to animals (‘attribution’, ‘anthropomorphizing’), our ability to think and talk about emotion (‘concepts of emotion’, ‘semantic knowledge of emotion’) and the behaviors caused by an emotion (‘expression of emotions’, ‘emotional reactions’). I think that the most pressing challenge facing affective neuroscience is the need to carefully distinguish between these distinct aspects of ‘emotion’. I view emotion states as evolved functional states that regulate complex behavior, in both people and animals, in response to challenges that instantiate recurrent environmental themes. These functional states, in turn, can also cause conscious experiences (feelings), and their effects and our memories for those effects also contribute to our semantic

Architects of Intelligence: The Truth About AI from the People Building It

MARTIN FORD. ARCHITECTS OF INTELLIGENCE: THE TRUTH ABOUT AI FROM THE PEOPLE BUILDING IT. For Xiaoxiao, Elaine, Colin, and Tristan. Copyright © 2018 Packt Publishing. All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, without the prior written permission of the publisher, except in the case of brief quotations embedded in critical articles or reviews. Every effort has been made in the preparation of this book to ensure the accuracy of the information presented. However, the information contained in this book is sold without warranty, either express or implied. Neither the author, nor Packt Publishing or its dealers and distributors, will be held liable for any damages caused or alleged to have been caused directly or indirectly by this book. Packt Publishing has endeavored to provide trademark information about all of the companies and products mentioned in this book by the appropriate use of capitals. However, Packt Publishing cannot guarantee the accuracy of this information. Acquisition Editors: Ben Renow-Clarke; Project Editor: Radhika Atitkar; Content Development Editor: Alex Sorrentino; Proofreader: Safis Editing; Presentation Designer: Sandip Tadge; Cover Designer: Clare Bowyer; Production Editor: Amit Ramadas; Marketing Manager: Rajveer Samra; Editorial Director: Dominic Shakeshaft. First published: November 2018. Production reference: 2201118. Published by Packt Publishing Ltd., Livery Place, 35 Livery Street, Birmingham B3 2PB, UK. ISBN 978-1-78913-151-2. www.packt.com. Contents: Introduction; A Brief Introduction to the Vocabulary of Artificial Intelligence; How AI Systems Learn; Yoshua Bengio; Stuart J.

The Functional Neuroanatomy of Emotion and Affective Style Richard J

The functional neuroanatomy of emotion and affective style. Richard J. Davidson and William Irwin. Recently, there has been a convergence in lesion and neuroimaging data in the identification of circuits underlying positive and negative emotion in the human brain. Emphasis is placed on the prefrontal cortex (PFC) and the amygdala as two key components of this circuitry. Emotion guides action and organizes behavior towards salient goals. To accomplish this, it is essential that the organism have a means of representing affect in the absence of immediate elicitors. It is proposed that the PFC plays a crucial role in affective working memory.

“Excavating AI” Re-Excavated: Debunking a Fallacious Account of the JAFFE Dataset Michael Lyons

“Excavating AI” Re-excavated: Debunking a Fallacious Account of the JAFFE Dataset. Michael J. Lyons, Ritsumeikan University. 2021. HAL Id: hal-03321964, https://hal.archives-ouvertes.fr/hal-03321964. Preprint submitted on 18 Aug 2021. Distributed under a Creative Commons Attribution - NonCommercial - NoDerivatives 4.0 International License. Abstract: Twenty-five years ago, my colleagues Miyuki Kamachi and Jiro Gyoba and I designed and photographed JAFFE, a set of facial expression images intended for use in a study of face perception. In 2019, without seeking permission or informing us, Kate Crawford and Trevor Paglen exhibited JAFFE in two widely publicized art shows. In addition, they published a nonfactual account of the images in the essay “Excavating AI: The Politics of Images in Machine Learning Training Sets.” The present article recounts the creation of the JAFFE dataset and unravels each of Crawford and Paglen’s fallacious statements.

Letters to the Editor

letters to the editor

anticipatory SCRs for good decks than for bad decks (Fig. 1d–f). Results suggest that across both experiments, card selection is driven by long-term consequences, whereas anticipatory SCRs are driven by the immediate act to be performed, independently of the positive or negative long-term value of the decision. In the original gambling task experiments (refs. 5, 6), anticipatory SCRs were interpreted as correlates of somatic markers that bias individuals’ decision-making. However, by changing the schedule of punishments and rewards in Experiment 2, we observed an opposite pattern of SCRs.

advantageous. (Penalties never cancel the gain, as in decks C & D.) The immediate tendency to prefer the high reward does not need to be opposed in order to achieve. Apparent and ultimate goodness coincide. There is no conflict. Normal subjects should prefer decks A & B. In the original task, the higher anticipatory SCRs preceded card turns from bad decks; by contrast, in the modified task, higher anticipatory SCRs preceded turns from good decks. Because higher anticipatory SCRs related to decks carrying the immediate higher magnitude of reward or punishment, the authors argue

of reward or punishment hidden in the deck from which subjects are about to select, depending on whether anticipatory SCRs reflect negative or positive somatic states, higher anticipatory SCRs also coincide with the long-term consequences: anticipation of a long-term negative or positive outcome. When anticipatory SCRs do not develop, a support mechanism for making advantageous decisions under conflict and uncertainty falls apart, as was critically demonstrated in patients with prefrontal damage (ref. 6). Another explanation for the finding would be that high-magnitude anticipato-

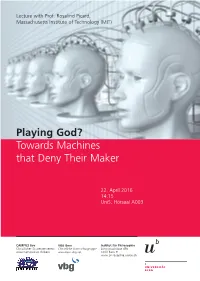

Playing God? Towards Machines That Deny Their Maker

Lecture with Prof. Rosalind Picard, Massachusetts Institute of Technology (MIT): Playing God? Towards Machines that Deny Their Maker. 22 April 2016, 14:15, UniS, Hörsaal A003 (Schanzeneckstrasse 1, Bern). Hosted by CAMPUS live, Christlicher Studentenverein (www.campuslive.ch/bern); VBG Bern, Christliche Hochschulgruppe (www.bern.vbg.net); and Institut für Philosophie, Länggassstrasse 49a, 3000 Bern 9 (www.philosophie.unibe.ch). "Today, technology is acquiring the capability to sense and respond skilfully to human emotion, and in some cases to act as if it has emotional processes that make it more intelligent. I'll give examples of the potential for good with this technology in helping people with autism, epilepsy, and mental health challenges such as anxiety and depression. However, the possibilities are much larger: A few scientists want to build computers that are vastly superior to humans, capable of powers beyond reproducing their own kind. What do we want to see built? And, how might we make sure that new affective technologies make human lives better?" Professor Rosalind W. Picard, Sc.D., is founder and director of the Affective Computing Research Group at the Massachusetts Institute of Technology (MIT) Media Lab where she also chairs MIT's Mind+Hand+Heart initiative. Picard has co-founded two businesses, Empatica, Inc. creating wearable sensors and analytics to improve health, and Affectiva, Inc. delivering software to measure and communicate emotion through facial expression analysis. She has authored or co-authored over 200 scientific articles, together with the book Affective Computing, which was instrumental in giving rise to the field by that name.

Empathy: a Social Cognitive Neuroscience Approach Lian T

Social and Personality Psychology Compass 3/1 (2009): 94–110, doi: 10.1111/j.1751-9004.2008.00154.x. Empathy: A Social Cognitive Neuroscience Approach. Lian T. Rameson* and Matthew D. Lieberman, Department of Psychology, University of California, Los Angeles. Abstract: There has been recent widespread interest in the neural underpinnings of the experience of empathy. In this review, we take a social cognitive neuroscience approach to understanding the existing literature on the neuroscience of empathy. A growing body of work suggests that we come to understand and share in the experiences of others by commonly recruiting the same neural structures both during our own experience and while observing others undergoing the same experience. This literature supports a simulation theory of empathy, which proposes that we understand the thoughts and feelings of others by using our own mind as a model. In contrast, theory of mind research suggests that medial prefrontal regions are critical for understanding the minds of others. In this review, we offer ideas about how to integrate these two perspectives, point out unresolved issues in the literature, and suggest avenues for future research. In a way, most of our lives cannot really be called our own. We spend much of our time thinking about and reacting to the thoughts, feelings, intentions, and behaviors of others, and social psychology has demonstrated the manifold ways that our lives are shared with and shaped by our social relationships. It is a marker of the extreme sociality of our species that those who don’t much care for other people are at best labeled something unflattering like ‘hermit’, and at worst diagnosed with a disorder like ‘psychopathy’ or ‘autism’.

Membership Information

ACM, 1515 Broadway, New York, NY 10036-5701, USA. The CHI 2002 Conference is sponsored by ACM’s Special Interest Group on Computer-Human Interaction (ACM SIGCHI). ACM, the Association for Computing Machinery, is a major force in advancing the skills and knowledge of Information Technology (IT) professionals and students throughout the world. ACM serves as an umbrella organization offering its 78,000 members a variety of forums in order to fulfill its members’ needs, the delivery of cutting-edge technical information, the transfer of ideas from theory to practice, and opportunities for information exchange. Providing high quality products and services, world-class journals and magazines; dynamic special interest groups; numerous “main event” conferences; tutorials; workshops; local special interest groups and chapters; and electronic forums, ACM is the resource for lifelong learning in the rapidly changing IT field. The scope of SIGCHI consists of the study of the human-computer interaction process and includes research, design, development, and evaluation efforts for interactive computer systems. The focus of SIGCHI is on how people communicate and interact with a broadly-defined range of computer systems. SIGCHI serves as a forum for the exchange of ideas among computer scientists, human factors scientists, psychologists, social scientists, system designers, and end users. Over 4,500 professionals work together toward common goals and objectives. Membership Information: Sponsored by ACM’s Special Interest Group on Computer-Human