Security Testing Terminology and Concepts/Full Document - Codeetsi



Recommended publications

Systems Security Engineering Considerations for a Multidisciplinary Approach in the Engineering of Trustworthy Secure Systems

NIST Special Publication 800-160, Volume 1. Systems Security Engineering: Considerations for a Multidisciplinary Approach in the Engineering of Trustworthy Secure Systems. Ron Ross (Computer Security Division, National Institute of Standards and Technology), Michael McEvilley (The MITRE Corporation), Janet Carrier Oren (Legg Mason). November 2016; includes updates as of 03-21-2018 (page xiii). U.S. Department of Commerce, Penny Pritzker, Secretary. National Institute of Standards and Technology, Willie May, Under Secretary of Commerce for Standards and Technology and Director.

This publication contains systems security engineering considerations for ISO/IEC/IEEE 15288:2015, Systems and software engineering — System life cycle processes. It provides security-related implementation guidance for the standard and should be used in conjunction with and as a complement to the standard. This publication is available free of charge from: https://doi.org/10.6028/NIST.SP.800-160v1

Authority: This publication has been developed by NIST to further its statutory responsibilities under the Federal Information Security Modernization Act (FISMA) of 2014, 44 U.S.C. § 3551 et seq., Public Law (P.L.) 113-283. NIST is responsible for developing information security standards and guidelines, including minimum requirements for federal information systems, but such standards and guidelines shall not apply to national security systems without the express approval of appropriate federal officials exercising policy authority over such systems.

Practicing a Science of Security a Philosophy of Science Perspective

Practicing a Science of Security: A Philosophy of Science Perspective. Jonathan M. Spring (University College London, Gower Street, London WC1E 6BT), Tyler Moore (The University of Tulsa, 800 South Tucker Drive, Tulsa, OK 74104-9700), David Pym (University College London, London WC1E 6BT; Alan Turing Institute).

ABSTRACT: Our goal is to refocus the question about cybersecurity research from ‘is this process scientific’ to ‘why is this scientific process producing unsatisfactory results’. We focus on five common complaints that claim cybersecurity is not or cannot be scientific. Many of these complaints presume views associated with the philosophical school known as Logical Empiricism that more recent scholarship has largely modified or rejected. Modern philosophy of science, supported by mathematical modeling methods, provides constructive resources to mitigate all purported challenges to a science of security. Therefore, we argue the community currently practices a science of cybersecurity. A philosophy of science perspective suggests the following form of practice: structured observation to seek intelligible explanations of phenomena, evaluating explanations in many ways, with specialized fields (including engineering and forensics) constraining explanations within their own expertise, inter-translating where necessary.

| Complaint | Positive reframing |
| --- | --- |
| Experiments are untenable | Structured observations of the empirical world |
| Reproducibility is impossible | Evaluate by repetition, replication, variation, reproduction, and/or corroboration |
| No laws of nature | Mechanistic explanation of phenomena to make nature intelligible |
| No single ontology | Specialization necessitates translation |
| ‘Just’ engineering | Both science and engineering are necessary |

Table 1: Five common complaints raised by the science of cybersecurity community and positive reframing from the philosophy of science literature.

Its proponents claim a science of security is needed for ongo-

A Cybersecurity Testbed for Industrial Control Systems

A Cybersecurity Testbed for Industrial Control Systems R. Candell, D.M. Anand, and K. Stouffer National Institute of Standards and Technology, Gaithersburg MD, U.S.A [rick.candell, dhananjay.anand, keith.stouffer]@nist.gov Abstract — The National Institute of Standards and Technology (NIST) is developing a cybersecurity testbed for industrial control systems (ICS). The goal of this testbed is to measure the performance of an ICS when instrumented with cybersecurity protections in accordance with practices prescribed by prevailing standards and guidelines. This paper outlines the testbed design and lists research goals, use cases, and performance metrics currently being considered. The paper is also intended to initiate discussion between control and security practitioners – two groups that have had little interaction in the past. Research outcomes from the testbed will highlight specific cases where security technologies impact control performance, as well as motivate methods by which control engineers can leverage security engineering to design control algorithms that extend safety and fault tolerance to include advanced persistent threats. Keywords — industrial control systems, robotics, chemical process control, cybersecurity, industrial security, process resilience, penetration testing, process performance, measurement science, testbed, robotics, robot control, safety, supervisory control and data acquisition (SCADA) I. Introduction Given the increasing interest in security of industrial control systems (ICS) and the evolving nature of advanced persistent threats against critical industrial infrastructure [1], the National Institute of Standards and Technology (NIST) has been actively involved in developing standards for cyber and control systems security via several standards bodies. Examples of such standards and guidelines include [2] and [3]. 
A research testbed, currently in development at NIST, will provide a platform on which to apply cybersecurity strategies to use cases that are practically relevant to industry.

Cyber Security Engineering, B.S.

2016-2017 Volgenau School of Engineering CYBER SECURITY ENGINEERING, B.S. 2016 - 2017 Cyber Security Engineering is concerned with the development of cyber resilient systems which include the protection of the physical as well as computer and network systems. It requires a proactive approach in engineering design of physical systems with cyber security incorporated from the beginning of system development. Cyber security engineering is an important quantitative methodology to be used in all industries to include, but not limited to, transportation, energy, healthcare, infrastructure, finance, government (federal, state, and local), and defense. The program is focused on the cyber security engineering of integrated cyber-physical systems. This degree provides a foundation in cyber security engineering, and is most appropriate for students with a strong mathematics and science background. The program is administered by the Dean's Office, Volgenau School of Engineering. Cyber security engineers are part of integrated design and development teams for physical systems that require embedded cyber security design, working with engineers from other disciplines (e.g. civil, mechanical, electrical, systems engineers as well as computer scientists and software engineers). Cyber security engineers are engineers who know technology, but who also have in-depth exposure to the application/domain area. Not only do they provide technological solutions to cyber security problems of engineering systems posed by others, but by having an understanding of the application/domain, they can formulate potential security threats, propose appropriate solutions, and then provide leadership in the design of a system to resist and survive these threats. Because of their interdisciplinary training, cyber security engineers are expected to play an increasing role in attacking some of the most pressing current cyber security issues in the country. -

Systems Security Engineering Fact Sheet

The MITRE Corporation: Systems Security Engineering. The MITRE Corporation is using Systems Security Engineering (SSE) to ensure the U.S. Air Force fields and sustains resilient capabilities that provide mission assurance against evolving threats. SSE uses scientific and engineering principles to deliver assured system-level protection via a single, full-system/full life cycle view of system security.

The Challenge: Cyber attacks, and our ability to effectively deal with them, impact our nation’s infrastructure, military capabilities, and commercial and military industry partners. Cyber technology is thoroughly embedded into everything we engineer, including both business and weapons systems. The historical ways in which we acquire, operate, and sustain our systems must change because, given time, sophisticated and persistent attackers will get in.

[Figure: Typical security engineering approach: “As Is”. DMS = Diminishing Manufacturing Sources.]

[Figure: Holistic, integrated, program protection: Systems Security Engineering (SSE).]

The Solution: New thinking in the applications of systems engineering is required in the face of evolving threats. MITRE is working with our sponsors to address these challenges in a holistic manner that allows the

2019 Doctor's Programs in Central South University

2019 Doctor’s Programs in Central South University

| School | Major | Research Field | Language | Duration |
| --- | --- | --- | --- | --- |
| School of Marxism | Marxism | Basic Principle of Marxism | Chinese | 4 year |
| School of Marxism | Marxism | Sinicization of Marxism | Chinese | 4 year |
| School of Marxism | Marxism | Marxist Studies Abroad | Chinese | 4 year |
| School of Marxism | Marxism | Basic Problem of Modern Chinese history | Chinese | 4 year |
| School of Public Administration | Philosophy | Marxist Philosophy | Chinese | 4 year |
| School of Public Administration | Philosophy | Chinese Philosophy | Chinese | 4 year |
| School of Public Administration | Philosophy | Foreign Philosophy | Chinese | 4 year |
| School of Public Administration | Philosophy | Ethics | Chinese | 4 year |
| School of Public Administration | Philosophy | Aesthetics | Chinese | 4 year |
| School of Public Administration | Philosophy | Philosophy of Science and Technology | Chinese | 4 year |
| School of Public Administration | Philosophy | Management Philosophy | Chinese | 4 year |
| School of Public Administration | Sociology | Theoretical Sociology | Chinese | 4 year |
| School of Public Administration | Sociology | Applied Sociology | Chinese | 4 year |
| School of Public Administration | Sociology | Public Administration and Social Policy | Chinese | 4 year |
| School of Public Administration | Sociology | Social Work | Chinese | 4 year |
| School of Public Administration | Public Management | Urban and Local Governance | Chinese | 4 year |
| School of Public Administration | Public Management | Public Security and Hazard Governance | Chinese | 4 year |
| School of Public Administration | Public Management | Social Organizations and Public Policy | Chinese | 4 year |
| School of Public Administration | | | | |

Security Requirements Reusability and the SQUARE Methodology

Security Requirements Reusability and the SQUARE Methodology Travis Christian Faculty Advisor Nancy Mead September 2010 TECHNICAL NOTE CMU/SEI-2010-TN-027 CERT® Program Unlimited distribution subject to the copyright. http://www.sei.cmu.edu This report was prepared for the SEI Administrative Agent ESC/XPK 5 Eglin Street Hanscom AFB, MA 01731-2100 The ideas and findings in this report should not be construed as an official DoD position. It is published in the interest of scientific and technical information exchange. This work is sponsored by the U.S. Department of Defense. The Software Engineering Institute is a federally funded research and development center sponsored by the U.S. Department of Defense. Copyright 2010 Carnegie Mellon University. NO WARRANTY THIS CARNEGIE MELLON UNIVERSITY AND SOFTWARE ENGINEERING INSTITUTE MATERIAL IS FURNISHED ON AN "AS-IS" BASIS. CARNEGIE MELLON UNIVERSITY MAKES NO WARRANTIES OF ANY KIND, EITHER EXPRESSED OR IMPLIED, AS TO ANY MATTER INCLUDING, BUT NOT LIMITED TO, WARRANTY OF FITNESS FOR PURPOSE OR MERCHANTABILITY, EXCLUSIVITY, OR RESULTS OBTAINED FROM USE OF THE MATERIAL. CARNEGIE MELLON UNIVERSITY DOES NOT MAKE ANY WARRANTY OF ANY KIND WITH RESPECT TO FREEDOM FROM PATENT, TRADEMARK, OR COPYRIGHT INFRINGEMENT. Use of any trademarks in this report is not intended in any way to infringe on the rights of the trademark holder. Internal use. Permission to reproduce this document and to prepare derivative works from this document for internal use is granted, provided the copyright and "No Warranty" statements are included with all reproductions and derivative works. External use. This document may be reproduced in its entirety, without modification, and freely distributed in written or electronic form without requesting formal permission.

Systems Security Engineering Cyber Resiliency Considerations for the Engineering of Trustworthy Secure Systems

Draft NIST Special Publication 800-160, Volume 2. Systems Security Engineering: Cyber Resiliency Considerations for the Engineering of Trustworthy Secure Systems. PRE-RELEASE DRAFT, NOT FOR DISTRIBUTION. Ron Ross (Computer Security Division, National Institute of Standards and Technology); Richard Graubart, Deborah Bodeau, and Rosalie McQuaid (Cyber Resiliency and Innovative Mission Engineering Department, The MITRE Corporation). March 2018. U.S. Department of Commerce, Wilbur L. Ross, Jr., Secretary. National Institute of Standards and Technology, Walter Copan, NIST Director and Under Secretary of Commerce for Standards and Technology.

This document is a supporting publication to the NIST systems security engineering guidance provided in Special Publication 800-160, Volume 1. The content was specifically designed to be used with and to complement the flagship systems security engineering publication to support organizations that require cyber resiliency as a property or characteristic of their systems. The goals, objectives, techniques, implementation approaches, and design principles that are described in this publication are an integral part of a cyber resiliency engineering framework and are applied in a life cycle-based systems engineering process.

Authority: This publication has been developed by the National Institute of Standards and Technology to further its statutory responsibilities under the Federal Information Security Modernization Act (FISMA) of 2014, 44 U.S.C. § 3551 et seq., Public Law (P.L.) 113-283.

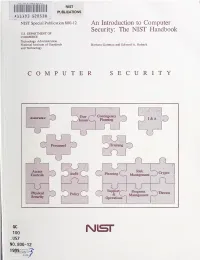

An Introduction to Computer Security: The NIST Handbook

NIST Special Publication 800-12. An Introduction to Computer Security: The NIST Handbook. Barbara Guttman and Edward A. Roback. U.S. Department of Commerce, Technology Administration, National Institute of Standards and Technology. 1995.

[Cover figure: "Computer Security" diagram with topic labels such as Contingency Planning, Assurance, User Issues, I&A, Personnel, Training, Access Controls, Risk Management, Audit, Planning, Crypto, Support & Operations, Program Management, Physical Security, Threats, and Policy.]

The National Institute of Standards and Technology was established in 1988 by Congress to "assist industry in the development of technology ... needed to improve product quality, to modernize manufacturing processes, to ensure product reliability ... and to facilitate rapid commercialization ... of products based on new scientific discoveries." NIST, originally founded as the National Bureau of Standards in 1901, works to strengthen U.S. industry's competitiveness; advance science and engineering; and improve public health, safety, and the environment. One of the agency's basic functions is to develop, maintain, and retain custody of the national standards of measurement, and provide the means and methods for comparing standards used in science, engineering, manufacturing, commerce, industry, and education with the standards adopted or recognized by the Federal Government. As an agency of the U.S. Commerce Department's Technology Administration, NIST conducts basic and applied research in the physical sciences and engineering, and develops measurement techniques, test methods, standards, and related services. The Institute does generic and precompetitive work on new and advanced technologies. NIST's research facilities are located at Gaithersburg, MD 20899, and at Boulder, CO 80303.

System Security Engineering Guidebook

The Black Book: A Starter Guide to Systems Security Engineering for Acquisition. Bradley Doohan (Australia); Steve Sterling, Mark Jennings, Frederic Painchaud, LCol Yves Turcotte, LCdr Marc Lanouette (Canada); Paul Caseley, Gerard Talbert and Edward Bush (United Kingdom); and Melinda Reed, Dana Franz, Jean-Paul LeSaint, Glenda Turner (United States). TTCP Document DOC-JSA/TP4-1-2016. Defence Research and Development Canada Reference Document DRDC-RDDC-2016-D061. October 2016. © Her Majesty the Queen in Right of Canada, as represented by the Minister of National Defence, 2016. © Sa Majesté la Reine (en droit du Canada), telle que représentée par le ministre de la Défense nationale, 2016.

Abstract: This booklet is an introduction to systems security engineering management concepts, terms and activities and supports the TTCP four national strategic drivers: Interoperability; Affordability; Effective Decision Making; and Agility (flexible adaptable systems). It is intended to help systems engineers within TTCP Nations and defense contractor personnel understand how security issues affect their role within the systems acquisition process. The contents of this booklet are intended for information purposes only and must therefore not be used as the basis for any contract or instruction to contractors.

Résumé: Le présent livret est une introduction aux concepts, aux modalités et aux activités de gestion en matière d’ingénierie de la sécurité des systèmes et appuie les quatre facteurs stratégiques nationaux du TTCP : interopérabilité, viabilité financière, prise de décisions efficaces et agilité (systèmes adaptables et souples). Ce document vise à aider les ingénieurs de systèmes au sein des pays membres du TTCP et du personnel de l’entrepreneur de la défense à comprendre comment les problèmes de sécurité ont une incidence sur leur rôle dans le cadre du processus d’acquisition de systèmes.

Security Engineering: Development of Curriculum and Research for Homeland Security

Security Engineering: Development of Curriculum and Research for Homeland Security

• Texas A&M University-Kingsville has received an award from the Department of Homeland Security (DHS)
  – To establish Curriculum and Research in the integrated study of UAVs, Wireless Sensor Networks, Data Mining, Optimization, and Information Analysis and Modeling
  – Two-Phase Project:
    • Phase I: Project refinement, extended visits by TAMUK faculty to COE partner institution (UT-El Paso), equipment purchase, validation and testing to enhance internal capabilities (Two years, $700K).
    • Phase II: Full research project implementation and student participation (Three years, ~$750K).
• Faculty:
  • Dr. Selahattin Ozcelik (PI), Dr. Kai Jin (Co-PI), Dr. Hua Li (Co-PI), Department of Mechanical and Industrial Engineering
  • Dr. Nuri Yilmazer (Co-PI), Dr. Mais Nijim (Co-PI), Department of Electrical Engineering and Computer Science

Table of Contents
• Program Features
• DHS Summary
• Main Components of Program
• Curriculum
• Courses
• Research
• Eligibility

Program Features
• $7,000/academic year in Scholarships
• 10 weeks Fully Paid Internships or Summer Research at DHS Facility or DHS Centers of Excellence
• Earn a certificate in Security Engineering Field
• Excellent Career Placement Opportunity
• Graduate School Studies

DHS Mission: The unified national effort to secure the U.S.A.
• DHS will prevent, protect against and respond to threats and hazards to the Nation.
• The mission is extremely challenging and involves many different aspects of security and a variety of operations, from helping to defend the U.S.A. against threats to providing timely and effective support in response to natural disasters.
• DHS and its sub-agencies deal with an extremely large number of security and safety issues that require a STEM-educated technical workforce for solutions.

Building Secure and Reliable Systems

Building Secure & Reliable Systems Best Practices for Designing, Implementing and Maintaining Systems Compliments of Heather Adkins, Betsy Beyer, Paul Blankinship, Piotr Lewandowski, Ana Oprea & Adam Stubblefield Praise for Building Secure and Reliable Systems It is very hard to get practical advice on how to build and operate trustworthy infrastructure at the scale of billions of users. This book is the first to really capture the knowledge of some of the best security and reliability teams in the world, and while very few companies will need to operate at Google’s scale many engineers and operators can benefit from some of the hard-earned lessons on securing wide-flung distributed systems. This book is full of useful insights from cover to cover, and each example and anecdote is heavy with authenticity and the wisdom that comes from experimenting, failing and measuring real outcomes at scale. It is a must for anybody looking to build their systems the correct way from day one. —Alex Stamos, Director of the Stanford Internet Observatory and former CISO of Facebook and Yahoo This book is a rare treat for industry veterans and novices alike: instead of teaching information security as a discipline of its own, the authors offer hard-wrought and richly illustrated advice for building software and operations that actually stood the test of time. In doing so, they make a compelling case for reliability, usability, and security going hand-in-hand as the entirely inseparable underpinnings of good system design. —Michał Zalewski, VP of Security Engineering at Snap, Inc. and author of The Tangled Web and Silence on the Wire This is the “real world” that researchers talk about in their papers.