A Virtuous Cycle of Semantics and Participation

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Diploma Thesis: WebDAV Debugger

Electrical Engineering and Computer Science. DIPLOMA THESIS: „Entwicklung eines grafikunterstützten Debugging-Werkzeugs zur Implementierung von WebDAV-Applikationen“ (Development of a Graphics-Supported Debugging Tool for Implementing WebDAV Applications). Submitted by: Hans Jochen Wüst, matriculation no. 609058. Supervised by: Prof. Dr.-Ing. habil. Hans Wojtkowiak and Dr.-Ing. Bernd Klose (Universität Siegen); Dipl.-Math. Andreas Schreiber and Dipl.-Inform. Markus Litz (Deutsches Zentrum für Luft- und Raumfahrt, Köln). Date of issue: 14.12.2007. Date of submission: 13.06.2008. Abstract: This document presents the results of the diploma thesis „Entwicklung eines grafikunterstützten Debugging-Werkzeugs zur Implementierung von WebDAV-Applikationen“. In cooperation with the German Aerospace Center, an open-source tool is realized to graphically test and evaluate the communication between WebDAV applications. First, the necessary basics are discussed. The developed solution is then introduced and the concepts of the various subsystems are explained. Based on these results, the design and implementation of the subsystems are described. A sample application shows the practical use of the developed concepts. Finally, problems that occurred in the course of development are examined, and an outlook on possible extensions and optimizations of the prototype is given.

What Are Kernel-Mode Rootkits?

www.it-ebooks.info Hacking Exposed™ Malware & Rootkits Reviews “Accessible but not dumbed-down, this latest addition to the Hacking Exposed series is a stellar example of why this series remains one of the best-selling security franchises out there. System administrators and Average Joe computer users alike need to come to grips with the sophistication and stealth of modern malware, and this book calmly and clearly explains the threat.” —Brian Krebs, Reporter for The Washington Post and author of the Security Fix Blog “A harrowing guide to where the bad guys hide, and how you can find them.” —Dan Kaminsky, Director of Penetration Testing, IOActive, Inc. “The authors tackle malware, a deep and diverse issue in computer security, with common terms and relevant examples. Malware is a cold deadly tool in hacking; the authors address it openly, showing its capabilities with direct technical insight. The result is a good read that moves quickly, filling in the gaps even for the knowledgeable reader.” —Christopher Jordan, VP, Threat Intelligence, McAfee; Principal Investigator to DHS Botnet Research “Remember the end-of-semester review sessions where the instructor would go over everything from the whole term in just enough detail so you would understand all the key points, but also leave you with enough references to dig deeper where you wanted? Hacking Exposed Malware & Rootkits resembles this! A top-notch reference for novices and security professionals alike, this book provides just enough detail to explain the topics being presented, but not too much to dissuade those new to security.” —LTC Ron Dodge, U.S. -

Index Images Download 2006 News Crack Serial Warez Full 12 Contact

index images download 2006 news crack serial warez full 12 contact about search spacer privacy 11 logo blog new 10 cgi-bin faq rss home img default 2005 products sitemap archives 1 09 links 01 08 06 2 07 login articles support 05 keygen article 04 03 help events archive 02 register en forum software downloads 3 security 13 category 4 content 14 main 15 press media templates services icons resources info profile 16 2004 18 docs contactus files features html 20 21 5 22 page 6 misc 19 partners 24 terms 2007 23 17 i 27 top 26 9 legal 30 banners xml 29 28 7 tools projects 25 0 user feed themes linux forums jobs business 8 video email books banner reviews view graphics research feedback pdf print ads modules 2003 company blank pub games copyright common site comments people aboutus product sports logos buttons english story image uploads 31 subscribe blogs atom gallery newsletter stats careers music pages publications technology calendar stories photos papers community data history arrow submit www s web library wiki header education go internet b in advertise spam a nav mail users Images members topics disclaimer store clear feeds c awards 2002 Default general pics dir signup solutions map News public doc de weblog index2 shop contacts fr homepage travel button pixel list viewtopic documents overview tips adclick contact_us movies wp-content catalog us p staff hardware wireless global screenshots apps online version directory mobile other advertising tech welcome admin t policy faqs link 2001 training releases space member static join health -

Individual Service Composition in the Web-age

Individual Service Composition in the Web-age. Inaugural dissertation for the degree of Doctor of Philosophy, submitted to the Faculty of Science (Philosophisch-Naturwissenschaftliche Fakultät) of the Universität Basel by Sven Rizzotti from Basel. Basel, 2008. Approved by the Faculty of Science at the request of Prof. Dr. Helmar Burkhart, Universität Basel, and Prof. Dr. Gustavo Alonso, ETH Zürich, co-referee. Basel, 11.12.2007. Prof. Dr. Hans-Peter Hauri, Dean. To my parents, Heide and Fritz Rizzotti, and my brother Jörg. "On the Internet, nobody knows you're a dog." Peter Steiner, cartoon in The New Yorker (5 July 1993), page 61. Abstract: Nowadays, for a web site to reach peak popularity it must present the latest information, combined from various sources, to give an interactive, customizable impression. Embedded content and functionality from a range of specialist fields have led to a significant improvement in web site quality. However, until now the capacity of a web site has been defined at the time of creation; extension of this capacity has only been possible with considerable additional effort. The aim of this thesis is to present a software architecture that allows users to personalize a web site themselves, with capabilities taken from the immense resources of the World Wide Web. Recent web sites are analyzed and categorized according to their customization potential. The results of this analysis are then related to patterns in the field of software engineering, and from these results a general conclusion is drawn about the requirements of an application architecture to support these patterns. A theoretical concept of such an architecture is proposed and described in detail.

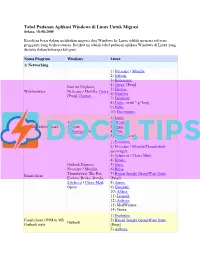

List of Replacement Software for Moving from Windows to Linux

Table of Linux Equivalents for Windows Applications, for Migration. Tuesday, 18-08-2009. A major difficulty in migrating from Windows to Linux is finding suitable replacement software. Below is a table of Linux equivalents for Windows applications, arranged in several categories.

1) Networking.

| Program | Windows | Linux |
| --- | --- | --- |
| Web browser | Internet Explorer, Netscape / Mozilla, Opera [Prop], Firefox, ... | 1) Netscape / Mozilla. 2) Galeon. 3) Konqueror. 4) Opera. [Prop] 5) Firefox. 6) Nautilus. 7) Epiphany. 8) Links. (with "-g" key). 9) Dillo. 10) Encompass. |
| Console web browser | 1) Links 2) Lynx 3) Xemacs + w3. | 1) Links. 2) ELinks. 3) Lynx. 4) w3m. 5) Xemacs + w3. |
| Email client | Outlook Express, Netscape / Mozilla, Thunderbird, The Bat, Eudora, Becky, Datula, Sylpheed / Claws Mail, Opera | 1) Evolution. 2) Netscape / Mozilla/Thunderbird messenger. 3) Sylpheed / Claws Mail. 4) Kmail. 5) Gnus. 6) Balsa. 7) Bynari Insight GroupWare Suite. [Prop] 8) Arrow. 9) Gnumail. 10) Althea. 11) Liamail. 12) Aethera. 13) MailWarrior. 14) Opera. |
| Email client / PIM in MS Outlook style | Outlook | 1) Evolution. 2) Bynari Insight GroupWare Suite. [Prop] 3) Aethera. 4) Sylpheed. 5) Claws Mail |
| Email client in The Bat style | The Bat | 1) Sylpheed. 2) Claws Mail 3) Kmail. 4) Gnus. 5) Balsa. |
| Console email client | Mutt [de], Pine, Pegasus, Emacs | 1) Pine. [NF] 2) Mutt. 3) Gnus. 4) Elm. 5) Emacs. |
| News reader | 1) Agent [Prop] 2) Free Agent 3) Xnews 4) Outlook 5) Netscape / Mozilla 6) Opera [Prop] 7) Sylpheed / Claws Mail | 1) Knode. 2) Pan. 3) NewsReader. 4) Netscape / Mozilla Thunderbird. 5) Opera [Prop] 6) Sylpheed / Claws Mail. Console: 7) Pine. [NF] 8) Mutt. |

The Rise of Cloud Computing

Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and the publisher was aware of a trademark claim, the designations have been printed with initial capital letters or in all capitals. The author and publisher have taken care in the preparation of this book, but make no expressed or implied warranty of any kind and assume no responsibility for errors or omissions. No liability is assumed for incidental or consequential damages in connection with or arising out of the use of the information or programs contained herein. The publisher offers excellent discounts on this book when ordered in quantity for bulk purchases or special sales, which may include electronic versions and/or custom covers and content particular to your business, training goals, marketing focus, and branding interests. For more information, please contact: U.S. Corporate and Government Sales, (800) 382-3419, [email protected]. For sales outside the United States please contact: International Sales, [email protected]. Visit us on the Web: www.informit.com/ph. Library of Congress Cataloging-in-Publication Data is on file. Copyright © 2009 Pearson Education, Inc. All rights reserved. Printed in the United States of America. This publication is protected by copyright, and permission must be obtained from the publisher prior to any prohibited reproduction, storage in a retrieval system, or transmission in any form or by any means, electronic, mechanical, photocopying, recording, or likewise.

Editor-in-Chief: Mark Taub. Executive Editor: Debra Williams Cauley. Development Editor: Songlin Qiu. Managing Editor: Kristy Hart. Project Editor: Andy Beaster. Copy Editor: Barbara Hacha. Indexer: Heather McNeill. Proofreader: Language Logistics, LLC. Technical Reviewer: Corey Burger. Cover Designer: Alan Clements. Composition: Bronkella Publishing, LLC.

Nadgrajeno brskanje (Augmented Browsing)

UNIVERSITY OF LJUBLJANA, FACULTY OF COMPUTER AND INFORMATION SCIENCE. Matjaž Horvat. Nadgrajeno brskanje (Augmented Browsing). DIPLOMA THESIS, UNIVERSITY STUDY PROGRAMME. Mentor: prof. dr. Saša Divjak. Ljubljana, 2009.

STATEMENT OF AUTHORSHIP of the diploma thesis. I, the undersigned Matjaž Horvat, enrollment number 63030124, am the author of the diploma thesis titled Nadgrajeno brskanje. With my signature I affirm that:

• I produced the diploma thesis independently under the mentorship of prof. dr. Saša Divjak;
• the electronic form of the diploma thesis, its title (Slovenian, English), abstract (Slovenian, English) and keywords (Slovenian, English) are identical to the printed form of the diploma thesis;
• I consent to the public release of the electronic form of the diploma thesis in the »Dela FRI« collection.

Ljubljana, 15. 12. 2009. Author's signature ____________________________________

I thank my youngest sister, Tina Horvat Vidovič, who persistently invited me to visit her in Rome before my thesis defense. Because I wanted to visit her at her home in the Eternal City only after earning the title of university graduate engineer of computer and information science, her persistent repetition of the invitation was the best motivation for writing this thesis.

Contents: Povzetek (abstract in Slovenian); Abstract; 1 Introduction

Introducing Tsukikage System, a Next-Generation Greasemonkey

Introduction of next generation Greasemonkey, Tsukikage System. Shinta Nakayama, The Department of Human Communication, The Graduate School of Electro-Communications, The University of Electro-Communications, 1-5-1 Chofugaoka, Chofu-shi 182-8585, Japan. [email protected]

Abstract: Firefox has an extension called Greasemonkey. This software can execute arbitrary JavaScript while a web page is being viewed. For security reasons, however, JavaScript cannot read or write local files or execute native code. This paper therefore proposes Tsukikage System, which makes these operations possible.

1 Introduction. One of the extensions for Firefox is Greasemonkey (footnote 1). This software executes arbitrary JavaScript while a web page is being viewed and rewrites the page. With it, one can, for example, remove unwanted advertisements or search Yahoo! Auctions for a product being viewed on Amazon. The software has its limits, however. Because it relies on JavaScript to rewrite web pages, it cannot perform natural language processing or any processing that uses native code. This paper therefore introduces Tsukikage System, which improves on these points.

2 Existing technology

2.1 Greasemonkey. Greasemonkey is a Firefox extension that can execute arbitrary JavaScript against the web page being viewed. Because it runs in Firefox's privileged mode, it is highly extensible: it can extend Firefox itself and, unlike restricted mode, it can make HTTP requests across domains.

2.2 Similar technology in other browsers

Table 1: Software equivalent to Greasemonkey

| Browser | Software name |
| --- | --- |
| IE | Trixie (footnote 2) |
| Sleipnir (footnote 3) | SeaHorse (footnote 4) |
| Safari | GreaseKit (footnote 5) |
| Opera | UserJavaScript |

Other browsers also have software and features that work in the same way as Greasemonkey [Table 1]. UserJavaScript is a feature built into Opera. The others are implemented as browser extensions or plug-ins. All of them are designed with an emphasis on compatibility with Greasemonkey, so scripts written for Greasemonkey can be used unless they rely on Firefox-specific JavaScript extensions.

Footnotes: 2 http://www.bhelpuri.net/Trixie/Trixie.htm 3 http://www.fenrir.co.jp/sleipnir/ 4 http://www.fenrir.co.jp/sleipnir/plugins/seahorse.html

Detecting In-Flight Page Changes with Web Tripwires

Detecting In-Flight Page Changes with Web Tripwires. Charles Reis, Steven D. Gribble, Tadayoshi Kohno (University of Washington); Nicholas C. Weaver (International Computer Science Institute).

Abstract: While web pages sent over HTTP have no integrity guarantees, it is commonly assumed that such pages are not modified in transit. In this paper, we provide evidence of surprisingly widespread and diverse changes made to web pages between the server and client. Over 1% of web clients in our study received altered pages, and we show that these changes often have undesirable consequences for web publishers or end users. Such changes include popup blocking scripts inserted by client software, advertisements injected by ISPs, and even malicious code likely inserted by malware using ARP poisoning. Additionally, we find that changes introduced by client software can inadvertently cause harm, such as introducing cross-site scripting vulnerabilities into most pages a client visits.

[...] have for end users and web publishers. In the study, our web server recorded any changes made to the HTML code of our web page for visitors from over 50,000 unique IP addresses. Changes to our page were seen by 1.3% of the client IP addresses in our sample, drawn from a population of technically oriented users. We observed many types of changes caused by agents with diverse incentives. For example, ISPs seek revenue by injecting ads, end users seek to filter annoyances like ads and popups, and malware authors seek to spread worms by injecting exploits. Many of these changes are undesirable for publishers or users. At a minimum, the injection or removal of ads by ISPs or proxies can impact the revenue stream of a web publisher, annoy the end user, or potentially ex-

The Table of Equivalents / Replacements / Analogs of Windows Software in Linux

The table of equivalents / replacements / analogs of Windows software in Linux. Last update: 16.07.2003, 31.01.2005, 27.05.2005, 04.12.2006. You can always find the latest version of this table on the official site: http://www.linuxrsp.ru/win-lin-soft/. This page in other languages: Russian, Italian, Spanish, French, German, Hungarian, Chinese. One of the biggest difficulties in migrating from Windows to Linux is the lack of knowledge about comparable software. Newbies usually search for Linux analogs of Windows software, and advanced Linux users cannot answer their questions since they often don't know too much about Windows :). This list of Linux equivalents / replacements / analogs of Windows software is based on our own experience and on the information obtained from the visitors of this page (thanks!). This table is not static, since new application names can be added to both the left and the right sides. Also, the right column for a particular class of applications may not be filled immediately. In the future, we plan to migrate this table to a PHP/MySQL engine, so visitors can add programs themselves, vote for analogs, add comments, etc. If you want to add a program to the table, send mail to winlintable[a]linuxrsp.ru with the name of the program, the OS, the description (the purpose of the program, etc.), and a link to the official site of the program (if you know it). All comments, remarks, corrections, offers and bug reports are welcome; send them to winlintable[a]linuxrsp.ru. Notes: 1) By default all Linux programs in this table are free as in freedom.

Cache Cookies: Searching for Hidden Browser Storage

Bachelor thesis, Computer Science, Radboud University. Cache Cookies: searching for hidden browser storage. Author: Patrick Verleg, s3049701, [email protected]. First supervisor/assessor: Prof. dr. M.C.J.D van Eekelen. Second assessor: Dr. ir. H.P.E. Vranken, [email protected]. June 26, 2014.

Abstract: Various ways are known to persistently store information in a browser to achieve cookie-like behaviour. These methods are collectively known as 'super cookies'. This research looks into various super cookie methods. Attention is given to the means a user has to regulate data storage and what is allowed by the cookiewet. The main goal of this research is to determine whether a browser's cache can be used to store and retrieve a user's unique identification. The results show that this is the case, and these 'cache cookies' use a method so fundamentally entangled with the cache that there is no easy way to prevent them. Because of cache cookies and other methods, the only way to prevent all tracking methods is to disable cache, history, cookies and plug-in storage.

Contents: 1 Introduction; 2 Cookies for maintaining browser state; 2.1 Storing and retrieving cookies; 2.1.1 Same-origin policy; 2.1.2 Third party cookies; 2.2 Protection against cookies; 2.2.1 Technical means; 2.2.2 Regulation; 3 Super cookies; 3.1 Plug-in cookies; 3.2 HTML 5; 3.3 Misusing browser features; 3.4 Regulation; 4 Research: Cache Cookies; 4.1 Method

A Longitudinal Analysis of Online Ad-Blocking Blacklists

A Longitudinal Analysis of Online Ad-Blocking Blacklists. Saad Sajid Hashmi (Macquarie University, [email protected]); Muhammad Ikram (Macquarie University, University of Michigan, [email protected]); Mohamed Ali Kaafar (Macquarie University, Data61, CSIRO, [email protected]).

Abstract: Websites employ third-party ads and tracking services leveraging cookies and JavaScript code, to deliver ads and track users' behavior, causing privacy concerns. To limit online tracking and block advertisements, several ad-blocking (black) lists have been curated consisting of URLs and domains of well-known ads and tracking services. Using Internet Archive's Wayback Machine in this paper, we collect a retrospective view of the Web to analyze the evolution of ads and tracking services and evaluate the effectiveness of ad-blocking blacklists. We propose metrics to capture the efficacy of ad-blocking blacklists to investigate whether these blacklists have been reactive or proactive in tackling the online ad and tracking services. We introduce a stability metric to measure the temporal changes in ads and tracking domains blocked by ad-blocking blacklists and

[...] research work in this domain has been on-going for some time and ad-blocking tools have been developed, we believe these studies typically consist of short-term measurements of specific tracking techniques and the ad-blocking tools have not been comprehensively evaluated over time. Given that the online ads, web tracking, and prevention landscape is continuously evolving, studies performed at a snapshot or longitudinally starting in the present may not comprehensively illuminate online ads/tracking to improve ad-blocking. To fill the gap, we leverage the historical data extracted from the Internet Archive's Wayback Machine [5] to analyze online ads (resp.