Database Encryption - How to Balance Security with Performance

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Data and Database Security and Controls

Handbook of Information Security Management, Auerbach Publishers, 1993, pages 481-499. DATA AND DATABASE SECURITY AND CONTROLS. Ravi S. Sandhu and Sushil Jajodia, Center for Secure Information Systems & Department of Information and Software Systems Engineering, George Mason University, Fairfax, VA 22030-4444. Telephone: 703-993-1659.

1 Introduction. This chapter discusses the topic of data security and controls, primarily in the context of Database Management Systems (DBMSs). The emphasis is on basic principles and mechanisms, which have been successfully used by practitioners in actual products and systems. Where appropriate, the limitations of these techniques are also noted. Our discussion focuses on principles and general concepts. It is therefore independent of any particular product except for section 7, which discusses some products. In the more detailed considerations we limit ourselves specifically to relational DBMSs. The reader is assumed to be familiar with rudimentary concepts of relational databases and SQL. A brief review of essential concepts is given in the appendix. The chapter begins with a review of basic security concepts in section 2. This is followed, in section 3, by a discussion of access controls in the current generation of commercially available DBMSs. Section 4 introduces the problem of multilevel security. It is shown that the techniques of section 3 are inadequate to solve this problem. Additional techniques developed for multilevel security are reviewed. Section 5 discusses the various kinds of inference threats that arise in a database system, and discusses methods that have been developed for dealing with them.

Design and Implement Database Security Policies in an Enterprise

Global Symphony Services. Design of Database Security Policy in Enterprise Systems, by Krishna R Singitam, Database Architect & DBA, Lawson – GOC.

Table of Contents: 1. Abstract; 2. Introduction; 2.1. Understanding the Necessity of Security Policy; 3. Design of Database Security Policy; 3.1. Requirements from Business owners; 3.2. Regulatory Requirements; 3.2.1. Sarbanes-Oxley; 3.2.2. PCI DSS; 3.2.3. CA SB1386; 3.2.4. HIPAA

Understanding Holistic Database Security: 8 Steps to Successfully Securing Enterprise Data Sources

Information Management White Paper. Understanding holistic database security: 8 steps to successfully securing enterprise data sources.

News headlines about the increasing frequency of stolen information and identity theft have focused awareness on data security breaches, and their consequences. In response to this issue, regulations have been enacted around the world. Although the specifics of the regulations may differ, failure to ensure compliance can result in significant financial penalties, loss of customer loyalty and even criminal prosecution. In addition to the growing number of compliance mandates, organizations are under pressure to embrace the new era of computing, which brings with it new security challenges and a complex security landscape. Hackers are becoming more skilled; they are building sophisticated networks and in some cases are state sponsored. The rise of social media, cloud computing, mobility and big data are making threats harder to identify. Thus unscrupulous insiders are finding more ways to pass protected information to outsiders with less chance of detection.

Organizations need to adopt a more proactive and systematic approach to securing sensitive data and addressing compliance requirements amid the digital information explosion. This approach must span across complex, geographically dispersed systems. A paradox exists where organizations are able to process more information than at any other point in history, yet they are unable to understand what

Figure 1: Analysis of malicious or criminal attacks experienced according to the 2011 Cost of Data Breach Study, Ponemon Institute, published March 2012.

... attacks are increasing. The number of SQL injection attacks has jumped by more than two thirds: from 277,770 in Q1 2012 to 469,983 in Q2 2012.[1] Ponemon reports that SQL injection accounts for 28% of all breaches.

Imperva Database Security

DATASHEET. Imperva Database Security: Simplify data compliance and stop breaches.

Today’s digital and knowledge economy is fueling exponential data growth by using more data to drive value for the business. To protect your data, and your business, you need compliance and security solutions that take a data-centric approach. Imperva Database Security helps organizations unleash the power of their data by reducing the risk of non-compliance or a security breach incident.

A better way to manage data risk. The complexity of achieving compliant and secure data is daunting to a large enterprise organization. Rapid platform change and scarce security resources make it almost impossible to keep up with on your own. Security staff is often overwhelmed by a deluge of alerts coming from multiple security tools. Imperva provides an automated solution to streamline compliance processes and help security staff pinpoint data risk before it becomes a serious event. With Imperva Database Security you can quickly cover your most critical assets, for fast time to value, and then gradually widen the net. Imperva standardizes audit and security controls across large and complex enterprise database environments, mitigating risks to sensitive data on-premises, in the cloud, and across multiple clouds.

KEY FEATURES AND BENEFITS

- Detect and prioritize data threats using data science, machine learning and behavior analytics
- Pinpoint risky data access activity – for all users including privileged users
- Gain visibility by monitoring and auditing all database activity
- Protect data with real-time alerting or user access blocking of policy violations
- Uncover hidden risks with data discovery, classification and vulnerability assessments
- Reduce the attack surface with static data masking

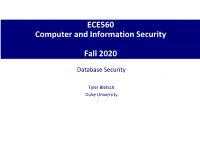

Database Security

ECE560 Computer and Information Security, Fall 2020. Database Security. Tyler Bletsch, Duke University.

- Table of data consisting of rows and columns
- Each column holds a particular type of data
- Each row contains a specific value for each column
- Ideally has one column where all values are unique, forming an identifier/key for that row
- Enables the creation of multiple tables linked together by a unique identifier that is present in all tables
- Use a relational query language to access the database
- Allows the user to request data that fit a given set of criteria

Terminology:

- Relation/table/file
- Tuple/row/record
- Attribute/column/field
- Primary key: uniquely identifies a row; consists of one or more column names
- Foreign key: links one table to attributes in another
- View/virtual table: result of a query that returns selected rows and columns from one or more tables

(a) Two tables in a relational database

Department Table

| Did (primary key) | Dname | Dacctno |
|---|---|---|
| 4 | human resources | 528221 |
| 8 | education | 202035 |
| 9 | accounts | 709257 |
| 13 | public relations | 755827 |
| 15 | services | 223945 |

Employee Table

| Ename | Did (foreign key) | Salarycode | Eid (primary key) | Ephone |
|---|---|---|---|---|
| Robin | 15 | 23 | 2345 | 6127092485 |
| Neil | 13 | 12 | 5088 | 6127092246 |
| Jasmine | 4 | 26 | 7712 | 6127099348 |
| Cody | 15 | 22 | 9664 | 6127093148 |
| Holly | 8 | 23 | 3054 | 6127092729 |
| Robin | 8 | 24 | 2976 | 6127091945 |
| Smith | 9 | 21 | 4490 | 6127099380 |

| Dname | Ename | Eid | Ephone |
|---|---|---|---|
| human resources | Jasmine | 7712 | 6127099348 |
| education | Holly | 3054 | 6127092729 |
| education | Robin | 2976 | 6127091945 |
| accounts | Smith | 4490 | 6127099380 |
| public relations | Neil | 5088 | 6127092246 |
| services | Robin | 2345 | 6127092485 |
| services | Cody | | |
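The primary key, foreign key, and view concepts in the excerpt above can be tried out directly. The following is a minimal sketch using Python's built-in `sqlite3` module and the Department and Employee rows quoted in the slide; the lowercase table names, the column types, and the view name `directory` are assumptions made for illustration and do not come from the course material.

```python
import sqlite3

# In-memory database so the sketch leaves nothing behind.
conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite only enforces foreign keys when asked

conn.executescript("""
CREATE TABLE department (
    did     INTEGER PRIMARY KEY,          -- primary key: uniquely identifies a row
    dname   TEXT    NOT NULL,
    dacctno INTEGER NOT NULL
);
CREATE TABLE employee (
    ename      TEXT    NOT NULL,
    did        INTEGER NOT NULL REFERENCES department(did),  -- foreign key
    salarycode INTEGER NOT NULL,
    eid        INTEGER PRIMARY KEY,
    ephone     TEXT    NOT NULL
);
-- View (hypothetical name): selected columns from both tables,
-- leaving out salarycode and dacctno.
CREATE VIEW directory AS
    SELECT d.dname, e.ename, e.eid, e.ephone
    FROM department AS d
    JOIN employee AS e ON e.did = d.did;
""")

conn.executemany("INSERT INTO department VALUES (?, ?, ?)", [
    (4, "human resources", 528221),
    (8, "education", 202035),
    (9, "accounts", 709257),
    (13, "public relations", 755827),
    (15, "services", 223945),
])
conn.executemany("INSERT INTO employee VALUES (?, ?, ?, ?, ?)", [
    ("Robin", 15, 23, 2345, "6127092485"),
    ("Neil", 13, 12, 5088, "6127092246"),
    ("Jasmine", 4, 26, 7712, "6127099348"),
    ("Cody", 15, 22, 9664, "6127093148"),
    ("Holly", 8, 23, 3054, "6127092729"),
    ("Robin", 8, 24, 2976, "6127091945"),
    ("Smith", 9, 21, 4490, "6127099380"),
])

# Query the view like an ordinary table.
rows = conn.execute(
    "SELECT dname, ename, eid, ephone FROM directory ORDER BY eid"
).fetchall()

# A row pointing at a department that does not exist is rejected.
try:
    conn.execute(
        "INSERT INTO employee VALUES (?, ?, ?, ?, ?)",
        ("Pat", 99, 10, 1111, "6127090000"),  # made-up row for the demonstration
    )
    rejected = False
except sqlite3.IntegrityError:
    rejected = True
```

Because the view omits `salarycode` and `dacctno`, a user who is given access only to `directory` never sees those columns, which is the sense in which a view can act as a security device.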

A New Database Encryption Model Based on Encryption Classes

Journal of Computer Science, Original Research Paper. A New Database Encryption Model Based on Encryption Classes. Karim El Bouchti, Soumia Ziti, Fouzia Omary and Nassim Kharmoum, Department of Computer Science, Intelligent Processing Systems & Security Team, Faculty of Sciences, Mohammed V University in Rabat, Morocco.

Article history: Received: 30-03-2019; Revised: 17-05-2019; Accepted: 25-06-2019. Karim El Bouchti, Department of Computer Science, Intelligent Processing Systems and Security Team, Faculty of Sciences, Mohammed V University in Rabat, Morocco. E-mail: [email protected]

Abstract: Data encryption is one of the advanced measures used to consolidate data security inside Databases. It is an essential technique to protect data against theft, disclosure or modification from different typologies of attacks. In this work, we will propose a new database encryption model. It is based on a novel concept called "Encryption Classes". The proposed model is full compared to the existing models; it integrates many security mechanisms starting from the keys generation and their protection until the data encryption. Furthermore, our proposed model performed a new feature which is the Database structure encryption.

Keywords: Database Security, Database Security Model, Data Security, Computer Security, Database Encryption Model, Database Encryption

Introduction. Recently, the security of databases (DB) has been subject of multiple studies and researches conducted by the computer security worldwide community; it aims to protect sensitive data stored in centralized or distributed

The DBs encryption is based on developing efficient encryption models. A relevant model must meet criteria such as encryption granularity level, efficient encryption keys management and high performance (Shmueli et al., 2014; Elovici et al., 2018). In the literature, several DB encryption models have been proposed.

Classification of Various Security Techniques in Databases and Their Comparative Analysis

Classification of Various Security Techniques in Databases and their Comparative Analysis. Harshavardhan Kayarkar, M.G.M’s College of Engineering and Technology, Navi Mumbai, India. Email: [email protected]

ABSTRACT: Data security is one of the most crucial and a major challenge in the digital world. Security, privacy and integrity of data are demanded in every operation performed on internet. Whenever security of data is discussed, it is mostly in the context of secure transfer of data over the unreliable communication networks. But the security of the data in databases is also as important. In this paper we will be presenting some of the common security techniques for the data that can be implemented in fortifying and strengthening the databases.

Keywords: Security techniques, cryptography, hashing, steganography.

1. INTRODUCTION. In this ever growing cyber world where millions and trillions of bytes of data is transferred everyday over the internet, the security of this

... information stored varies and depends on different organizations and companies. Nevertheless, every type of information needs some security to preserve data. The level of security depends on the nature of information. For example, the military databases require top and high level security so that the information is not accessed by an outsider but the concerned authority because the leakage of critical information in this case could be dangerous and even life threatening. Whereas the level of security needed for the database of a public server may not be as intensive as the military database.

Database security is essential because they suffer from security threats that may prove harmful and disastrous if disclosed or accessed publicly. Below we will present some security threats that are suffered by the databases.

Implementing Database Security and Auditing

Implementing Database Security and Auditing Related Titles from Digital Press Oracle SQL Jumpstart with Examples, Gavin Powell ISBN: 1-55558-323-7, 2005 Oracle High Performance Tuning for 9i and 10g, Gavin Powell, ISBN: 1-55558-305-9, 2004 Oracle Real Applications Clusters, Murali Vallath, ISBN: 1-55558-288-5, 2004 Oracle 9iR2 Data Warehousing, Hobbs, et al ISBN: 1-55558-287-7, 2004 Oracle 10g Data Warehousing, Hobbs et al ISBN: 1-55558-322-9, 2005 For more information or to order these and other Digital Press titles, please visit our website at www.books.elsevier.com/digitalpress! At www.books.elsevier.com/digitalpress you can: •Join the Digital Press Email Service and have news about our books delivered right to your desktop •Read the latest news on titles •Sample chapters on featured titles for free •Question our expert authors and editors •Download free software to accompany select texts Implementing Database Security and Auditing A guide for DBAs, information security administrators and auditors Ron Ben Natan Amsterdam • Boston • Heidelberg • London • New York • Oxford Paris • San Diego• San Francisco • Singapore • Sydney • Tokyo Elsevier Digital Press 30 Corporate Drive, Suite 400, Burlington, MA 01803, USA Linacre House, Jordan Hill, Oxford OX2 8DP, UK Copyright © 2005, Elsevier Inc. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior written permission of the publisher. Permissions may be sought directly from Elsevier’s Science & Technology Rights Department in Oxford, UK: phone: (+44) 1865 843830, fax: (+44) 1865 853333, e-mail: [email protected]. -

Introduction to Database Security

© Jones & Bartlett Learning, LLC. NOT FOR SALE OR DISTRIBUTION. © Digital_Art/Shutterstock

CHAPTER 8: Introduction to Database Security

TABLE OF CONTENTS: 8.1 Issues in Database Security; 8.2 Fundamentals of Access Control; 8.3 Database Access Control; 8.4 Using Views for Access Control; 8.5 Security Logs and Audit Trails; 8.6 Encryption; 8.7 SQL Data Control Language; 8.8 Security in Oracle; 8.9 Statistical Database Security; 8.10 SQL Injection; 8.11 Database Security and the Internet; 8.12 Chapter Summary; Exercises

CHAPTER OBJECTIVES. In this chapter you will learn the following:

- The meaning of database security
- How security protects privacy and confidentiality
- Examples of accidental or deliberate threats to security
- Some database security measures
- The meaning of user authentication
- The meaning of authorization
- How access control can be represented
- How the view functions as a security device

McAfee Database Security Insights

SOLUTION BRIEF. McAfee Database Security Insights.

Managing the multitude of alerts, reports, and events and sometimes finding the proverbial needle in a haystack is challenging. Monitoring the activity on busy enterprise databases can easily create millions of events in a short period of time, and not all of them require action or need to be looked at. Cybersecurity professionals should not have to spend most of their time wading through events and alerts to identify those that require action. Instead, they should be able to focus on critical security and data protection tasks. McAfee® Database Security Insights eliminates the need to wade through millions of alerts and allows cybersecurity or data protection professionals to visualize all events and alerts, assign appropriate workflow users to take action, and have access to meaningful database risk gradings and highly configurable widgets that allow for customization.

Key Advantages

- Visualization of large amounts of database security events
- Elastic-based backend
- Google-like search abilities
- Customizable widgets
- Workflow management

Database Monitoring Challenges. Large amounts of similar or exactly the same type of events make it very difficult to accurately assess the importance and risk of specific events captured. Finding the unknown within a huge number of events is difficult and often flawed. Cybersecurity and data protection specialists invest more time in finding meaningful events

Visualizing Large Amounts of Data. Insights helps organizations visualize the vast number of database security events. Drilling down into individual databases allows for a detailed overview of the individual risk level, a scoring system for potential vulnerabilities found, and access distribution.

Database Administration: Concepts, Tools, Experiences, and Problems

DATABASE ADMINISTRATION: CONCEPTS, TOOLS, EXPERIENCES, AND PROBLEMS. NBS Special Publication 500-28 (call number QC100 .U57 no. 500-28, 1978). U.S. DEPARTMENT OF COMMERCE, National Bureau of Standards.

NATIONAL BUREAU OF STANDARDS. The National Bureau of Standards was established by an act of Congress March 3, 1901. The Bureau's overall goal is to strengthen and advance the Nation's science and technology and facilitate their effective application for public benefit. To this end, the Bureau conducts research and provides: (1) a basis for the Nation's physical measurement system, (2) scientific and technological services for industry and government, (3) a technical basis for equity in trade, and (4) technical services to promote public safety. The Bureau consists of the Institute for Basic Standards, the Institute for Materials Research, the Institute for Applied Technology, the Institute for Computer Sciences and Technology, the Office for Information Programs, and the Office of Experimental Technology Incentives Program.

THE INSTITUTE FOR BASIC STANDARDS provides the central basis within the United States of a complete and consistent system of physical measurement; coordinates that system with measurement systems of other nations; and furnishes essential services leading to accurate and uniform physical measurements throughout the Nation's scientific community, industry, and commerce. The Institute consists of the Office of Measurement Services, and the following center and divisions: Applied Mathematics, Electricity, Mechanics, Heat, Optical Physics, Center for Radiation Research, Laboratory Astrophysics, Cryogenics, Electromagnetics, Time and Frequency.

Database Security & Access Control Models: a Brief Overview

International Journal of Engineering Research & Technology (IJERT), ISSN: 2278-0181, Vol. 2 Issue 5, May - 2013. Database Security & Access Control Models: A Brief Overview. Kriti, Indu Kashyap, CSE Dept., Manav Rachna International University, Faridabad, India.

Abstract. Database security is a growing concern evidenced by increase in number of reported incidents of loss of or unauthorized exposure of sensitive data. Security models are the basic theoretical tool to start with when developing a security system. These models enforce security policies which are governing rules adopted by any organization. Access control models are security models whose purpose is to limit the activities of legitimate users. The main types of access control include discretionary, mandatory and role based. All the three techniques have their drawbacks and benefits. The selection of a proper access control model depends on the requirement and the type of attacks to which the system is vulnerable. The aim of this paper is to give brief information on database security threats and to discuss the three models of access control: DAC, MAC & RBAC.

1.1 Threats to Database Security. A threat can be identified with a hostile agent who either accidentally or intentionally gains an unauthorized access to the protected database resource [3] [4]. Ten top types of threats are recognized in organizations; they not only increase the risk of database exposure but can also cause disastrous consequences for the entire organization. Some of these threats are described below [19]:

Threat 1 - Excessive Privilege Abuse. When users (or applications) are granted database access privileges that exceed the requirements of their job function, these privileges may be abused for malicious purpose.

Threat 2 - Legitimate Privilege Abuse
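The excessive-privilege threat described in this excerpt, and the role-based model it mentions, can be illustrated with a small sketch. This is only an illustration under stated assumptions: the role names, table names, and the helpers `privileges_of`, `is_allowed`, and `excessive_privileges` are hypothetical and do not come from the paper or from any particular DBMS.

```python
# Hypothetical role-to-privilege mapping; each privilege is a (table, action) pair.
ROLE_PRIVILEGES = {
    "clerk": {("orders", "SELECT"), ("orders", "INSERT")},
    "auditor": {("orders", "SELECT"), ("audit_log", "SELECT")},
}


def privileges_of(roles):
    """Union of the privileges carried by the given roles (role-based access control)."""
    granted = set()
    for role in roles:
        granted |= ROLE_PRIVILEGES.get(role, set())
    return granted


def is_allowed(roles, table, action):
    """A request is allowed only if one of the user's roles carries that privilege."""
    return (table, action) in privileges_of(roles)


def excessive_privileges(granted, required):
    """Privileges a user holds beyond what the job function requires."""
    return granted - required


# What a clerk's job function needs, versus what was granted directly (made-up data).
clerk_needs = {("orders", "SELECT"), ("orders", "INSERT")}
direct_grants = clerk_needs | {("payroll", "SELECT"), ("orders", "DELETE")}

excess = excessive_privileges(direct_grants, clerk_needs)
```

Here `excess` holds the two grants that go beyond the clerk's job function. Reviewing that difference periodically, and granting through roles rather than per user, is one way to keep privileges close to what each job function actually needs.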